The GPU you posted is more than an order of magnitude underpowered for rendering a standard PS4 game at 4k 30fps. It essentially just supports output at that resolution and frame rate, as when playing a video.

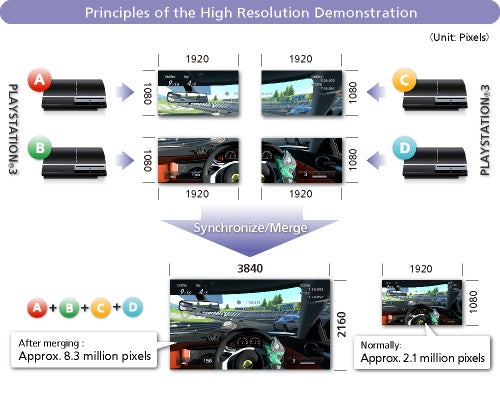

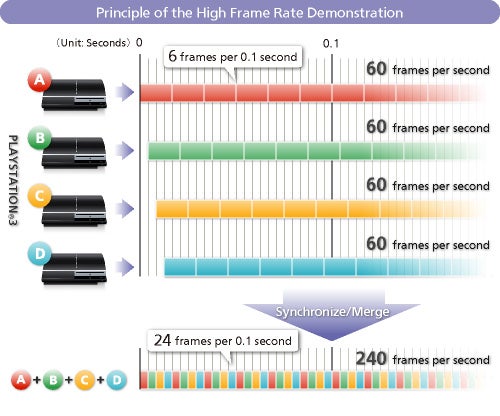

Each 1080p pixel corresponds to a 2x2 block of four pixels at 4k. Those other 3 pixels are each just as demanding as the first. That is why uprendering to 4k is no less taxing than natively rendering at 4k. If they were cheaper to create, for example by applying an algorithm to the original image, that would just be upscaling.
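To make the difference concrete, here is a toy sketch. It assumes a made-up `shade()` function stands in for the per-pixel work a GPU does; `ToyRenderer`, `shade` and `upscale_2x` are hypothetical names, and the resolutions are 1/10 of 1080p and 4k so it runs quickly. Upscaling copies each rendered pixel into a 2x2 block, so the shading cost stays at the low resolution. Rendering at the higher resolution (what uprendering does) calls `shade()` once for every output pixel, which is 4x the work.

```python
import math


class ToyRenderer:
    """Hypothetical renderer that counts how many pixels it actually shades."""

    def __init__(self):
        self.shade_calls = 0

    def shade(self, u: float, v: float) -> float:
        # Stand-in for a pixel shader: evaluate the "scene" at normalized coords (u, v).
        self.shade_calls += 1
        return 0.5 + 0.5 * math.sin(20 * u) * math.cos(20 * v)

    def render(self, width: int, height: int) -> list[list[float]]:
        # Every pixel is shaded independently at its own center sample position.
        return [
            [self.shade((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)
        ]


def upscale_2x(image: list[list[float]]) -> list[list[float]]:
    # Nearest-neighbour upscale: each source pixel is copied into a 2x2 block.
    # No new shading happens; the 3 extra pixels carry no new information.
    out = []
    for row in image:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


if __name__ == "__main__":
    low = ToyRenderer()
    upscaled = upscale_2x(low.render(192, 108))      # "1080p" shaded, then upscaled
    high = ToyRenderer()
    uprendered = high.render(384, 216)               # every "4k" pixel shaded
    print(len(upscaled[0]), len(upscaled), low.shade_calls)       # 384 216 20736
    print(len(uprendered[0]), len(uprendered), high.shade_calls)  # 384 216 82944
```

Both outputs have the same dimensions, but only the rendered one paid for every pixel.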

You need to look into how rasterization is done on a GPU to get a sense of just how much processing is actually being done. Try reading through this:

http://www.scratchapixel.com/lesson...plementation/overview-rasterization-algorithm
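As a rough illustration of the per-pixel work involved, here is a minimal sketch of the edge-function coverage test, one common way to rasterize a triangle. The names `edge` and `rasterize` are hypothetical and this is not code from the linked lesson. Every pixel center in the triangle's bounding box is tested against three edge functions, and each covered pixel gets barycentric weights that a real GPU would then feed to a pixel shader. Doubling the resolution in each direction roughly quadruples the covered pixels.

```python
import math


def edge(ax, ay, bx, by, px, py):
    # Twice the signed area of triangle (a, b, p); the sign says which side of a->b p lies on.
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tri, width, height):
    """Return (covered pixels with barycentrics, number of coverage tests)."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return [], 0  # degenerate triangle covers nothing
    # Only test pixels inside the triangle's bounding box, clamped to the screen.
    min_x = max(math.floor(min(x0, x1, x2)), 0)
    max_x = min(math.ceil(max(x0, x1, x2)), width)
    min_y = max(math.floor(min(y0, y1, y2)), 0)
    max_y = min(math.ceil(max(y0, y1, y2)), height)
    covered, tests = [], 0
    for y in range(min_y, max_y):
        for x in range(min_x, max_x):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            tests += 1
            # Inside when all three edge functions share the sign of the triangle's area.
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) if area > 0 else (w0 <= 0 and w1 <= 0 and w2 <= 0)
            if inside:
                # Barycentric weights, used to interpolate vertex attributes per pixel.
                covered.append((x, y, (w0 / area, w1 / area, w2 / area)))
    return covered, tests


if __name__ == "__main__":
    small, _ = rasterize(((0, 0), (8, 0), (0, 8)), 8, 8)
    big, _ = rasterize(((0, 0), (16, 0), (0, 16)), 16, 16)  # same triangle at 2x resolution
    print(len(small), len(big))  # 36 136
```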

Upscaling is already built into most 4k TVs. It would be pointless to have the hardware do what the TV already can.

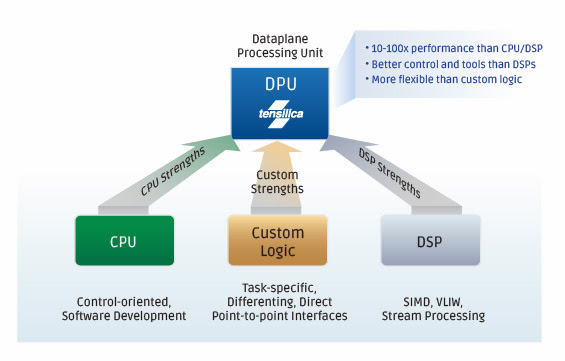

The task in question, "up-rendering", is still rendering 8.3 million unique pixels, the same as a native 4k frame. If there were something 5 times more efficient than GPUs at that task, we wouldn't be using GPUs.
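The 8.3 million figure is simple arithmetic, sketched below assuming "4k" means the consumer UHD resolution of 3840x2160. The helper names are hypothetical. It counts one shaded sample per pixel, so it ignores overdraw, multisampling and post-processing passes and is only a floor on the real work.

```python
def pixels_per_frame(width: int, height: int) -> int:
    # One unique pixel per screen position.
    return width * height


def pixels_per_second(width: int, height: int, fps: int) -> int:
    # Lower bound: each pixel shaded exactly once per frame.
    return pixels_per_frame(width, height) * fps


if __name__ == "__main__":
    for name, (w, h) in {"1080p": (1920, 1080), "4k UHD": (3840, 2160)}.items():
        print(f"{name}: {pixels_per_frame(w, h):,} pixels/frame, "
              f"{pixels_per_second(w, h, 30):,} pixels/s at 30 fps")
    # 1080p: 2,073,600 pixels/frame, 62,208,000 pixels/s at 30 fps
    # 4k UHD: 8,294,400 pixels/frame, 248,832,000 pixels/s at 30 fps
```

A 4k frame is exactly four 1080p frames' worth of pixels, whether it is rendered natively or "up-rendered".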

That is a 2006 paper on global illumination, which is a type of lighting model. It has nothing to do with final output resolution. Edit: I see where you were drawing parallels, but rendering every pixel presents a different challenge from computing a light's per-area impact on the G-buffer.