I don't use frame generation, and when it's available I also use Reflex.

Latency in PC games is usually lower than on consoles, and that was true even before PSSR and DLSS existed. PSSR is just another type of reconstruction; I don't see how it can reduce latency compared to, for example, FSR2 when its cost is similar.

Yep, PSSR is similar to other reconstruction technology in this respect. It usually produces better results than FSR2/3 (though not in every respect), but it is also a bit heavier.
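To put the cost point in rough numbers: with reconstruction, each output frame pays for the render at the internal resolution plus the upscaler pass, so two upscalers with similar pass costs end up within a fraction of a millisecond of each other in frame time. A minimal sketch; the 12 ms render time and the 2.0/1.5 ms pass costs are made-up assumptions, not measurements of PSSR or FSR2.

```python
# Assumed numbers only; these are not measurements of PSSR, FSR2 or DLSS.
INTERNAL_RENDER_MS = 12.0  # hypothetical cost of rendering at the internal resolution
UPSCALER_PASS_MS = {
    "heavier_ml_pass": 2.0,        # hypothetical ML reconstruction pass
    "lighter_analytic_pass": 1.5,  # hypothetical non-ML reconstruction pass
}


def frame_time_ms(render_ms: float, upscale_ms: float) -> float:
    """GPU time for one output frame: internal-resolution render, then the upscale pass."""
    return render_ms + upscale_ms


def fps(frame_ms: float) -> float:
    """Convert a frame time in milliseconds to frames per second."""
    return 1000.0 / frame_ms


if __name__ == "__main__":
    for name, cost in UPSCALER_PASS_MS.items():
        t = frame_time_ms(INTERNAL_RENDER_MS, cost)
        print(f"{name}: {t:.1f} ms/frame ({fps(t):.1f} fps)")
```

In this model the latency gap between the two is just the difference in pass cost (0.5 ms here), which is why similar-cost upscalers shouldn't differ much in latency.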

I really wonder whether PSSR2/FSR4 will have the same cost on the PS5 Pro as the current PSSR.

You might not use frame-gen, but IMO Nvidia's opaque DLSS implementation, and its VRAM and cache bandwidth requirements, don't add up on mid-tier Nvidia hardware at high frame rates. To me that means DLSS3 and DLSS4 probably use frame-gen by default on RTX 4000-series and newer hardware, even when it is toggled off.

The validation of the Pro's PSSR inference cost, together with the recent Amethyst presentation by AMD and Mark discussing bandwidth pressures (even compared to the PS5 Pro's), should tell you that Nvidia is lying by omission about what the processing latency cost of adding DLSS upscaling to a brand-new frame actually is when they can't hide that cost behind interpolation from a prior frame.

PC gamers have been using a form of frame-gen for decades: the driver's pre-rendered frames setting let the CPU queue up X frames ahead of time.
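A rough way to see what a pre-render queue costs: if the CPU is allowed to run X frames ahead, the newest input has to wait behind those queued frames. A minimal sketch under a simplified model (my assumption, ignoring display and USB delay) where each queued frame adds one full frame time; the hypothetical `prerendered_frames` parameter plays the role of X.

```python
def queued_latency_ms(native_fps: float, prerendered_frames: int) -> float:
    """Simplified model (assumption): the frame carrying the newest input takes
    one frame time to render and also waits one frame time per queued frame."""
    if native_fps <= 0 or prerendered_frames < 0:
        raise ValueError("fps must be positive and queue depth non-negative")
    frame_ms = 1000.0 / native_fps
    return frame_ms * (1 + prerendered_frames)


if __name__ == "__main__":
    for depth in (0, 1, 3):
        print(f"60 fps, {depth} queued: {queued_latency_ms(60, depth):.1f} ms")
```

At 60 fps this gives roughly 16.7, 33.3 and 66.7 ms for queue depths 0, 1 and 3.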

As for Reflex, like frame-gen it only works while everything is predictable, and it gives the illusion of smoother control. You still need to see a real new frame first, and a valid (non-predictive) game input can't be presented before the game logic allows for it. That is limited by the native frame rate, double buffering, and the tick rate at which the CPU reads user input while staying in lock-step with the GPU, which acts as a server rendering under the CPU client's control.
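Those limits can be written as a rough upper bound on input latency. This is a simplified model (my assumption, not anything from Nvidia or Sony) that ignores display scan-out and OS/USB delay: up to one input-poll interval before the CPU samples the input, then one native frame time to render the frame that uses it, plus one more while it waits behind the frame already in the double-buffered swap chain. The hypothetical `tick_hz` parameter stands in for the CPU's input tick rate.

```python
def worst_case_input_latency_ms(native_fps: float, tick_hz: float, buffers: int = 2) -> float:
    """Rough upper bound under a simplified model (assumption):
    one input-poll interval + `buffers` native frame times.
    buffers=2 models double buffering: render the new frame, then wait
    behind the frame that is already queued for display."""
    if native_fps <= 0 or tick_hz <= 0 or buffers < 1:
        raise ValueError("fps and tick rate must be positive, buffers >= 1")
    poll_ms = 1000.0 / tick_hz
    frame_ms = 1000.0 / native_fps
    return poll_ms + buffers * frame_ms


if __name__ == "__main__":
    print(f"30 fps, 60 Hz input:   {worst_case_input_latency_ms(30, 60):.1f} ms")
    print(f"120 fps, 120 Hz input: {worst_case_input_latency_ms(120, 120):.1f} ms")
```

In this model the frame-time terms only shrink when the native frame rate goes up, which is the sense in which latency is "limited by the native frame-rate".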

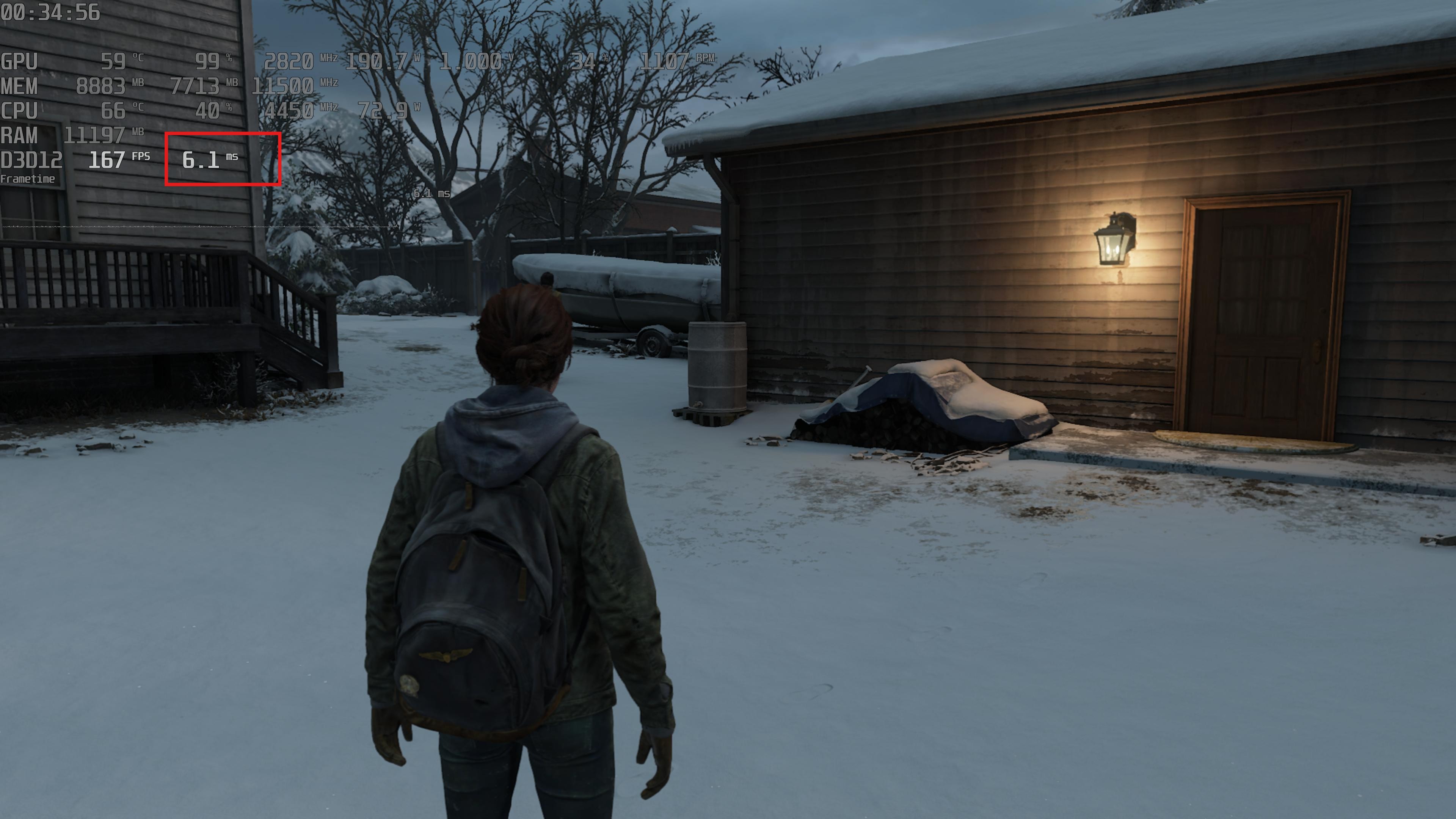

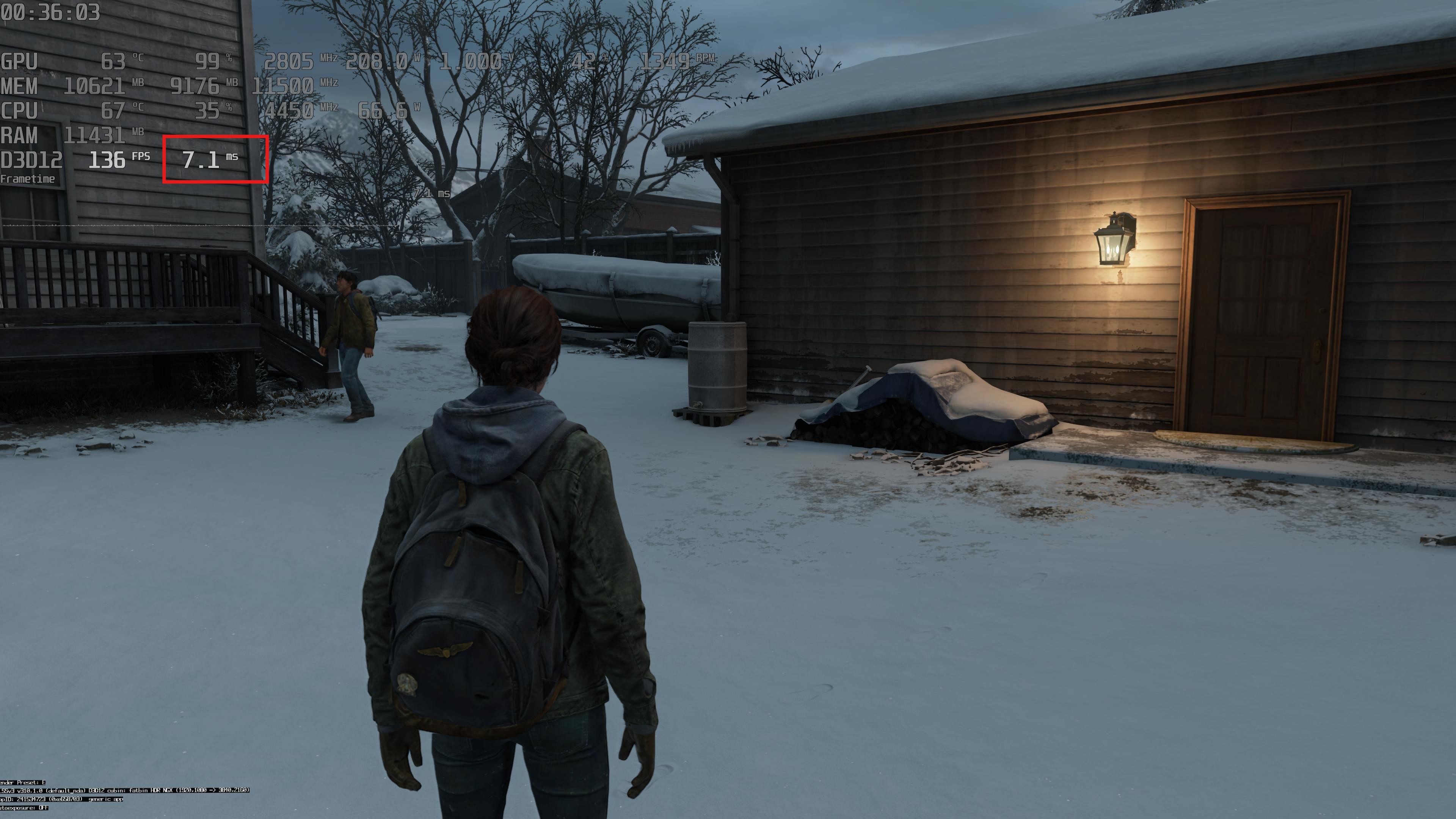

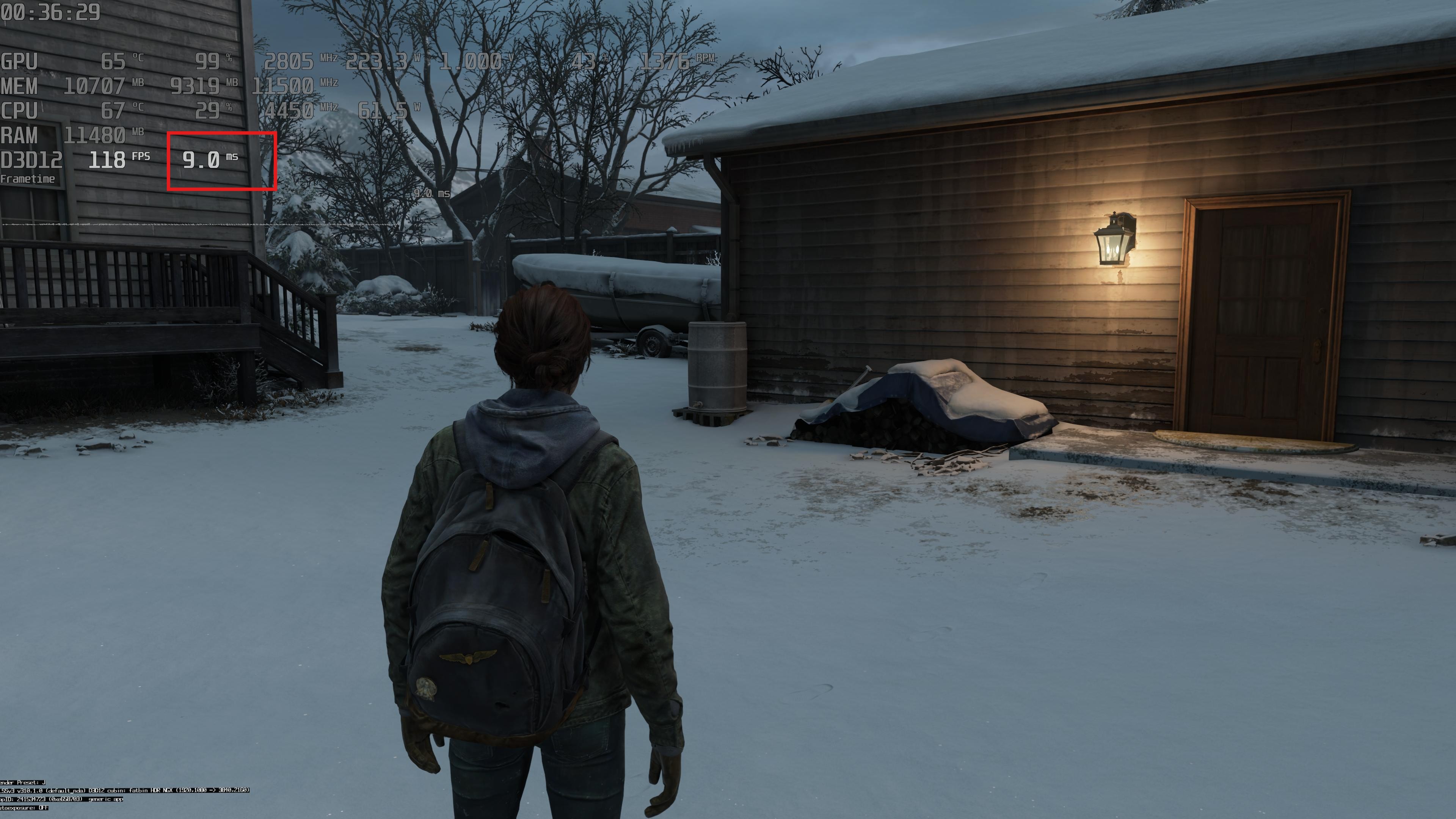

It works 99.99% of the time because most games, like Last of Us 2 and HFW, are designed around 30 fps as the lowest common denominator, where frame-to-frame and input-to-input timing is very predictable. But in a natively high-fps game, that 0.01% of failed predictions is a variable input-latency spike. That's why I just ignore Reflex as a technology, much like I didn't play GT5 at 1080p because the 0.01% of screen tearing was a massive input-latency spike that caused me to crash.
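To make the 0.01% figure concrete: if each frame independently has a 0.01% chance of a failed prediction (a simplifying assumption; real misses are likely clustered around sudden inputs), the average wall-clock time between misses shrinks as the frame rate rises.

```python
def seconds_between_misses(fps: float, miss_rate: float) -> float:
    """Mean seconds between failed predictions, assuming each frame fails
    independently with probability `miss_rate` (assumption)."""
    if fps <= 0 or not 0 < miss_rate <= 1:
        raise ValueError("fps must be positive and 0 < miss_rate <= 1")
    return 1.0 / (fps * miss_rate)


MISS_RATE = 0.0001  # the 0.01% from the post

if __name__ == "__main__":
    for rate in (30, 60, 120, 240):
        print(f"{rate:>3} fps: one miss every ~{seconds_between_misses(rate, MISS_RATE):.0f} s")
```

Under that assumption the same 0.01% turns into a spike roughly every 83 seconds at 120 fps, versus roughly every 5.5 minutes at 30 fps.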

And IIRC I said nothing about PSSR reducing processing latency compared to non-ML techniques like FSR2/3. AFAIK it doesn't, but that was never part of the discussion, which was about the importance of these ML upscalers for next-gen, how they compare against FSR4 on an Xbox with 30% more power than a PS6, and how the PS6's use of (or strategy for) Amethyst comes into play by comparison.

I get that PC gamers love to point at higher frame rates and nicer settings, but IMO there is a major lack of scrutiny of the real worst-case frame rates and real worst-case latency of DLSS3, DLSS4 and FSR4 on PC compared to PSSR. That matters even more now that new info on Amethyst emerged this week suggesting that the PS5 Pro's register-memory solution is being extended so that CUs can globally reference the register memory in any CU.
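Looking at worst-case frame rates means looking past the average. A minimal sketch of how average fps and a "1% low" come out of a frame-time log. Note that "1% low" has more than one definition; this one (an assumption) averages the slowest 1% of frames. The frame-time trace is invented for illustration, not captured from any game.

```python
import statistics


def summarize(frame_times_ms: list[float]) -> dict[str, float]:
    """Average fps, 1% low fps (mean of the slowest 1% of frames) and worst frame time."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest_first) // 100)  # slowest 1%, at least one frame
    return {
        "avg_fps": 1000.0 / statistics.fmean(frame_times_ms),
        "one_pct_low_fps": 1000.0 / statistics.fmean(slowest_first[:n]),
        "worst_frame_ms": slowest_first[0],
    }


if __name__ == "__main__":
    # Invented trace: 99 frames at 8 ms and a single 40 ms hitch.
    trace = [8.0] * 99 + [40.0]
    print(summarize(trace))
```

On that made-up trace the average is about 120 fps while the 1% low is 25 fps, which is the gap an average-only comparison hides.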

This would let them work on tensor tiles at a much larger scale than those shown for Ratchet & Clank in the PS5 Pro seminar video at ~23:54, which, because they are small, have high processing redundancy at their borders. That suggests AMD believes an enhanced, larger-scale version of the Pro's hardware solution is superior to evolving their existing RX 9070 XT solution for FSR4.
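The border-redundancy point is simple geometry: if every tile needs a border of neighboring pixels (a halo) to compute its edges, those halo pixels are processed more than once, and the wasted fraction falls as tiles grow. A minimal sketch; the 4-pixel halo and the tile sizes are hypothetical, not figures from the PS5 Pro seminar.

```python
def halo_overhead(tile: int, halo: int) -> float:
    """Extra work relative to useful output when each tile x tile block
    also processes a `halo`-pixel border on every side (hypothetical sizes)."""
    if tile <= 0 or halo < 0:
        raise ValueError("tile must be positive and halo non-negative")
    padded = (tile + 2 * halo) ** 2
    useful = tile * tile
    return (padded - useful) / useful


if __name__ == "__main__":
    for tile in (16, 64, 256):
        print(f"{tile:>3}px tile, 4px halo: {halo_overhead(tile, 4):.1%} extra work")
```

With these made-up sizes, a 16px tile does 125% extra work, a 64px tile about 26.6%, and a 256px tile about 6.3%.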

That supports my view that PSSR, at the level of hardware use, is better in ways that are being obscured by the backdrop of an RTX 5090 running DLSS4 and MFG.