Pandora's box has been opened... Now one ivy plant becomes four.

1. Metallica and Britney Spears thought they were untouchable... Every album took them 10 months to release... AI does it in 1 minute, with their voices and your style.

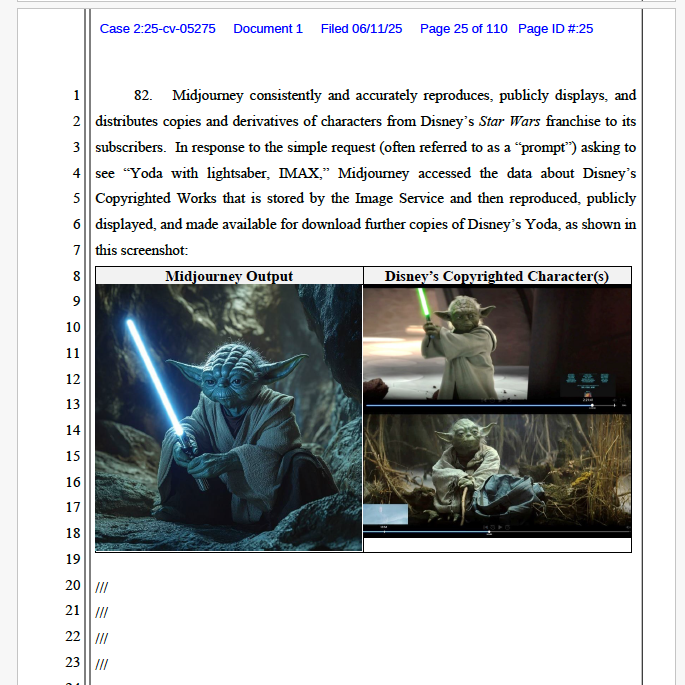

2. I can make a TMNT and Hellboy crossover exactly how I want.

3. Voice actors who used to be picky... Now AI is doing the dubbing.

4. An artist who asked me for money for fan art... Gone, because now we do it ourselves, exactly how we want.

5. Figma figures we wanted but they wouldn't make... Even my neighbor can make a figure with 3D printing and AI for $7 or less.

6. We no longer need translators, because AI already does the translating.

7. We can discover people's mental state... We can predict their actions.

Band members, singers, and film actors used to think they were so important... Now they have to accept that people will come to us and we'll get to know them. They no longer have everything handed to them on a silver platter like before... Now it all depends on concerts.

We can now do crossovers of Goku, Peppa Pig, Messi, the Queen of England, Muppet Babies and Fast & Furious, if we want.

Now we can ask our teachers the most difficult, even impossible, questions. We now hold the power of human knowledge in our hands.

We now have the ultimate power.

AI is the best invention of 2025.