lastflowers

Banned

Can the original Kinect distinguish between an open hand, and a closed fist?

This has to do with algorithmically analyzing the data produced by the Kinect. The hardware within the Kinect has remained the same.

LukasTaves said: Is it really that common?

Using LZO has been standard practice for most/all game assets on disk since PS1 days, so yes, it's an extremely common usage pattern, and it's also the main reason why hardwiring the algorithm in new consoles is cost-efficient/sensible.

I have read some of the patent, and everything I have come across so far has been DMA + compression. There was nothing other than regular DMA + compression mentioned by VGleaks, so why expect something else?

DXTCn is far, far better for textures than JPEG; a shame that it won't decode into it.

spwolf said: 200 MBs for zlib decoder is extremely fast. It is so fast that it is not needed to be that fast

It's worth looking beyond external device I/O though. E.g. fast JPEG on PS2 had the benefit of being usable as an on-demand decompressor from RAM->eDRAM, and with the hw extensions for virtualizing memory access, decompression can get even more mileage.

GPUs do realtime decompression of DXT, despite the "rumors" and "secret sauce" claims to the contrary. This is why DXT is used for texture data.

JPEG creates smaller files for texture storage, but then it requires decompression and recompression into DXT before sending it to the GPU. That is not just CPU-intensive; it also introduces another lossy format into the mix, which lowers the overall quality of textures.

What developers currently do is zip their textures into one file and then stream from it in the game when needed. Zip is lossless, so no data is lost. PNG uses the same compression algorithm as Zip (Deflate). With intelligent compression, you can get great results on lossless compression of DXT textures.

200 MB/s for a zlib decoder is extremely fast. It is so fast that it does not need to be that fast unless you have an SSD, because your BD and HDD can't feed enough data to keep it fully used.

So that's very good... It allows for many things that will make our experience on PS4/720 better, from faster loading to "faster" installation of games, both things that the PS3 has struggled with, for instance. In fact, I would say that right now, that 200 MB/s will be more useful than the 8 GB everyone is focusing on.
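For anyone following along, the "zip the textures into one file, lose nothing" point above can be sketched with Python's standard `zlib` module. This is only an illustration: the function names and the two stand-in byte blobs are made up here, and real DXT data would compress less dramatically than this repetitive sample.

```python
import zlib

def pack_textures(blobs, level=9):
    """Concatenate texture blobs and deflate them; returns (compressed, sizes)."""
    sizes = [len(b) for b in blobs]
    return zlib.compress(b"".join(blobs), level), sizes

def unpack_textures(compressed, sizes):
    """Inflate the archive and slice it back into the original blobs."""
    raw = zlib.decompress(compressed)
    out, offset = [], 0
    for n in sizes:
        out.append(raw[offset:offset + n])
        offset += n
    return out

# Hypothetical stand-ins for already DXT-compressed texture data
textures = [bytes([i % 7 for i in range(4096)]), bytes(2048)]
packed, sizes = pack_textures(textures)
restored = unpack_textures(packed, sizes)
```

Because Deflate is lossless, `restored` is byte-for-byte identical to `textures`, so the DXT blocks reach the GPU unchanged, unlike a JPEG round trip.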

The leaked performance figures are fine - the usual usage pattern is to speed up external media (HDD, optical or network), and 200 MB/s will keep up with all but the fastest HDDs (the rumors also indicate a slightly more demanding algorithm than LZO, so that factors in as well).
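A rough back-of-the-envelope version of that argument, as a sketch only: the 200 MB/s figure is the one from the rumor, while the source read rates and the compression ratio below are assumed placeholder numbers, not measurements or specs. The model also assumes the decoder figure caps the output side, which the leak does not confirm.

```python
DECODER_MB_S = 200.0  # decoder figure from the rumor discussed in the thread

# Assumed, illustrative read rates in MB/s (not taken from any spec sheet)
ASSUMED_SOURCES = {"optical": 27.0, "hdd": 100.0, "ssd": 500.0}

def effective_rate(source_mb_s, ratio, decoder_mb_s=DECODER_MB_S):
    """Uncompressed MB/s delivered when the media holds data compressed by `ratio`.

    The decoder rate is treated as a cap on decompressed output (an assumption).
    """
    return min(source_mb_s * ratio, decoder_mb_s)

def load_seconds(size_mb, source_mb_s, ratio):
    """Time to deliver `size_mb` of uncompressed data from the given source."""
    return size_mb / effective_rate(source_mb_s, ratio)

for name, rate in ASSUMED_SOURCES.items():
    print(name, effective_rate(rate, ratio=1.5), "MB/s effective")
```

Under these assumptions the decoder only becomes the bottleneck for the SSD-class source, which is the same conclusion the posts above reach.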

I'll agree though that decompression is not a big deal, but rumors suggest the unit can also do compression, which makes it much more interesting IMO.

Is the zlib decompression hardware in the PS4 the same as the hardware that's in the Xbox 3?

Depending on which algorithm it implements it could even be better, but chances are it's the same. Durango implements LZ77.
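Since LZ77 came up: zlib's Deflate is itself LZ77 matching followed by Huffman coding, which is why the two units could plausibly be equivalent. Below is a toy LZ77 in Python to show the idea of back-references into a sliding window. It is a teaching sketch with made-up parameters (`window`, `max_len`) and a brute-force search, not what either console's hardware does.

```python
def lz77_compress(data: bytes, window: int = 255, max_len: int = 15):
    """Return (offset, length, next_byte) tokens.

    next_byte is None only when the input ends exactly on a match.
    """
    tokens, i = [], 0
    while i < len(data):
        best_off, best_len = 0, 0
        # Brute-force search of the sliding window for the longest match
        for j in range(max(0, i - window), i):
            length = 0
            while (length < max_len and i + length < len(data)
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        i += best_len
        nxt = data[i] if i < len(data) else None
        tokens.append((best_off, best_len, nxt))
        i += 1
    return tokens

def lz77_decompress(tokens) -> bytes:
    out = bytearray()
    for off, length, nxt in tokens:
        for _ in range(length):
            out.append(out[-off])  # byte by byte, so overlapping matches work
        if nxt is not None:
            out.append(nxt)
    return bytes(out)

sample = b"abracadabra abracadabra abracadabra"
tokens = lz77_compress(sample)
```

Decompression is just copying from earlier output, which is part of why the decode side is cheap to put in fixed-function hardware.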

"where do guys get this info ;p"

Manufacturers (like Samsung) have announced mass production of 4Gb-density GDDR5 chips.

So there aren't any specs on it besides VGleaks saying it's there?

Yeah, but DXT changes that... since the GPU takes DXT, you have to recompress the data in memory, which sucks. GPUs don't do realtime DXT compression, just decompression.
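To make the "decompression is cheap, compression is not" point concrete, here is a sketch of decoding a single DXT1 (BC1) block in Python. Each 8-byte block holds two RGB565 endpoint colors plus sixteen 2-bit palette indices, so decoding is a fixed lookup, while encoding means searching for good endpoints. The integer rounding used here is one simple choice; actual GPUs may round slightly differently, and alpha is ignored.

```python
import struct

def rgb565_to_rgb888(c):
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    # Expand 5/6-bit channels to 8 bits by bit replication
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def decode_dxt1_block(block: bytes):
    """Decode one 8-byte DXT1 block into 16 RGB tuples (row-major 4x4)."""
    c0, c1, bits = struct.unpack("<HHI", block)
    p0, p1 = rgb565_to_rgb888(c0), rgb565_to_rgb888(c1)
    if c0 > c1:
        # Four-color mode: two interpolated colors between the endpoints
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-color mode: midpoint plus transparent black (alpha ignored here)
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    palette = (p0, p1, p2, p3)
    return [palette[(bits >> (2 * i)) & 0x3] for i in range(16)]

# Red and blue endpoints; the first four pixels use palette entries 0, 1, 2, 3
block = struct.pack("<HHI", 0xF800, 0x001F, 0xE4)
pixels = decode_dxt1_block(block)
```

Sixteen 24-bit pixels (48 bytes) fit in 8 bytes, a fixed 6:1 ratio, and the fixed block size is what lets the GPU fetch and decode any texel directly.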

I didn't run any benchmarks, but for instance crunch is a much better solution than using JPEG to store textures on disk. And their solution for textures might be very similar to crunch in nature, where a DXT-compressed texture is smartly compressed with a lossless codec that is adjusted to the nature of the DXT format/data.

spwolf said: Compression doesnt matter, and 200 MBs is certainly not for compression.

The amount of data writes (or sends) in modern games is increasing at a rapid pace - it's becoming more important to compress those, and a dedicated unit can be a major win there. Of course I don't expect it to run at 200 MB/s - but then it doesn't need to.

"yeah but DXT changes that... since GPU takes DXT, you have to recompress the data in memory which sucks."

Only if you're doing it on highly persistent data.

"I didnt run any benchmarks, but for instance crunch is much better solution than using jpeg to store textures on disk."

Very true - but that's a different usage pattern, where your data remains resident in memory for a long time.

i have been trying to follow it, looked for past 3 pages... so jpeg... 373 MBs?

Modern GPUs don't have texture compression support?

Please bear with me, I am trying to learn.

Textures are sometimes stored as JPEG in games to keep the file size small. To decode them, you normally assign that work to your CPU or to a compute-capable GPU. After that, the result is fed to the GPU, which will then convert it to a texture format it supports. Reading the Durango summit papers, it seems the GPU supports several standard and some proprietary texture formats. What this unit does is save the CPU or GPU resources (read: ALU/flops) you would otherwise spend on this. So, like the other fixed-function units in both systems (audio unit, video decoder/encoder, etc.), it is there to save CPU/GPU resources that are better spent doing other stuff.

Why in god's name would you want to move the JPEG to eSRAM, decompress it into a raw format, and then re-encode it again? That's an incredible waste of CPU/GPU performance. Why not have it compressed in DXTCn in the first place? Also, the Durango GPU supports everything the GCN cards do, that's it, no special formats.

VGleaks specifically says x86, so we're pretty sure it isn't PowerPC.

I see

You know, we are just hunting for rumors and speculation because right now it's totally dead.

Who knows, maybe it's AMD, maybe it's IBM. Nothing is 100% until MS reveals the console, right?

I just hope for us gamers that whatever they release will keep us happy 10 years down the road. Not another Wii U BS.

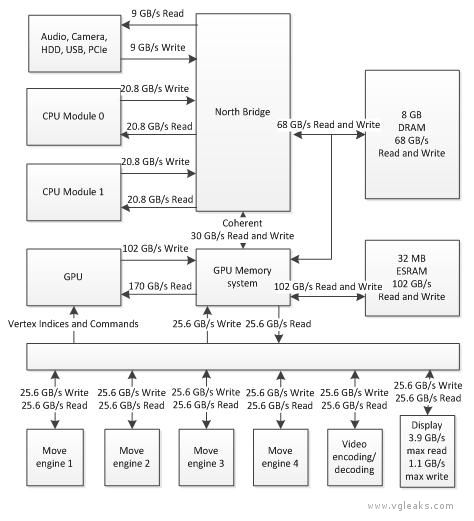

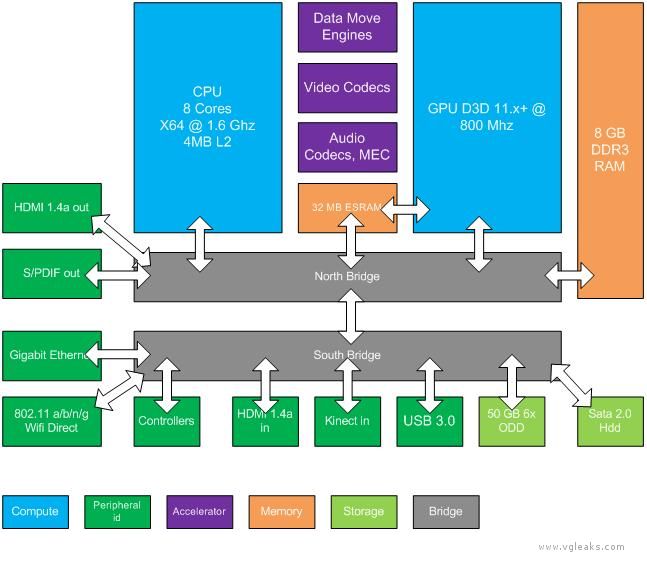

If it's an APU or SoC, why is there a North Bridge showing in the VGleaks documents?

Shouldn't the CPU & GPU already be connected?

Maybe they are going to go down the shorter-lifespan route and copy the PC/Apple model of releasing a slightly updated version every year or two. Using generic hardware could make this more feasible for developers. This is easily managed with PC hardware, so why not with a more generic console?

I see the Kinect defense force has invaded the CPU thread, great.

Ah, gaming forums... where if you don't hate something 100% then you are part of a "defense force".

Very mature.

Give it a rest, the thread has moved on

I can't think how this would work with 3+ year dev cycles. Which model would devs target?

Which model do PC devs target?

I'm not saying this will happen, just saying it's entirely possible. Because these new consoles are built on direct PC architecture, it would be easier than ever to just release updated models every few years. Devs could just program two, or three "settings" of the game, to run on each iteration of the hardware.

It would be like PC development of today, but even easier because you're still not dealing with dozens of different configurations, you're dealing with a few.

Because it will be as confusing as shit to consumers. The N64 memory expansion pack was bad enough, but an entire new console? Every year? No.

This is ignoring the fact that it takes nearly a year just to design a console.

Apple manages it with the iPad and iPhone, and consumers deal with that easily. Direct download and XBLA would be straightforward, with a "not compatible with your device" notice as with iTunes or Google Play.

Plenty of other electrical devices go through this design-and-release cycle over short periods of time. I don't see this being an issue if the base design is generic enough, again like PCs/Android/Apple.

This wouldn't work because of the different markets and the cost differences between apps and games. A 5-year refresh rate would work just fine, but annual releases would kill the console makers, and games would never truly advance because the devs/pubs would always be focused on the lowest common denominator (i.e. the first gen), which is what most people would have.

It's unnecessary, since the thread has come back on topic. Just because you feel you should reply to something doesn't mean it's wise to actually do so.

Well it is a CPU thread and someone mentions Kinect and suddenly the usual suspects rush in and try to convince everyone it is a good thing. So ya, it is a defense force. Don't want to be lumped in, don't post so predictably about the same topics so much.

Saying it won't work makes little sense, though: this has worked for PC gaming for decades, with multiple platforms and multiple operating system versions. Apple has proven this works, with the iPad having 4 generations since 2010.

I agree a yearly release wouldn't be great, but that doesn't mean something like it won't happen; maybe 2 or 3 years is more feasible.

Different markets. Different expectations from consumers in terms of where their money is going.

Consoles have been around for a pretty long time now and people are used to spending $300-400 every 5 years or so on a console for video game entertainment.

Doing something every 2 years would put the Xbox brand in a similar position to Sega during the mid '90s -- many consoles, customer confusion, devs not knowing what to support, etc. Just wouldn't be that smart to do imo.

Sorry for replying to a message directed towards me.

I thought that was the whole idea of forums.

Not trying to convince anyone of anything. Just made a simple reply to what was being discussed.

Don't see why it's so hard to have an actual discussion instead of lumping people in due to different views.

But whatever -- never meant to derail the spec-speculation thread.

Either way, in 5-6 years the lowest common denominator will be x720/PS4.

But with more frequent releases we would get more capable options more often, instead of one big jump every 5 years.

Let's say that after the x720, MS releases a new updated console every 2 years: same architecture, just better specs (faster GPU/CPU, more RAM). They support the last 3 versions, and developers must do so too.

All new games are compatible with the last 3 versions, and because of DirectX maybe old games can be made to run better on new consoles (more AA, SSAO, etc.) without updates. Just like PC developers make low/mid/high settings, they could do the same for consoles. It would be even easier because they have only 3 very similar targets.

I would prefer that business model over the current one. Waiting 6 years for a new console is bullshit. I buy a new iPad every 2 years and I want the same with consoles.

I hope MS tries something like this. It is time someone changed the console business.

I think that's the thing, though: this box isn't just targeted at video game development, hence the reason MS could go down this route. Sure, the nextbox could be underspecced compared to Sony, but in 2 years nextbox gen 2 is out with full BC and a spec that goes past Sony's for the same price. Two years after that they have the strongest living room box in terms of power, and MS has been taking in double, sometimes triple, the hardware sales. Again, I am not saying it's the right way to go, but if it was me I would certainly be looking at it as an option.

"I'm not saying it's a good thing just that I wonder of Ms are looking at the likes of apple and Android think they can shoe horn this type of product release schedule into the home living room box product."

The big problem with this is pricing. Consoles are usually sold with really low or negative margins, in the hope of making money on software and, later on, on reduced manufacturing complexity. This would not be possible if new consoles had to be designed and sold every year. They'd be constantly losing money on hardware. The only reason this model works for Apple is that they managed to convince people it's worth paying so much money for tablets and phones that they make a large profit on each device sold. No one will pay such a premium for a game console or set-top box; the PS3 very clearly proved that.

Durango is a generational leap on its own, and the differences between PS4 games and games on Durango won't be obvious to the general market, even if they're things that seem substantial to enthusiasts. Durango isn't underpowered, PS4 is just more powerful. There's a big difference. There's way too much money invested in its research and development to drop it after a couple of years; it'll be here to stay for the whole gen. It'll take a game or two for devs to even get a handle on how to really push it, and that'll be 2-4 years into the gen.

The differences between X360 games and PS3 games have driven plenty of 360 sales.