Agree 100%.

You know what's wrong with this thread? There's only so much one can say about a subject, and most of us say it and are done with it until there's something meaningful to discuss again.

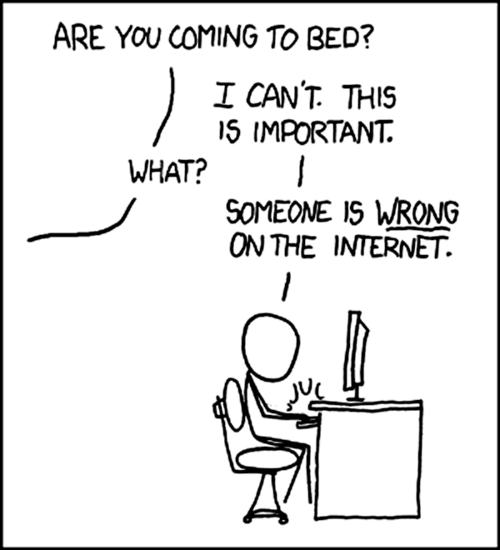

It's not so much that "nothing else can be said" as it is... well, this:

Seriously. This thread should be named "krizzx against logic and the world" or something; the dudes who have something meaningful to say have nothing on him (and no, this is not an attack).

Some of us have access to documentation or are developers, engineers or programmers; some are knowledgeable to various degrees or in various forms (no point in self-defining, really); some like to read technical jargon or simply want to have a good conversation (and I understand you're trying to fit this last criterion, but it's not working out, because...). This thread always goes nowhere when people keep insisting on the very same points. That includes people who pitch topics whose bottom line is "help me prove the Wii U is really something else", which are honestly as annoying as "it's not even better than the X360", and that's where my interest in this thread is dwindling. I'm very interested in reading knowledgeable posts, stuff I didn't know, or even different, not-previously-stated opinions, and don't get me wrong, but the krizzx circle has been pissing me off greatly for a while now, because we keep having silly arguments all over.

Anyway, my point is: dude, take a chill pill and stop putting your head under the guillotine for the Wii U's graphical prowess. Bumping a thread to put things like 1080p on the table is not something you're gonna get out of unscathed, not only because there aren't many Wii U games like that, but because everyone doubts that even the PS4 or XBone will be able to meet it (and for good reason). Suggesting the Wii U has hidden muscle to completely cloak that and pull 1080p as standard for loads of games, at 60 frames per second no less... is silly. And I understand there's ego involved and some people won't let you save face, hence you feel cornered all the time and go into damage control all over (and you're not an uneducated fellow, so you really try to patch things up and don't hold us in a bad light, as I don't hold you in one); but learn to save face before you're under everyone's scrutiny. It's that situation where you've made it a routine for a while, then somebody says "hey, that dude is annoying" and in the next moment it clicks for everyone; you've pushed it past that point, to the point of behaving like a caricature of yourself. I could pitch something just to take the piss and know you'd cling to it provided it catered to your wishes; that's the position you've put yourself in.

If anything, the fact that this has gone on for so long, and that you totally dominated the post count in a thread meant for discussing Wii U technicalities without having much to say or add, has certainly driven away lots of people who perhaps had something to say (I'd like to have seen more posts from Thraktor, Fourth Storm, wsippel, StevieP, bgassassin... I'm forgetting people for sure) instead of 655 posts by you (again, no offense, but you post too much for the reality of this thread). Or perhaps they tried, kept seeing the thread revolve around cyclic misinformation, stuff like the recent "MORE PERFORMANCE WILL BE UNLOCKED" in the next update, or speculation about "incomplete GPU firmware", and just gave up. You keep hoping for hugely unlikely best-case scenarios and, while you won't agree, you keep refusing to see the big picture; take the cue. My point: it's not just karma that makes people jump on you. Learn to hold back a little more and it'll go away. I want to read knowledgeable points, not 1080p silliness.

I wish Nintendo would release specs just for that, not because it's gonna change my outlook on anything (what the console is, is pretty obvious at this point), but because of things like this.

Sorry if I'm being too hard; it's not you, it's the situation. Carry on.