I would like to clarify something about this performance vs architecture thing.

Architecture doesn't refer only to having newer features, but also to things that affect performance in a crucial way.

Let's talk about GFlops. A flop is a floating point operation, which usually means taking two 32-bit operands and producing one 32-bit result. That's 96 bits moved per operation, so a 100 GFlops card would need (to reach 100% efficiency) a total bandwidth of 9600 Gbit/s, or 1.2 TB/s.
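To make that arithmetic explicit, here is a quick Python sketch. It assumes the same simplified model as above (every flop reads two 32-bit operands and writes one 32-bit result, with no reuse from caches or registers); the function name is just something I made up for illustration.

```python
def required_bandwidth_bytes_per_s(flops_per_s, operands=2, results=1, bytes_per_value=4):
    """Bandwidth needed if every operand and result travelled over the memory bus.

    Simplified model: no caches, no registers, no data reuse.
    """
    return flops_per_s * (operands + results) * bytes_per_value

# 100 GFlops card from the example above
needed = required_bandwidth_bytes_per_s(100 * 10**9)
print(needed * 8 / 10**9, "Gbit/s")   # bits per second, in Gbit/s
print(needed / 10**12, "TB/s")        # bytes per second, in TB/s
```

Real workloads reuse data heavily, so this is an upper bound on demand, not a measurement.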

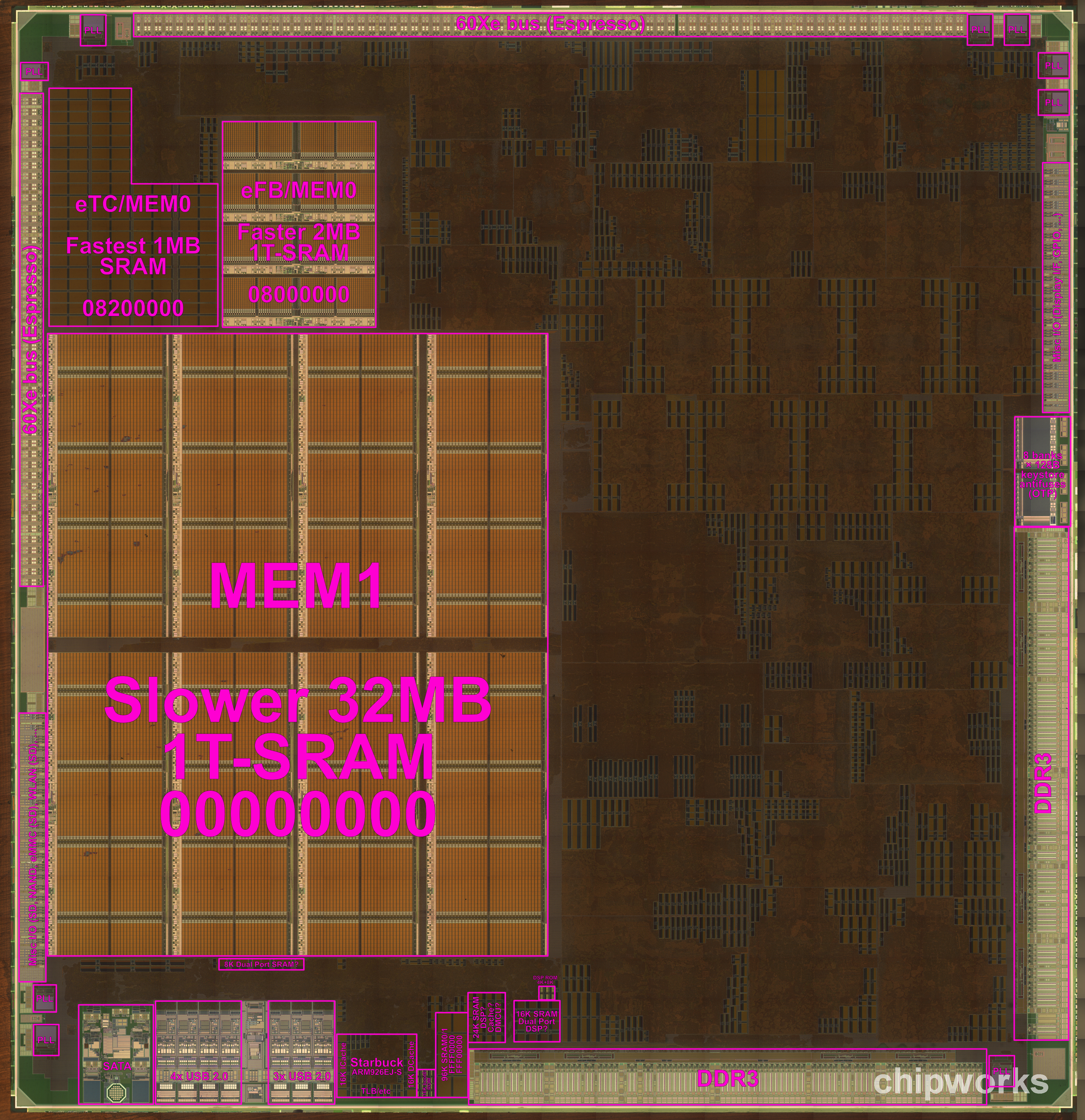

Of course, every GPU has local caches and registers that absorb most of that memory bandwidth need, but even on the most modern models those caches rarely surpass a few MB in total (counting everything from register memory to the L1 and L2 caches). And since they are different levels of memory, the real amount of data you can store without touching main memory is even smaller than that.

Now, even if the Xbox 360 GPU only had 200 GFlops of raw power (it had a bit more than that), it would need 2.4 TB/s of bandwidth for the GPU alone. It also had even smaller caches than current GPUs, which means its dependence on external memory was even bigger, and all there was outside the chip was the 512MB of main memory with 22.4 GB/s of total bandwidth and the write-only 32 GB/s bus to the eDram chip. Every time something stored in the 10MB eDram pool had to be read, it also hit the 22.4 GB/s main memory bandwidth, so in most cases those 22.4 GB/s were the real bandwidth limit (plus maybe a bit more for data that could be kept in the eDram and resolved inside the daughter die, like z-buffer updates, but even those operations involved compromises because 10MB isn't enough to handle a 720p resolution well).
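To put the gap in perspective, this small sketch compares the theoretical demand at 200 GFlops with the 22.4 GB/s of main memory bandwidth. It uses the same no-cache model as before (12 bytes moved per flop), so treat the result as a rough illustration and not a measured figure; the names are my own.

```python
BYTES_PER_FLOP = 12  # two 32-bit operands in, one 32-bit result out

def bandwidth_coverage(gflops, memory_gb_per_s):
    """Fraction of the worst-case bandwidth demand that memory can supply."""
    demand_gb_per_s = gflops * BYTES_PER_FLOP
    return memory_gb_per_s / demand_gb_per_s

coverage = bandwidth_coverage(200, 22.4)
print(f"{coverage:.2%}")  # 0.93%
```

In other words, under this model main memory covers less than 1% of the worst-case demand, and everything else has to come from registers, caches and the eDram.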

To that you have to add the bandwidth needed by the CPU (it was focused on fast matrix operations and reached around 70 GFlops in an ideal scenario, which made it a really bandwidth-hungry CPU) and the fact that it "only" had 1MB of L2 cache for all 6 threads it had to handle.

So let's compare that to the WiiU architecture. We don't know exactly how much bandwidth the 32MB of eDram provides, but knowing Nintendo, it wouldn't surprise me if it's at the same level as, or even higher than, the similar pool found on the Xbox One (this is Nintendo's number 1 priority when designing hardware). But what's most important is that it's a multipurpose pool of memory that can be both read and written. Not only is it much bigger than the Xbox 360 eDram die in terms of capacity, it can also serve everything stored in it without impacting the bigger pool of memory.

Regarding the CPU, it has an enormous L2 cache that provides 3-12 times (depending on the core) more memory space per thread than the Xbox 360 one, and its design, more focused on integer operations, isn't as dependent on bandwidth as the Xbox 360 CPU's. So all in all, its impact on the bigger pool of memory could be an order of magnitude smaller than the impact the Xbox 360 CPU had on its 512MB pool of memory, or even less than that.
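The 3-12 range can be checked with a quick sketch. The Xbox 360 figures (1MB of L2 shared by 6 threads) come from earlier in this post. The WiiU per-core L2 sizes (512KB, 2MB, 512KB) and one thread per core are assumptions on my side that aren't spelled out above, so take the output as illustrative.

```python
# Xbox 360 figures from this post: 1MB of L2 shared by 6 hardware threads.
XBOX360_L2_KB = 1024
XBOX360_THREADS = 6

# ASSUMPTION (not stated in this post): WiiU per-core L2 sizes in KB,
# with one thread running per core.
ASSUMED_WIIU_L2_KB_PER_CORE = [512, 2048, 512]

def l2_ratio_per_thread(wiiu_core_kb):
    """How many times more L2 a WiiU thread gets than an Xbox 360 thread."""
    return wiiu_core_kb * XBOX360_THREADS / XBOX360_L2_KB

ratios = [l2_ratio_per_thread(kb) for kb in ASSUMED_WIIU_L2_KB_PER_CORE]
print(ratios)  # [3.0, 12.0, 3.0]
```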

And as if all of that weren't enough, there are 3 more MB of cache on the GPU, which of course will also alleviate the bandwidth impact on both the 2GB pool of memory and the 32MB one.

All of that has a brutal impact on performance: not just the performance you see on paper when you compare shader processors and maximum flop output, but the performance you see on your screen when you run optimized code on the machine (in other words, it's from here that most of the "it punches above its weight" comments come).

Will it still be much closer to the Xbox 360 than to the Xbox One in terms of performance? Of course. Not only because the Xbox One is too far ahead in total brute strength for efficiency to make up the gap, but also because that console is much more efficient in its design than the Xbox 360.

The only scenario where the WiiU could be closer to the Xbox One than to the Xbox 360 is with deferred engines that rely heavily on the 32MB buffer, and only if this eDram pool has enough bandwidth to sustain all the graphical needs (although in this regard I'm pretty confident in what Nintendo does). Even then, the difference would still be big in favour of the Xbox One thanks to its much superior raw power.

All in all, I do think that under some circumstances the WiiU can still surprise us, but the diminishing returns that will partly hide the huge difference in power between it and the other next gen machines will also hide the difference between it and Xbox 360 games. In the end, as always, it will be the games that tell us how capable the system really is.

Nintendo may not have the biggest 3rd party support, but when it comes to technical feats it has more than respectable internal studios like Retro, and Shin'en Multimedia being a 2nd party will also help us see the power of this console unleashed, even if only in some low-budget games (but being low budget won't make them any less spectacular technically speaking).

The_Lump said:

What wsippel suggested and what you're suggesting above are both perfectly plausible hypotheses - and might well be correct - but there is no more evidence for them than what USC (and Fourth etc) have to arrive at the 176GFlop figure. In fact there is less. "Occam's Razor" and all that...

Well, Occam's Razor is in fact what makes me think that there is something more than a vanilla 176 GFlop R700 chip connected to the eDram. As I said in my previous message, a 2008 R700 chip had 352 stream processors on a die area of 146 mm^2, at 55nm.

Consider that the WiiU GPU has been laid out by hand in order to make better use of the available area, that 40nm provides a much higher transistor density than 55nm, that running at a lower frequency (550MHz vs 750MHz on the 2008 R700) may also increase the transistor density, even if not by much, and that 40nm in 2012 was a much more mature process than 55nm in 2008 (which means that comparatively even better density can be achieved)...

So in my case, it's precisely Occam's Razor that makes me think that the GPU chip is beefier than a 176 GFlop vanilla R700 (160 shader processors) with eDram. Not only can't I imagine Nintendo wasting even a single mm^2 of area on something useless, but its love of customized hardware also pushes me to believe that either the GPU has more power than those 176 GFlops, or it has a really respectable set of hardware functions, or a bit of both (352 shader processors with some hardware functions).

But 105 mm^2 for just a 160 shader vanilla R700? I don't think so.
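As a rough sanity check on the area argument, here is a back-of-the-envelope sketch. It assumes ideal scaling, where die area shrinks with the square of the process node ratio. That is a textbook simplification and real shrinks are never this clean (I/O pads and analog blocks barely scale), so treat the number as a lower bound on area. The 146 mm^2 and 105 mm^2 figures are the ones quoted in this post; the function name is made up.

```python
def ideal_shrink_area(area_mm2, from_nm, to_nm):
    """Die area after an idealized shrink: area scales with (to/from)^2.

    ASSUMPTION: perfect scaling of every block, which no real process achieves.
    """
    return area_mm2 * (to_nm / from_nm) ** 2

# 146 mm^2 R700 part at 55nm (figure from this post), moved to 40nm
shrunk = ideal_shrink_area(146, 55, 40)
print(f"{shrunk:.1f} mm^2 ideal area at 40nm vs 105 mm^2 quoted for the WiiU GPU")
```

Under that idealized assumption the whole 55nm part would land somewhere around 77 mm^2 at 40nm, which is the kind of gap that makes a 160 shader chip in 105 mm^2 look odd to me.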