
What I mean about OC differentials:

I'd wait before drawing any conclusions about OC capability, because the FE cards are hitting the power limit even at stock, and GDDR6X has some kind of ECC that allows clocking the VRAM up to unstable levels at the cost of performance, but without producing any artifacts, so unless you do a lot of testing, you're not going to notice.

Jack Videogames

Member

Well, the 2080 Ti's upgrade path is not the 3080... so this is expected. Like you said, wait for the 3080 Ti.

This is something most people are not taking into consideration and it's IMO very important. The 1080 was a noticeable improvement over the 980Ti on release, but the 2080 barely scraped past the 1080Ti which was a beast. The 3080 compared to the 2080Ti is back to 1080 vs 980Ti levels.

The x080 card is at its core a Tier 2 machine. 2080 Ti owners will have to get the Enthusiast Tier card, the 3090, or wait for a 3080 Super/Ti to get their money's worth.

ethomaz

Banned

Just to add.

2080TI is basically twice the price of the 3080.

Rikkori

Member

No, it can't; read the reviews.

That is a very low OC.

The card can push more.

Huh? What are you waiting for that's going to change? Unless you shunt mod, this is it. MAYBE Kingpin and the like will do better, but then you are paying a hefty premium for single-digit % gains. Hardly compelling.

I'd wait before drawing any conclusions about OC capability, because the FE cards are hitting the power limit even at stock, and GDDR6X has some kind of ECC that allows clocking the VRAM up to unstable levels at the cost of performance, but without producing any artifacts, so unless you do a lot of testing, you're not going to notice.

What you are describing has already been encountered by the reviewers and they point it out, so you're not getting bad data from unstable OCs because they've weeded those out already.

Jack Videogames

Member

Just to add.

2080TI is basically twice the price of the 3080.

That too. Let's see how the 30 series' price match, the 3090, compares to the 2080 Ti.

Spukc

always chasing the next thrill

No, better get 1500 W to be sure.

Soon you will be mine!!!

But seriously, I hope my 750 W power supply can still put out enough for this beast.

Strider Highwind

Banned

Thanks! If I don't get one, I don't get one. I'm honestly still doing OK at 1440p on my GTX 1080, but definitely want to upgrade if I don't have to pay exorbitant prices.

I'm a bit of a tech whore, so I'll probably camp out for the 3090, lol. But I'm like you... shit, I've got a 2080 Super and a beast of a system; it's not like there's anything coming out I won't be able to blast through. I've got a 4K monitor and a huge 1440p 165 Hz curved monitor, and tbh I actually prefer the 1440p one because of its size, and I like the curve...

But as my wife says "You just can't wait for shit you so got damn spoiled"

Rikkori

Member

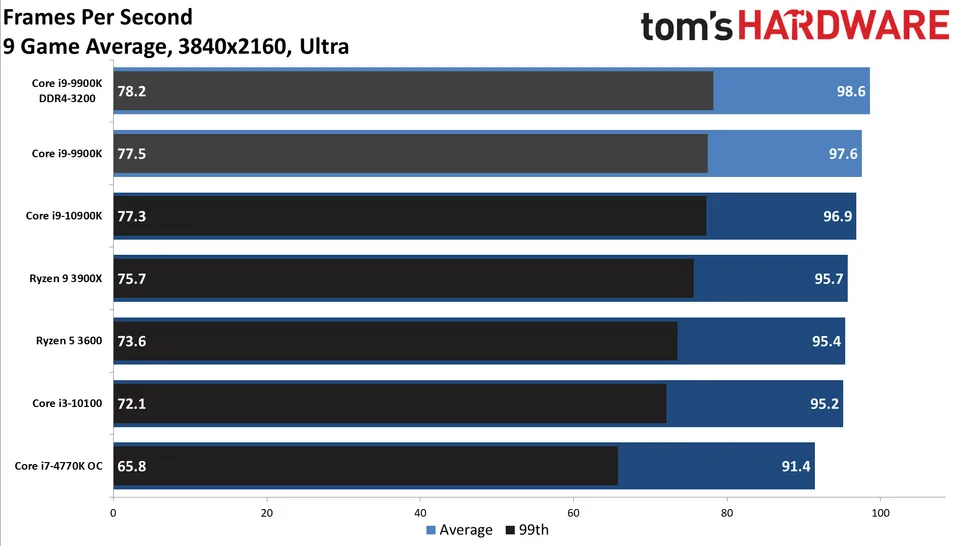

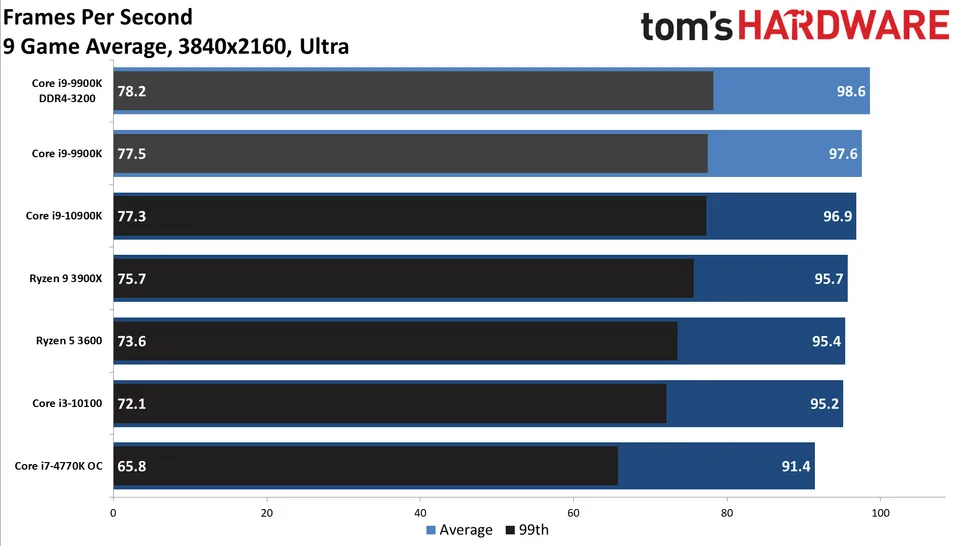

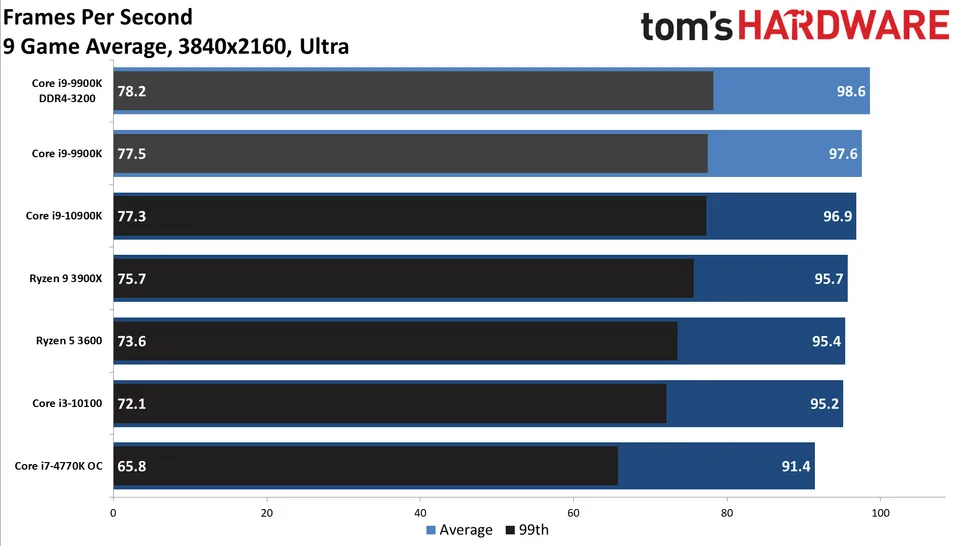

At least at 4K people don't have to rush out and upgrade CPUs:

ethomaz

Banned

I read reviews...

"The Ampere cards continue to deliver the good overclocking results usual for NVIDIA cards. We could get over 100 MHz on the RTX 3080 even with software not yet optimized for it (the latest version of Afterburner doesn't support this new generation yet), plus the drivers are still beta. We believe the clocks will get a bit higher with 'mature' drivers and software."

They overclocked to 1815 MHz (+115 MHz) on the core and 20.4 GHz (+1.4 GHz) on the memory without needing to change the voltage.

REVIEW: GeForce RTX 3080 - The biggest performance leap ever made by NVIDIA

The Nvidia GeForce RTX 3080 is the company's best graphics card for gaming. It introduces the new Ampere microarchitecture to the gaming market, and promises a

The temps are great too... better than the 1080 Ti and 2080 Ti, even when overclocked.

The OC gives about 5-10 more FPS on average in games that run at 60 fps.
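For scale, the overclock figures from the review above (1815 MHz core at +115 MHz, 20.4 GHz memory at +1.4 GHz) can be turned into percentage gains. A minimal sketch, assuming the quoted deltas are relative to the card's stock clocks, so stock = overclocked value minus the delta; the function name is just illustrative:

```python
def oc_uplift_percent(oc_value: float, delta: float) -> float:
    """Percentage gain of an overclock over stock, assuming stock = oc_value - delta."""
    stock = oc_value - delta
    return delta / stock * 100.0

# Figures quoted from the review: core 1815 MHz (+115), memory 20.4 GHz (+1.4)
core_gain = oc_uplift_percent(1815, 115)  # implied stock core: 1700 MHz
mem_gain = oc_uplift_percent(20.4, 1.4)   # implied stock memory: 19.0 GHz

print(f"core: +{core_gain:.1f}%, memory: +{mem_gain:.1f}%")
# prints: core: +6.8%, memory: +7.4%
```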


No, it can't; read the reviews.

Huh? What are you waiting for that's going to change? Unless you shunt mod, this is it. MAYBE Kingpin and the like will do better, but then you are paying a hefty premium for single-digit % gains. Hardly compelling.

What you are describing has already been encountered by the reviewers and they point it out, so you're not getting bad data from unstable OCs because they've weeded those out already.

AIB cards will raise the power limit, and we're eventually going to be able to flash BIOSes with higher power limits as well. Unless you get an FE and leave the BIOS unmodified, the OC results released today are not representative of the 30 series as a whole.

Steve didn't mention anything about the VRAM, IIRC (I watched a lot of reviews, so maybe I'm wrong?), and seeing as it's the first consumer-grade Nvidia GPU with GDDR6X, that's an odd omission.

supernova8

Banned

TL;DW if you have a 2080 of any kind, you don't really need to upgrade.

If you have a 1080 Ti it's a massive increase.

Shame we can't have 3070 benchmarks yet.


Rickyiez

Member


Not sure about that. The 2080 isn't that much faster than the 1080 Ti in rasterization, and the 3080 is as much as 80% faster than the 2080 at 4K. It's still a damn awesome upgrade for its price.

small_law

Member

I'm camping for a 3090 too. I'm actually scouting the 3080 launch at the store tonight so I can plan for next week.

I'm a bit of a tech whore, so I'll probably camp out for the 3090, lol. But I'm like you... shit, I've got a 2080 Super and a beast of a system; it's not like there's anything coming out I won't be able to blast through. I've got a 4K monitor and a huge 1440p 165 Hz curved monitor, and tbh I actually prefer the 1440p one because of its size, and I like the curve...

But as my wife says "You just can't wait for shit you so got damn spoiled"

I just don't think anyone's going to have significant availability beyond launch for a few months. Lingering supply chain and distribution issues aside, the crypto miners are starting to stir.

smbu2000

Member

The 3080 seems like a great improvement over the 2080, but that is really because the 2080 was zero improvement over the 1080 Ti. If the 2080 Ti had been slotted into the same price slot as the 980 Ti/1080 Ti, then the 3080 would still be a good upgrade over the 2080 Ti.

The 3090 is more like the Titan replacement without having to use the Titan branding/Nvidia-only cooling solution so it can't really be compared to the 2080ti.


Why would you be against a company? My previous system was Intel (i7-5960X)/Nvidia (980 Ti), then I switched to AMD (3900X)/AMD (5700 XT), and recently switched my GPU back to Nvidia.

I'm very firmly set against AMD; even if their cards are very very good, I won't buy them. Only Nvidia.

9700k and 2080s.

Just use what's best.

In the past I've used Intel/AMD/Nvidia/3DFX/ATI, etc. Why limit yourself?

supernova8

Banned

Not sure about that. The 2080 isn't that much faster than the 1080 Ti in rasterization, and the 3080 is as much as 80% faster than the 2080 at 4K. It's still a damn awesome upgrade for its price.

Yeah fair enough, perhaps I should say 2080 Super or Ti.

In other words if you've spent a fortune on a card in the last year or so, don't waste your money unless you really have a crazy amount to waste.

GaviotaGrande

Banned

Great value. I wonder how the 3090 will fit in this picture.

Nice.

Krappadizzle

Member

It's more than enough. Most reviews are showing ~550 W power draw, and that's with RAM and CPU overclocks. 700 W is plenty.

If I wanted to upgrade from my 1080 Ti, I am not sure my 700 W PSU is enough. This is disappointing considering they are on a new, smaller process node.
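A rough PSU headroom check for the numbers in this exchange (~550 W full-system draw, 700 W and 750 W supplies). This is a sketch that treats the reported draw as the load on the PSU; if a review measured it at the wall, the real load on the PSU is somewhat lower, because wall readings include the PSU's own conversion losses. It only looks at the average figure quoted, and the function name is illustrative:

```python
def psu_load_percent(system_draw_w: float, psu_rating_w: float) -> float:
    """Share of the PSU's rated output used by a given total system draw."""
    return system_draw_w / psu_rating_w * 100.0

# ~550 W full-system draw reported in reviews, against the PSU sizes discussed here
for rating in (700, 750):
    load = psu_load_percent(550, rating)
    print(f"{rating} W PSU: {load:.0f}% load, {rating - 550} W headroom")
# prints:
# 700 W PSU: 79% load, 150 W headroom
# 750 W PSU: 73% load, 200 W headroom
```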

Bolivar687

Banned

At least at 4K people don't have to rush out and upgrade CPUs:

Yeah, I'm definitely not upgrading my 4790k anytime soon.

Krappadizzle

Member

Well, the 3090 won't be winning any value awards. It'll easily be the second or third worst on the list for value; it'll just be the king of performance. Look at it like this: you'll be paying more than 2x the price of the 3080 and probably getting only another 15-25% improvement in performance.

Great value. I wonder how the 3090 will fit in this picture.

Which is absolutely acceptable if you know what you should be expecting.
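Putting rough numbers on that value argument: a sketch comparing performance per dollar, assuming the 3080's $699 price, the 3090's announced $1,499 launch price (not stated in this thread), and the 15-25% uplift guessed in the post. The helper name is hypothetical:

```python
PRICE_3080 = 699   # USD, from the thread
PRICE_3090 = 1499  # USD, assumed: announced launch price, not stated in this thread

def relative_perf_per_dollar(uplift: float, price: float, base_price: float) -> float:
    """Perf per dollar of a card `uplift` faster than a base card, relative to the base.

    1.0 means equal value; below 1.0 means less performance per dollar.
    """
    return (1.0 + uplift) / (price / base_price)

for uplift in (0.15, 0.25):  # the 15-25% range guessed in the post
    value = relative_perf_per_dollar(uplift, PRICE_3090, PRICE_3080)
    print(f"3090 at +{uplift:.0%}: {value:.2f}x the 3080's perf per dollar")
# prints:
# 3090 at +15%: 0.54x the 3080's perf per dollar
# 3090 at +25%: 0.58x the 3080's perf per dollar
```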

Krappadizzle

Member

Maybe with DLSS. The 3090 won't be a great 8K card unless DLSS becomes standard. It'll be a great top-of-the-line 4K card, though.

What the F is all this talk about 4K?

The 3090 is all about 8K

GaviotaGrande

Banned

I'm upgrading from a 2080 Ti, which is almost at the bottom of that chart. I'm hoping for at least a ~30% average improvement over the 3080. That would make me a happy boy.

Well, the 3090 won't be winning any value awards. It'll easily be the second or third worst on the list for value; it'll just be the king of performance. Look at it like this: you'll be paying more than 2x the price of the 3080 and probably getting only another 15-25% improvement in performance.

Which is absolutely acceptable if you know what you should be expecting.

Looks like Nvidia pulled an AMD and the 3080 would benefit a lot from being undervolted (assuming it's not a bottom of the barrel bin):

Nice. I'll have to look into this when I get one.

GymWolf

Member

Are you telling me that a 750 W might not be enough?

Starting to worry about my 750 W, the power draw is not to be trifled with

Tranquil

Member

The 3080 should be compared to its equivalent card, which is the 2080.

It bugs me a little that it's being compared to the 2080 and not the Super. I guess it makes for slightly more impressive numbers.

Jack Videogames

Member

Are you telling me that a 750 W might not be enough?

Seems to be enough; benchmarks are showing around 550 W with a full system, but some reviews have reported power draws of 440 W for the 3080 itself, which is almost absurd.

Krappadizzle

Member

What are you playing that you can't wait? If I had a 2080 Ti, I'd wait, plain and simple, for a 3080 Ti. Coming from the 10xx series, or at most the 2060, is where one should be considering an upgrade. There are gonna be some great revision models in ~6 months or so. I'd at least wait for the revisions, as there's no reason to get rid of a 2080 Ti.

I'm upgrading from a 2080 Ti, which is almost at the bottom of that chart. I'm hoping for at least a ~30% average improvement over the 3080. That would make me a happy boy.

If I could buy a 2080 Ti for $350 or so, I'd buy one right now and just wait for the revisions.

GymWolf

Member

I have to be honest, only 25-30% better than the 2080 Ti without RTX doesn't sound all that hot after all the big talk...

So reviews/benchmarks for the most part seem to be pretty good!

Rasterization:

Avg of +25-30% uplift vs stock 2080ti at 4K

Ray Tracing:

Minor uplift with mixed rendering

Major uplift with only Path Tracing

Productivity:

Great performance boost in Blender and most productivity applications. Much higher than in gaming.

Cooling:

Great custom cooler on the FE cards, keeps temps in line and is relatively quiet for the power draw. Great job by Nvidia here. (will AIBs fare as well?)

Power Draw:

No getting around it: this card draws a huge amount of power, but I think we all knew that already based on the 320 W TDP. If power draw is a big deal to you, this might not be the card for you. If you only care about performance, you are good to go. You will most likely need a minimum 750 W PSU.

Overclocking:

Doesn't appear to have much OC potential in gaming. Not sure what that means for AIB cards but I'm sure they will be able to squeeze out a little more performance. Keep an eye on power usage though.

Price:

Compared to Turing? Absolutely great at 699 dollars for 25-30% more performance than a 2080ti.

Does it live up to the hype?:

Well... no, but in fairness the hype was absolutely insane, with funky TFLOP numbers and Nvidia claiming 2x the performance of a 2080. Having said that, it seems to be a great card and a good buy overall; I think anyone getting this card will be really happy with the results.

Maybe not a great buy if you already have a 2080 Ti; you may want to wait for an eventual 3080 Ti or just splurge on the 3090. Memory is a little low for a 2020 flagship GPU, but we all know that higher-memory models are just around the corner, so if you are happy with 10 GB of VRAM, buy away; those not in a rush might want to wait for the larger-memory models.

If you are a 1080ti owner then the consensus seems to be that this is a great upgrade and will be well worth the money.
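The price point in the summary can be put in perf-per-dollar terms. A rough sketch, assuming $699 for the 3080, the ~$1,200 2080 Ti price that comes up elsewhere in the thread, and the 25-30% uplift from the summary; the helper name is illustrative only:

```python
PRICE_3080 = 699     # USD, from the summary
PRICE_2080TI = 1200  # USD, assumed: the rough 2080 Ti price used elsewhere in the thread

def perf_per_dollar_ratio(uplift: float, new_price: float, old_price: float) -> float:
    """How many times more performance per dollar the new card gives vs. the old one.

    `uplift` is the new card's performance gain over the old card (0.25 = 25% faster).
    """
    return (1.0 + uplift) / new_price * old_price

for uplift in (0.25, 0.30):
    ratio = perf_per_dollar_ratio(uplift, PRICE_3080, PRICE_2080TI)
    print(f"+{uplift:.0%} performance: {ratio:.2f}x the perf per dollar of a 2080 Ti")
# prints:
# +25% performance: 2.15x the perf per dollar of a 2080 Ti
# +30% performance: 2.23x the perf per dollar of a 2080 Ti
```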

Isn't that in line with the usual jump from top-tier card to top-tier card? Was the difference between the 1080 Ti and 2080 Ti that much lower?


IbizaPocholo

NeoGAFs Kent Brockman


Rikkori

Member

Looks like Nvidia pulled an AMD and the 3080 would benefit a lot from being undervolted (assuming it's not a bottom of the barrel bin):

Was just about to post this, this is exactly the right approach. Was the same story for Vega.

GaviotaGrande

Banned

What are you playing that you can't wait? If I had a 2080 Ti, I'd wait, plain and simple, for a 3080 Ti. Coming from the 10xx series, or at most the 2060, is where one should be considering an upgrade. There are gonna be some great revision models in ~6 months or so. I'd at least wait for the revisions, as there's no reason to get rid of a 2080 Ti.

If I could buy a 2080 Ti for $350 or so, I'd buy one right now and just wait for the revisions.

I hardly play anything lately. I'm buying it simply because I can.

Amateurs.

AquaticSquirrel

Member

I have to be honest, only 25-30% better than the 2080 Ti without RTX doesn't sound all that hot after all the big talk...

Isn't that in line with the usual jump from top-tier card to top-tier card? Was the difference between the 1080 Ti and 2080 Ti that much lower?

I'm not 100% sure about the 1080 Ti vs 2080 Ti, but I think it was around 20-25% at 4K.

Of course, given that the 1080 Ti wasn't the most amazing card in the world for 4K, we are looking at a smaller jump in the number of frames.

At 1080p I think there was almost no difference at all, or at least within like 5-7%.

I think the biggest issue was the price-to-performance ratio at 1200 dollars for a 2080 Ti, plus at the time most people were not using 4K monitors and were likely playing at 1080p/1440p, compared to now.

I still think 2080 Ti to 3080 (25-30%) is a solid enough jump, roughly in line with pre-release leaks and expectations in the tech community. The problem was that once the Nvidia hype machine started, a lot of people lost any sense of rationality and got swept up expecting crazy performance boosts. A few people tried to bring expectations back to reality, but most were too caught up in the hype.

In closing, it is only really disappointing if you bought into the hype. Otherwise it comes in around where we expected for a better price but with lower memory than originally expected.

So in gaming: good but not mind blowing. That Blender performance does look really tasty though.

GymWolf

Member

I bought into the hype, yeah.

And the only blender I use is for making smoothies; I'm only interested in in-game performance.

Basically, this card and most probably the 3090 are not gonna be enough to max Cyberpunk at 4K60 with RTX without DLSS. I know that ultra details are for morons and DLSS looks splendid, but still, Cyberpunk is a full-fledged current-gen game, not even a next-gen one...

Krappadizzle

Member

The difference between the 2080 Ti and 1080 Ti was like 20-30%, while adding $500 on top of the card's price.

I have to be honest, only 25-30% better than the 2080 Ti without RTX doesn't sound all that hot after all the big talk...

Isn't that in line with the usual jump from top-tier card to top-tier card? Was the difference between the 1080 Ti and 2080 Ti that much lower?

They essentially released the 1080 Ti three times in the 20xx series, with the 2070, 2070 Super and 2080, and added RT and tensor cores on top. In practical application there was no value.

What the 30xx series is doing is bringing it back in line with what it used to be. At a slight premium price.

Jensen himself said that if you were on the 10xx series, this is the card for you.

People on the 20xx series are either inexperienced with GPU upgrades or were smoking crack rocks in thinking there'd be way higher jumps. Nvidia didn't do themselves any favors with their "2x the performance of the 2080" claim. It's more like 70% performance gains overall.

Which is fantastic. Nvidia shouldn't have hyped the cards so much and should have just let the numbers speak for themselves, because the 30xx is a performer and way better value than the garbage value of the 20xx series.

I'm getting a 3080 for Cyberpunk and I expect it to run just about everything maxed out or close to it at 3440x1440 with a great frame rate (85+ fps). And I'm super excited.


GymWolf

Member

I don't know if the jump from my 2070 Super is enough...

I'm always impulsive when I have to buy a GPU.

Barry Curtains

Member

3080 compared to 2080 Ti by 2kliksphilip.

Krappadizzle

Member

If I were in your situation, I'd honestly wait. If Cyberpunk is what you are upgrading for, I'd really wait and see what DLSS can do for the title. I think it's gonna work really well for the game. People forget that CDPR is a PC developer at heart, and all their games have scaled very well across a ton of different hardware configurations. Just get out of the mindset of needing to max shit. It's a heartbreaking endeavor that'll leave you constantly disappointed.

I remember Ubersampling in The Witcher 2 and how upset I was that I couldn't run it. Turns out no one could run it; it's hard to run even to this day.

Max what you can for a frame rate that's acceptable; if you can't get that anymore, then upgrade.

My 1080 Ti isn't doing it very well anymore at 3440x1440 120 Hz, so it's time for ME to upgrade.

If I were sitting on a 2070 Super or higher, I'd just wait until the card can't do it anymore.

Though I get it: it's fun to jump into the mix on new hardware, and the experience and camaraderie of new hardware with buddies and forum friends can be a really fun time.

GymWolf

Member

I haven't been on board with ultra settings for the last 10 years of PC gaming; I already know all of that.

The fact is, sometimes you really just want to put everything on max and start playing instead of losing hours getting the perfect setup for heavy or broken games (i.e., 80% of PC ports).

I have 35 hours in the fucking Avengers game and I'm still searching for the best performance; sometimes it's just beautiful to brute-force everything and just fucking play the video game...

I was able to do that with the 970 or 1070 at 1080p for a while, but here it seems you have to study settings even for the first big game that comes out to get decent performance from an 800-euro GPU... it's kinda underwhelming, even with everything we discussed in mind.

Tripolygon

Banned

Beastly GPU but it sucks a lot of power which is to be expected from the size of it.

thelastword

Banned

Imagine if they used a max-OC'd Intel system and also OC'd the GPU; that 750 W would be on the verge. Cutting it a bit too close, imo.

Starting to worry about my 750 W, the power draw is not to be trifled with

Imagine the power draw of the 3090 with 24 GB of VRAM.

That power draw and only 10 GB of VRAM. A big nope for me.

Every time Nvidia releases new cards I hear this, because they use games made for last-gen cards, with boosted fps. Even then, many current-gen games will not run at 4K 60 fps... When the new-gen games start releasing next year, I'd like to hear about this 4K 60 fps with upcoming games during the lifetime of this GPU, with only 10 GB of VRAM going forward.

Seems like we finally have proper 4K 60 fps cards on the market.

ethomaz

Banned

Hum? I've said every single time that there is no proper 4K GPU on the market unless you want 30 fps.

This is the first time I've said a GPU has finally reached the power for 4K 60 fps.

Tripolygon

Banned

The whitepaper for Ampere

NVIDIA Ampere Architecture Whitepaper Available Now

Peek under the hood of the new NVIDIA Ampere GA10x architecture, which powers GeForce RTX 30 Series graphics cards.

www.nvidia.com

Rickyiez

Member

Looks like Nvidia pulled an AMD and the 3080 would benefit a lot from being undervolted (assuming it's not a bottom of the barrel bin):

Very cool, will try this. Even without the undervolt, I'm only seeing 500 W system consumption, which is kinda reassuring.