dgrdsv

You don't need to reduce anything on a 12GB card unless you're aiming at 4K+PT+FG, which is likely too heavy for a 5070's performance anyway.

Nope. I'm okay reducing texture quality with my now-ancient 3070.

But with a brand-new 5070? Nah. It should have 16 GB of VRAM.

Even the 3070 could run maximum texture quality up until 2023, so this is even worse than the 3070 was at launch.

"Should have" is nice. Are you ready to pay $100 more for something that will help you in half a dozen games over the card's lifespan?

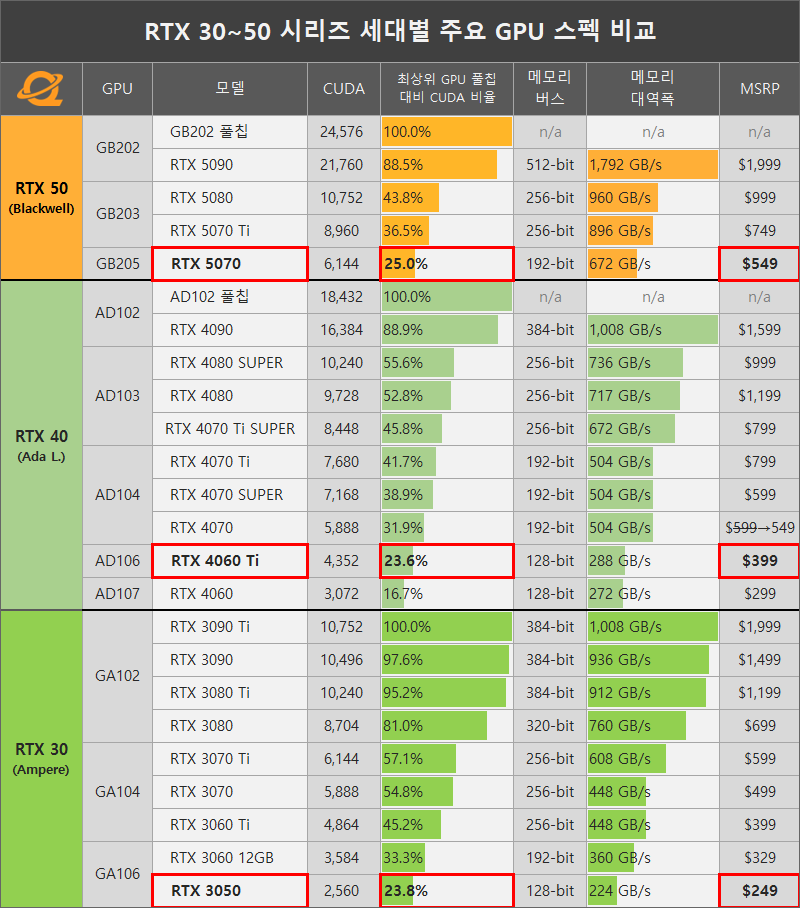

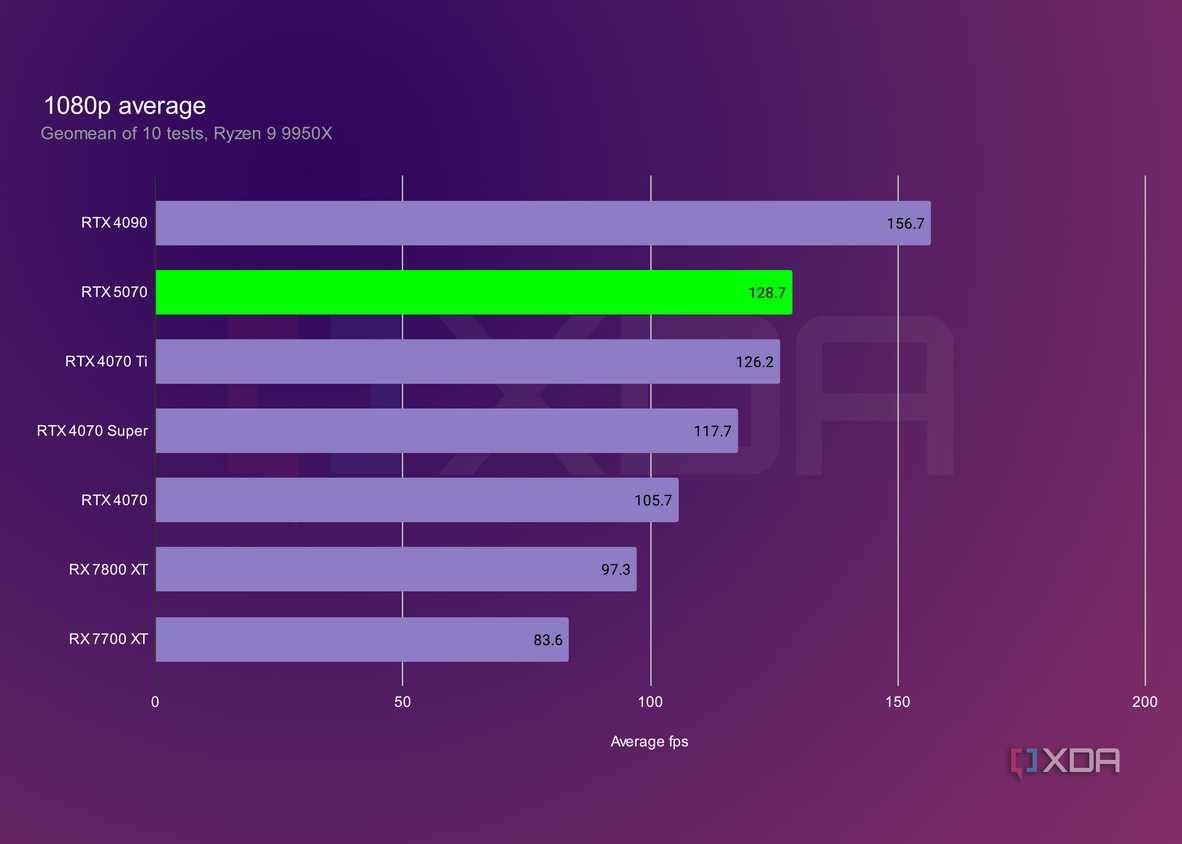

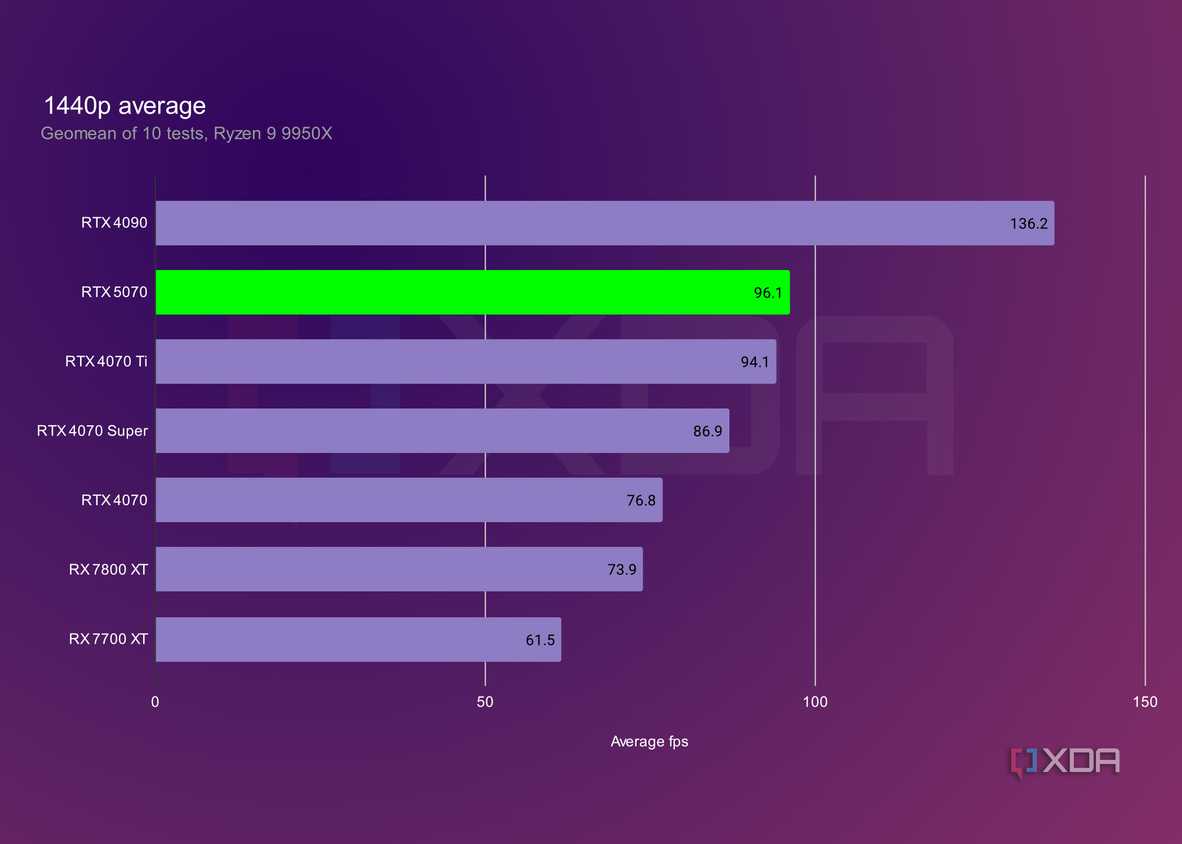

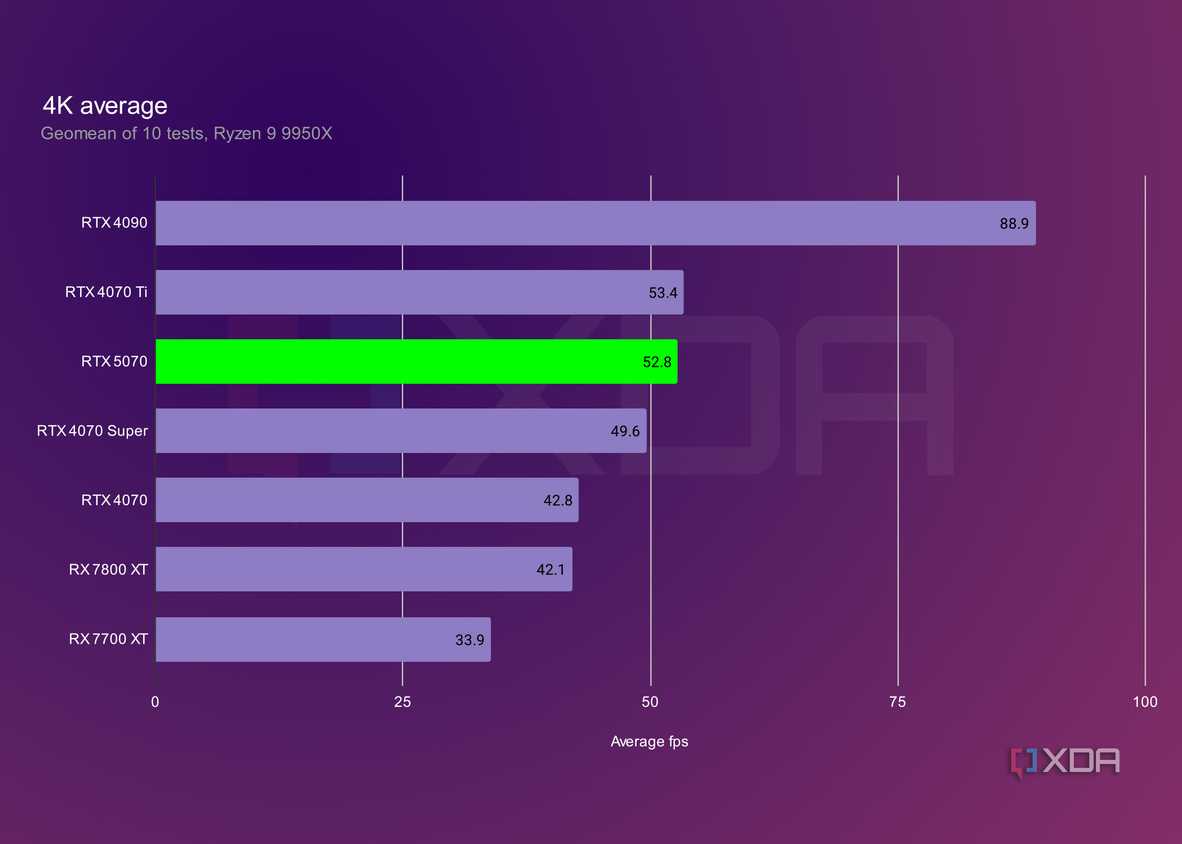

31 TFLOPS vs 40 TFLOPS

The fact that it's actually slower than a 4070 Ti is pretty crazy.

$550 vs $800 launch price

Why is this "crazy"?
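The arithmetic behind that reply can be made explicit. This is a minimal sketch that only divides the figures quoted in the thread (31 vs 40 TFLOPS, $550 vs $800 launch price); the helper name `gflops_per_dollar` is hypothetical, and peak TFLOPS is assumed here to be a rough proxy for performance, not a measure of actual game frame rates.

```python
# Figures quoted in the thread: peak TFLOPS and launch price in USD.
# Assumption: peak TFLOPS is only a rough proxy for real game performance.
CARDS = {
    "RTX 5070": {"tflops": 31.0, "price_usd": 550.0},
    "RTX 4070 Ti": {"tflops": 40.0, "price_usd": 800.0},
}


def gflops_per_dollar(tflops: float, price_usd: float) -> float:
    """Hypothetical helper: convert TFLOPS to GFLOPS and divide by launch price."""
    return tflops * 1000.0 / price_usd


def main() -> None:
    new = CARDS["RTX 5070"]
    old = CARDS["RTX 4070 Ti"]
    # Share of the 4070 Ti's compute and price that the 5070 represents.
    compute_ratio = new["tflops"] / old["tflops"]
    price_ratio = new["price_usd"] / old["price_usd"]
    # Above 1.0 means more compute per dollar than the 4070 Ti.
    value_ratio = compute_ratio / price_ratio
    for name, spec in CARDS.items():
        value = gflops_per_dollar(spec["tflops"], spec["price_usd"])
        print(f"{name}: {value:.1f} GFLOPS per $")
    print(f"Compute: {compute_ratio:.1%} of the 4070 Ti")
    print(f"Price: {price_ratio:.1%} of the 4070 Ti")
    print(f"Compute per dollar: {value_ratio:.2f}x the 4070 Ti")


if __name__ == "__main__":
    main()
```

With these inputs the 5070 has 77.5% of the 4070 Ti's quoted TFLOPS at about 69% of its launch price, which works out to roughly 13% more compute per dollar.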