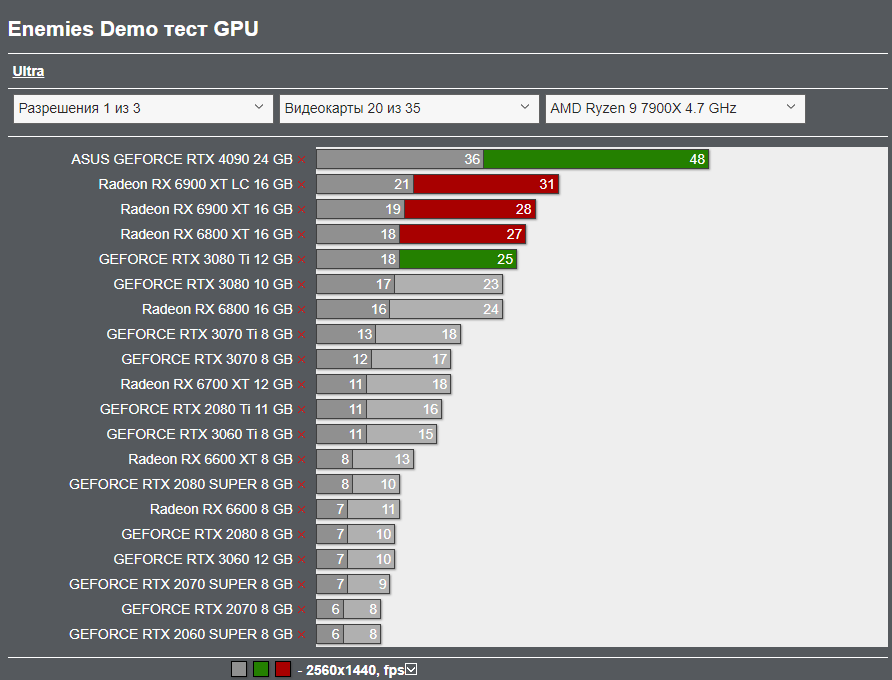

I've been thinking about that and checked my suspicions against TechPowerUp's specs for each of the cards, looking at FP16 (Rapid Packed Math) throughput. Nvidia is getting nothing extra there, while the AMD cards are getting double the FLOPS, just like Cerny said about the PS4 Pro IIRC. So there's every likelihood that even the 7900 XT will run comparatively well against the 4090 in UE5.

https://www.techpowerup.com/gpu-specs/geforce-rtx-3080.c3621

https://www.techpowerup.com/gpu-specs/radeon-rx-6950-xt.c3875

https://www.techpowerup.com/gpu-specs/geforce-rtx-4090.c3889

https://www.techpowerup.com/gpu-specs/radeon-rx-7900-xtx.c3941
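For what it's worth, the comparison I'm drawing from those spec pages boils down to a one-line calculation. Here is a rough Python sketch of it. The function name and the 10 TFLOPS input are made up for illustration and are not figures from the pages above; the ratio stands for the FP16:FP32 rate as listed for each card.

```python
def fp16_tflops(fp32_tflops: float, fp16_ratio: float) -> float:
    """Peak FP16 throughput from peak FP32 throughput and the FP16:FP32 rate ratio."""
    return fp32_tflops * fp16_ratio


# Hypothetical card with 10 TFLOPS FP32 (placeholder, not a real spec).
same_rate = fp16_tflops(10.0, 1.0)    # FP16 runs at the same rate as FP32
double_rate = fp16_tflops(10.0, 2.0)  # packed math: two FP16 ops per FP32 lane
print(same_rate, double_rate)
```

This is peak throughput only; it says nothing about how much of a real workload actually runs in FP16.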

On older games, I suspect the ability to batch all the independent deferred render passes and run them in parallel on these monster cards is what results in the high frame rates. On an Nvidia card, RT can run in parallel with that work too, so it is ready earlier for the gather pass that produces the final frame. On the AMD cards, the BVH accelerator is part of the CU, so without an excess of CUs for both rendering and RT, that work probably gets done a pass later, which would be why the RTX cards have consistently beaten them.

With UE5, the Rapid Packed Math boost on AMD probably means that Nanite takes far less time, leaving headroom to do the Lumen pass just as fast without dedicated RT cores. The RTX cards probably can't use their RT cores until Nanite finishes, meaning those cores are probably idle during Nvidia's slower Nanite processing. Or at least that's what I would expect, and I now think that long term AMD will get massive gains in all UE5 games.
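To make the scheduling argument concrete, here is a toy frame-time model in Python. It is only a sketch of my guess above, not how either vendor's hardware actually schedules work. The function names and every timing are invented placeholders, not measurements.

```python
def frame_ms_parallel_rt(raster_ms: float, rt_ms: float, gather_ms: float) -> float:
    """RT overlaps the raster passes; gather waits for whichever finishes last."""
    return max(raster_ms, rt_ms) + gather_ms


def frame_ms_shared_units(raster_ms: float, rt_ms: float, gather_ms: float) -> float:
    """RT competes for the same units as raster, so the two run back to back."""
    return raster_ms + rt_ms + gather_ms


# All timings are invented placeholders, not measurements.
print(frame_ms_parallel_rt(6.0, 4.0, 1.0))   # 7.0
print(frame_ms_shared_units(6.0, 4.0, 1.0))  # 11.0
# Same shared-unit card if the raster/Nanite stage took half the time:
print(frame_ms_shared_units(3.0, 4.0, 1.0))  # 8.0
```

In this toy model the overlap is what gives the first case its lead, and a faster raster stage closes most of the gap for the second. If RT could not start until the raster stage finished on the first card either, it would fall back to the back-to-back formula as well.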