- it's an RT coprocessor that sits in the same cluster of the CU as the texture mapping units.

- it's doing exactly the same steps in the RT pipeline as the RT core in nvidia's archs does. nv is not doing any step of the RT pipeline in its RT core that amd isn't doing in its coprocessor!!!

- therefore it doesn't matter whether it's inside or outside the compute unit

- (it might even be better if it's inside the CU, because in theory that should use less energy for the same work and save die space)

- AMD could also have named it RT core (if it isn't taken)

- in fact sony is calling it (iirc) intersection engine and amd intersection unit (edit: in its patents. going by the newly released spec sheet they are calling it Ray Accelerator now)

that info is all out there in the fucking raytracing patents
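
for anyone wondering what "intersection testing" means in practice: the basic job is checking a ray against bounding boxes and triangles. below is a rough python sketch of the textbook slab test for ray vs. axis-aligned box. this is NOT taken from the patents or from either vendor's hardware, it's just the generic algorithm, and the function name `ray_hits_box` is made up for illustration.

```python
import math

def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t*direction (t >= 0) touches the box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # ray runs parallel to this pair of planes:
            # it can only hit if the origin already lies between them
            if o < lo or o > hi:
                return False
            continue
        # distances along the ray to the two planes of this axis
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # shrink the interval in which the ray is inside all slabs so far
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

# ray along +x towards a box sitting between x=1 and x=2
print(ray_hits_box((0, 0, 0), (1, 0, 0), (1, -1, -1), (2, 1, 1)))
```

a BVH traversal is basically lots of these box tests followed by a few ray/triangle tests at the leaves, which is why this kind of work is worth putting into fixed-function hardware.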

------------------------------------------------------------------------

on the topic of raytracing performance: as of now we only have two (maybe questionable) datapoints.

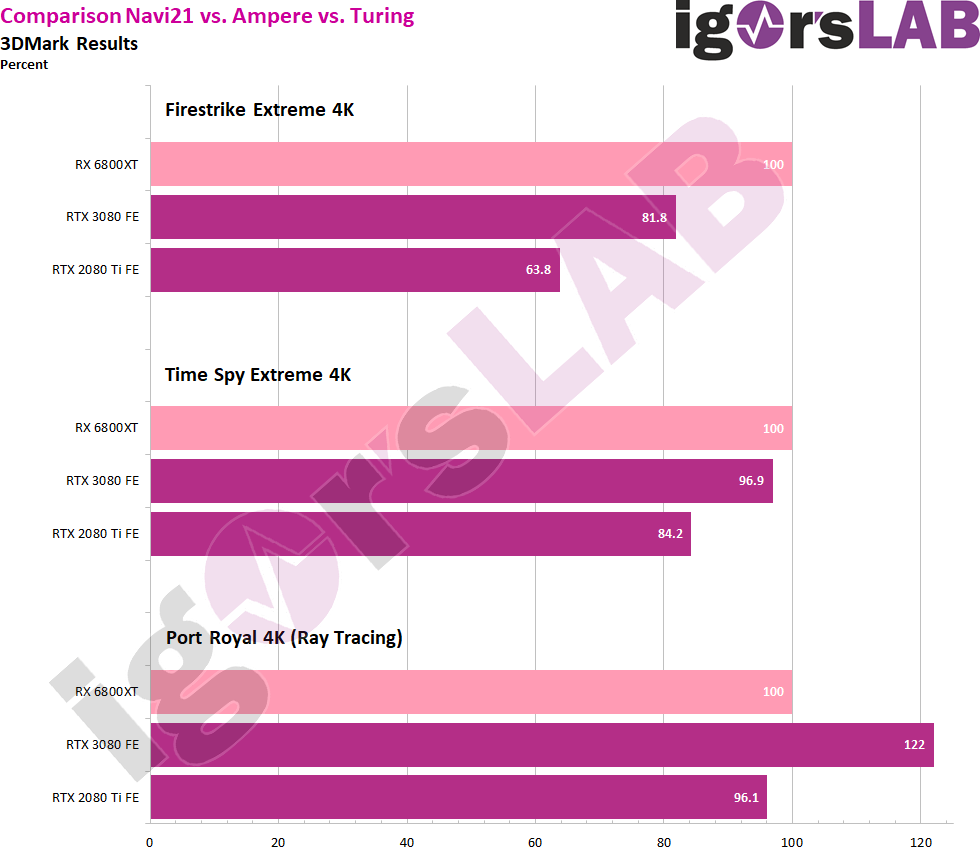

first one is port royal (the RT benchmark in 3DMark) from leaks:

that's 18% down on the 3080. not brilliant, but keep in mind that the drivers might not be where they need to be yet. igor's datapoint is 5415 points in 4K port royal. my gigabyte 3080 gaming OC also does around 5400 points at 4K. that said, there is no standardised 4K test in port royal (the standard port royal run uses a lower res), so we can't be sure the results are really comparable.

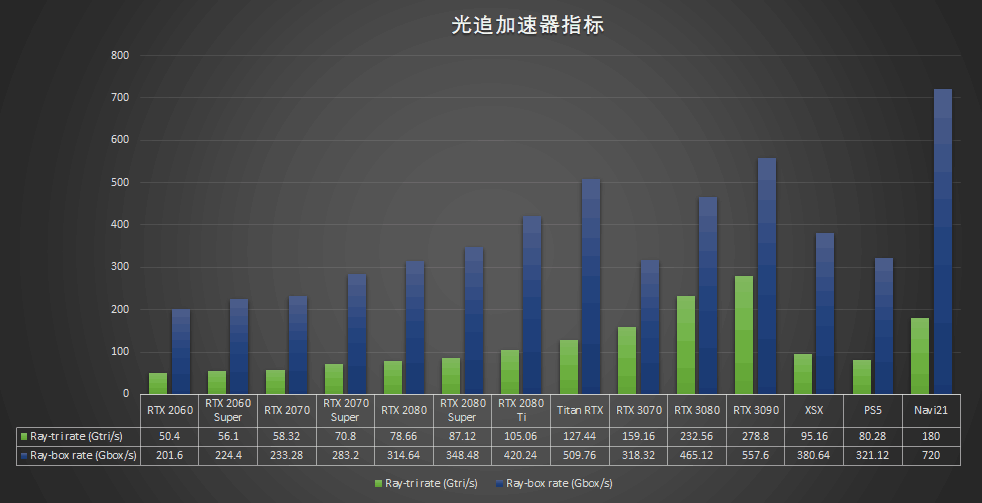

second datapoint is from a chinese leaker board that's probably full of AIB employees and 3rd party engineers. it's a theoretical performance comparison of the different intersection testing types:

so make of that what you will. but i think we will get lots more info in the coming hours / days.