I'm waiting for this so I can do an ITX build next month.

Is that the only ITX board that's been announced?

The game in question is DX9.

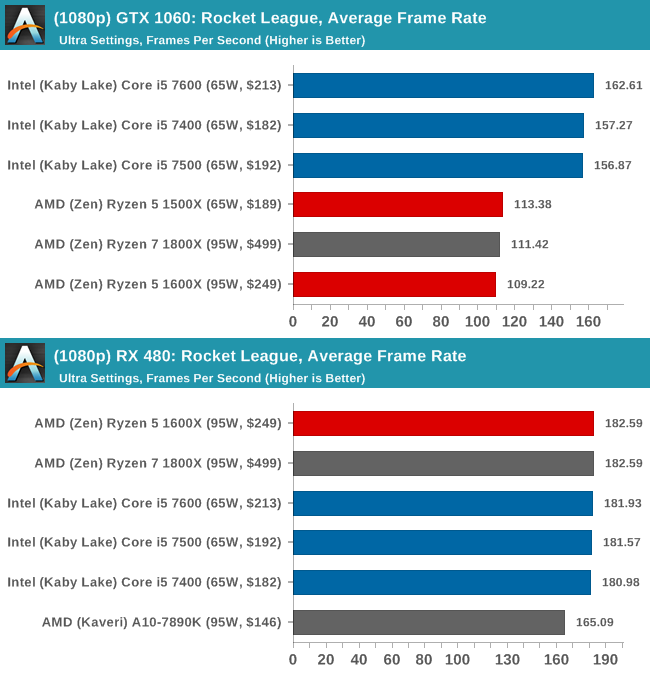

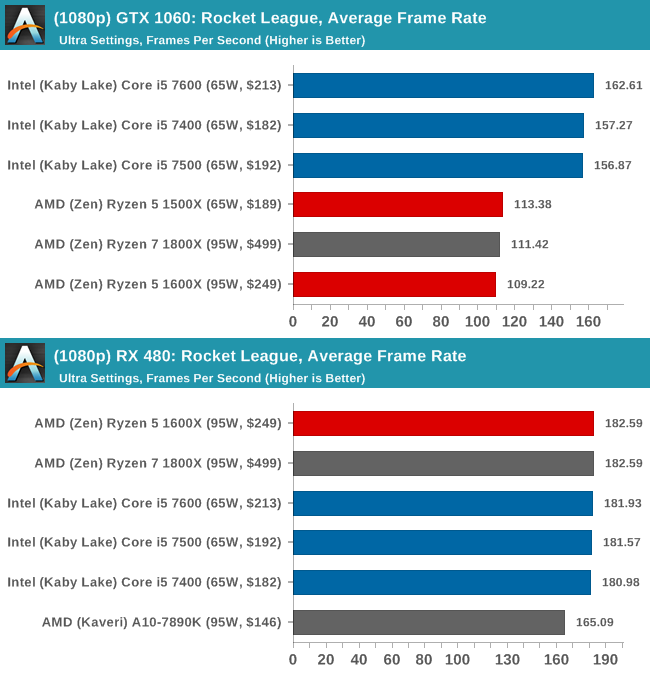

·feist· said: Note, Tech Report and TechPowerUp used only Nvidia GPUs for their testing.

Would be really interesting to see if and how that specific chart would differ with AMD GPUs. Maybe once Vega is out.

I wonder if Rocket League would perform better on Kepler cards like the 780 Ti, since they might have spent more effort on DX9 with the older generations of GPUs.

It may well. Though the oddity is Nvidia randomly performing 25% weaker on Ryzen than on Intel for seemingly no reason.

So it sounds like the 1600 and 1500 trade blows with the 7600K at cheaper price points. Also sounds like a 1400 isn't something people should be looking at unless they really wanna go budget.

The 1400 is pretty good too; it's just that it has half the L3 cache of the other R5s. More cache, higher clocks and higher memory speeds all yield better performance.

At this point, I'm thinking the R3s will also have the same 8MB of L3 cache as the R5 1400...

The top R3 model may have the same base/turbo clock as the R7 1800X and R5 1600X and 16MB of L3 cache, just to have an oddly balanced 4c/4t model that at first may seem like a good deal. ^^

That turned out to be jumping to the wrong conclusion, by the way. The actual issue is that some games run worse on Nvidia cards in DX12 compared to DX11. This was true six months ago, and Ryzen hasn't magically fixed it.

There may be some small performance gains to be made by Nvidia optimisation, but it's not going to be the silver bullet that makes Ryzen vastly superior to Intel in all gaming benchmarks, despite what some plonkers in /r/AMD believe.

Hey, but I wonder if they'd do something odd with R3 though...

It would be awesome if it had 24MB of L3 cache and overclocking capabilities to 5GHz... That would make a $129.00 chip compete with a 7700K for shits and giggles...

A lot of the explanations I've seen don't really present the issue very well, or people just post graphs without explaining what they represent.

It seems that, with AMD GPUs, Ryzen and Intel CPUs perform the same.

But with NVIDIA GPUs, Ryzen is performing much worse than Intel in some games.

Ignore that the RX480 runs the game at 180 FPS vs 160 FPS on the GTX 1060.

The issue is that the 1060 drops from 160 FPS to 110 FPS when you swap out the Intel CPU for a Ryzen CPU, while the RX480 performs the same on both.

That's a drop of 50 FPS, or roughly 31%, and points to the issue being the GPU driver, not the game.

I really hope it's something that NVIDIA just have to optimize their driver for, and not an architectural difference between the two causing this.
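As a quick sanity check on the numbers in this post, the relative drop can be worked out directly. This is only a minimal sketch using the approximate FPS figures quoted here (160 and 110 for the GTX 1060, 180 on both CPUs for the RX 480); the function name is made up for illustration.

```python
def percent_drop(before_fps: float, after_fps: float) -> float:
    """Relative performance drop, as a percentage of the starting value."""
    return (before_fps - after_fps) / before_fps * 100.0

# Approximate figures read off the chart discussed in the post.
gtx1060_drop = percent_drop(160, 110)  # Intel -> Ryzen on the GTX 1060
rx480_drop = percent_drop(180, 180)    # Intel -> Ryzen on the RX 480

print(f"GTX 1060: about {gtx1060_drop:.0f}% slower on Ryzen")
print(f"RX 480:   about {rx480_drop:.0f}% slower on Ryzen")
```

Going from 160 to 110 FPS is a loss of 50 FPS, which works out to roughly 31% of the original frame rate, while the RX 480 shows no drop at all.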

A reason for the Nvidia driver to crash in performance?

Architectural difference in what exactly?

Doesn't GTA V have some weird thing where it actually goes slower if you have more than 4 cores? I remember GamersNexus had a video on it.

So you were referring to the GPU hardware, saying that (for that DX9 game) the 480 is a better match for Ryzen and there's nothing Nvidia can do about that?

What architectural difference would explain the comparative results between the 480 and the 1060 in a DX9 game running on different CPUs?

No, if anything it is the driver being limited by single-thread performance (possibly combined with inter-CCX thread hopping). Which means there is clear room for driver optimization on Ryzen in the 1060's case. I guess we agreed all along then, thanks for the clarification.

I can't think of any architectural difference between the 480 and the 1060 which may cause this. Can you?

I meant their driver architecture/design rather than the GPUs. Basically, that it might not be a simple fix.

Ran into my first real problem with the 1700X today in Bayonetta:

- GTX 960, i5-2500K @ 4.5GHz, 1600MT/s DDR3 - 60 FPS

- GTX 1070, R7-1700X @ 3.9GHz, 2666MT/s DDR4 - 29 FPS

Overclocking the memory to 3596MT/s only boosted the minimum by 4-5 FPS. Same thing as I've seen in every other game so far.

Restricting the game to 4c/8t or 4c/4t did nothing to help.

That's really weird, there's no way that should happen. Even if it was completely single threaded it should have basically the same FPS. Would be nice to see more benchmarks of it.
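For anyone wanting to repeat the 4c/8t and 4c/4t test, core restriction is usually done with a CPU affinity bitmask. Here is a rough sketch of how such masks are built. It assumes (and this is an assumption, not something stated in the post) that logical processors are numbered so the two SMT threads of a core sit next to each other: 0 and 1 on core 0, 2 and 3 on core 1, and so on. The helper name is made up for illustration.

```python
def affinity_mask(logical_cpus):
    """Build a bitmask with one bit set per allowed logical processor."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu
    return mask

# ASSUMPTION: SMT siblings are adjacent (0/1 = core 0, 2/3 = core 1, ...).
four_cores_eight_threads = affinity_mask(range(8))       # logical CPUs 0-7
four_cores_four_threads = affinity_mask(range(0, 8, 2))  # one thread per core

print(hex(four_cores_eight_threads))  # 0xff
print(hex(four_cores_four_threads))   # 0x55
```

On Windows, a hex mask like this can be passed to `start /affinity`, or the same logical processors can be ticked by hand in Task Manager. The post doesn't say which method was used.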

Bayonetta has a weird issue with AMD CPUs in general, so it might need a patch before it works perfectly on Ryzen.

Is that a general issue or just specific scenes? Here is a video from the start of the game using an R5 1600 + RX 470 which, aside from cutscenes and loading screens, doesn't appear to drop below 59 fps. So possibly an Nvidia driver-specific issue again?

This is exactly what I was hoping would not happen with Ryzen.

The game was locked to 60 until I reached that area.

I find it more likely that they just won't bother, as running games at 720p, or even DX9 games at 1080p at >100 fps, is hardly the focus of their optimization efforts.

https://m.hardocp.com/article/2017/0...0%2C3285887976

The power usage is quite high after overclocking (their results are Ryzen at 4GHz and Intel at 5GHz). If you are an enthusiast gamer who needs to keep their monthly bills as low as possible, you might want to go with Intel.

If you don't think power usage matters: moving my girlfriend from a final revision slim PS3 to a Blu-ray player, for her F.R.I.E.N.D.S.-on-in-the-background habit, dropped our electric bill by at least $15.

If you've got P-state overclocking, you can drop the clocks and power usage down to 2.2GHz or lower.

Right. But I'm saying if you game a few hours every day and plan to do it all maxed out, you might want to go with Intel quad cores, due to the power savings and, therefore, a lower monthly bill.

https://m.hardocp.com/article/2017/...400_cpu_review/?_e_pi_=7,PAGE_ID10,3285887976

Link doesn't work.

Yeah. I'm talking about the enthusiast gamer who wants to run their games with their hardware maxed.

It's been known from the beginning that overclocking disables all the different forms of turbo on Ryzen, essentially putting all cores into a permanent turbo mode. This, combined with binning for any model below the top model, lets power usage rise steeply. If you are conscious of power consumption, it's better to stay at stock speed (and pick the model accordingly), as the single-core, all-core and XFR turbo can work their magic at far lower power consumption. Be sure to also use AMD's Ryzen-specific Balanced power plan, which enables power saving while (unlike the standard Balanced profile) mostly reaching the performance of the standard High Performance profile.

Link updated. I must have fudged it while editing that post.

Ryzen will pull significantly less power while gaming than in that test. You really can't reach that conclusion from a gaming perspective.

Well, these don't show power usage after overclocking. I guess wait until probably next week, when HardOCP posts their gaming-specific article.

But based on the power scaling they showed with HandBrake, I bet we see a similar separation while gaming, albeit with lower overall power usage from both companies, due to the lower average CPU load while gaming.

That gamer probably won't mind a few extra dollars a year for electricity...

I'm talking per month. There are a lot of people who can make splurge purchases, but need monthly costs as low as possible.

https://m.hardocp.com/article/2017/04/11/amd_ryzen_5_1600_1400_cpu_review/

* We are religious about keeping lights off and unplugging electronics when not in use, and we only heat rooms as needed.

https://m.hardocp.com/article/2017/...400_cpu_review/?_e_pi_=7,PAGE_ID10,3285887976

That link isn't working for me, but I assume you're referring to this:

The PS3 Slim seems to draw 75W for Blu-ray playback, so that doesn't surprise me.

Yeah I saw these benchmarks and was confused - what is going on here?

Just taking these at a surface level as they're not directly similar tasks.

BCDEdit /deletevalue useplatformclock

I think the 1600 is the best if you are fine with OCing it a little bit. Otherwise, either get the 1600X and run it stock, or the 1500X if you wanna save some $$.

I realize the Blu-ray player/PS3 Slim vs. CPU power comparison is very loose.

I was simply trying to point out that Intel seems to use a lot less power when overclocked to the max than Ryzen OC'd to the max. And hey, that can actually make a difference in your monthly bill.

But for a little more specifics:

I have seen two PS3 Slim power usage charts. Both compared it to a Samsung BD-S3600, which is officially rated for 30W operation. And those two comparisons said it used about 20W for Blu-ray playback.

I have a Sony BDP-S390. It is rated for 11W during operation.

In my case, F.R.I.E.N.D.S. was 720p H.264 rips, running off the Slim's internal hard drive. PS3 power usage when reading H.264 rips is an unknown. It's gotta be at least as much as Blu-ray playback.

My BDP-S360 was pulling the same files off a compact 3TB USB hard drive, which only requires the power provided over USB to operate.
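To put rough numbers on the monthly-bill argument, here is a back-of-the-envelope sketch. The 75W and 11W figures are the ones quoted in this thread; the hours per day and the price per kWh are placeholder assumptions, so swap in your own.

```python
def monthly_cost(watts: float, hours_per_day: float,
                 price_per_kwh: float, days: int = 30) -> float:
    """Electricity cost per month for a device drawing `watts` while running."""
    kwh = watts / 1000.0 * hours_per_day * days
    return kwh * price_per_kwh

# Figures from the thread: PS3 Slim ~75 W (one estimate), BDP-S390 rated 11 W.
# ASSUMPTIONS: running 24 hours a day, at a hypothetical 0.12 per kWh.
HOURS_PER_DAY = 24
PRICE_PER_KWH = 0.12

ps3 = monthly_cost(75, HOURS_PER_DAY, PRICE_PER_KWH)
player = monthly_cost(11, HOURS_PER_DAY, PRICE_PER_KWH)
savings = ps3 - player

print(f"PS3: {ps3:.2f}, player: {player:.2f}, saved per month: {savings:.2f}")
```

The same function works for a CPU comparison: plug in the measured wattage gap between the two overclocked systems and the hours you actually game each day.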

Will AMD support AM4 for the next gen CPU or are they going to make a new platform for it?

Same platform.

Sweet, makes it easier getting a 1600X or 1800X and upgrading it next year.

Source btw - http://m.neogaf.com/showthread.php?t=1332216