The Cockatrice

I'm retarded?

Just get a PC my guy. Problem solved.

Stop asking an AI what to respond. You're putting yourself in evidence.

You need bigger CUs for bigger workloads like advanced shaders and RT, and smaller CUs for post-processing effects and geometry processing. The fact that it saves power is an added bonus.

West City latest tech presented from Dr. Gero right here.

Why does he wink like a lizard?

On the subject: the HD generation ditched out-of-order execution because it was not cost effective. The better you make a chip, the more it is going to cost you in yields. Although some people are chanting victory because MS is going to smash prices with 900 €/$ hardware that should actually cost 1,000 €/$, this is a market of people raising an eyebrow every time something crosses the 299 price tag, ready with their keyboards already on fire. If, and only if, the advantages outweigh the space they take up in silicon, like with AI cores for machine learning, it's implemented. If not:

Out with it!

Strix Point's Zen 5c has Zen 4's 256-bit SIMD layout.

AMD ZEN 6 Hybrid Concept for PS6:

8 "Worker Cores":

Zen 6c ● 512KB L2 Per Core ● 128-Bit SIMD ● SMT

7C/14T @ 4.0GHz for GAME

1C/1T Disabled for Yield

2 "Showrunner Cores":

Zen 6 ● 1MB L2 Per Core ● 128-Bit SIMD + AVX256 & AVX512-S ● SMT

2C/2T @ 4.8GHz for GAME

2 "System Cores":

Zen 6lp ● 256KB L2 Per Core ● 128-Bit SIMD ● SMT

2C/4T @ 3.0GHz for OS

Unified 12 Core CCX ● SmartShift ● No On-Die L3

48MB CPU L3 via 3D V-Cache (Low-Yield Generic AMD 64MB Die)
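A quick sanity check of the numbers in the concept above, as a minimal sketch. The `Cluster` type and `totals` helper are made up for illustration; the core counts, thread counts and L2 sizes are copied straight from the list, with the disabled worker core counted as physical but not active.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    name: str
    physical: int   # cores present on the die
    active: int     # cores left enabled after yield harvesting
    threads: int    # hardware threads exposed by the active cores
    l2_kb: int      # L2 per core, in KB
    role: str       # "GAME" or "OS"


# Figures taken from the concept list; nothing here is measured data.
CLUSTERS = [
    Cluster("Worker (Zen 6c)", physical=8, active=7, threads=14, l2_kb=512, role="GAME"),
    Cluster("Showrunner (Zen 6)", physical=2, active=2, threads=2, l2_kb=1024, role="GAME"),
    Cluster("System (Zen 6lp)", physical=2, active=2, threads=4, l2_kb=256, role="OS"),
]


def totals(clusters):
    """Sum cores, threads and on-die L2 across all clusters."""
    return {
        "physical_cores": sum(c.physical for c in clusters),
        "active_cores": sum(c.active for c in clusters),
        "threads": sum(c.threads for c in clusters),
        "game_threads": sum(c.threads for c in clusters if c.role == "GAME"),
        "os_threads": sum(c.threads for c in clusters if c.role == "OS"),
        "l2_total_kb": sum(c.physical * c.l2_kb for c in clusters),
    }


if __name__ == "__main__":
    for key, value in totals(CLUSTERS).items():
        print(f"{key}: {value}")
```

The physical core count comes out to 12, which lines up with the "Unified 12 Core CCX" line, and the game side gets 16 of the 20 hardware threads.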

---

Even well-threaded games tend to use 1-2 main saturated threads that handle the bulk of the game logic, then delegate more parallelizable tasks out to the other cores that rarely get close to full utilisation.

With consoles focused on efficiency, and taking the above as a given, it may make sense to have only a limited number of full cores with SMT removed, crankable clocks, more cache and extended instruction logic which "run the show" (some AVX capability could also make any prospective PS3 BC more viable), then have a bunch of smaller compact cores with less cache, reduced instruction logic, SMT and lower clock capabilities that are fundamentally geared towards being "workers", handling tasks which can be more easily chopped up. In addition, use Zen low-power cores for the OS. Finally, given you'd wanna get the die as small as possible, drop L3 off the die entirely and use the low-yield cast-offs from AMD's mass-produced, generic V-Cache dies instead.

---

No chatgpt used in this post..

..unless you think it's a shit idea, then let's say I did; and offload the blame.

Well. That's my point. Silicon is measured to the atom: since you're going to sell 100 million+ of these things, every dollar saved makes a massive difference. Consoles are not a boutique market.

Someone ran games on the AMD BC-250 under Linux. It's a cut-down PS5 die with 6 CPU cores and 24 GPU cores, made for use in crypto mining.

If the community manages to fix the current driver issues, it could potentially be an entry-level gaming PC for tech-savvy people, since it can be found for only $50. After an overclock, the iGPU is only slightly behind RX 6600 performance. CPU performance is close to a Ryzen 5 2600X.

www.techpowerup.com

Six-core Zen 2 performance from a defective PS5 APU is close to Ryzen 5 2600x (six-core Zen 1.5 with quad 128-bit FPU pipelines for each CPU core).

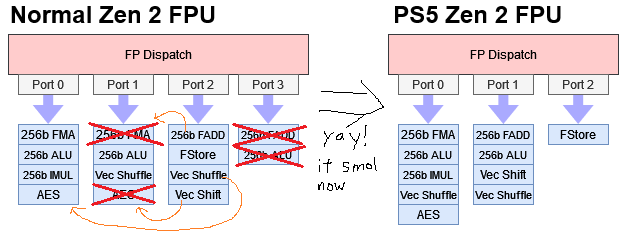

PS5 Zen 2's 256-bit AVX2 throughput is similar to desktop Zen 1.x.

This is a misrepresentation of the two GPUs at best. It's like when people compare PS4 to XB1 and equate them to a 40% gap (because of the compute delta), when the gap was consistently much larger than that, and most of it came on the back of the XB1 GPU having a host of other limitations the PS4 didn't.

It's why the shitty NVidia PS3 GPU got its ass handed to it by the XB360 GPU, because the former had specific pixel and vertex shaders, whereas the latter boasted AMD's latest unified shader architecture.

I came up with this while being infected with the lawless one's unclean spirit. I made a bet with deepenigma that either Xbox would crawl out of their grave or the PC I was building wouldn't work. It's still too early to tell with Xbox, but my PC's BIOS wouldn't prioritize the Windows 11 installation drive over the SSD, and when I loaded the installer from the boot menu it said my specs were incompatible. I have Crucial 32 GB DDR5, a WD Black SN850X 2TB, a PNY RTX 5070 12GB and an Intel Core i7 14700K. Now the monitor isn't responding. I'm not dumb, I've studied 5 manifold spaces at the graduate level.

Asymmetrical CPU cores and GPU Compute Units, combined with an intelligent task scheduler, offer significant benefits for video game consoles by optimizing performance, power efficiency, and responsiveness. In this architecture, high-performance cores handle demanding tasks like physics calculations and AI, while smaller efficiency cores manage background operations such as audio processing or system services without wasting power. Similarly, GPU CUs can be designed with varying capabilities, some optimized for complex shading and others for simpler rendering or post-processing. A smart scheduler dynamically assigns workloads based on the nature and priority of each task, ensuring that resources are used efficiently. This enables smoother frame rates, reduced latency, and improved thermal performance, all while extending hardware lifespan and supporting more immersive and complex game worlds without requiring developers to micromanage hardware resources.

Yes, I used ChatGPT, but it's my idea.

Big CUs for big workloads, small CUs for small workloads. Task Scheduler; Machine Learning distributes workloads automatically. All of this means a more optimized machine.

Operating systems are already going to have a task scheduler to prioritize and schedule threads accordingly, even those on video game consoles. It doesn't take machine learning to do that. That's been happening ever since multi-threading became a thing decades ago.
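To put a rough shape on the "it doesn't take machine learning" point, here is a toy sketch of priority-based placement onto big and small cores using nothing but a priority queue. It is not how any real console OS scheduler works; the `Task` type, the `heavy` hint, the `schedule` function and the core names are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    priority: int                        # lower number = more urgent
    name: str = field(compare=False)
    heavy: bool = field(compare=False)   # hypothetical hint: prefers a big core


def schedule(tasks, big_cores, small_cores):
    """Place tasks in priority order; heavy tasks prefer big cores,
    light tasks prefer small cores, each falling back to the other pool."""
    queue = list(tasks)
    heapq.heapify(queue)
    free = {"big": list(big_cores), "small": list(small_cores)}
    placement = {}
    while queue:
        task = heapq.heappop(queue)
        preferred, fallback = ("big", "small") if task.heavy else ("small", "big")
        pool = free[preferred] or free[fallback]
        if not pool:
            break  # no free cores left this tick; remaining tasks wait
        placement[task.name] = pool.pop(0)
    return placement


if __name__ == "__main__":
    tasks = [
        Task(2, "audio", heavy=False),
        Task(0, "game_logic", heavy=True),
        Task(1, "physics", heavy=True),
        Task(3, "os_service", heavy=False),
    ]
    print(schedule(tasks, ["big0"], ["small0", "small1", "small2"]))
```

With one big core available, the most urgent heavy task takes it and the next heavy task spills over to a small core, which is the kind of trade-off a plain rule-based scheduler can already make.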

You should have used ChatGPT to make it readable too.

It's better to have a big core running 2 tasks than 2 smaller ones running the same 2 tasks.

Did you call for me?

Why do that when they can bring the cell back… except it's the super cell

My bet is that the next consoles will use AMD's "c" cores.

They are more compact, use less die space and are more power efficient, sacrificing a bit of clock speed.

No nonsense like small and big cores combined.

Yep seems the logical way, I hope they have decent cache though as that does seem to make a decent difference in gaming.

Usually, the "C" core variants sacrifice L3 cache, going to around half of what a normal core would have.

Ah, didn't realise it was that severe of a cut. It makes sense to optimise die space in a console, but losing cache does have a performance impact. PS5/XSX have cache-reduced Zen 2 cores and you can see it runs worse with the lower cache.