Thrashing the PCI-e bus? Why would ray tracing traverse the PCI-e bus? That sounds like a pretty big bottleneck. Do you mean RT would slow down the decompression?

That's not really what I meant. What I meant was that enabling RT increases VRAM requirements, which can push you over your VRAM capacity. If you have an 8GB GPU and your game needs 7GB, you're probably going to be fine. But if you then enable RT and it adds, say, 1.5GB, you are now 0.5GB over budget. That excess 0.5GB then gets stored in system RAM (what Windows reports as 'Shared GPU memory') and thrashes back and forth across the PCIe bus every frame. It's a fair bit more complex in reality, but that's the gist of it.

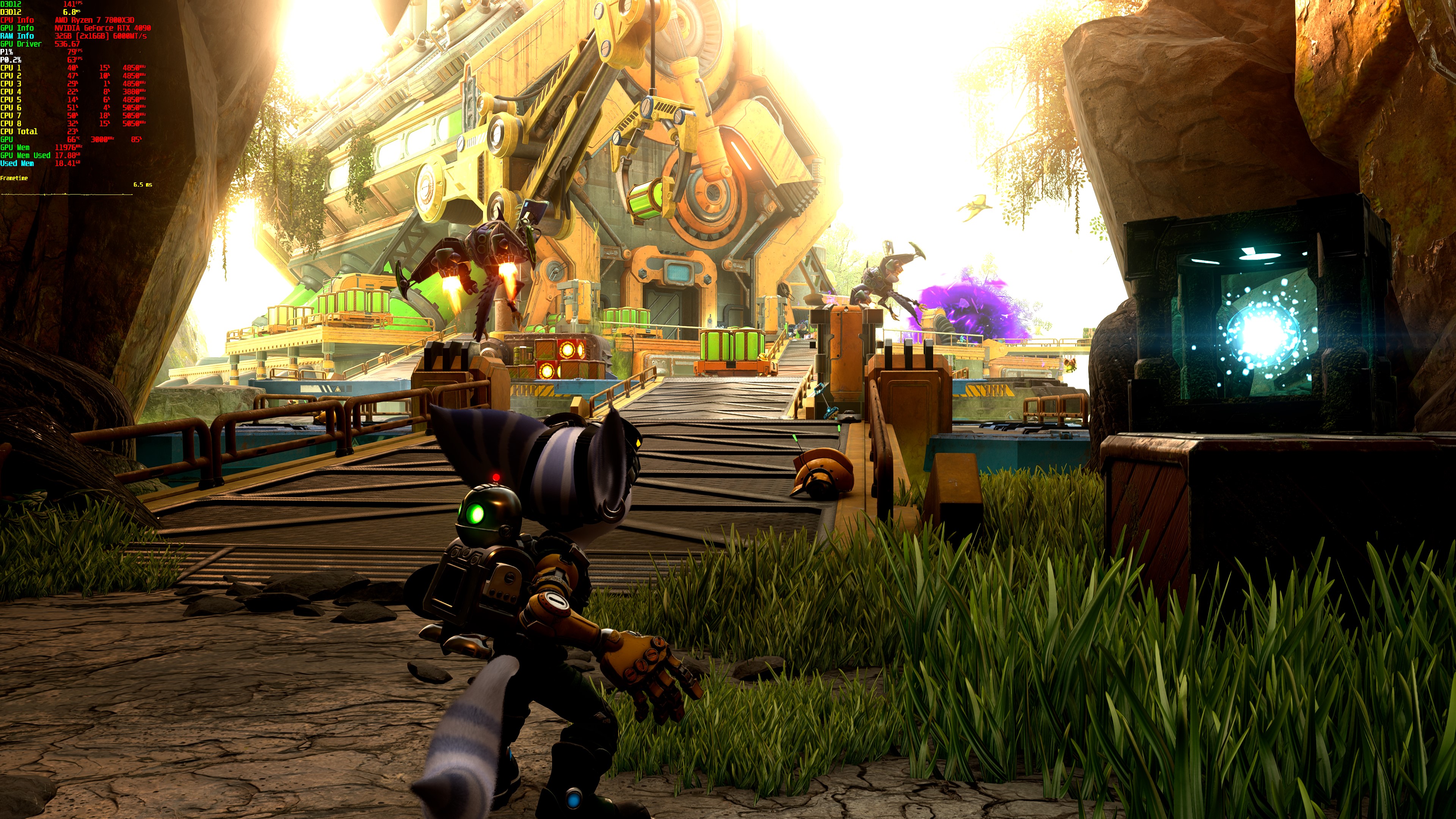
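To put the 8GB card / 7GB game / 1.5GB RT example into numbers, here is a minimal Python sketch. The `fraction_per_frame` parameter is a hypothetical knob (how much of the spilled data gets fetched each frame); real drivers page far more selectively, so this only illustrates the scale of the problem, not actual driver behaviour.

```python
def vram_overflow_gb(capacity_gb: float, base_gb: float, rt_extra_gb: float) -> float:
    """GB that no longer fit in VRAM and would spill into shared system memory."""
    return max(0.0, base_gb + rt_extra_gb - capacity_gb)


def spill_traffic_gb_per_s(overflow_gb: float, fraction_per_frame: float, fps: float) -> float:
    """Rough PCIe traffic if `fraction_per_frame` of the spilled data is fetched each frame.

    `fraction_per_frame` is hypothetical; it is not something a driver exposes.
    """
    return overflow_gb * fraction_per_frame * fps


if __name__ == "__main__":
    print(vram_overflow_gb(8.0, 7.0, 0.0))  # RT off: everything fits → 0.0
    print(vram_overflow_gb(8.0, 7.0, 1.5))  # RT on: spills over → 0.5
    # Toy worst case: the whole 0.5GB is touched every frame at 60 fps
    print(spill_traffic_gb_per_s(0.5, 1.0, 60))  # → 30.0
```

That toy worst case (30GB/s) would exceed what a PCIe 3.0 x16 link can carry in one direction (roughly 16GB/s), which is why even a small overflow can hurt much more than its size suggests.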

This has little to do with DirectStorage decompression, which uses only a trivial amount of PCIe bandwidth by comparison. A PCIe 3.0 x16 link offers roughly 16GB/s in each direction, and it's fairly common for well-performing games to transfer 3-4GB per second. R&C adds only around a gigabyte per second on top of that at most, and much less on average.
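The headroom argument can be checked with back-of-the-envelope arithmetic. This sketch assumes a PCIe 3.0 x16 link (8 GT/s per lane, 128b/130b line encoding) and takes the upper ends of the figures above (4GB/s of normal streaming plus about 1GB/s from R&C). It ignores protocol overhead beyond line encoding, so real usable throughput is somewhat lower than the computed link rate.

```python
def pcie_gb_per_s(gt_per_s: float, lanes: int, payload_bits: int, line_bits: int) -> float:
    """Per-direction PCIe bandwidth in GB/s (1 GB = 1e9 bytes), after line encoding only."""
    # Each transfer moves one bit per lane; scale by encoding efficiency, then bits -> bytes.
    return gt_per_s * lanes * payload_bits / line_bits / 8


link = pcie_gb_per_s(8.0, 16, 128, 130)  # PCIe 3.0 x16
typical_streaming = 4.0  # upper end of the 3-4GB/s figure for well-performing games
rc_extra_peak = 1.0      # ~1GB/s peak attributed to R&C's DirectStorage traffic
utilization = (typical_streaming + rc_extra_peak) / link

print(f"link ~ {link:.2f} GB/s, peak utilization ~ {utilization:.0%}")
# link ~ 15.75 GB/s, peak utilization ~ 32%
```

Even using the peak figures, roughly two thirds of the link stays free, which is consistent with DirectStorage not being the bottleneck here.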

How did you perform a read test on PS5? Where does that number come from?

This was already answered by hlm666 (thanks for that), but here's how it looks if you're curious. I just plug my PS5 NVMe drive into a USB adapter and connect it to my PC.

Here's my (then new) drive before running the test, and here it is after. That differential in Total Host Reads basically tells you all you need to know. And now that we can compare with a PC release, we know that these numbers are accurate.
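For anyone who wants to reproduce the differential from raw SMART data: on NVMe drives, the figure disk utilities show as Total Host Reads is typically derived from the SMART/Health log's Data Units Read counter, where one data unit is 1,000 × 512 bytes. The sketch below assumes that convention; the snapshot values are hypothetical, not real measurements.

```python
# NVMe SMART/Health log: one "Data Unit" = 1,000 units of 512 bytes = 512,000 bytes.
BYTES_PER_DATA_UNIT = 512 * 1000


def gb_read_between(before_units: int, after_units: int) -> float:
    """GB (1e9 bytes) read by the host between two Data Units Read snapshots."""
    if after_units < before_units:
        raise ValueError("counter went backwards; snapshots are probably swapped")
    return (after_units - before_units) * BYTES_PER_DATA_UNIT / 1e9


# Hypothetical before/after snapshots taken around a test run
print(gb_read_between(100_000, 150_000))  # → 25.6
```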

Are you running 32GB of system RAM?

Yes, in a 9900k + 2080 Ti system. What did you have in mind?

using nvme it seems it doesn't use much of it

I've seen some confusion online about this, but the reason is that BypassIO makes DirectStorage reads invisible to Windows' disk counters. Disk counters still work perfectly fine for anything that doesn't use DirectStorage, but it's currently not possible to measure DS performance on drives that use BypassIO.

There are ways to disable BypassIO through the Windows registry, but whether you do is up to you. I still haven't found the slightest performance benefit from BypassIO, but that could change at some point.