PaintTinJr


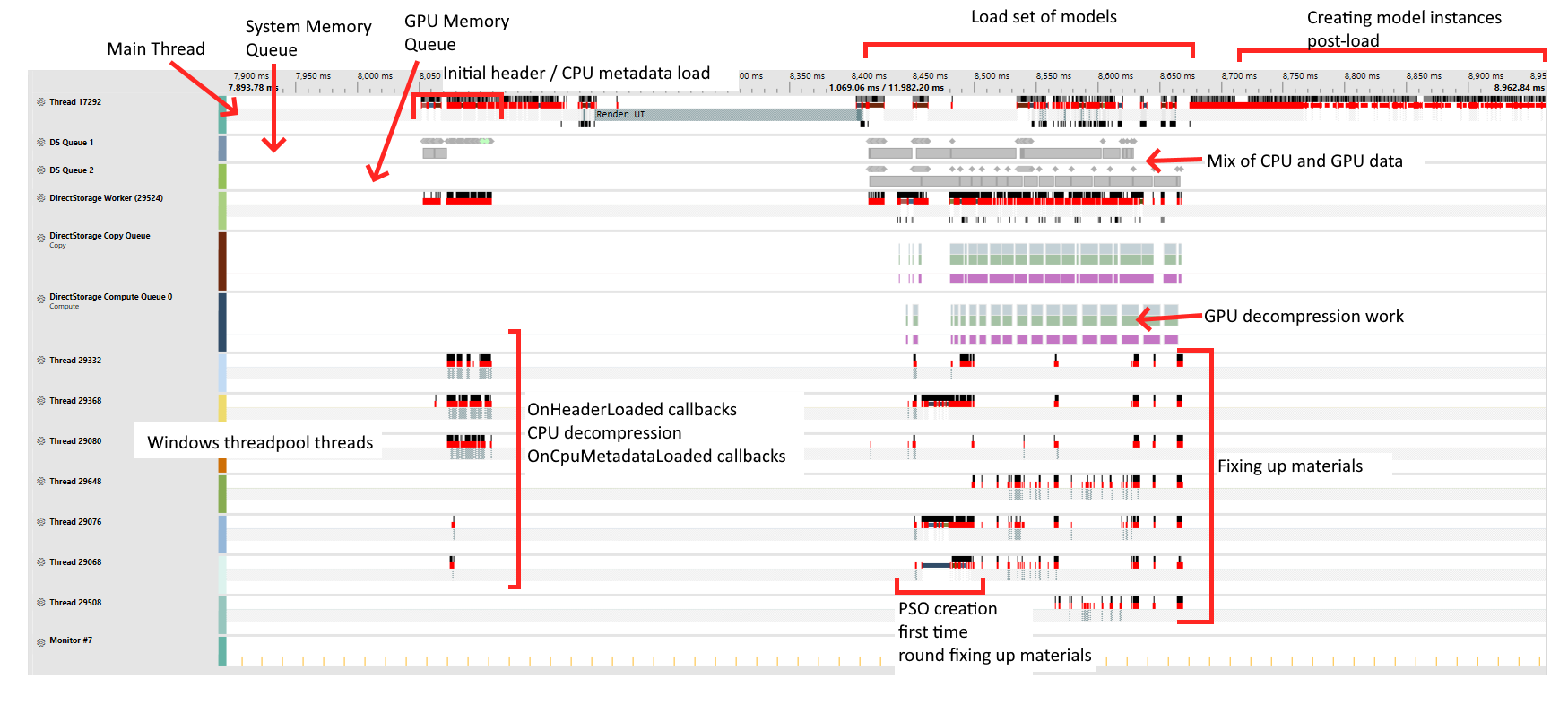

No, I think that with ReBAR (Resizable BAR) being a win for games as a recent necessity, and with games like Star Citizen having train sections where asset streaming tanks the frame rate while the CPU and GPU remain half-stressed, the result is going to be either an unwanted hardware solution, like APUs, or a software compromise. In the latter case, texture streaming would be capped and supplemented with procedural textures, and we'd have to live with the reality that a £500 console yields superior texture quality to a PC. Because texturing is so much of the signal on screen, the overall image quality (IQ) of consoles couldn't be surpassed.

Are you saying PC developers will have to figure out a way to take advantage of all this cutting-edge hardware because just telling people to buy more expensive stuff isn't gonna work anymore? Imagine that.

I'm glad. SSDs have been a thing for over a decade and it took fucking consoles to give PC developers a kick in the ass to start using what they've had for a long time.

Personally, I think the APU hardware solution will win out to keep the rainbow-chasing hardware market going.
