FingerBang

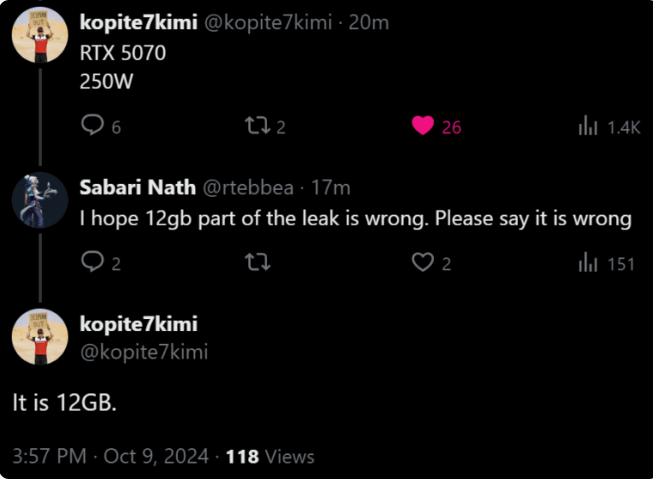

This is ridiculous. Ada was a scam generation: they basically raised the price of each tier by giving it the name of the tier above. It would have been even worse if they had gone through with naming what is now the 4070 Ti a 4080.

If this leak is true, Nvidia will do the same thing again, making players lose another tier by selling the xx70 Ti as a 5080.

Compared to Ampere, you are basically paying inflated xx80 prices for an xx70 (non-Ti). I don't even know what is going to happen on the low end, but I imagine you'll once again gain only a single tier of performance per generation. A 5060 will perform like a 4070, a 5070 like a 4080, and so on. It sounds good, but it's half of what we used to get.

The 5090 sounds like a beast but I really don't know why I would upgrade from a 4090 for gaming.