
Hey, guest user. Hope you're enjoying NeoGAF! Have you considered registering for an account? Come join us and add your take to the daily discourse.


Intel 11th Gen Processor (Rocket Lake-S) Architecture Detailed. Ice Lake Core. Double Digit IPC Gains. PCIe Gen4. Xe iGPU. Launches next Quarter.

llien

Banned

Intel 14nm is still top tier gaming

TPU tested with 2080Ti and got outlier results.

Most reviewers put Zen 3 firmly ahead.

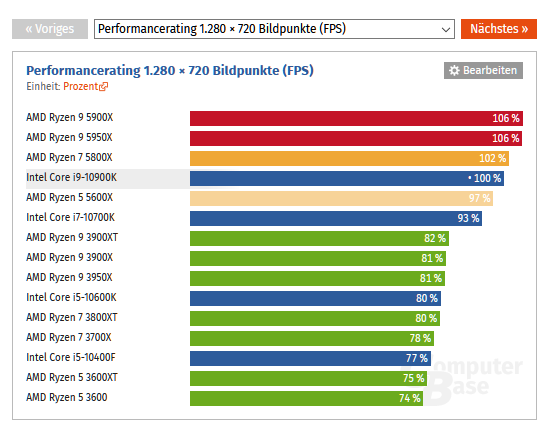

E.g. computerbase (tested on 3080):



Leonidas

AMD's Dogma: ARyzen (No Intel inside)

Indeed. More of a wash than I expected

Belief in marketing slides strikes yet again.

I don't disagree with that, but your current CPU didn't exactly stop the 9900k & 10900k getting "crowned", in your eyes, did it?

Those were crowned because they had higher OOB frequencies (despite my 5.0 GHz OC) and more cores, which a small number of games benefit from over the 8700K.

Hard to crown another CPU since it's very game dependent. Intel topped basically all gaming charts previously, now AMD wins some and Intel wins some.

We now have a $300 6-core that, in gaming, frequently beats or matches a 10900K that is meant to retail for ~$500. That is very exciting to see.

Rocket Lake will fire back in a few months and we'll have actual sustained competition in the market again

If you want to look at it through that slant, sure, but you could also say that 3 years after the 8700K we have a CPU that is a little bit cheaper and a little bit faster.

Very exciting? I don't see it. A 5600 non X at $220 would have been exciting though...

TPU tested with 2080Ti and got outlier results.

Most reviewers put Zen 3 firmly ahead.

E.g. computerbase (tested on 3080):

720p


Rentahamster

Rodent Whores

720p

*cough*

Genuine question since I don't understand: why are those benchmarks at 720p?

Edit: curiously enough, according to those benchmarks, in AC:O the Ryzen 2 parts seem to be at the top, while with Zen 1 both Ryzen and Threadripper seemed to struggle.

Stolen from Reddit

At the risk of a lot of downvotes, I figured I would try to explain why testing CPUs at low resolutions is important. I am seeing a lot of misinformation, and a lot of rationalizing about why we shouldn't test at low resolutions (gamers play at high resolutions and so on), but honestly, when you test CPUs, you actually want to test the CPU. Including benchmarks at high resolutions can be nice to give people who game at higher resolutions an idea of how their system performs, but all in all, to actually TEST the CPU, you want to test at low resolutions.

Benchmarking tests are a lot like scientific trials. You want to link an independent variable to a dependent variable. In this case you want to test how your CPU performs in games. What you don't want interfering with your trials is other variables. You want to CONTROL the environment, and ensure that the only factor making a difference is the thing you are testing. EVERYTHING ELSE should be controlled for, so that it does not interfere with the result.

When you test at low resolutions, you are doing just that. When you test at high resolutions, you are introducing a third variable into the mix: the graphics card. At high resolutions, the GPU may be taking on more of the load, and it could be the primary variable affecting results, NOT the CPU. This gives the viewer the impression that the CPUs perform more equally than they would in a more controlled setting.

You want to test at max settings (since settings CAN impact CPU performance), while minimizing the stress on the GPU by testing at as low a resolution as possible. I'm seeing joking comments asking: what, are tests only valid if you test at 640x480 or something? Well, let me put it this way. If you're running a GTX 1080 at 640x480, you're not going to be GPU bound in most games. You would be testing the CPU, which leads to more valid results, since that's what we are testing.

This isn't to say that high-res benchmarks can't be useful. It's good to test at higher resolutions to give people a baseline expectation of how the CPU will perform in a real-world environment, but testing at low resolutions is more important, because it tells you how the CPU performs under stress, and this could give an indication of its long-term performance.

Depending on the resolution/game that's a mostly GPU bound scenario.

For example, if you're gaming at 1440p or 4K, the slowest 6-core Ryzen 1600 ($100) will be within single-digit percentages of the fastest 16-core Zen 2 Ryzen 3950X ($750). Not a good way to test the CPU...
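The GPU-bound argument above can be illustrated with a simplified bottleneck model: the frame rate you observe is capped by whichever of the CPU or GPU is slower at producing frames. This is a toy model, not how any reviewer computes results, and every frame rate in it is a made-up placeholder rather than a benchmark figure. In real games individual frames alternate between being CPU-bound and GPU-bound, which is why measured gaps at high resolution shrink to single digits rather than to exactly zero.

```python
# Toy bottleneck model. All frame rates are hypothetical placeholders,
# not measurements from any review.

def observed_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """The frame rate you see is capped by the slower component."""
    return min(cpu_limit_fps, gpu_limit_fps)


def percent_gap(fast: float, slow: float) -> float:
    """How much faster `fast` is than `slow`, in percent."""
    return (fast - slow) / slow * 100.0


# Hypothetical CPU-limited frame rates (resolution-independent in this model).
cpus = {"slower 6-core": 120.0, "faster 16-core": 160.0}

# Hypothetical GPU-limited frame rates at each resolution.
gpu_limit = {"720p": 300.0, "4K": 90.0}

for res, gpu_fps in gpu_limit.items():
    slow = observed_fps(cpus["slower 6-core"], gpu_fps)
    fast = observed_fps(cpus["faster 16-core"], gpu_fps)
    print(f"{res}: {slow:.0f} vs {fast:.0f} fps, gap {percent_gap(fast, slow):.1f}%")
```

With these placeholder numbers the 720p run shows a 33.3% gap between the two CPUs, while at 4K both are capped at the GPU's 90 fps and the gap disappears, even though the CPUs themselves did not change.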

Try to be consistent please.

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

*cough*

Try to be consistent please.

I never advocated 720p testing, I simply posted the reason why it was done in the leaked review I linked.

I am amused that you would waste time digging up such an old post of mine and that's the best you came up with though


Insane Metal

Member

There's nothing left for Intel right now. Maybe next year they can be back to the fight.

Epic Sax CEO

Banned

onunnuno

Neo Member

I don't understand, I really don't. What does he gain by ignoring facts?

Soulblighter31

Banned

What is wrong with these outlets? Seriously? 640x480 resolution and low details? Who does that result and testing help? Jesus christ

JohnnyFootball

GerAlt-Right. Ciriously.

I never advocated 720p testing, I simply posted the reason why it was done in the leaked review I linked.

I am amused that you would waste time digging up such an old post of mine and that's the best you came up with though

Soltype

Member

I had an all ATI/AMD PC back then, and it might happen again. Seriously, I did not think I'd see the day that AMD would destroy Intel like they are. It feels like the early 2000s.

Epic Sax CEO

Banned

What is wrong with these outlets? Seriously? 640x480 resolution and low details? Who does that result and testing help? Jesus christ

This is normal.

This isn't the only resolution and preset.

It's just to see how far the CPU alone can push the game.

Soulblighter31

Banned

This is normal.

This isn't the only resolution and preset.

It's just to see how far the CPU alone can push the game.

It's not normal at all. They're using ancient CPU testing methodology from 20 years ago. Like, testing at 640x480 and low details at the end of 2020 is just busy work that helps no one. I haven't seen other sites go to such extremes as AnandTech.

CormacMcNulty

Banned

What is wrong with these outlets? Seriously? 640x480 resolution and low details? Who does that result and testing help? Jesus christ

Eliminating the GPU bottleneck completely and trying to push the CPU as much as possible. It's a CPU benchmark; if you don't push the CPU into extreme bottleneck territory, you won't be able to compare them clearly.

CormacMcNulty

Banned

It's not normal at all. They're using ancient CPU testing methodology from 20 years ago. Like, testing at 640x480 and low details at the end of 2020 is just busy work that helps no one. I haven't seen other sites go to such extremes as AnandTech.

Agree with you too. 1080p should be the baseline, although lower resolutions make comparison easier.

Intel 14nm is still top tier gaming

I have some news...

ZywyPL

Banned

Eliminating the GPU bottleneck completely and trying to push the CPU as much as possible. It's a CPU benchmark; if you don't push the CPU into extreme bottleneck territory, you won't be able to compare them clearly.

This got me thinking: why not just get rid of the GPU altogether and render entirely on the CPU, just like in the good old days?

This got me thinking: why not just get rid of the GPU altogether and render entirely on the CPU, just like in the good old days?

AMD Ryzen Threadripper 3990X can run Crysis without a GPU

It's not really playable, but it is a huge milestone for CPUs.

www.windowscentral.com

Manstructiclops

Banned

Relegated to clown posting dead threads where the only activity is people taking the piss out of him.

Brutal.

Rentahamster

Rodent Whores

I never advocated 720p testing, I simply posted the reason why it was done in the leaked review I linked.

Irrelevant. You laughed at it here, and justified it there, hence the inconsistency.

I am amused that you would waste time digging up such an old post of mine and that's the best you came up with though

It took me all of 30 seconds to find your quote. I remembered that you had a hot take about 720p from the last Ryzen review thread, and lo and behold, it was post number 12.

blastprocessor

The Amiga Brotherhood

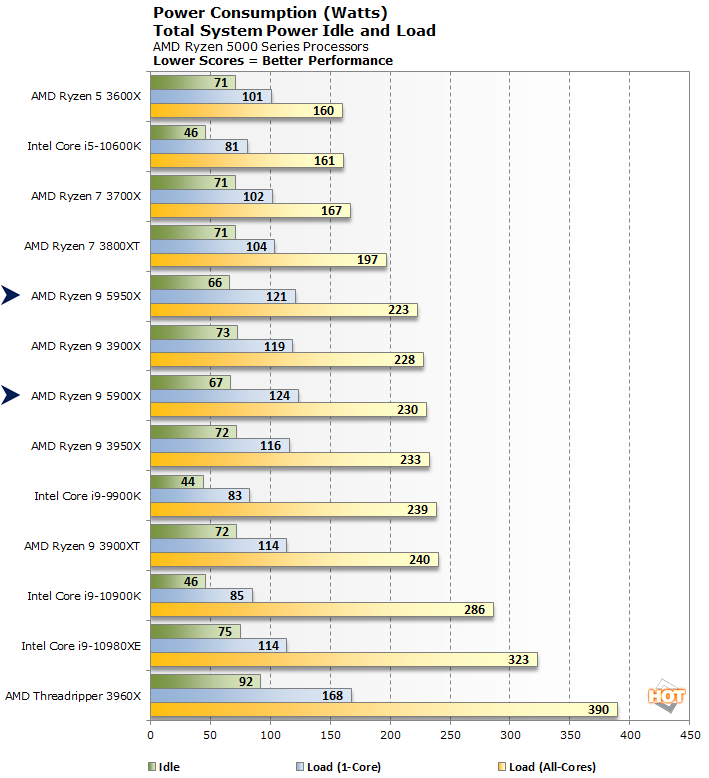

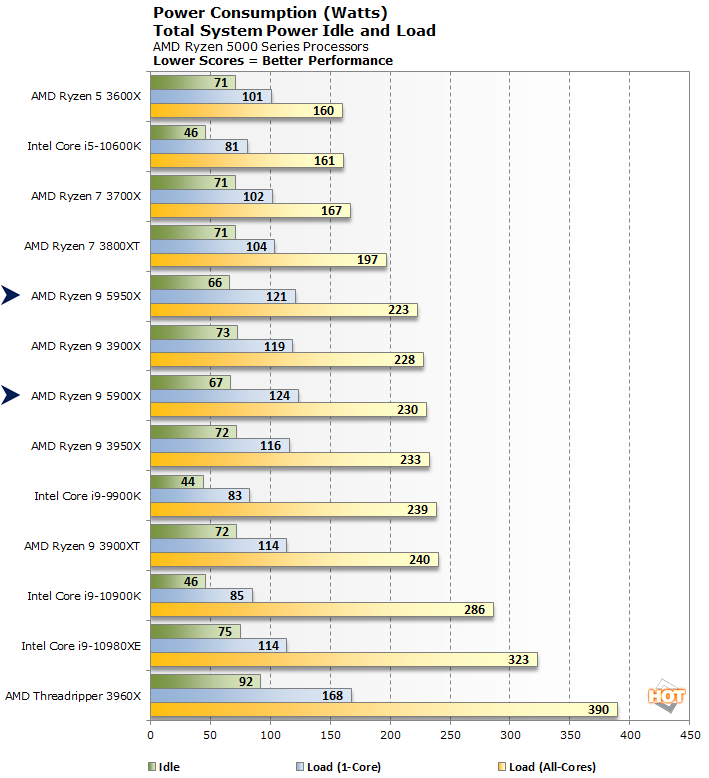

Holy shit, that's terrible. Couple that with an RTX 3080 and you're talking ridiculous power consumption.


thelastword

Banned

I remember a time when 720p gaming tests and low power draw were all the rage, the most important things in gaming... Do they still matter, may I ask?

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

Holy shit, that's terrible. Couple that with an RTX 3080 and you're talking ridiculous power consumption.

Intel power draw in gaming is similar to AMD's 7nm/12nm hybrid; it will (most likely) again land somewhere in the middle of this graph.


onunnuno

Neo Member

Intel power draw in gaming is similar to AMD's 7nm/12nm hybrid; it will (most likely) again land somewhere in the middle of this graph.

It's not:

And you know why, right? But just in case you don't:

Using an old game with poor core scaling will underuse the CPU, so TPU is not measuring and comparing the power usage of the CPU; it's comparing the power usage of the GPU. Why would they do that? Who knows, it's just amateur stuff.

Intel 14nm is still top tier gaming

So, it looks like TPU was using 2 x 8 GB DIMMs, so they were running into the issue reported by Gamers Nexus.

AMD Ryzen 9 3900X Review

The flagship of AMD's new Ryzen 3000 lineup is the Ryzen 9 3900X, which is a 12-core, 24-thread monster. Never before have we seen such power on a desktop platform. Priced at $500, this processor is very strong competition for Intel's Core i9-9900, which only has eight cores.


GymWolf

Member

I'm waiting until late 2021 for my next upgrade. It will be Ryzen 6000 or Intel 12th gen on 10nm with DDR5 RAM

Does the new RAM type come out in late 2021? Don't you wanna wait for the prices to be more stable? I'm kinda in your situation, but I don't know if I can wait until late 2021... the waiting game never really ends.


BluRayHiDef

Banned

Will it support DDR5?

duhmetree

Member

Does the new RAM type come out in late 2021? Don't you wanna wait for the prices to be more stable? I'm kinda in your situation, but I don't know if I can wait until late 2021... the waiting game never really ends.

DDR5 mass production in 2021.

Yeah I get it. I told myself I'd wait... Intel 10nm is attractive, since it will be first to market with DDR5. Problem is, AMD will be right behind them with their Zen4 5nm DDR5 launch in 2022

I'm probably going to upgrade my 8700K soon to match my 3080; I upgrade every 3 years or so. Problem is, I know Intel will probably leapfrog AMD in gaming with the 11th gen... but I'd have to upgrade my power supply. 750W would be cutting it close.

GymWolf

Member

DDR5 mass production in 2021.

Yeah I get it. I told myself I'd wait... Intel 10nm is attractive, since it will be first to market with DDR5. Problem is, AMD will be right behind them with their Zen4 5nm DDR5 launch in 2022

I'm probably going to upgrade my 8700K soon to match my 3080; I upgrade every 3 years or so. Problem is, I know Intel will probably leapfrog AMD in gaming with the 11th gen... but I'd have to upgrade my power supply. 750W would be cutting it close.

Wait, what? Is that true even with zero overclock?!

duhmetree

Member

Wait, what? Is that true even with zero overclock?!

No, that's with overclocking. An efficient 750W PSU should be enough... but it's getting uncomfortably close.

If I'm going to have all of these power-hungry toys... I shouldn't skimp on the PSU.
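The PSU worry above boils down to simple addition: estimate the peak draw of each component, sum it, and compare the total with the PSU rating. A minimal sketch, assuming hypothetical wattages for an overclocked CPU and a high-end GPU (none of these numbers come from the thread or from any review) and an assumed ~80% load target used as a rule of thumb, not a specification:

```python
# All wattages are hypothetical placeholders, not measured figures.
# The 80% load target is an assumed rule of thumb, not a standard.

def psu_load_fraction(component_watts: dict[str, float], psu_rating_watts: float) -> float:
    """Fraction of the PSU's rated output used by the summed peak draw."""
    return sum(component_watts.values()) / psu_rating_watts


# Hypothetical peak draws for an overclocked CPU + high-end GPU build.
build = {
    "cpu_overclocked": 250.0,
    "gpu": 320.0,
    "board_ram_storage_fans": 75.0,
}

PSU_RATING = 750.0      # the 750W unit mentioned in the thread
HEADROOM_TARGET = 0.80  # assumed comfort threshold

load = psu_load_fraction(build, PSU_RATING)
print(f"Estimated peak draw: {sum(build.values()):.0f} W ({load:.0%} of {PSU_RATING:.0f} W)")
print("Within headroom target" if load <= HEADROOM_TARGET else "Cutting it close")
```

With these placeholder numbers it reports 645 W, about 86% of a 750 W unit, and flags it as cutting it close; swap in figures from reviews of your actual parts to get a meaningful answer.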

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

It's not:

My chart is about gaming power consumption, yours is not. Gaming is still mostly lightly threaded. Your non-gaming chart even shows Intel 14nm with the advantage at light threads.

So, it looks like TPU were using 2 x 8 GB DIMMs, so were running into the issue reported by Gamers Nexus.

AMD Ryzen 9 3900X Review

The flagship of AMD's new Ryzen 3000 lineup is the Ryzen 9 3900X, which is a 12-core, 24-thread monster. Never before have we seen such power on a desktop platform. Priced at $500, this processor is very strong competition for Intel's Core i9-9900, which only has eight cores.

www.techpowerup.com

2 DIMM setup is what most people use, there is no issue with TPU numbers. It reflects the performance most people will see. For most people to get GN/HUB numbers they'll have to buy another set of DIMMs, which adds another $60-$150 to the already increased costs of those CPUs.


2 DIMM setup is what most people use, there is no issue with TPU numbers. It reflects the performance most people will see. For most people to get GN/HUB numbers they'll have to buy another set of DIMMs, which adds another $60-$150 to the already increased costs of those CPUs.

The point is not that TPU's numbers are wrong; just that they don't reflect the "top tier" performance that will be delivered when:

A.) Someone upgrades to 32 GB via an extra pair of DIMMs after 16 GB becomes insufficient.

B.) Someone builds a PC using 2 x 16/32 GB DIMMs.

My memory is fuzzy, but I seem to recall someone on these forums being concerned about which vendor could deliver the absolute best gaming performance.

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

The point is not that TPU's numbers are wrong; just that they don't reflect the "top tier" performance that will be delivered when:

A.) Someone upgrades to 32 GB via an extra pair of DIMMs after 16 GB becomes insufficient.

B.) Someone builds a PC using 2 x 16/32 GB DIMMs.

True, but when the average consumer, who has 2x8, looks at GN and HUB, they'll probably unknowingly believe they will get the same performance.

I hate to say it, but most guys on the internet looking at graphs in a review are clueless, and tons of people will be, and already were, misled by Zen3 gaming performance, since most people don't look at or understand the nuances of the review test setup; they just look at the graphs.

The average person will get TPU numbers while thinking they are getting GN/HUB numbers because they are clueless about certain details...


Rentahamster

Rodent Whores

True, but when the average consumer, who has 2x8, looks at GN and HUB, they'll probably unknowingly believe they will get the same performance.

I hate to say it, but most guys on the internet looking at graphs in a review are clueless, and tons of people will be, and already were, misled by Zen3 gaming performance, since most people don't look at or understand the nuances of the review test setup; they just look at the graphs.

The average person will get TPU numbers while thinking they are getting GN/HUB numbers because they are clueless about certain details...

lmfao, are you serious? What happened to GN being a "trustworthy source"?

Yes, I only post trustworthy sources, you'll not see me posting videos from fanboys on YouTube making false claims like I've seen on this forum numerous times before...

GN, for example, found some games being ~30% faster with the 9900K. The text review isn't up yet, but I timestamped the part where he discusses gaming performance; in some places it's not even close, and Intel has a huge lead in some instances.

I wonder what happened?

2 DIMM setup is what most people use, there is no issue with TPU numbers. It reflects the performance most people will see. For most people to get GN/HUB numbers they'll have to buy another set of DIMMs, which adds another $60-$150 to the already increased costs of those CPUs.

You used to be concerned about the hands-down best gaming performance overall, not the best gaming performance with artificial handicaps.


Leonidas

AMD's Dogma: ARyzen (No Intel inside)

lmfao, are you serious? What happened to GN being a "trustworthy source"?

Where did I say they weren't trustworthy? All I'm saying is their test setup doesn't reflect most users.

You take this stuff way too seriously...

You used to be concerned about the hands down best gaming performance overall, not best gaming performance with artificial handicaps.

I still am. I think it's also important that places reveal the performance that people will typically receive.

I was never deceived by any of the Zen3 numbers but many people are, including you who jumped to conclusions about TPU numbers...

BattleScar

Member

What is wrong with these outlets? Seriously? 640x480 resolution and low details? Who does that result and testing help? Jesus christ

They're completely eliminating the GPU bottleneck, so they can test the CPU performance.

Rentahamster

Rodent Whores

Where did I say they weren't trustworthy? All I'm saying is their test setup doesn't reflect most users.

True, but when the average consumer, who has 2x8, looks at GN and HUB, they'll probably unknowingly believe they will get the same performance.

I hate to say it, but most guys on the internet looking at graphs in a review are clueless, and tons of people will be, and already were, misled by Zen3 gaming performance, since most people don't look at or understand the nuances of the review test setup; they just look at the graphs.

You said tons of people will be misled by Zen3 gaming performance. GN lays out all their testing methodology very transparently. They are not misleading at all.

You take this stuff way too seriously...

Try a mirror, please.

I still am.

If you are, then you wouldn't have posted this:

Intel 14nm is still top tier gaming

I think it's also important that places reveal the performance that people will typically receive.

They did. But you want to look at an outlier for some reason.

I was never deceived by any of the Zen3 numbers but many people are, including you who jumped to conclusions about TPU numbers...

I think you're confusing me with someone else.

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

You said tons of people will be misled by Zen3 gaming performance. GN lays out all their testing methodology very transparently. They are not misleading at all.

People are dumb; 95% of the PC threads I see on the internet have some misinformation or idiocy in them, including this one, where people (including you) jump to conclusions without having all the info.

They did. But you want to look at an outlier for some reason.

Not in the review they didn't. They posted it after the fact.


Rentahamster

Rodent Whores

People are dumb; 95% of the PC threads I see on the internet have some misinformation or idiocy in them, including this one, where people (including you) jump to conclusions without having all the info.

Way to miss the point

Not in the review they didn't. They posted it after the fact.

onunnuno

Neo Member

My chart is about gaming power consumption, yours is not. Gaming is still mostly lightly threaded. Your non-gaming chart even shows Intel 14nm with the advantage at light threads.

For Witcher 3? Yes, it's lightly threaded. Tomb Raider, for instance, is not. Using an engine that is over 5 years old to show "power consumption" does not represent gaming power consumption. It's just another example of them trying to skew the results to one side. You're smarter than this.

Leonidas

AMD's Dogma: ARyzen (No Intel inside)

For Witcher 3? Yes, it's lightly threaded. Tomb Raider, for instance, is not. Using an engine that is over 5 years old to show "power consumption" does not represent gaming power consumption. It's just another example of them trying to skew the results to one side. You're smarter than this.

No game fully utilizes a CPU the way your "Cinebench like" full load only graph in this thread shows.

Until you post real gaming numbers like I did, you're just speculating. Posting Cinebench power consumption figures is misleading. I'm the only one who posted gaming power consumption numbers on this page.