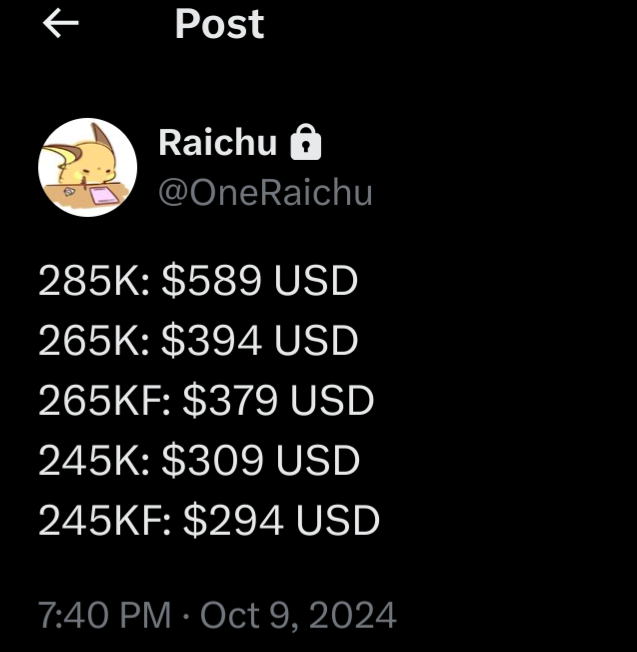

Intel admits Core Ultra 9 285K will be slower than i9-14900K in gaming

Dirk Benedict

Gold Member

Buggy Loop

Gold Member

He was a king in the digital war,

NeoGAF forums, Intel to the core,

Never would admit to the silicon flaws,

13th-gen degradation? He'd ignore the cause.

"Voltage spikes? Nah, it's running great!"

Leonidas stands tall, won't debate,

Even as frames start to fall behind,

Brainwashed by Intel, he won't mind!

V-cache smokes him, but he doesn't agree,

"That's just AMD marketing trickery!"

14th-gen's just playing the game,

Core Ultra's a joke, just chasing fame,

Slower in gaming, it's losing the fight,

But Leonidas says, "Intel's alright!"

Leonidas, Leonidas, Leonidas!

(Leonidas, Leonidas, Leonidas!)

Leonidas, Leonidas, oh, oh, oh Leonidas,

Come and rock me, Leonidas!

"Just wait, Intel's got tricks up its sleeve,"

But AMD's 3D stacking makes him grieve,

Numerous reports showing Intel lost its way,

But Leonidas defends them every day!

Pat's running round, asking for government cheese,

Begging senators to help him, saying "please oh please!"

Pat can't compete with AMD or Arm,

But Leonidas says, "What's the harm?"

"Voltage spikes? Stop crying, you fool!"

14th-gen's a dumpster fire, but to him it's "cool."

"Who needs stability when you've got the lore?"

"Intel's for us fanboys, we don't care about more!"

Leonidas, Leonidas, Leonidas!

(Leonidas, Leonidas, Leonidas!)

Leonidas, Leonidas, oh, oh, oh Leonidas,

Come and rock me, Leonidas!

"Silicon degradation? That's just noise!"

He'll defend Intel, like they're one of his boys,

Core Ultra 9's got nothing to prove,

Leonidas gets down to Team Blue's groove,

Leonidas, Leonidas, Leonidas!

(Leonidas, Leonidas, Leonidas!)

Leonidas, Leonidas, oh, oh, oh Leonidas,

Come and rock me, Leonidas!

Intel forever, no need to concede,

Even if Gelsinger's down on his knees!

Holy shit

Can anyone AI these lyrics into a sea shanty? I would die

They're basically playing with yogurt, it's basically the same.

After the major screw-up that was the 13th and 14th gen, Intel really needed to make sure people understood these CPUs were different.

Mobilemofo

Member

Intel: Because less is more.

Hawk The Slayer

Member

An awesome trade-off, since it's fewer watts and most likely runs cooler. Even a 5% drop would be alright. Especially good for me because I game at 1080p, so I don't need the strongest CPU and would definitely rather have my CPU last long with fewer watts and less heat.

JohnnyFootball

GerAlt-Right. Ciriously.

Nvidia's GPUs actually have outstanding power consumption. That was what shocked me about Ada Lovelace, since everyone was expecting them to go balls to the wall with power usage.

I mean, I always took it into account when thinking about PC; it's just that on this forum nobody likes to mention it... at all. Unless it's for the sake of ripping on Intel, and even then people are more pissed about their heat than their power. But Nvidia and AMD's dedicated GPUs have the exact same power consumption issues and nobody ever bats an eye. Even when the new 12VHPWR connectors are frying GPUs and turning them into bricks, nobody gives a shit. Those connectors wouldn't be needed if Nvidia buckled the fuck up and optimized their GPUs' power consumption.

Chiggs

Gold Member

An awesome trade-off, since it's fewer watts and most likely runs cooler. Even a 5% drop would be alright. Especially good for me because I game at 1080p, so I don't need the strongest CPU and would definitely rather have my CPU last long with fewer watts and less heat.

If only there was a power efficient, less toasty CPU alternative that came out prior to this new Intel CPU. If only.


The Mad Draklor

Member

Oxidation issues killed his 13600K.

I don't know if that is actually true, but it wouldn't be surprising in the slightest.

YCoCg

Member

Sadly, he was laid off in the last batch of layoffs at Intel.

asdasdasdbb

Member

Nvidia's GPUs actually have outstanding power consumption. That was what shocked me about Ada Lovelace, since everyone was expecting them to go balls to the wall with power usage.

That's more because pretty much every die is memory bandwidth bottlenecked. So there was no real point in cranking up the clock speeds.

Blackwell (well the models with GDDR7 anyway) might change that.

nowhat

Member


ap_puff

Banned

Yeah... no chance this beats X3D. I wonder if CPU gains are a thing of the past.

StereoVsn

Gold Member

It's good to see better power and temperature numbers. Still not amazing, but much better than the previous two gens.

Hopefully Intel can iterate on this further. Unfortunately, it's not enough from a gaming perspective to get people away from the 7800X3D or the upcoming 9000X3D CPUs. The 9000 series should be even more power efficient.


Celcius

°Temp. member

What is Final Fantasy 14: Golden Legacy?

I've played all the expansions and never heard golden legacy mentioned anywhere...

cinnamonandgravy

Member

Just be so GPU-bound it hurts and you're good.

SolidQ

Member

What is Final Fantasy 14: Golden Legacy?

It's machine translation.

ResilientBanana

Member

Not going to lie, the AMD camp is the same way. From what I see in a lot of benches, both Intel and AMD are within 1% of each other, which is a fairly tight lead. But honestly, heat has become an issue for both manufacturers.

That's the greatest exchange in this thread... and really tells the tale.

In other news...

Keep it up, cool guys. Nobody will catch on to your brilliant strategy.

- Intel fans two months ago: "Zen 5 is shit and won't take the gaming crown."

- Intel fans today: "Gee whiz, just look at that power and efficiency of the 285k! Who cares if it's not the fastest?!"

Shadowstar39

Gold Member

GeForce 6 era. Back in the good ole days, around when Elder Scrolls 4 came out, or was it Half-Life 2/Far Cry/Doom 3? All I know is I had gone from a Voodoo5 -> GeForce3 Ti to a 5700 that sucked ass. That 6800 card was a return to form for Nvidia graphics. Then the 8800 series blew everything away. Good times.

...and how much of that was due to Windows 11 sucking ass and later getting patched?

(I have no horse in this race, the last dedicated GPU I had was a 6600 GT, and yes, that was NVidia at the time, just here for the popcorn. Please continue.)

Yeah the fanboyism is strong with some for sure.

nowhat

Member

Neverwinter Nights may not have been the best game ever, but it was among the few that had a native Linux port at the time. I played the fuck out of that one.

GeForce 6 era. Back in the good ole days, around when Elder Scrolls 4 came out, or was it Half-Life 2/Far Cry/Doom 3? All I know is I had gone from a Voodoo5 -> GeForce3 Ti to a 5700 that sucked ass. That 6800 card was a return to form for Nvidia graphics. Then the 8800 series blew everything away. Good times.

Yeah the fanboyism is strong with some for sure.

(Yes, I'm "that guy" when it comes to Linux. So no PC gaming for me any more. Oh wait. I have a Steam Deck. But it's running Arch. I guess I'm still "that guy".)

(And yes, I've been running Arch for over two decades now. I think it's within the TOS that I should mention this.)

ResilientBanana

Member

Why? How does this affect you?

There should be a law where GPUs can't pass 300W and CPUs can't go higher than 100W.

500W vs 400W, my god.

Soodanim

Member

I hate that every article with a company saying something is now "admits" as if they were pressured and a scandalous secret slipped out. No, they just fucking said it you clickbaity cuntflaps, stop being so dramatic you teenage girls.

Not you winjer, I'm not shooting the messenger.

Also, I'd happily trade 3 fps for 80W less. That's a no-brainer, and you'd have to have no brain to care.

Thebonehead

Gold Member

Shadowstar39

Gold Member

Cowards need to unleash the Pentium V!

Seriously, who is in charge over there? AMD is just as guilty.

For desktop chips:

We went from IBM XT/8088 -> 286 -> 386 -> (80)486 -> Pentium -> Pentium Pro -> Pentium 2 -> Pentium 3/Celeron -> Pentium 4 -> Core -> Core 2 Duo -> Core 2 Quad -> i3/i5/i7/i9 (for 15 or so years, with multiple numbers after that like 9750H or some crap).

AMD isn't any better. We went Am386 -> K5 -> K6 -> Athlon/Duron -> Athlon XP -> Athlon 64 -> Athlon 64 X2 -> Phenom -> Bulldozer (AMD FX) -> Zen series... and here, like Intel's chips, the names get confusing.

Zen 1 Ryzen 5 1600 -> Zen 2 Ryzen 7 3800X. Shit got confusing real quick with Zen 1, 2, 3 and Ryzen 3, 5, 7, 9 and Threadripper, then Ryzen 8000 -> 9000, then add 3D at the end. Shit gets confusing real quick.

At the next major change, one of these companies needs to cave and go back to a simple naming scheme. Call it Pentium 5 and Athlon 3 and call it a day!

Chiggs

Gold Member

Not going to lie, the AMD camp is the same way. From what I see in a lot of benches, both Intel and AMD are within 1% of each other, which is a fairly tight lead. But honestly, heat has become an issue for both manufacturers.

Going to have to disagree there, especially with the Windows update that improves Ryzen performance across the board.

AMD fanboys are more guilty of pretending their betrothed is some sort of crusader of light and justice, even when it's clear Dr. Su and friends are acting in their own best interest.

winjer

Member

Seriously, who is in charge over there? AMD is just as guilty.

For desktop chips:

We went from IBM XT/8088 -> 286 -> 386 -> (80)486 -> Pentium -> Pentium Pro -> Pentium 2 -> Pentium 3/Celeron -> Pentium 4 -> Core -> Core 2 Duo -> Core 2 Quad -> i3/i5/i7/i9 (for 15 or so years, with multiple numbers after that like 9750H or some crap).

AMD isn't any better. We went Am386 -> K5 -> K6 -> Athlon/Duron -> Athlon XP -> Athlon 64 -> Athlon 64 X2 -> Phenom -> Bulldozer (AMD FX) -> Zen series... and here, like Intel's chips, the names get confusing.

Zen 1 Ryzen 5 1600 -> Zen 2 Ryzen 7 3800X. Shit got confusing real quick with Zen 1, 2, 3 and Ryzen 3, 5, 7, 9 and Threadripper, then Ryzen 8000 -> 9000, then add 3D at the end. Shit gets confusing real quick.

At the next major change, one of these companies needs to cave and go back to a simple naming scheme. Call it Pentium 5 and Athlon 3 and call it a day!

Most of those names make sense if you know the internal logic.

Take the Pentium, for example. At the time, Intel wanted a unique brand for their CPUs, and the Greek prefix for 5 is "penta-". So instead of naming it the 586, they called it the Pentium. After that, they just added a new number with every major arch.

The name Celeron comes from the Latin for swift or fast, which the Celeron sometimes kinda was.

Then came the Pentium 4, which used the NetBurst architecture and was a significant failure, so Intel wanted a departure. The Core 2 Duo was Intel's first true dual-core desktop CPU. This is also when Intel stopped using clock speeds to differentiate their CPUs.

The Core generation was a fully new arch. Once again, Intel wanted a departure from the old names. At the time, the "i" branding was in vogue, mostly because of Apple. The i3, i5, i7 and i9 names actually make a lot of sense for slotting each CPU into its price range.

AMD started out just using the Intel nomenclature, because they were making Intel CPU clones for IBM. The K5, K6, etc. were just a continuation of the 486's numerology.

Athlon means competition, as in triathlon.

The Zen CPU numbering is very easy. With each new architecture, they release the CPUs first, and the next year they release the APUs.

So the CPUs get odd numbers and the APUs get even numbers.

Silver Wattle

Member


polybius80

Member

But but, that's impossible! The 14900K is far more K's than the 285K. Also, "Ultra 9" sounds like it's faster than "i9", which sounds like an Apple product.


ShaiKhulud1989

Gold Member

Those chips essentially solve nothing for Intel.

Efficiency is still miles behind ARM for casual laptops and mobile productivity. The leaked M4 is no slouch, and Qualcomm is getting surprisingly competent.

Power and gamer mindshare? Yeah, good luck restoring your reputation after that voltage fiasco without even a semblance of an RMA policy.

APU? We are not even touching that subject. Strix Point will be the default choice for portable gaming, and the PS5 Pro APU shows that you can get quite a lot out of even older Zen chips.

Strictly speaking, there is very little room for growth in the gaming CPU market (I still see almost zero reasons to upgrade my 5800X3D), and Intel has nothing to offer the fastest-growing segments.


bender

What time is it?

Neverwinter Nights may not have been the best game ever.

Maybe the biggest understatement ever posted on GAF.

marquimvfs

Member

Seems that Arrow Lake is (probably) the fastest gaming CPU architecture of 2024...

All hail King Leonidas.

Will be a disaster if this comes out and trades blows with 1.5-2 year old CPUs, like Zen 5.

Schmendrick

Banned

There you go https://suno.com/song/9cd6cf78-11e2-455e-90d4-a4adde04eceb

Holy shit

Can anyone AI these lyrics into a sea shanty? I would die

Low effort with no finetuning, but for this occasion that should be enough


winjer

Member

That makes sense, considering the previous gens had their ring bus killing itself.

Buggy Loop

Gold Member

There you go https://suno.com/song/9cd6cf78-11e2-455e-90d4-a4adde04eceb

Low effort with no finetuning, but for this occasion that should be enough

Holy shit, way better than I expected, AI is nuts.

Imagine if that could be embedded in the post.

Chiggs

Gold Member

Also I'd happily trade 3fps for 80w less, that's a no-brainer and you'd have to have no brain to care.

You've perfectly described a large segment of Intel supporters here on NeoGAF.

Buggy Loop

Gold Member

Also I'd happily trade 3fps for 80w less, that's a no-brainer and you'd have to have no brain to care.

So a slight undervolt of the previous gen?

Gen-on-gen, we should see bigger improvements than that. They sacrificed Hyper-Threading to gain better performance and a ton of silicon area, and that's the meager improvement we get? Going from their 10nm fabs to TSMC's N3P node?

Something doesn't add up.

marquimvfs

Member

That makes sense, considering the previous gens had their ring bus killing itself.

To my knowledge, the ring bus was killing itself because it shared the voltage rail with the cores, and the cores were asking for high voltage left and right. It was not because the ring was running at a higher clock and needed a higher voltage for that.

Skifi28

Member

Leonidas hasn't even posted in this thread and y'all are out here writing songs about him and everything.

We miss him.

ChuckeRearmed

Member

What the hell is with that naming? Is it a new line to replace the i-series or what?

I guess the main advantage is lower consumption. Though I wouldn't be surprised if we eventually get portable nuclear reactors at home.

