Besides Doom (2016), there were quite a few games in the TechPowerUp review where the 1080 Ti was 90%+ faster than the previous generation (980 Ti).

| Game | 980 Ti | 1080 Ti | Relative difference |
|---|---|---|---|
| Anno | 28 fps | 54 fps | 93% |
| Battlefield 1 | 36 fps | 69 fps | 91% |
| COD Infinite Warfare | 44 fps | 84 fps | 91% |
| Fallout 4 | 40 fps | 78 fps | 95% |
| GTA5 | 44 fps | 84 fps | 91% |
| Hitman | 33 fps | 70 fps | 112% |
| Mafia 3 | 21 fps | 42 fps | 100% |
| Rise of the Tomb Raider | 27 fps | 52 fps | 92% |
| The Witcher 3 | 31 fps | 59 fps | 90% |
| Total War: Warhammer | 22 fps | 42 fps | 90% |
| Watch Dogs 2 | 21 fps | 41 fps | 95% |

There were also quite a few games with an 80-89% relative difference. I think some games were CPU-limited as well.
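If you want to check the math yourself, here's a quick Python sketch. It assumes "relative difference" means how much faster the 1080 Ti is than the 980 Ti, i.e. (new fps / old fps - 1) × 100, and it uses the rounded fps values from the table. Because the inputs are rounded, a few results land one point away from the quoted percentages (Battlefield 1 comes out at 92%, Rise of the Tomb Raider at 93%, Total War: Warhammer at 91%), presumably because TechPowerUp worked from unrounded numbers.

```python
# Recompute "relative difference" (% faster) from the rounded fps values above.
# Assumption: relative difference = (new_fps / old_fps - 1) * 100.

def relative_difference(old_fps: float, new_fps: float) -> float:
    """Return how many percent faster new_fps is than old_fps."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps / old_fps - 1.0) * 100.0

# (980 Ti fps, 1080 Ti fps) as quoted in the post, rounded to whole fps
results = {
    "Anno": (28, 54),
    "Battlefield 1": (36, 69),
    "COD Infinite Warfare": (44, 84),
    "Fallout 4": (40, 78),
    "GTA5": (44, 84),
    "Hitman": (33, 70),
    "Mafia 3": (21, 42),
    "Rise of the Tomb Raider": (27, 52),
    "The Witcher 3": (31, 59),
    "Total War: Warhammer": (22, 42),
    "Watch Dogs 2": (21, 41),
}

for game, (old, new) in results.items():
    print(f"{game}: {relative_difference(old, new):.0f}% faster")
```

Note that "X% faster" isn't symmetric: a 100% uplift (Mafia 3) means the 980 Ti is 50% slower, not 100% slower.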

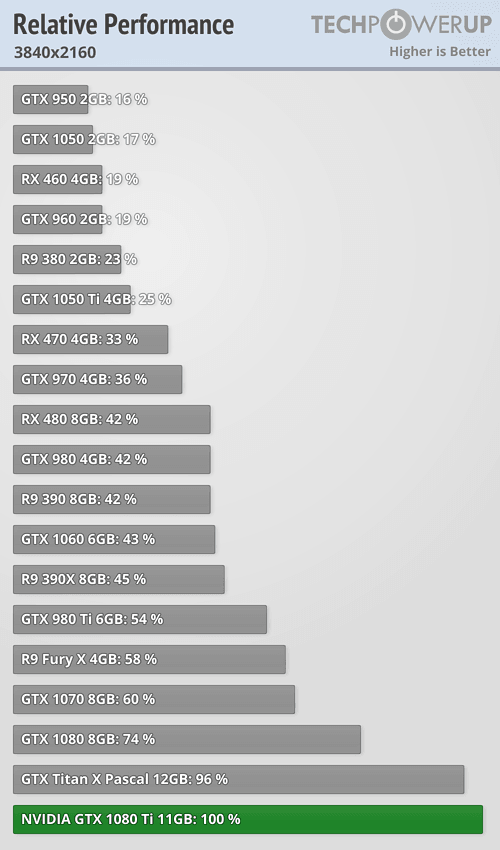

Across all 25 games tested, the 1080 Ti was 85% faster on average than the 980 Ti, so IDK where you got that 67%.

The RTX 4090 was only 46% faster on average than the 3090 Ti, and I haven't found a single raster game where the RTX 4090 is 90% faster than the previous generation, or even close to 80%. The RTX 4090 can be 2x faster, but only in RT or with Frame Generation.

I'm glad you mentioned the 2080 Ti. The RTX 2080 Ti was released in 2018, and it took about 6 years before a console (the PS5 Pro, released in 2024) could match its performance (the 2080 Ti is still faster, especially in RT, but not by much). How many years do you think it will take AMD to build an APU that can compete with the RTX 4090, let alone the RTX 5090?