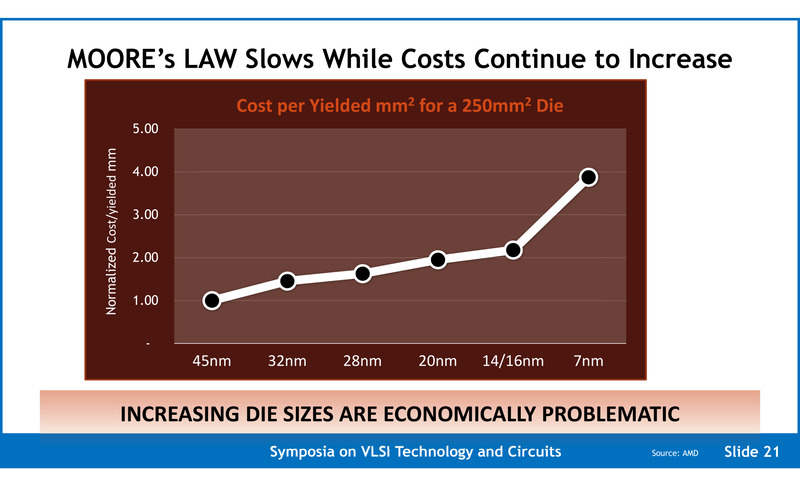

Just look at the PS4 Pro with its 320mm² die on a 16nm node. It was a smaller die, but the system cost more than the PS4 with its bigger die, because the cost per mm² went up with each smaller node.

Source?

PS4 Pro has a lower BoM cost (around $321, IIRC) compared to the OG PS4. The fact that it has half the number of DRAM chips drops the cost quite a bit. The OG PS4 shipped with 16 DRAM chips.

If anything, consoles tend to increase the DRAM budget, not decrease it (the XBOX 360 had only 4 GDDR3 chips on a 128-bit bus, same for the PS3's RSX).

OG PS4 and XB1 increased the DRAM bus to 256-bit and the number of DRAM chips (both for GDDR5 and DDR3) to 16.

Scorpio increased the bus to 384-bit. I don't think it's unrealistic to expect 24GB on a 384-bit bus for $499 (MSRP) consoles. 512-bit would be too much (heat-wise), so I don't expect it.
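
The chip-count arithmetic behind these bus widths is easy to sketch. A minimal Python example, assuming each GDDR5/GDDR6 chip drives 32 bits of the bus (16 bits each in clamshell mode) and using commonly reported data rates; the 14 Gbps GDDR6 configuration is hypothetical:

```python
# Hypothetical helper: GDDR bus/capacity/bandwidth arithmetic.
# Assumptions: one GDDR5/GDDR6 chip drives a 32-bit slice of the bus;
# in clamshell mode two chips share that slice (16 bits each).

def gddr_config(bus_width_bits, chip_density_gbit, data_rate_gbps, clamshell=False):
    """Return (chip_count, capacity_gb, bandwidth_gb_per_s)."""
    bits_per_chip = 16 if clamshell else 32
    chips = bus_width_bits // bits_per_chip
    capacity_gb = chips * chip_density_gbit / 8        # Gbit -> GB
    bandwidth = bus_width_bits * data_rate_gbps / 8    # per-pin Gbit/s -> GB/s
    return chips, capacity_gb, bandwidth


# Data rates are commonly reported figures, used here as assumptions.
configs = {
    "PS4 (GDDR5, clamshell)": (256, 4, 5.5, True),
    "PS4 Pro (GDDR5)": (256, 8, 6.8, False),
    "Xbox One X (GDDR5)": (384, 8, 6.8, False),
    "Hypothetical 384-bit GDDR6": (384, 16, 14.0, False),
}

for name, (bus, density, rate, clam) in configs.items():
    chips, cap, bw = gddr_config(bus, density, rate, clam)
    print(f"{name}: {chips} chips, {cap:.0f} GB, {bw:.1f} GB/s")
```

Under these assumptions, 24GB on a 384-bit bus is simply 12 × 2GB (16Gb) chips, the same chip count as Scorpio's 12GB.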

Sony didn't even put in a UHD Blu-ray drive because they wouldn't have been able to sell it for $400.

They did this because 1) movie streaming has taken over the market and 2) BDXL discs for PS4 games wouldn't make sense (they would break BC with OG consoles).

PS5 will have BDXL 100GB discs for next-gen games, first and foremost. Supporting physical UHD movies as well will be an added bonus.

Notice how XBOX is trying to paint a digital-only narrative (with Game Pass) and how contradictory it is to tout physical UHD movie discs at the same time... does it make sense marketing-wise?

It's the very definition of a confusing message.

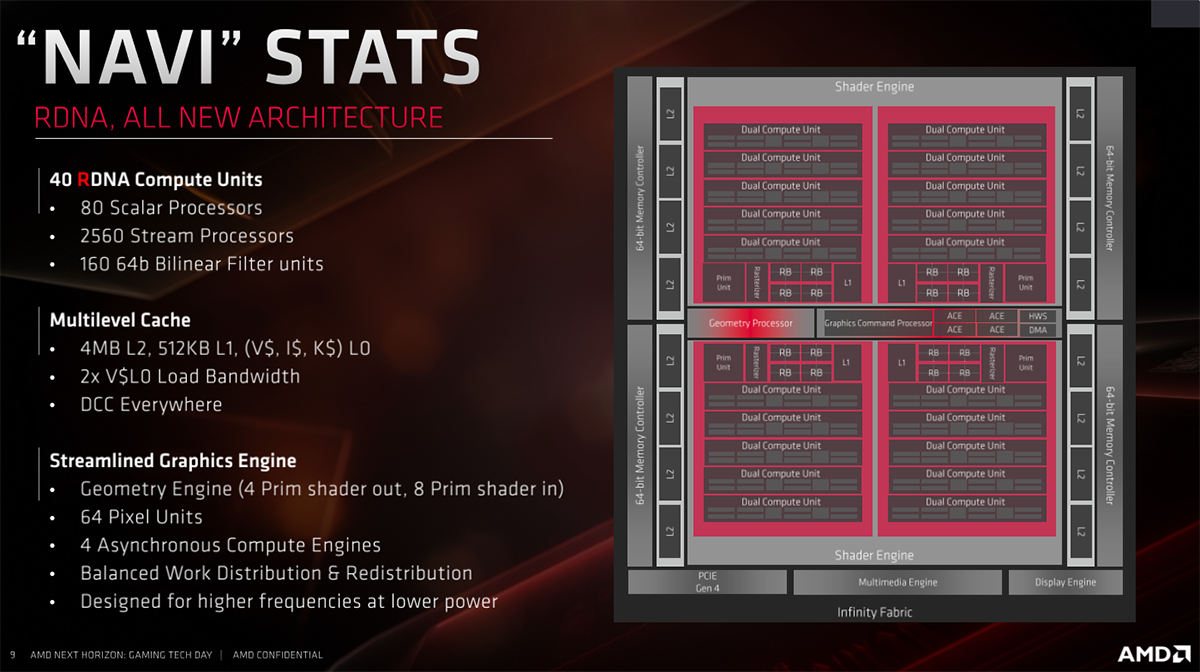

So how do we get a chip with Zen 2, a ~Navi 5700-class GPU, ray tracing, 16GB of GDDR6, UHD and a 1TB SSD for $400? We don't. And we won't.

Nobody in their right mind expects $399 consoles this time around. Personally, I don't even expect a $499 BoM cost. These consoles will have to be sold at a loss, that's a given.

I've clarified my position, since I don't want to be accused of "unrealistic expectations" (offering high-end hardware for $399 and making a profit at the same time, à la 2013).

Pretty sure we only got 8GB because memory prices took a big dive in the months leading up to the start of production.

Who's to say that GDDR6 chips won't take a similar dive? Samsung has already announced 2GB chips with 60% lower power consumption (newer lithography, lower costs).

I don't think we need 32GB (unless they go with a 2TB HDD + NAND cache); 24GB seems the best compromise for an SSD-only system, and it will provide a big fat bus for the GPU.

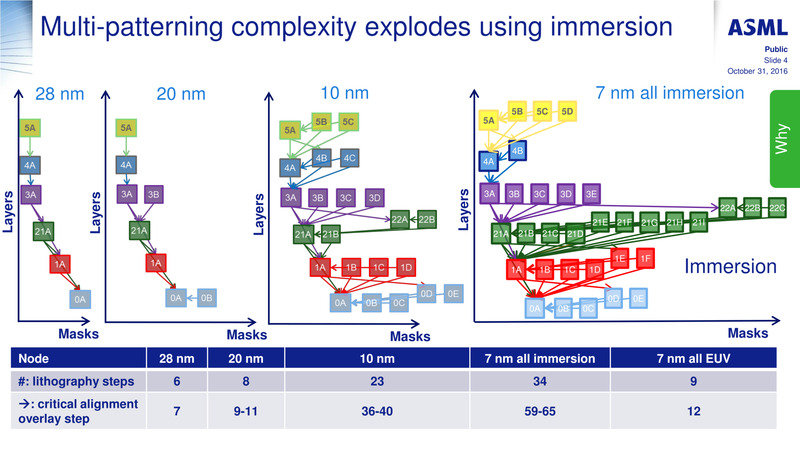

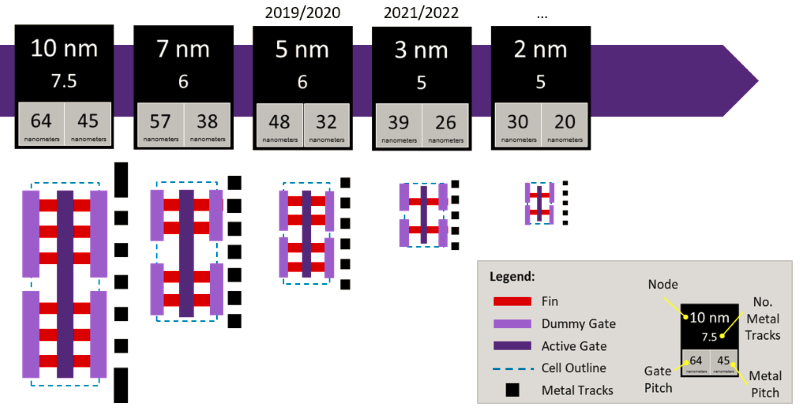

I really don't think that is about yields. Yields always start poor(ish) and then improve. That article is clearly describing a new phenomenon (part of the end of Moore's Law).

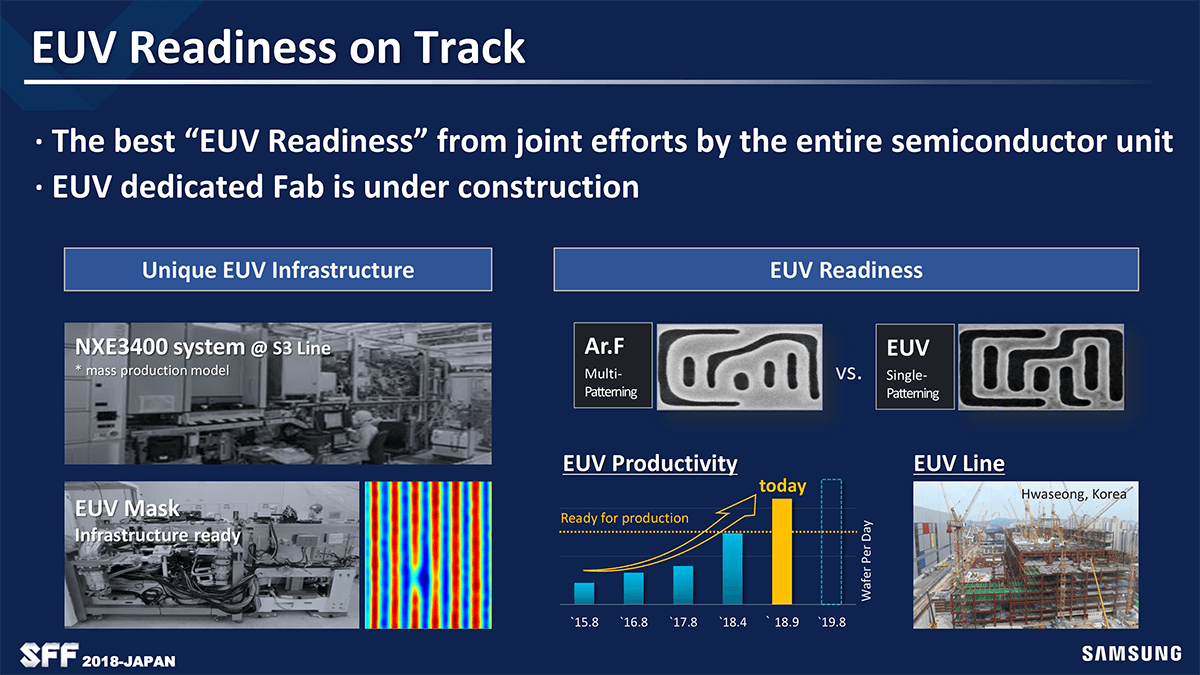

EUV will accelerate Moore's Law once again and the silicon will be alive and kicking for a long time:

I don't think people realize how important EUV is for the entire tech industry.

2nm will probably be ready for the PS6 (the last generation of physical consoles; after that it's all about the cloud and 3D die stacking).

I'm not arguing anything. The article I posted says that, leaving aside yields, a 250mm² chip costs twice as much as it did on the previous node. This puts huge pressure on the console makers to keep their SoCs as small as possible.
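
To see why die size matters so much once wafer prices rise, here is a rough Python sketch using the standard gross-dies-per-wafer approximation on a 300mm wafer. The wafer prices are hypothetical placeholders, not the article's figures; only the 2× ratio reflects the claim above:

```python
import math

def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Gross dies per wafer via the common approximation
    pi*r^2/A - pi*d/sqrt(2*A); ignores scribe lines and edge exclusion."""
    r = wafer_diameter_mm / 2
    gross = (math.pi * r ** 2 / die_area_mm2
             - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))
    return math.floor(gross)

def cost_per_die(wafer_cost_usd, die_area_mm2):
    """Silicon cost per gross die, before any yield losses."""
    return wafer_cost_usd / dies_per_wafer(die_area_mm2)

# Hypothetical wafer prices: the newer node costs twice as much per wafer.
old_node_wafer, new_node_wafer = 5_000.0, 10_000.0
die = 250.0  # mm^2, the die size used in the article's comparison

print(dies_per_wafer(die))  # 240 with this approximation
print(cost_per_die(old_node_wafer, die), cost_per_die(new_node_wafer, die))
```

Because the dies-per-wafer count depends only on die area, doubling the wafer price doubles the cost of every 250mm² die, regardless of yields.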

At least that is my running logic here.

It would be a huge mistake to limit consoles with a 10-year lifecycle (2020-2030) just because of poor first-gen 7nm yields with multi-patterning.

They either have to subsidize huge 400mm² dies (expect 200W+ consoles) or just wait for TSMC's 2nd-gen 7nm with EUV (that's the refined 6nm/N6 process, from what I understand).
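
Why a big die hurts most on an immature process can be sketched with the simple Poisson yield model, Y = exp(−A·D0), where A is die area and D0 is defect density. The defect densities below are hypothetical, since no real foundry figures are given in the thread:

```python
import math

def poisson_yield(die_area_mm2, defects_per_cm2):
    """Fraction of defect-free dies under a simple Poisson model: Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)

def relative_cost_per_good_die(die_area_mm2, defects_per_cm2):
    """Silicon cost per good die, proportional to area / yield (edge losses ignored)."""
    return die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)

# Hypothetical defect densities: an early node vs. the same node once it matures.
for label, d0 in [("early node, D0=0.2", 0.2), ("mature node, D0=0.1", 0.1)]:
    small = relative_cost_per_good_die(250, d0)
    big = relative_cost_per_good_die(400, d0)
    print(f"{label}: a 400mm2 die costs {big / small:.2f}x a 250mm2 die")
```

With these made-up numbers, the 400mm² die's premium over a 250mm² die drops from about 2.16× to about 1.86× as the defect density halves, which is the case for waiting on a refined process rather than subsidizing a huge first-gen 7nm die.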

If you're referring to the Pro, they had a goal in mind and they met it. The Pro is likely never going to get a shrink, so it makes sense to make the chip as small as possible. That's not the case with the PS5.

Both PS4 Pro and XB1X will likely be EOL by late 2020.

They're consoles aimed at enthusiasts and PS5/SNEK will make them irrelevant.

PS4/XB1 Super Slim will still be relevant for casuals, so a 7nm die shrink (tape-out costs ~$250 million) makes financial sense.
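
Whether a shrink "makes financial sense" comes down to a break-even count. A hypothetical Python sketch, taking the ~250 million tape-out figure as US dollars; the per-console savings are made-up placeholders:

```python
# Hypothetical break-even check for a die-shrink tape-out.
# Only the ~$250M tape-out figure comes from the post; the per-unit
# savings are made-up placeholders.

def break_even_units(tape_out_cost_usd, saving_per_unit_usd):
    """Units that must ship before per-unit savings repay the one-off tape-out cost."""
    return tape_out_cost_usd / saving_per_unit_usd

tape_out = 250e6
for saving in (10.0, 25.0, 50.0):  # hypothetical BoM savings per console
    units = break_even_units(tape_out, saving)
    print(f"${saving:.0f} saved per unit -> {units / 1e6:.0f}M units to break even")
```

The sketch leaves the key input open: how many Super Slim units would actually sell after the next-gen launch.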

And now look at how the entire graphics sector has moved in terms of FLOPS from 2005 to 2013 (0.2 to ~4 TFLOPS) and from 2013 to 2020 (4 to ~14 TFLOPS), and you will notice that:

A) FLOPS growth has slowed down considerably

B) GPU prices have gone up dramatically

C) Node shrinking has become harder and more expensive
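
Running the rough numbers above through a compound-growth calculation makes point A concrete. A small Python sketch, taking the 0.2, ~4 and ~14 TFLOPS figures at face value:

```python
import math

def cagr(start, end, years):
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

def doubling_time(start, end, years):
    """Years needed to double at the same compound rate."""
    return years * math.log(2) / math.log(end / start)

# TFLOPS figures as quoted in the post (approximate).
periods = {"2005-2013": (0.2, 4.0, 8), "2013-2020": (4.0, 14.0, 7)}
for name, (start, end, years) in periods.items():
    rate = cagr(start, end, years)
    dt = doubling_time(start, end, years)
    print(f"{name}: {rate:.1%}/yr, doubling every {dt:.1f} years")
```

On those inputs the annual growth rate falls from about 45% to about 20%, so the doubling time goes from under two years to nearly four.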

A) Moore's Law hasn't slowed down when it comes to GPGPUs. You have to also take into account INT8/FP16 increases, which will be quite useful for next-gen AI. Rasterization isn't the only thing consoles are meant to perform.

Moore’s Law, one of the fundamental laws indicating the exponential progress in the tech industry, especially electronic engineering, has been slowing down lately (since 2005, to be more precise)… (towardsdatascience.com)

B) Because of mining and Nvidia being a tad bit greedy:

Top cryptocurrency prices and charts, listed by market capitalization (coinmarketcap.com).

Nvidia reported earnings and revenues that were well below last year's figures, but the graphics and AI chips company beat analyst expectations (venturebeat.com).

C) EUV will replace multi-patterning and Moore's Law will keep progressing just fine.

Multi-patterning is just not viable for sub-10nm nodes.

Or why not compare the raw performance of a 2005 CPU and an 8-core Jaguar clocked at 1.6GHz? I think Cell was actually the more capable of the two, despite being 8 years older.

It wasn't:

We got a 30-watt octa-core CPU at 28nm (Jaguar at 1.6 GHz) and we're also getting a 30-watt octa-core CPU at 7nm (Zen 2 at 3.2 GHz).

Not many people realize this, but the die and TDP budgets are the same in both cases. The lion's share will still be consumed by the GPU, as always.
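
To put a rough number on what the same ~30W CPU budget buys on each node, here is a theoretical peak-throughput sketch. The FLOPs-per-cycle values are assumptions based on each core's SIMD width, and peak figures say nothing about real game workloads:

```python
# Theoretical peak single-precision throughput; per-cycle figures are assumptions:
#  - Jaguar: one 128-bit FP multiply + one 128-bit FP add per cycle -> 8 FLOPs/cycle/core
#  - Zen 2: two 256-bit FMA pipes -> 2 pipes * 8 lanes * 2 ops = 32 FLOPs/cycle/core

def peak_gflops(cores, clock_ghz, flops_per_cycle):
    """Peak GFLOPS = cores * clock (GHz) * FLOPs per cycle per core."""
    return cores * clock_ghz * flops_per_cycle

jaguar = peak_gflops(8, 1.6, 8)   # 8 cores at 1.6 GHz
zen2 = peak_gflops(8, 3.2, 32)    # 8 cores at 3.2 GHz (the clock assumed in the post)
print(f"Jaguar: {jaguar:.1f} GFLOPS, Zen 2: {zen2:.1f} GFLOPS, ratio {zen2 / jaguar:.0f}x")
```

Under those assumptions the theoretical peak rises about 8× with the same core count and a similar power envelope.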

PS: I'm old enough to remember the "death" of transistor scaling being predicted since at least the 80s. Back in 2000, I remember engineers saying that 30nm was the lowest we could expect, and yet we got 28nm consoles in 2013.

This is true. This has been talked about for the last 5 years. Each new node is more expensive than the last one. From design to production, verification and yields, it's getting worse and worse.

This is why GPU costs have gone up so much compared to 10 years ago (well, along with Nvidia dominating).

But AMD showed that normalized wafer cost has gone up 100% from 28nm to 7nm, which is obviously huge.
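
Whether a 2× wafer cost makes a given chip more expensive depends on how much denser the new node is. A minimal sketch, treating cost per mm² as proportional to wafer cost and ignoring yield; the density gains are hypothetical inputs, not AMD's figures:

```python
# Hypothetical: how a doubled wafer cost interacts with a density gain.
# The 2x wafer-cost factor is the claim above; the density gains are placeholders.

def relative_cost_same_design(wafer_cost_ratio, density_ratio):
    """Cost of the same transistor count on the new node relative to the old one.
    The die shrinks by density_ratio, but each mm^2 costs wafer_cost_ratio more."""
    return wafer_cost_ratio / density_ratio

for density_gain in (1.5, 2.0, 3.0):
    rel = relative_cost_same_design(2.0, density_gain)
    print(f"density x{density_gain}: same design costs {rel:.2f}x as much")
```

Under these assumptions, a doubled wafer cost raises the price of a given design only when the density gain is below 2×.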

That's why exotic chips like Cell have been abandoned and that's why we might also see a Sony/MS hardware collaboration in the future.

Why pay AMD twice for doing R&D? They can share the costs and increase economies of scale even further, more so if they think Google Stadia is against their interests.

They clearly think that it's pointless to invest in two separate cloud infrastructures (multi-billion-dollar ventures), and that's why they're collaborating on Azure.

What was unthinkable back in 2013 is totally realistic these days.