TheThreadsThatBindUs

Member

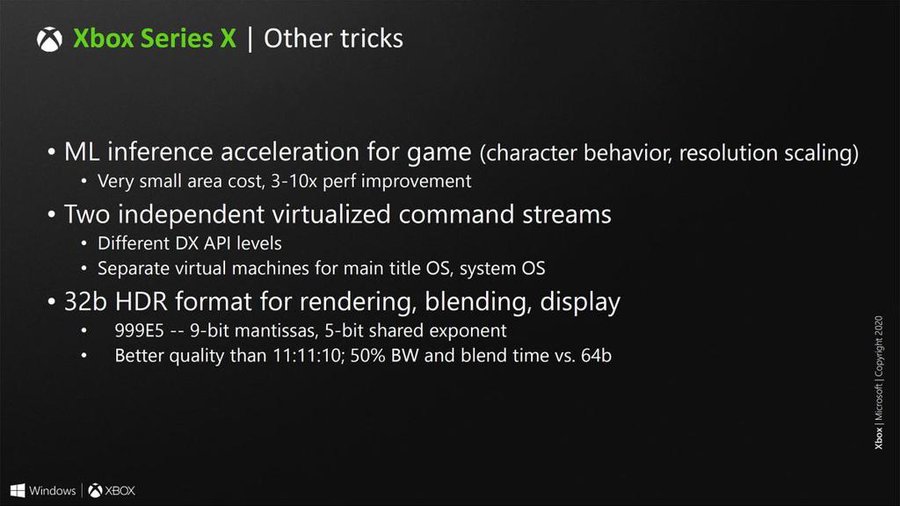

It totally can, look at DLSS 1.9 that was running on regular compute units. Sure, it did not look as good as DLSS 2.0, but Turing couldn't do INT8/INT4 like RDNA2 can (on compute units that is).

Will it be as performant as having dedicated Tensor cores? No. Will it still provide a substantial performance boost? Yes.

DLSS is on PC, which is an open platform without fixed hardware limitations.

When I talk about performance, I'm thinking more pragmatically as it pertains to the closed console hardware.

DL-based computation, even just inference, is inherently computationally intensive. So yes, running a DL-based Super-Resolution model on your next-gen game to boost a lower internal rendering resolution to 4K will save you performance compared to rendering the game natively in 4K. But the DL model itself might not prove worth its performance cost when there are significantly cheaper temporal upsampling methods available to do the same job.

As such, on console you run into a dilemma where you can be faced with, for example, the following three choices:

A) Render internally at 1080p with medium graphics settings --> use FSR to boost to 4K --- cost 10ms

B) Render internally at 1080p with high graphics settings --> use Temporal Upsampling to boost to 4K --- cost 10ms

C) Render internally at 1600p with medium to high graphics settings --> use Temporal Upsampling to boost to 4K --- cost 10ms

In all three cases above, it's not at all obvious which will provide the best IQ and/or overall graphical presentation.

In which case, while DL-based Super Resolution on console hardware might indeed be fast enough to run in realtime, it still may not be cheap enough in practice to warrant switching to it over existing methods for resolving your game at a higher overall IQ and visual quality.
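To put the budget argument in concrete terms, here's a rough sketch in Python. The millisecond figures and the names `render_budget_ms` and `upscalers` are made-up placeholders for illustration, not measurements from any real console or upscaler. All it shows is that with a fixed frame budget, every millisecond the upscaler costs is a millisecond you can't spend on internal resolution or graphics settings.

```python
# Hypothetical illustration: every millisecond figure here is an invented
# placeholder, not a measurement of any real hardware or upscaler.

def render_budget_ms(frame_budget_ms: float, upscale_cost_ms: float) -> float:
    """Return the time left for rendering once the upscaler has been paid for."""
    if upscale_cost_ms >= frame_budget_ms:
        raise ValueError("upscaler alone exceeds the frame budget")
    return frame_budget_ms - upscale_cost_ms


FRAME_BUDGET_MS = 10.0  # same total cost as options A, B and C above

# Assumed upscaler costs (placeholders): a DL model vs. a cheaper temporal method.
upscalers = {
    "dl_super_resolution": 3.0,
    "temporal_upsampling": 1.0,
}

budgets = {
    name: render_budget_ms(FRAME_BUDGET_MS, cost)
    for name, cost in upscalers.items()
}

for name, left in budgets.items():
    print(f"{name}: {left:.1f} ms left for rendering")
```

With these assumed numbers the cheaper upscaler leaves 2 ms more for rendering, which is the headroom that could go toward higher settings or a higher internal resolution. Whether that beats the better reconstruction of a DL model is exactly the open question.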
