sircaw

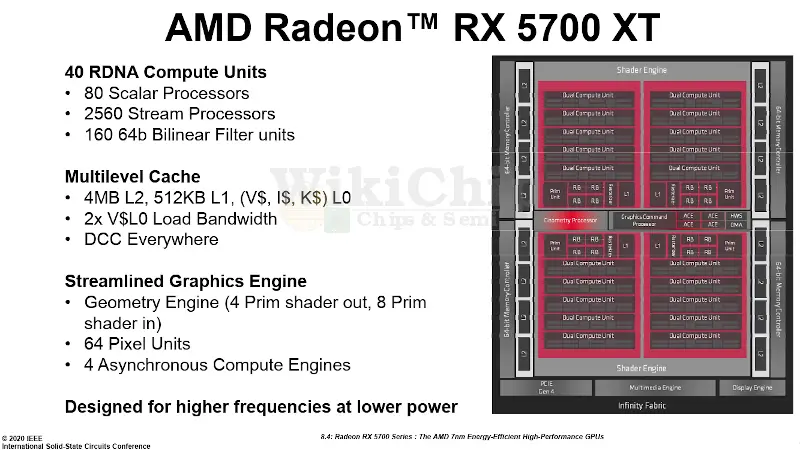

There's kinda no point even talking about RDNA 3 when we still don't have a full window into everything RDNA 2 is. But it's a near guarantee that both consoles have features unique to their specific platform needs that likely won't be in RDNA 2, may not be in RDNA 3 either, and could maybe appear in something later. That doesn't necessarily make those potential features more advanced than the features already announced; we just know that AMD and Nvidia constantly iterate on their architectures and update them as they find reasonable use cases.

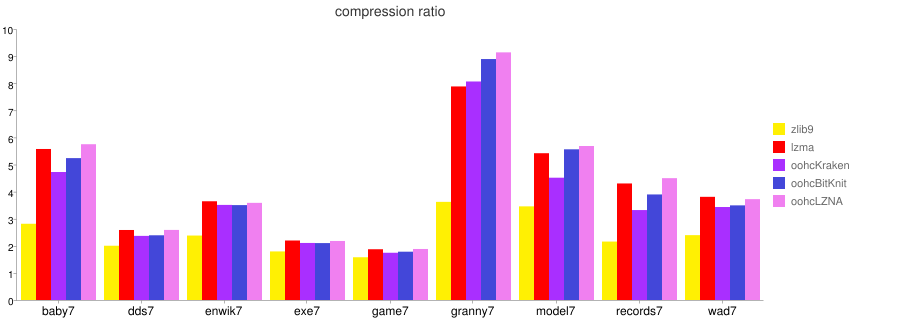

Cerny has already confirmed one potential feature: the PS5's GPU cache scrubbers. That was a Sony-suggested customization. He said that if it appears in a future AMD GPU, and it might, that would mean their collaboration with AMD succeeded in producing features useful in the PC space. On the Xbox side, we seem to know about a few more. Their implementation of sampler feedback streaming is unique to Series X. Specifically, they have hardware texture filters built into the GPU (fully custom to Xbox Series X) that a Microsoft graphics R&D engineer has confirmed are not part of standard RDNA 2. Then there's the fact that we don't yet fully understand everything DirectStorage is, or which specific features it requires. Microsoft has said they will eventually bring it to PC. I bring this up because it's clearly partly responsible for how the GPU can talk to the SSD and use it as virtual RAM. So toss in DirectStorage and whatever hardware support surrounds it, such as the decompression unit that's also designed for BCPack, Microsoft's custom compression format for GPU textures, which DirectStorage almost certainly helps to manage. That could be a potential beyond-RDNA 2 feature.

That means we can also toss in Sony's Kraken decompression unit as a potential feature enhancement that may not be part of RDNA 2 either, or it could be. After all, it was AMD who unveiled the Radeon SSG, where an SSD is literally bolted onto a GPU and usable as expandable memory, so I find it highly unlikely they didn't seek to roll that work into their high-end PC consumer cards.

Keep in mind I am not saying that Microsoft's and Sony's SSD implementations are the same (I honestly don't think they are), only that there are obviously some similar concepts or thought processes at play. We really don't have a full window into everything both sides are doing, just a high-level overview at best. Anyway, it's entirely possible that such changes will take longer on the PC side, because these consoles had a far more immediate need for certain features than the PC did. That means all the considerations and hardware built in for the SSDs could end up being beyond-RDNA 2 features simply because the consoles needed them now. So I say let's wait and find out. But there's no doubt that Sony and Microsoft both have all sorts of modifications to their GPUs, because their developers may have asked for things or made requests (they almost certainly did), and the platform holders themselves may have felt certain areas needed improvement.

Take, for example, Horizon Zero Dawn, The Last of Us Part II, and Halo Infinite. All three of those developers may have quirks in their game engines that are specific to how their games are designed, and they may ask Sony or Microsoft to increase the hardware's capability in a specific area because doing so would directly improve their ability to do certain things in their games. Those things, while they may not get headlines like ray tracing, VRS, or DLSS, may still be extremely important, may actually be beyond-RDNA 2 features, and could make it into later architectures if AMD learns that a given technique is becoming more common in games. AMD may end up deciding, "Okay, we need our GPU to handle these specific tasks better."

So THAT is what features beyond RDNA 2 may actually entail: specific optimizations for doing certain tasks better. What I think most fans are doing, though, is treating these secret RDNA 3 or RDNA 4 features as things that will blow features like mesh shaders, VRS, and ray tracing away, or at least be on par with them. It's possible, but highly unlikely, because something that big would likely have been discussed already. It would mean an innovation brought on by Sony or Microsoft, which gives them far less reason not to talk about it. So far it's been Microsoft who has been more willing to talk about all the new things they did, but Cerny's presentation contained a lot of info too, even though people are treating it as if he barely said anything. He likely didn't say everything, but he likely got out all the major headliners, the GPU capabilities meant to be game changing. The rest may be specific to how these developers' existing or upcoming game engines are designed. I guarantee there are things in the Xbox Series X that will make Halo Infinite's engine run better. I guarantee there are things in the PlayStation 5 that are optimal for, and uniquely designed for, the next God of War or the next Horizon Zero Dawn.

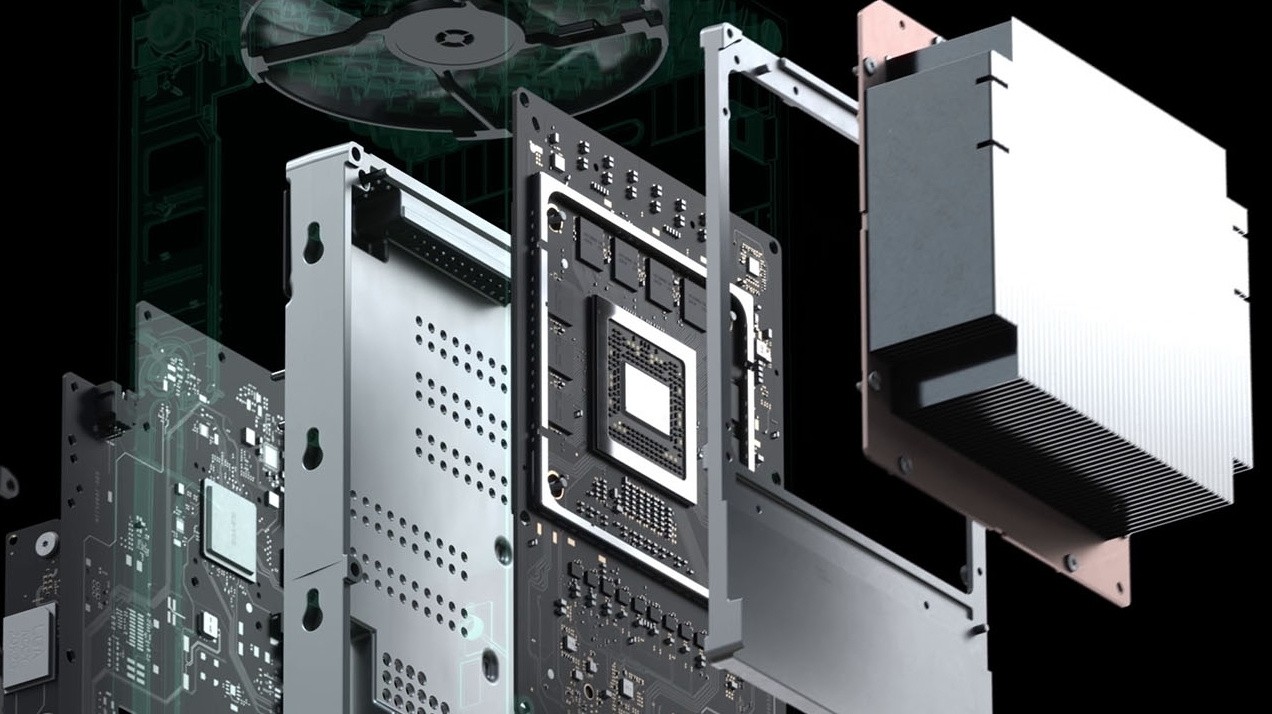

So, yes, there are many customizations and features in these consoles that are likely not in the RDNA 2 cards dropping this year and might arrive later. In fact, some of the requested developer modifications may lead to new, innovative capabilities, the same as happens every cycle. Remember Sony saying they had specific work done to better handle checkerboard rendering on the PS4 Pro? These are the kinds of things I'm certain were done on both consoles and are likely not RDNA 2 specific. Why else would Cerny stress that they didn't just take a PC part? Why else would Microsoft's Jason Ronald and another graphics engineer stress that they built a lot of custom hardware into the Series X? Because developers have needs and desires for where they want their games to go, and so do the platform holders.

Hopefully this better explains the whole RDNA 3 and 4 craziness. We don't know what will make it into the newer architectures. We don't even know everything that's in RDNA 2. Just know that Sony's first-party teams had requests, and Sony likely did its best to oblige in order to enhance its first-party games or address specific weak points and bottlenecks. Same on the Microsoft side, and we all know the important role third parties play for both consoles. They had input too. DICE gave input, id Software made suggestions, and Epic Games made suggestions for Unreal as it exists now and for future versions of Unreal. You KNOW they talked to Rockstar, and you know they talked to Ubisoft Montreal, Bungie, and CD Projekt Red. Basically, these consoles are likely loaded with all kinds of little tweaks and customizations, but don't expect magic-bullet solutions that erase raw performance advantages or certain features. Just trust that both consoles will kick ass, especially for their first-party and many third-party titles, because they were designed to do just that.

Some very good points to ponder,

Thank you