MasterCornholio

Member

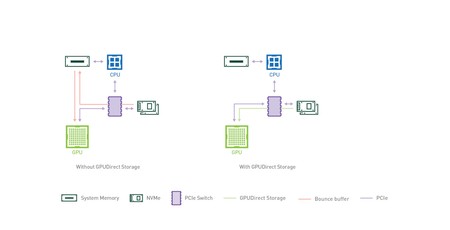

I tend to agree that they will be fundamentally similar. It's just curious to see the difference in processing power dedicated to that, though. XSX uses 1/10th of a core while PS5 has a dedicated block that's equivalent to 1-2 cores. I guess Sony is anticipating handling orders of magnitude more I/O ops in next-gen games.

I don't think Microsoft has been at all negligent. They probably just envisaged flash memory as being about load times and less pop-in. Just like everyone else did before Sweeney unzipped his trousers.

Sony and Epic Games have been cooking this new paradigm up for a while, and it's probably no coincidence it wasn't publicly revealed until this close to release.

Sony have potentially played a blinder by partnering with pretty much the premier third-party engine like this. I wonder if Microsoft was also approached to work on future rendering technologies, and whether they'd have been as interested in investing the same resources as Sony have done.

I really think this big difference in I/O is because of how each is viewing next gen. Sony probably believes that they need insane I/O to push game design, while Microsoft only sees it as a way to reduce load times, so the XSX's I/O is good enough for them.

It should be interesting to see who is right in the end, but currently it seems like Sony made the better decision with the I/O, based on that UE5 demo.