GHG

Member

It's not like this is the standard performance delta between the 7900 XTX and 4090. It's literally one game. One game that has always performed uncharacteristically well on Nvidia hardware and poorly on AMD hardware.

For the other 99% of games the 7900 XTX holds up just fine and actually provides a much better cost/performance ratio compared to the 4090.

I literally said "the games they want to play".

If someone has $1000+ to spend and they want to play Cyberpunk with the full suite of features enabled (with that amount of money being spent, why wouldn't they?) then what are they supposed to do? Cut their nose off to spite their face and purchase an AMD GPU for the sake of "muh competition"?

And no, it's not just one game when you start factoring in games that feature ray tracing and DLSS, which is a lot of them these days in case you haven't been paying attention.

So tired of people defending substandard products from huge businesses who can and should be doing better.

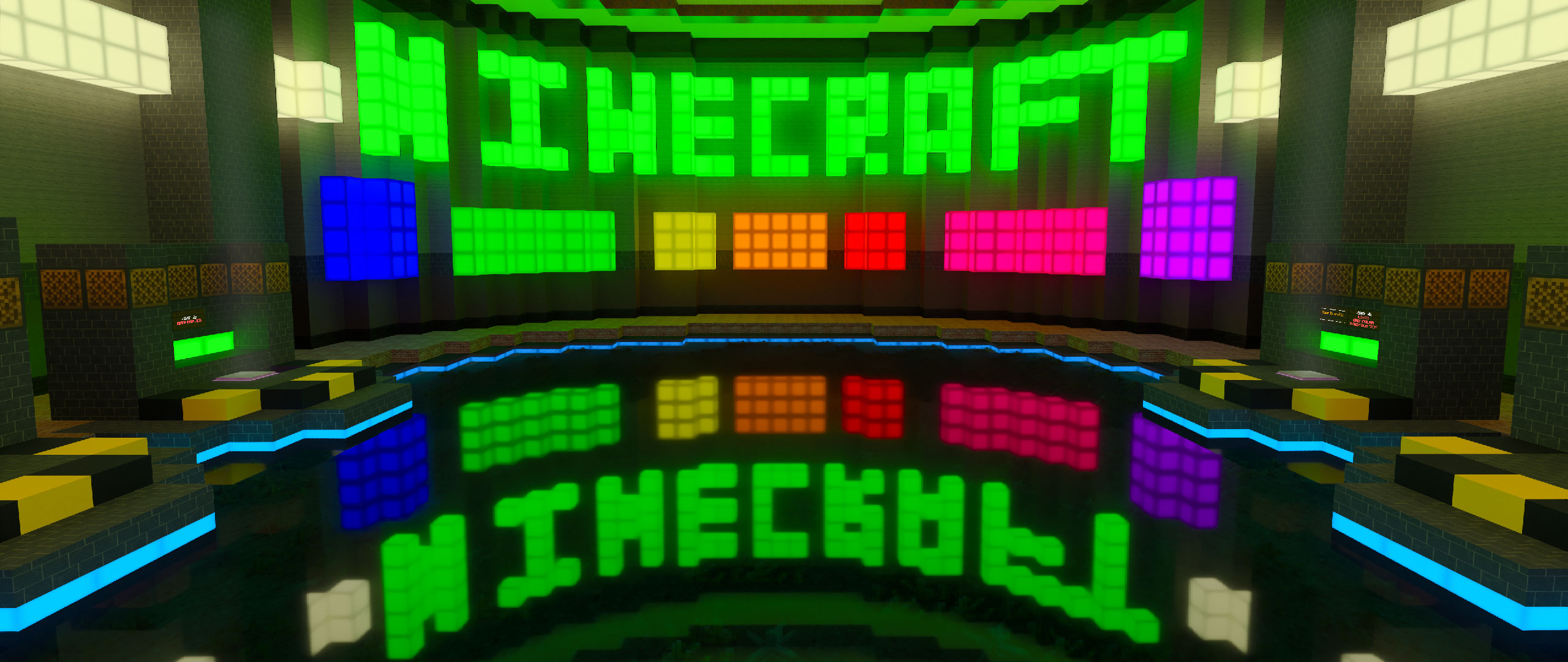

But this game is designed with Nvidia hardware in mind, so Cyberpunk should run best on Nvidia GPUs. I'm not sure how well path tracing would run on AMD's GPUs if they devised an equivalent tech, but I can't say for certain that AMD GPUs are bad.

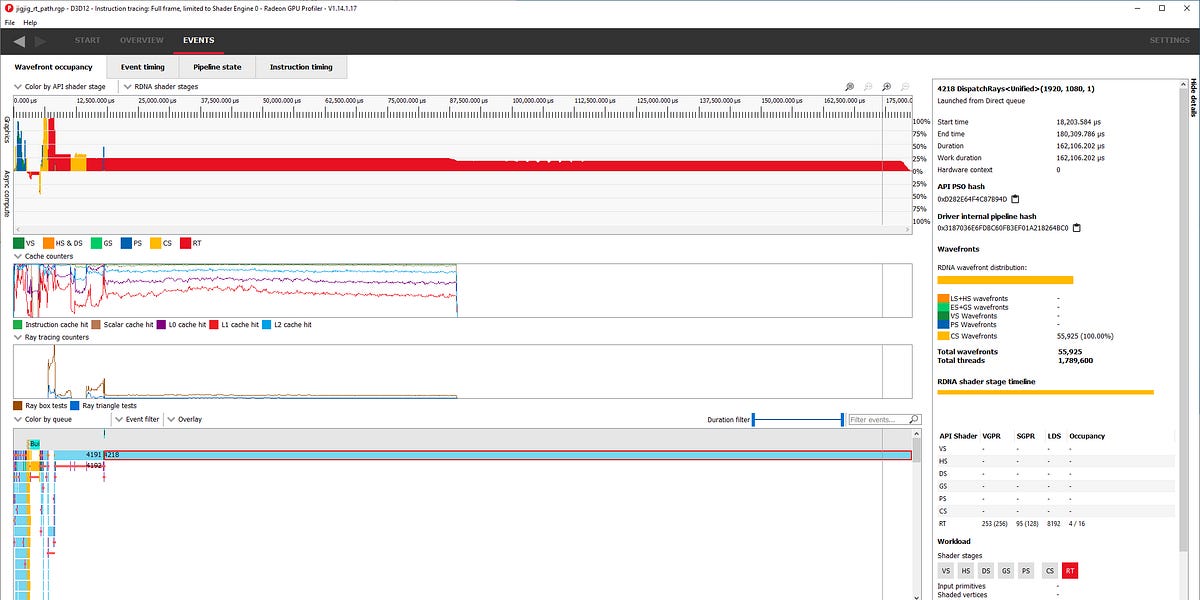

AMD are about 2 generations behind when it comes to RT performance. They can't do path tracing well because their technology is so far behind and isn't up to scratch. That's nobody's fault but their own.

What do you want devs and Nvidia to do, not push/develop new technologies and sit around waiting for poor little AMD to catch up?
