Topher

Identifies as young

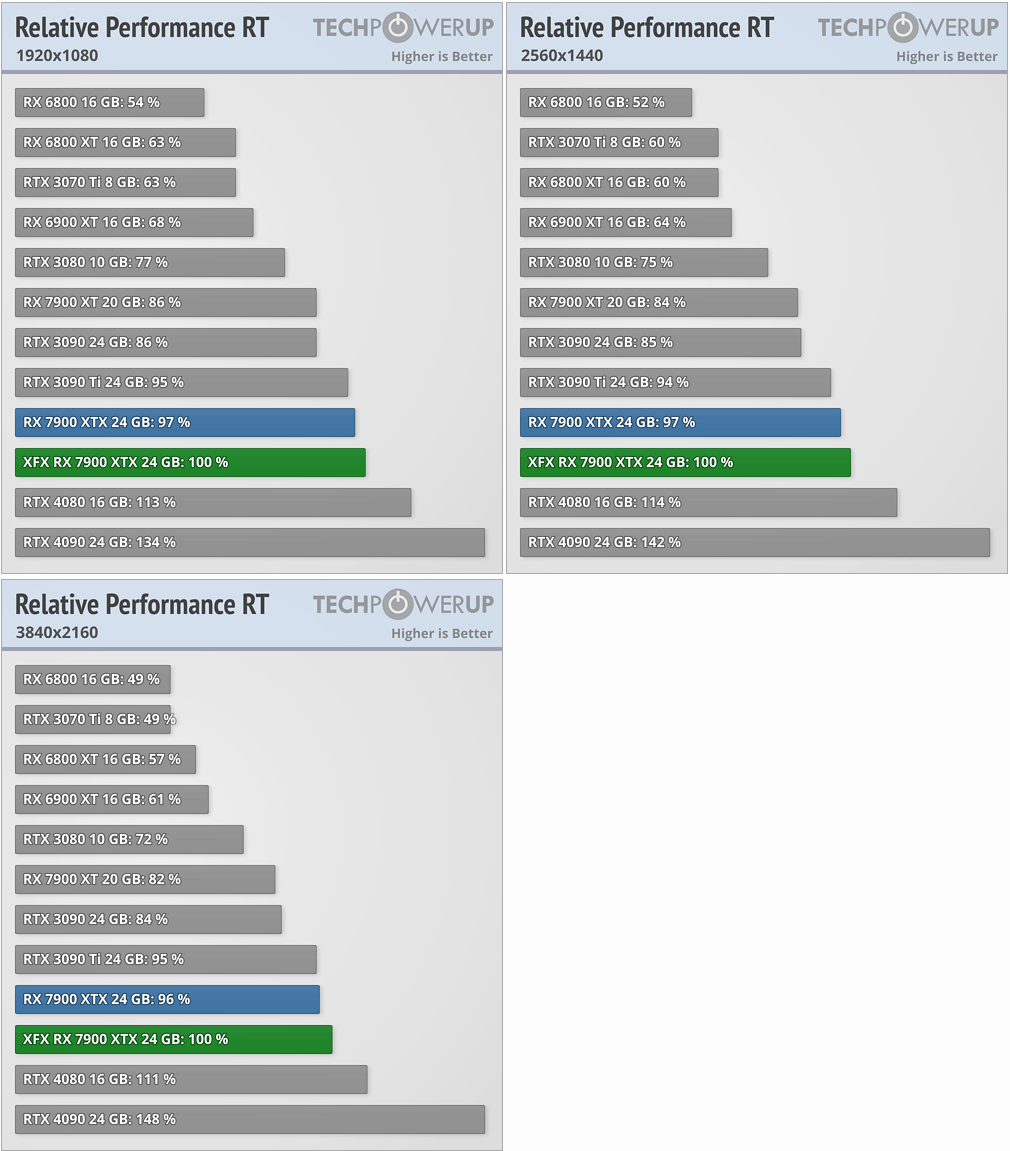

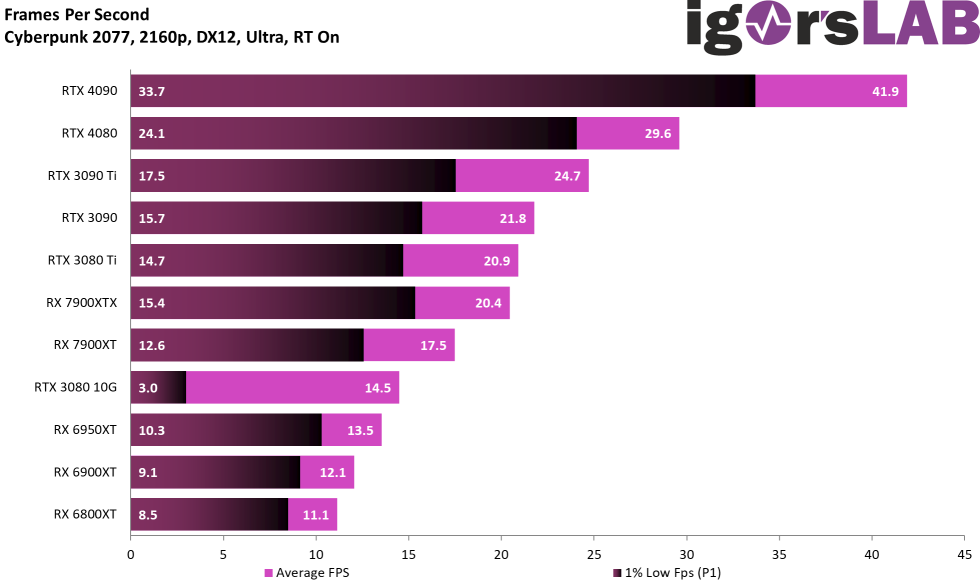

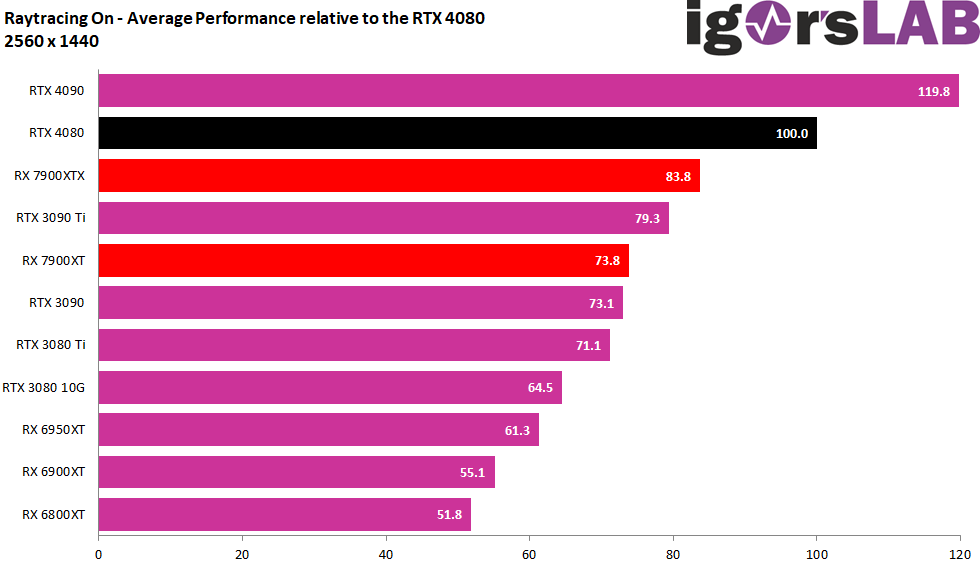

I am not rooting for either team. But to me the 4090 and the 4080 are high-end cards. The 7900 XT is a mid-range card in terms of performance. If I am going that route (not really mid-range, but you know what I mean), then I would just buy a used 3090, since I'd still have access to the same level of ray tracing and close performance for less money.

Yeah, I get what you are saying. I doubt I'd buy a used card, though, with all the ones used for mining probably ending up on eBay. But I get your point.

So here is what I'm seeing right now as far as how the GPUs are laid out in the market.

$1600 4090

$1200 4080

$1099 3090 Ti

$999 7900 XTX

$899 7900 XT

$799 6950 XT

$679 6900 XT

$449 6750 XT

Of the top five, only the 4080, 3090 Ti, and 7900 XT are actually available to buy at MSRP.