In my understanding, they do. Everything is updated twice as fast with 120Hz displays: the buffer updates half-frames, and the LEDs blink twice as fast, except half of the time it's to display the same thing.

Your understanding is incorrect. If the HDTV in the above picture always updated the entire picture at once, then all 3 bars would be showing identical values. (Read what I posted. If you understand how the tester works then this should make sense.)

This is the case for some flat-screen displays. The documentation that came with the tester said that it is common for plasma TVs to push every pixel to the screen at once (resulting in identical readings on all 3 bars), but LCDs tend to mimic CRT raster scan behavior and draw in everything line-by-line.

You might be correct in saying that an LCD has to receive an entire frame before it can start drawing the picture... but does it take 16.66ms to send a full frame? What if the video output of the game console (or other source) is something like this:

(I'm not saying this is necessarily the case; this is just my assumption, because I can't think of anything else that would make sense after accounting for the display behavior that I do know. Also: woo MSPaint!)

Anyway, if you think of the timing for the video source in terms of that graph, then you could see why it would only take an LCD 2 or 3 milliseconds or so to start drawing a picture. Then this test (on a 60 Hz LCD monitor) would make sense:

It's possible that it takes about 2ms to 3ms to fill the LCD's frame buffer, and then the LCD starts drawing the picture almost immediately, ending about 14ms later (3ms for the top bar; 17ms for the bottom bar). A 120 Hz LCD with the same buffer and processing speed could finish drawing the picture in 7ms, giving 3ms for the top bar but 10ms for the bottom bar.
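To put rough numbers on that guess, here's a minimal sketch of the arithmetic. It assumes the simple model described above: a fixed delay to fill the buffer, followed by a top-to-bottom draw at constant speed. The function name `bar_lag_ms` and the `position` parameter are made up for illustration, and the 3ms / 14ms / 7ms figures are just the estimates from this post, not measurements.

```python
def bar_lag_ms(buffer_fill_ms, draw_time_ms, position):
    """Estimated lag for a bar on the screen under the assumed model.

    position: 0.0 = top of the screen, 1.0 = bottom of the screen.
    """
    # Lag = time to fill the buffer + how far into the draw this bar sits.
    return buffer_fill_ms + position * draw_time_ms


# Assumed values taken from the estimates in the post, not measured.
BUFFER_FILL_MS = 3.0

for label, draw_time_ms in (("60 Hz LCD", 14.0), ("120 Hz LCD", 7.0)):
    top = bar_lag_ms(BUFFER_FILL_MS, draw_time_ms, 0.0)
    middle = bar_lag_ms(BUFFER_FILL_MS, draw_time_ms, 0.5)
    bottom = bar_lag_ms(BUFFER_FILL_MS, draw_time_ms, 1.0)
    print(f"{label}: top {top:.1f} ms, middle {middle:.1f} ms, bottom {bottom:.1f} ms")
```

Under this model the top bar reads the same on both displays, and only the middle and bottom bars improve at 120 Hz, because the buffer fill time doesn't change but the draw time is halved.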

In theory. I'm honestly not confident in how a frame buffer works in this context, but it makes sense to me that a complete frame can be sent from a video source at a speed faster than the video's frame rate.

I'd actually love to learn more about this. The nature of how the timing works between source and display is something I really want to read more about. I once heard Kevtris say that the NESHDMI has an "8 scanline buffer" that results in near-zero lag, implying that HDMI displays don't have to wait on a full frame before they start drawing, but I'm not sure if I understood that correctly.

Thanks a lot!

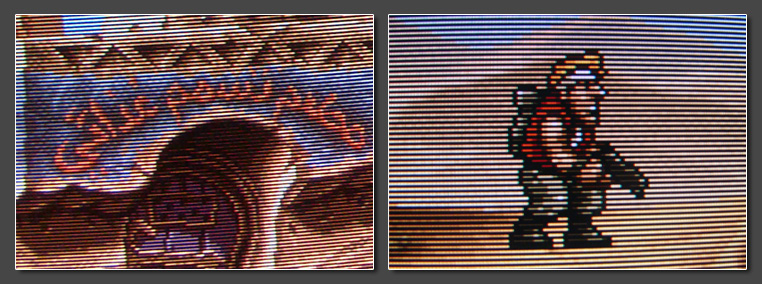

So is it due to screen resolution and not refresh rate? I was under this impression, because those effects get displayed correctly on my CRT TV, no matter if Street Fighter Zero 2 - example related to those videos I posted above - runs on the SEGA Saturn, displayed at a native 240p resolution, or upscaled to 480i on the PlayStation 2 (from the Street Fighter Zero collection). On the other hand, both versions look messed up - just like in the YouTube vids - on my LCD TVs.

Your TV probably has a bad deinterlacing algorithm, and it probably also treats 240p as if it were interlaced.