I spoke to this in my post: bandwidth is part of GPU perf. Take it away and you lose most of that added GPU throughput.

ROPs fall under the same thing. Basically, think of it like this: compared to the XB1, the PS4 had 2x the bandwidth, 2x the ROPs, 50% more compute, and 8x the async shading, but people only really ever talked about the compute delta. Ultimately all of these 'extra items' are cogs that depend on one another to get a meaningfully faster GPU.

Mesh shaders are an API construct, not a hardware feature. So far only Cerny has spoken about the related underlying hw (we're missing real Xbox details here), but it seems likely it's an RDNA feature common to both.

The HDR hack for BC is a SW service, and 100GB/virtual memory is a simple way to explain the benefits of SSD I/O, which isn't in favor of XSX anyway.

Packed rapid integer math is a valid question. It's another 'likely' common RDNA element, but it'd be nice to know for sure.

Though it doesn't run concurrent with floating point - just like RT acceleration doesn't. Goes for both consoles. There are no magical TFlop inflation scenarios in RDNA.

That was many years/platforms ago. I've been all over the map (figuratively and literally/geographically) since those days.

Let me see if I follow you correctly. You're saying that if we take away the bandwidth advantage of the XSX then... the advantage won't matter?

I'm not sure I follow your meaning there.

On your next point, regarding the compound view of performance: yes, that is exactly the point of this entire thread. Taken together, the CU advantage, the RT and ML hw, the decompressor speed, and the additional virtual RAM mean XVA should be a very competitive solution to the PS5's I/O implementation. What else would we be talking about?

What's amazing is that you say all that and then pronounce that the overall I/O solution "isn't in favor of XSX anyway." OK, thank you for your opinion on that.

Well, with respect to mesh shaders on XSX, the implementation has been described as quite powerful. A lack of information on the PS5's part has no bearing on the information we do have from MS.

DirectX12 is the API construct that runs on the XSX hardware to expose its features.

So you're saying it's not an advantage because both HW have it, as it's based on a broadly available RDNA2 featureset? OK, possibly.

The UE5 demo had all the time and opportunity in the world to showcase that, but they didn't.

They did actively reference using the primitive shader HW in PS5 to accelerate scene construction at 1440p @ 30fps.

By contrast:

"Principal Engineer at Microsoft/Xbox ATG (Advanced Technologies Group), Martin Fuller, has showcased how the new technique would help devs...

It is also noteworthy that the RTX 2080 Ti renders the scene in about 40 microseconds using the regular pass-through method at 1440p whereas the Xbox Series X renders in around 100 micro seconds at 4K. The Xbox Series X, however, delivers much faster render times even at 4K than the (standard pass-through) NVIDIA GeForce RTX 2080 Ti which goes off to show the benefits of the new Mesh Shaders in Direct X 12 Ultimate API being embedded in Turing and RDNA 2 GPUs."

Having a next-gen console compare favorably with a top-of-the-line discrete graphics card is a good thing, no?

Finally, you are wrong about RT HW concurrency on XSX. From the horse's mouth, Andrew Goossen:

"Without hardware acceleration, this work could have been done in the shaders but would have consumed over 13 TFLOPs alone. For the Xbox Series X, this work is offloaded onto dedicated hardware and the shader can continue to run in parallel with full performance." In parallel with full performance.

On integer concurrency:

"We knew that many inference algorithms need only 8-bit and 4-bit integer positions for weights and the math operations involving those weights comprise the bulk of the performance overhead for those algorithms," says Andrew Goossen. "So we added special hardware support for this specific scenario. The result is that Series X offers 49 TOPS for 8-bit integer operations and 97 TOPS for 4-bit integer operations. Note that the weights are integers, so those are TOPS and not TFLOPs. The net result is that Series X offers unparalleled intelligence for machine learning."
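For anyone who wants to check the arithmetic behind those TOPS figures, here is a rough sketch. It assumes the publicly quoted XSX GPU configuration (52 CUs at 1.825 GHz, 64 lanes per CU, 2 ops per fused multiply-add), none of which is in the quote above, and it assumes the int8/int4 rates are simple 4x/8x multiples of the FP32 rate. The function names are made up for illustration.

```python
# Back-of-envelope throughput math. All hardware numbers below are
# assumptions taken from public XSX spec sheets, not from the quote.
CUS = 52            # assumed active compute units
LANES_PER_CU = 64   # assumed FP32 lanes per CU
OPS_PER_FMA = 2     # a fused multiply-add counts as 2 operations
CLOCK_GHZ = 1.825   # assumed GPU clock


def fp32_tflops(cus=CUS, lanes=LANES_PER_CU, clock_ghz=CLOCK_GHZ):
    """Peak FP32 rate in TFLOPs: CUs * lanes * 2 ops * clock (GHz) / 1000."""
    return cus * lanes * OPS_PER_FMA * clock_ghz / 1000.0


def packed_tops(elements_per_lane):
    """Peak integer rate in TOPS, assuming N packed values per 32-bit lane."""
    return fp32_tflops() * elements_per_lane


if __name__ == "__main__":
    print(f"FP32: {fp32_tflops():.2f} TFLOPs")
    print(f"INT8: {packed_tops(4):.1f} TOPS")  # 4 x 8-bit values per lane
    print(f"INT4: {packed_tops(8):.1f} TOPS")  # 8 x 4-bit values per lane
```

Under those assumptions the results round to the 49 and 97 TOPS figures Goossen quotes. That only shows where the numbers can come from; on its own it says nothing either way about what runs concurrently.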

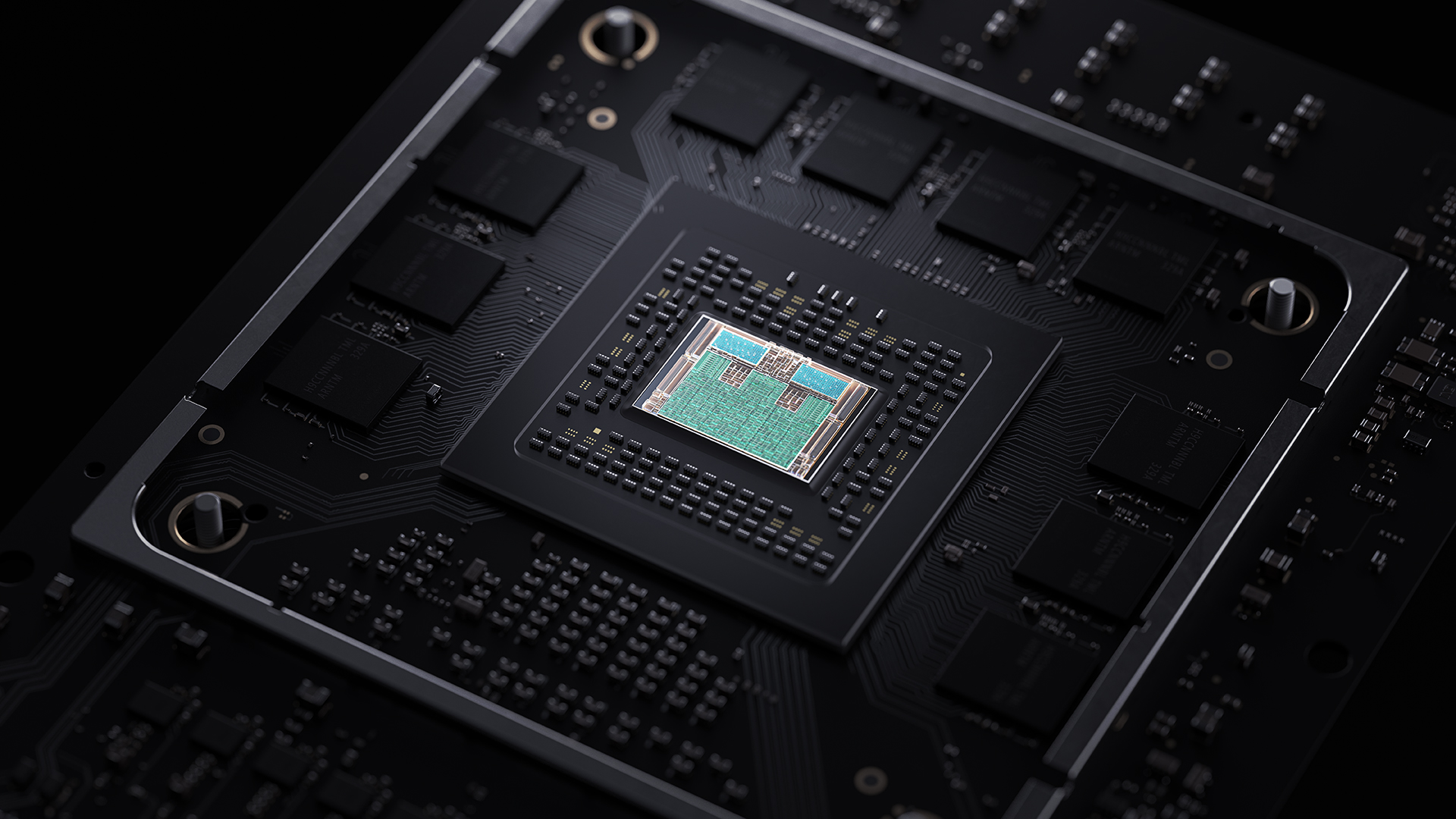

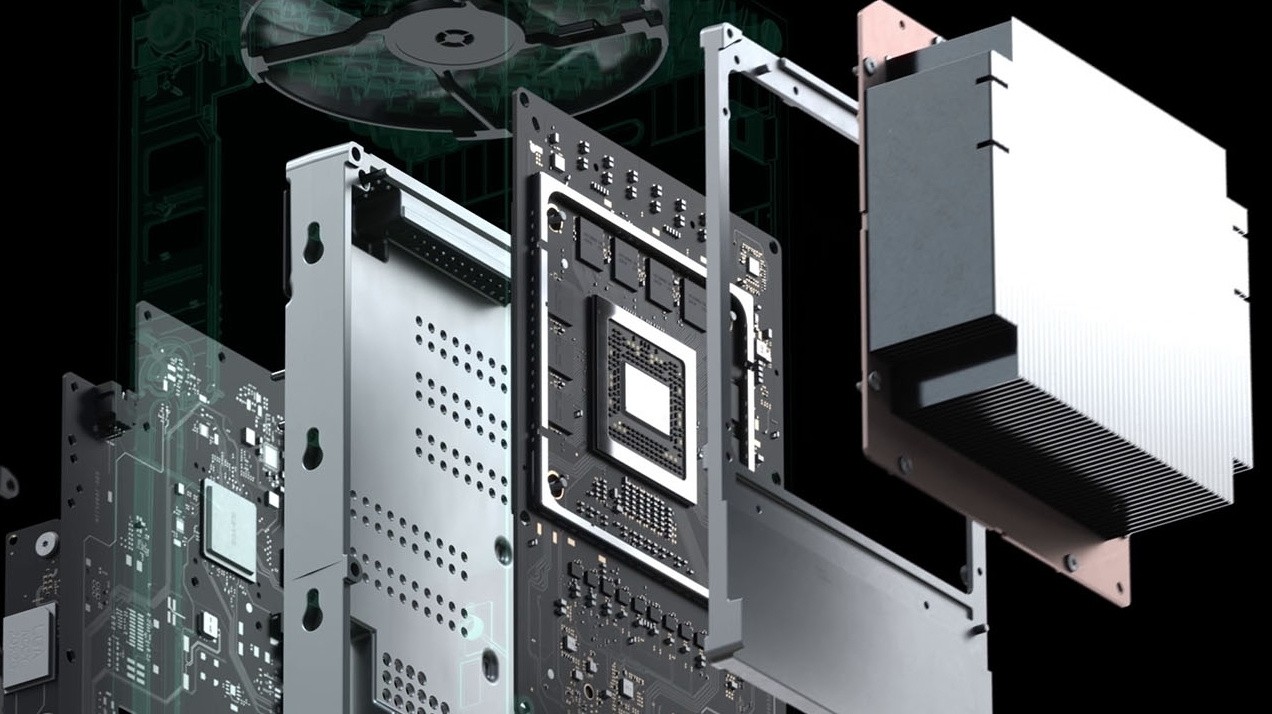

So the XSX has RDNA 2 shader arrays, HW for RT independent of those shader arrays, AND special hardware designed for int/ML work... all concurrent.

All this insight comes directly from Goossen or Fuller, who are responsible for the XSX feature set.

I'm not sure where else we can go with this part of the conversation, because it seems that our facts are incompatible here.

Your understanding of the featureset is fairly incomplete based on what we know today. And there hasn't even been a deep dive into how it all works together yet.