llien

Banned

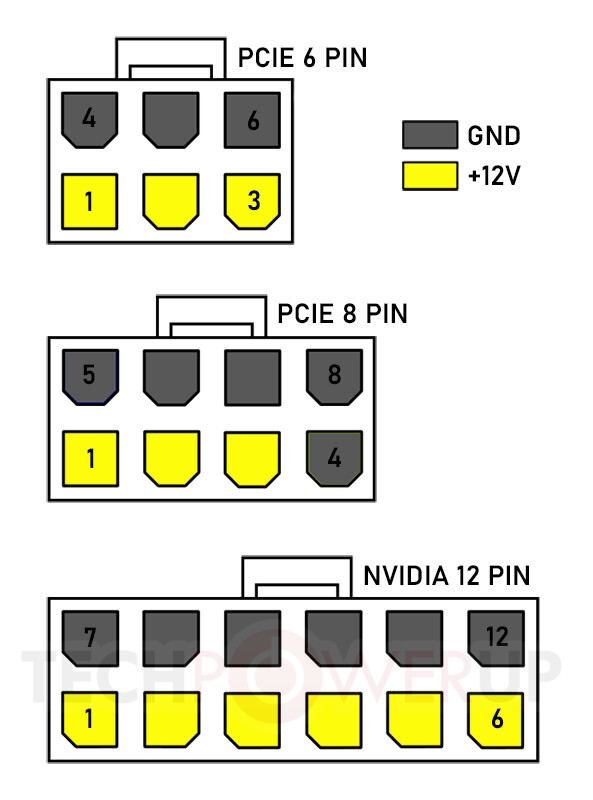

Over the past few days, we've heard chatter about a new 12-pin PCIe power connector for graphics cards being introduced, particularly from Chinese-language publication FCPowerUp, which included a picture of the connector itself. Igor's Lab also did an in-depth technical breakdown of the connector. TechPowerUp has some new information on this from a well-placed industry source. The connector is real, and will be introduced with NVIDIA's next-generation "Ampere" graphics cards. The connector appears to be NVIDIA's brainchild, and not that of any other IP or trading group, such as the PCI-SIG, Molex or Intel. The connector was designed in response to two market realities: that high-end graphics cards inevitably need two power connectors, and it would be neater for consumers to have a single cable than to wrestle with two; and that lower-end (<225 W) graphics cards can make do with one 8-pin or 6-pin connector.

The new NVIDIA 12-pin connector has six 12 V and six ground pins. Its designers specify higher-quality contacts on both the male and female ends, which can handle higher current than the pins on 8-pin/6-pin PCIe power connectors. Depending on the PSU vendor, the 12-pin connector can even split in the middle into two 6-pin halves, and could be marketed as "6+6 pin." The points of contact between the two 6-pin halves are kept level so they align seamlessly.

The connector should be capable of delivering 600 watts of power (so it's not 2*75 W = 150 W), and is not simply a scaling of the 6-pin connector. Igor's Lab published an investigative report yesterday with some numbers on cable gauge that help explain how the connector could deliver a lot more power than a combination of two common 6-pin PCIe connectors.

TPU

So:

Up to 600 watts. Curious what this tells us about Samsung 8nm.

The "it's just so you don't have to manage 2 cables" explanation is obvious BS.

The interesting part is that the connector is rated for 25 insertions. I was shocked at first, but then figured out that existing ones are rated at 30. (The connector is guaranteed not to break if you plug/unplug it that many times.)

The implication for PSUs, cough... Note that just having adapter connectors doesn't cut it, due to the higher currents.
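
To put rough numbers on the "higher currents" point, here is a back-of-the-envelope sketch. It assumes a nominal 12 V rail, an even split of the load across the 12 V pins, and three 12 V pins per 6-pin PCIe plug; those assumptions and the function names are mine, not from the TPU article. Only the 600 W, 75 W and "six 12 V pins" figures come from the quoted text.

```python
RAIL_VOLTAGE = 12.0  # volts, nominal (assumption: no droop, no tolerance)


def total_current(power_watts: float, voltage: float = RAIL_VOLTAGE) -> float:
    """Total current in amps drawn through the connector at a given power."""
    return power_watts / voltage


def current_per_pin(power_watts: float, live_pins: int,
                    voltage: float = RAIL_VOLTAGE) -> float:
    """Current in amps per 12 V pin, assuming the load is shared evenly."""
    return total_current(power_watts, voltage) / live_pins


# New 12-pin: 600 W over six 12 V pins (figures from the quoted article).
new_12pin = current_per_pin(600, live_pins=6)

# Two classic 6-pin plugs: 2 * 75 W, assumed 3 live pins each (6 in total).
two_6pin = current_per_pin(2 * 75, live_pins=6)

if __name__ == "__main__":
    print(f"12-pin @ 600 W: {total_current(600):.1f} A total, "
          f"{new_12pin:.1f} A per pin")
    print(f"2x 6-pin @ 150 W: {total_current(150):.1f} A total, "
          f"{two_6pin:.1f} A per pin")
    print(f"Per-pin current ratio: {new_12pin / two_6pin:.1f}x")
```

Under those assumptions the same number of 12 V pins carries about four times the current (roughly 8.3 A per pin instead of about 2.1 A), which is presumably why the contacts and the cable gauge get specified and a passive adapter on thin wires would not be enough.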
