Pagusas

Elden Member

SAM support for Nvidia isn't anywhere near being done.

It's expected next year, just like AMD's Super Resolution.

So, in terms of RT performance...

The thing is, most of the games currently being benchmarked use ray-tracing implementations that are one and a half to two years old and built on DXR 1.0.

DXR 1.0 isn't the problem in itself, but it requires heavy optimization of data movement to run well on specific hardware, which the developers obviously couldn't do for RDNA 2, since not even a prototype of RDNA 2 existed two years ago.

You can see the results: a pretty big drop in performance for the 6800 XT.

But moving on to Watch Dogs: Legion, where the developers were provided with AMD hardware beforehand so they could optimize, the difference to the 3080 is pretty minor.

And then moving on to Dirt 5, which is currently the only game that is optimized for RDNA 2 and uses DXR 1.1 instead of 1.0.

So we shouldn't judge RDNA 2 RT performance based on older titles with RT solutions from a time when this hardware didn't even exist. Besides, the RDNA 2 architecture itself was always designed with DXR 1.1 in mind.

Mixing them up is not the way to address "those claims"; it's a way to mislead. And, for the not-too-bright individuals: when someone tells you there are only about X pears and Y apples, you are supposed to say how many apples and how many pears there are, not come up with a list of "either a pear or an apple".

No, I didn't. Your post claimed that there are only about twenty games that support ray tracing and only about a handful that support DLSS. So, I addressed both of those claims.

RDNA3 is next year. Bye bye, Nvidia.

I'll eat my hat if RDNA3 arrives next year. RTG has never been on time.

Source for "RDNA3 is next year"?

People DO care about ray tracing, though. Not sure why you are trying to be so dismissive about it. RT is a driving force for a TON of people; the shortages alone dispute what you've just barfed out. You're talking about rasterization, which is super important, but you are talking about competition from YEARS ago, when it mattered more. Rasterization performance hasn't been an issue for NV in most of their cards for a long time, and while yes, it's GREAT that AMD has caught up and even beaten NV in some games, it's not the same selling point it was years ago when AMD was behind. You talk about moving the goalposts while literally moving the goalposts in your own post.

So what's the deal here...

Turing launched over 2 years ago with buggy, noisy ray tracing that tanked performance. Many companies went back to the drawing board to fix RT in the four games available at the time. In some cases, effects were dialed back, and Nvidia had no choice but to use a reconstruction technique to sell RT, because native RT was just not performant... People bought a $1,200 card to play the Quake 2 demo at 1080p 30 fps, a game as old as nails, and they are pretending that's why they spent all this money...

Nobody in their right mind bought Turing to play BFV at sub-60 fps and lower resolutions instead of 1440p or 4K at 60-120 fps... Even now, nobody is buying any card just because of RT... It's a feature that will evolve, but the asking price is way too much right now to justify the much lower performance...

DLSS 1.0 failed; only recently did they improve it with 2.0... How many games are using it? How many games are using RT after 2 years and 3 months? RT is only now entering its introductory phase, because of the consoles... For over two years, it was simply being beta tested by NV fans at a high price...

As of November 2020, AMD delivers rasterization performance good enough to drive 1440p at 120 fps and 4K at 60 fps on ultra settings, and people are still complaining... AMD ditched their blower coolers and engineered some good-looking cards with a top-notch cooling solution, with lower dB and temps than Nvidia in each GPU bracket, yet you still get complaints about AMD's cooling solution, no outright praise for its lower power draw, no praise for its performance per watt, no praise for its superior rasterization performance per bracket... In some cases, the $580 6800 is even beating the $1,500 3090, and people are still complaining, saying these cards should be $100 or $200 cheaper... What world do these people live in? I'm quite convinced the most aggressive Nvidia fans are still running onboard graphics and 750 Tis; they can't afford a $600 AMD card, let alone a $700-1,500 NV card, but they're stanning the hardest...

AMD's cards are performing at the high end. Most of these RT games are old games tuned for NV's RT; in newer games and newer RT games, AMD cards perform better... Some of these benches are even pairing 8700Ks with these new AMD GPUs, for crying out loud, and some are using old NV titles that boost benches and averages in Nvidia's favor... As for the people stuck on the RT and DLSS mantra: expect further optimizations for AMD's RT, and expect further improvements to rasterization performance on these RDNA 2 GPUs, in both new and older games, with updated drivers... Notice how stuck NV fans are on RT and DLSS, for a paltry list of RT games after more than 2 years of RT support...

https://www.digitaltrends.com/computing/games-support-nvidia-ray-tracing/

Most of these games are not even out yet, and a tonne of them are indies and Chinese/Eastern games that most people here will never play, but it's all about RT... Yes, because everybody here who bought a $1,200 2080 Ti or a $1,500 3090 did it to play Amid Evil, and they are still playing BFV and Modern Warfare daily... or even the drab-looking Control... These are the only games that matter...

All I can say is this: if the 6800 is beating the 3090 in some games now, I really want to see benchmarks once the drivers are further optimized, and of course it has to be mentioned that this is AMD's third-tier card... Its top-tier card lands in December. So let them continue to downplay this performance, as they did with the 5700 XT, which was priced in the 2060S bracket yet matched the higher-priced 2070S... The value AMD is giving here is the best in the business. No one cares about RT that is so taxing that you need a reconstruction technique, which has to be implemented by devs and Nvidia on a case-by-case basis...

The difference between AMD fans and NV fans is that NV fans always shift the goalposts... In the Pascal days, rasterization and power draw mattered; more VRAM did not. In the Turing days, RT and DLSS were game changers for 4 games for 1+ year at exorbitant prices... With Ampere, slightly better RT and DLSS 2.0 are the only things that matter... Better rasterization, higher frame rates at native res, lower power draw, better performance per watt, higher clocks and OCs, cooler and quieter cards don't matter anymore... RT in a handful of games is all that matters... Once they have a feature, it's the most important thing... I see that with SAM, AMD is outpacing the 3090, a $1,500 card, by a good margin in many games, yet AMD fans don't even brag about that and call it a game changer, even though it is... How many are really testing with the best gaming CPUs out there, the Ryzen 5000s? Even with SAM, to be fair, we know that not everyone will have an X570 motherboard, but I'd be willing to bet that there are hundreds of thousands of these boards out there, far more than the paltry count of RT and DLSS gamers. So there are many more people out there who can use SAM and see massive benefits now, but no, AMD has no features, you see... The same people who say NV is coming up with its own SAM are the ones saying AMD does not have a DLSS equivalent to boost its RT performance here and now, yet they never mention the massive improvements SAM gives AMD now.

Some of these tech guys are disingenuous at best; the only way they will respect AMD is if fans buy these cards like crazy, just like they did Ryzen... Ryzen always had good performance, but they nitpicked Ryzen too, never forget... I personally expect these cards to do very well. They are clearly the best-value products out there in performance per dollar and per watt; people will see that and make the right decisions, as they have now done with Ryzen... The bell tolls...

With some manual overclocking, ExtremeIT was able to reach a 2750 MHz game clock and a 2800 MHz boost clock, according to the GPU-Z monitoring software. By default, however, the graphics card runs at 2090/2340 MHz, respectively. That overclock failed, as it did not appear to be very stable (multiple display signal losses), so he eventually dialed it back to 2600/2650 MHz. This allowed the RX 6800 XT Red Devil to score 56,756 points in Fire Strike (1080p preset, Graphics score).
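To put those clocks in perspective, here is a quick Python sketch that only does the percentage arithmetic on the figures quoted above (stock 2090/2340 MHz, unstable peak 2750/2800 MHz, final 2600/2650 MHz). It says nothing about sustained clocks under load or about stability beyond what the article reports.

```python
# Clock figures (MHz) taken from the ExtremeIT results quoted above.
STOCK = {"game": 2090, "boost": 2340}
PEAK_UNSTABLE = {"game": 2750, "boost": 2800}
FINAL_STABLE = {"game": 2600, "boost": 2650}


def headroom_pct(stock_mhz: float, oc_mhz: float) -> float:
    """Return how far an overclocked frequency sits above stock, in percent."""
    return (oc_mhz / stock_mhz - 1.0) * 100.0


if __name__ == "__main__":
    for label, oc in (("peak (unstable)", PEAK_UNSTABLE), ("final", FINAL_STABLE)):
        for clock in ("game", "boost"):
            pct = headroom_pct(STOCK[clock], oc[clock])
            print(f"{label:16s} {clock:5s}: +{pct:.1f}%")
```

With these inputs, the final settings work out to roughly +24% on the game clock and +13% on the boost clock.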

It's probably gonna be the same thing all over again with RDNA3 next year or so. The sad part is, anyone who prefers Nvidia automatically gets labeled "TeAm GrEeN", when we simply spend our money wisely to get the best bang for the buck. Most of us are running AMD Ryzen processors (oh, the irony).

Do you imagine Nvidia is taking a 2-year-long vacation in the meantime?

Those clocks are crazy. It's so cool that it can clock so high. Looking forward to seeing some of the crazy OC setups.

The AIB versions are going to be MONSTERS! Let's hope we get PPT up and running for the reference cards too, so we can stress ours in the same way.

PowerColor Radeon RX 6800 XT Red Devil tested - VideoCardz.com

It appears that the first custom Radeon RX 6800 XT has been tested ahead of the official embargo. It appears that a YouTube channel called ExtremeIT got access to a sample of the PowerColor Radeon... (videocardz.com)

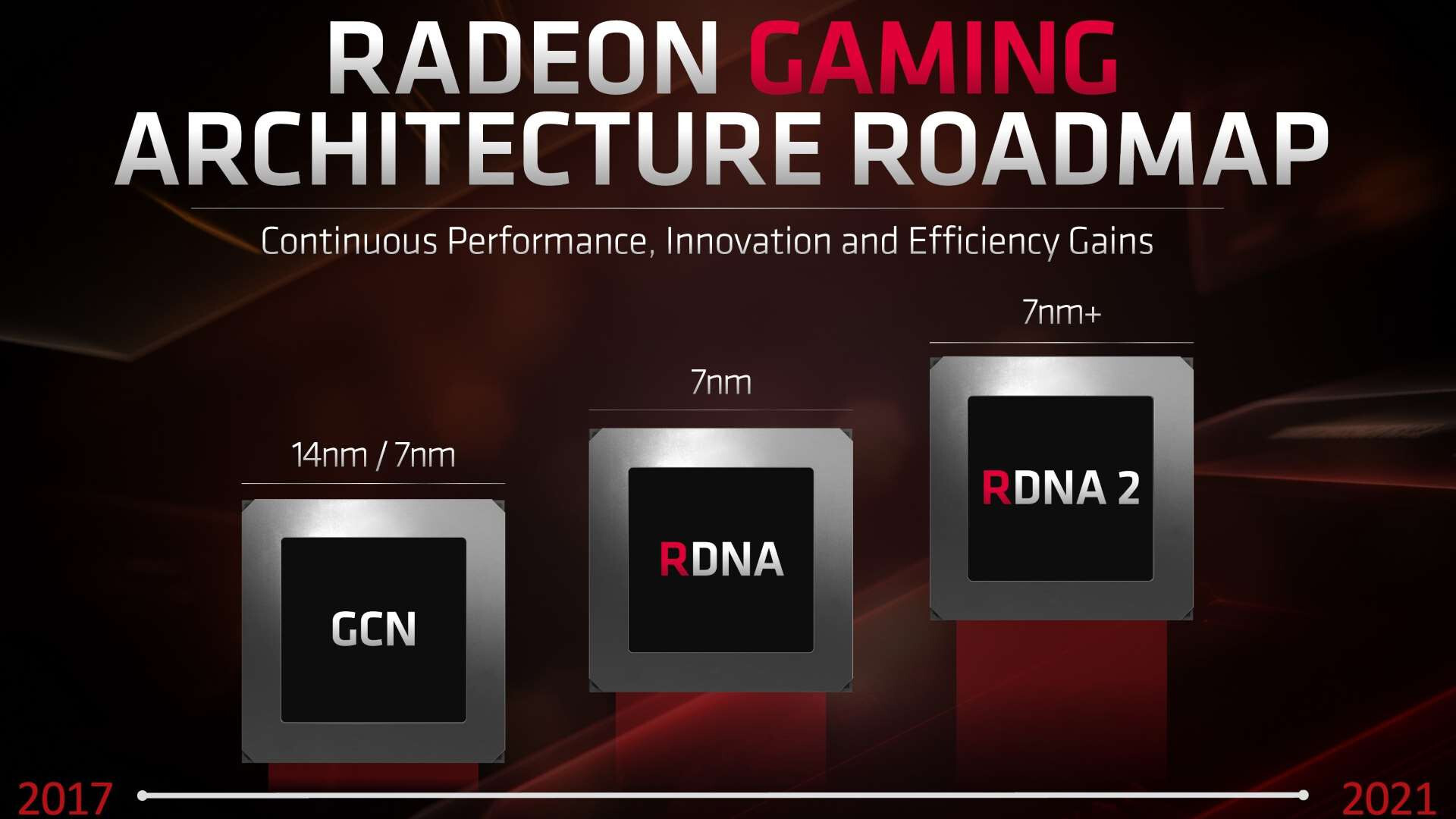

RDNA2 is a huge leap over RDNA1, and AMD has the same plans for another big leap with RDNA3. Their leap is bigger than Nvidia's from the 20 series to the 30 series, and Nvidia had a node shrink from 12nm to 8nm.

I saw someone running over 2.6 GHz yesterday, which would have been crazy to imagine a year or so ago. In 5 years, I wouldn't be surprised if GPUs have a base clock sitting right at 3 GHz or a little lower.

AMD has already said RDNA3 will be another big leap. It might also be on 5nm.

AMD had a big leap from the 5700 XT because that was a slow card compared to Nvidia's. There will not be another jump like that for them. Do you think you can double your performance every year in graphics cards? They had room on the node this time because the 5700 was so unbelievably slow compared to Nvidia. The next release they make is gonna be a normal one, 20-30% extra.

They can if they move to 5nm. Anyway, the impressive part is improving performance per watt by 50%+ on the *same* node. Accounting for node advantages, Nvidia's performance per watt has remained basically unchanged since 2018.

You are posting this.

In 2020.

After the RDNA 2 and Zen 3 releases...

AMD has already said RDNA3 will be another big leap. It might also be on 5nm.

RDNA2's leap was 53%, and they are aiming for the same with RDNA3.

The interview discussed the new levels of efficiency introduced with RDNA 2, which saw roughly a 53% improvement over the original RDNA. It sounds like more of the same is in the works for RDNA 3. "So why did we target, pretty aggressively, performance-per-watt [improvements for] our RDNA 2 [GPUs]. And then yes, we have the same commitment on RDNA 3," Bergman said.
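Since the thread argues over whether another jump like this is realistic, here is a rough Python sketch of how the claimed efficiency gains would compound. It takes the 53% figure from the interview and assumes, purely for illustration, that "the same commitment" for RDNA 3 means about +50% performance per watt (the target other posters cite). It models performance per watt only, not raw performance.

```python
def compound(*gains: float) -> float:
    """Combine successive relative improvements multiplicatively (0.53 means +53%)."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total


RDNA2_OVER_RDNA1 = 0.53  # perf-per-watt gain quoted in the interview
RDNA3_TARGET = 0.50      # ASSUMPTION: "the same commitment" read as roughly +50%

if __name__ == "__main__":
    print(f"RDNA 2 vs RDNA 1: {compound(RDNA2_OVER_RDNA1):.2f}x perf/watt")
    both = compound(RDNA2_OVER_RDNA1, RDNA3_TARGET)
    print(f"RDNA 3 vs RDNA 1, if the target were met: {both:.2f}x perf/watt")
```

If both figures held, RDNA 3 would land at roughly 2.3x RDNA 1's performance per watt; that is arithmetic on stated targets, not a prediction.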

Something called a pandemic happened. Nvidia put out a press release in March that pretty much confirmed a delay to their graphics cards.

Big Navi should have been out in summer.

There is no reason to doubt AMD under Lisa Su.

AMD can say anything, and it seems the journalist was guessing that they're aiming for the same with RDNA 3. That's nothing, just PR talk. Regardless, Nvidia will be on 5nm as well. It's gonna be some intense competition in the near future, that's for sure.

Why is the last decade important now? Lisa Su has changed the way AMD works.

I never said they didn't have a (valid) excuse this time around, but they were never on time in the last decade.

There is so much wrong with this statement, I don't even know where to start.

AMD had a big leap from the 5700 XT because that was a slow card compared to Nvidia.

And you know that how? At this point you're just spreading FUD. A node shrink alone can give them quite a big jump.

There will not be another jump like that for them.

Not creating a die bigger than the 5700 XT's was a business choice. They would have been able to, but they knew it would not be viable for the market at the time in terms of power consumption. Maybe they should have, since all of you apparently don't care about power consumption at all...

Do you think you can double your performance every year in graphics cards?

You have zero idea what you're talking about. The 5700 series GPUs have the same IPC as Turing, as proven by ComputerBase.de.

They had room on the node this time because the 5700 was so unbelievably slow compared to Nvidia.

Maybe. But their target is still another 50% performance-per-watt improvement, and they don't often miss their targets.

The next release they'll make is gonna be a normal one, 20-30% extra.

What I remember is that Lisa Su, the CEO, promised both this year.

Big Navi should have been out in summer.

I don't think launching first makes sense, by the way.

When did they launch before Nvidia?

That is one way to read the chart. I'd say it's safe to say RDNA 3 is sometime between 2021 and 2022.

The roadmap shows RDNA3 before 2022 begins.

I'm not dismissive about RT. As I said, RT will evolve this gen; did you miss that? The reason it will is that all the players are now on board. For over 2 years, RT did not evolve and stayed stagnant; it was not penetrating the core base. Now it will, because the best developers, pretty much all developers, are on board now... Technology has never been just about the hardware; it's really about the software and who can make RT shine, creating games that make the technology look appealing and worthwhile... Bet your bottom dollar right now: it will be Polyphony Digital, ND and Santa Monica that make people take notice of RT. So it doesn't matter how many RT games you've seen before; none has been revolutionary with the tech. It doesn't matter how expensive the PC GPU is or how many RT cores it has; it's really all irrelevant compared to who can make the technology look the most impressive through software/games...

Both series of cards from AMD and NV can live in the same market. There's plenty of room for everyone. No need to try and denigrate NV's tech because it doesn't fit your narrative. AMD is making great moves, and a rising tide raises all ships.

And WHO "nit-picked" Ryzen? I don't remember that being the case at all, in fact, I remember it being that Ryzen was a big turnaround for AMD and received a ton of praise. Not sure why you have this victim complex in regards to AMD when it just plain isn't the case. They've done insanely good work in both the CPU and GPU market these last few years.

It's funny that you raise the point of tech guys being disingenuous, because you couldn't be more obvious if you tried.

You're being too dismissive of RDNA 2 here. The 6800 has 50% more shader cores, has about a 20% higher clock speed, and achieves about the same power consumption on the same node.
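The arithmetic behind that point can be sketched in Python. It assumes, as a simplification, that throughput scales linearly with shader count times clock speed, and it reads the comparison as 6800 vs 5700 XT, as the surrounding posts imply; memory bandwidth, caches and per-clock differences are ignored, so treat the result as a rough ceiling rather than a measured figure.

```python
def naive_perf_per_watt_gain(shader_ratio: float, clock_ratio: float,
                             power_ratio: float) -> float:
    """Estimate relative perf/watt as (shaders x clock) / power.

    ASSUMPTION: throughput scales linearly with shader count and clock speed;
    memory bandwidth, caches and per-clock (IPC) changes are ignored.
    """
    return shader_ratio * clock_ratio / power_ratio


if __name__ == "__main__":
    # Inputs from the post: ~50% more shaders, ~20% higher clock, ~same power.
    estimate = naive_perf_per_watt_gain(1.5, 1.2, 1.0)
    print(f"Naive perf/watt ceiling: {estimate:.2f}x")
```

With the post's inputs this gives 1.5 × 1.2 ≈ 1.8x; since linear scaling is an idealisation, it is best read as an upper bound.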

And of course the AMD hater has to react to your post with a fire. Says a lot about his knowledge.

Watch Dogs has RT support for AMD? News to me.

The problem with the current mindset of NV fans is that they are dismissive of AMD's RT performance, when none of the older games tested, BFV etc., have been optimized for AMD's brand of RT; they have been updated and optimized for NV's proprietary RT tech for over two years now. Talk about being fair in logic and reasoning; it's something they are devoid of... Allow AMD's RT to evolve, or allow developers to utilize its strengths and hybrid technology... Even now, we are seeing that AMD's tech is more performant than Ampere with RT enabled in recent games like Dirt and Watch Dogs. Yet the same people who use RT with DLSS to show better RT performance on Nvidia cards, even though AMD has no DLSS equivalent yet, are the same people trying to bury AMD's RT so early, before it even shows itself... At least let things play out. NV had two years with the technology, and if AMD's performance in Dirt and Watch Dogs, or even on the consoles in Spider-Man, is any indication, then what AMD has here is very potent and not as ho-hum as some would have you believe... Of course, they have some driver optimizations and improvements to do for these new cards, for both rasterization and RT. Their Super Resolution is coming soon; people are moving like they want to kill off AMD's hype before it gains momentum... If these people are so enamored with NV, they should just buy NV cards like they've always done, but don't pretend for a minute that their arguments are sound or that they care about better prices and competition...

NV sells their overpriced cards, from Turing all the way to Ampere, and nobody complains; people bought NV cards at double what AMD is asking now, with much worse rasterization and RT performance. Yet they want to complain about a $650 card that beats the 3090, a $1,500 card, in games, and that also has better RT performance in Dirt and Watch Dogs... You do the math and see who is being unfair here. It's the same attitude Intel fans had when Ryzen dropped... "Gaming performance can't touch Intel"; they downplayed every area where Ryzen was strong, but look at things now... Performance per watt is the biggest mover and shaker in this industry; you'd best learn that, and learn it fast...

All personal attacks... No counterarguments, no substance. I guess we're done here.

Are you on drugs, brother? There's always a limit, at any given point in history, to what the tech allows you to get at that time. Did you just find out what a graphics card is today? Do you think AMD just got the magic juice from Aladdin and they'll just double its performance every 6 months? All while Nvidia is just running around panicked, not knowing what to do? Relax, dude. And maybe stop posting so much bullshit in a single post; I can't be arsed to correct every single line where you're wrong.

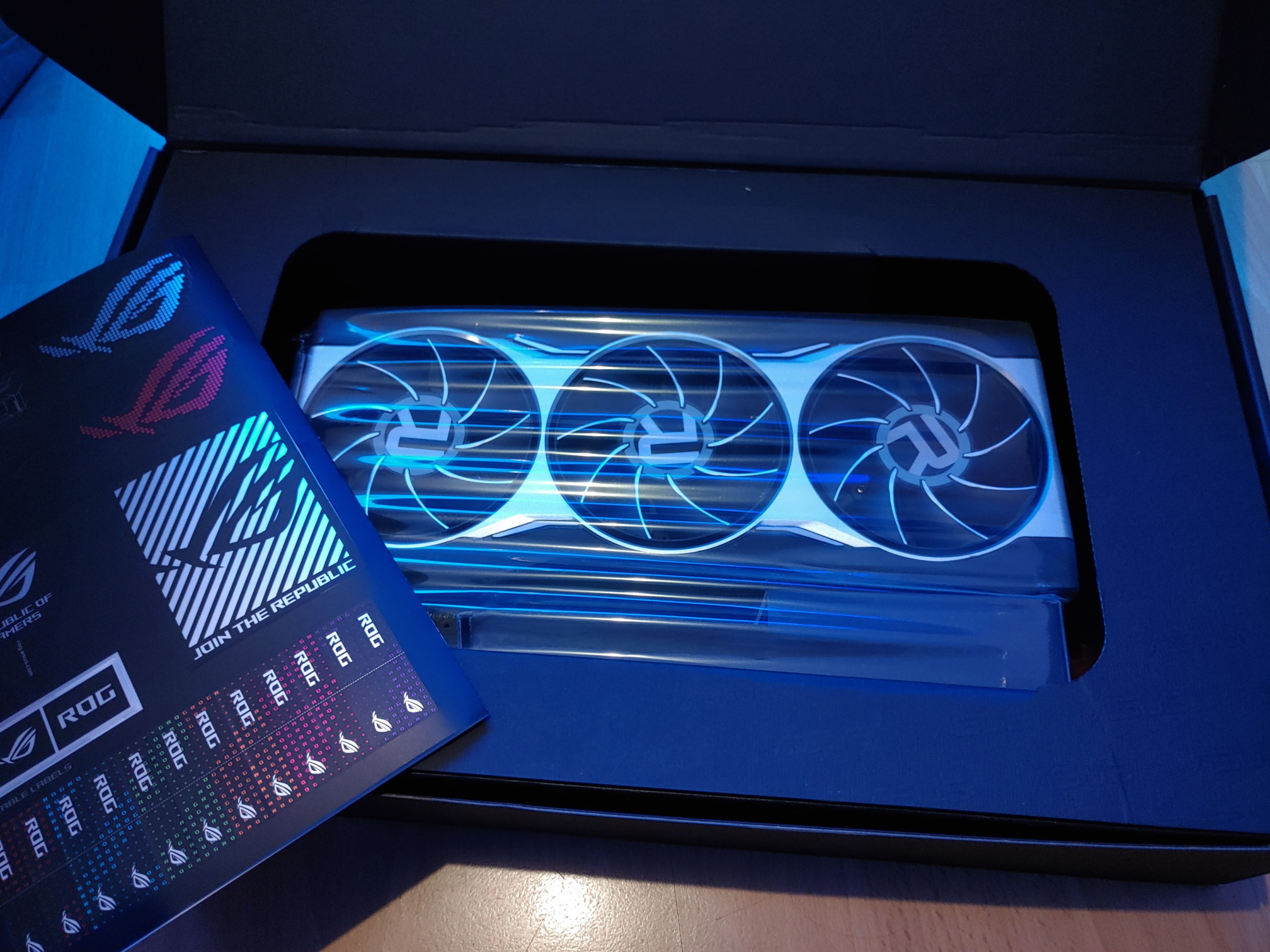

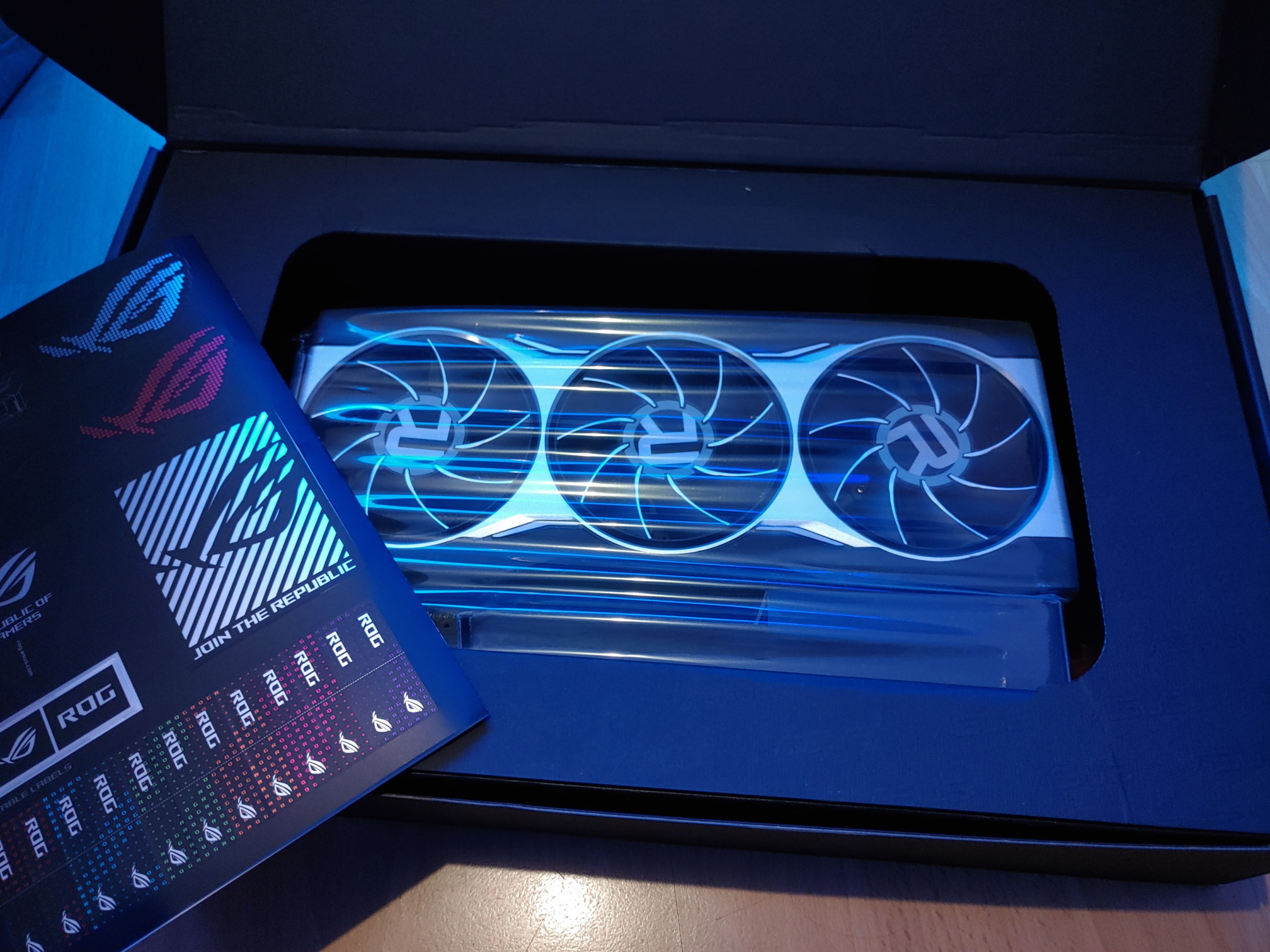

At least something arrived today.

Welcome, SMALL NAVI.

The build quality is much better than it appeared in the pictures. It's full-on aluminium, which makes it heavier than my RTX 3080 Gaming OC.

If the 3080 was the 6800 XT, and the 6800 XT was the 3080, RT would be seen as a gimmick, and AMD's card would be slammed for having higher power consumption than Nvidia's. Oh, and it would also be slammed for having only 10GB while the competition has 16GB.

Performance per watt means jack shit to most people. It's what's pretty on the screen that drives the industry, not ppw. You could have the most efficient card ever made, but if it doesn't push graphical boundaries, most people just won't give a shit.

First of all, lol. Second of all, yep, Nvidia is no longer making any new graphics cards. The 3000 series is the last.

RDNA3 is next year. Bye bye, Nvidia.

Some people just don't want competition in the industry. They'd rather have 20-series-style jacked-up prices and minimal gains.

If the 3080 was the 6800 XT, and the 6800 XT was the 3080, RT would be seen as a gimmick, and AMD's card would be slammed for having higher power consumption than Nvidia's. Oh, and it would also be slammed for having only 10GB while the competition has 16GB.

Nobody cares indeed, as long as it's nVidia. If it's AMD, suddenly the needle in the haystack becomes the elephant in the room.

Some of you AMD guys sound like battered spouses. How/why are you guys even coming up with this shit? This simply isn't true, and you are trying to create a false narrative. Not even sure why.

If the 3080 was the 6800 XT, and the 6800 XT was the 3080, RT would be seen as a gimmick, and AMD's card would be slammed for having higher power consumption than Nvidia's. Oh, and it would also be slammed for having only 10GB while the competition has 16GB.

Nobody cares indeed, as long as it's nVidia. If it's AMD, suddenly the needle in the haystack becomes the elephant in the room.

Well, well, whatever you deem moves the industry. Surely AMD is in pole position to have that cake and eat it...

It's what's pretty on the screen that drives the industry, not ppw. You could have the most efficient card ever made, but if it doesn't push graphical boundaries, most people just won't give a shit.

Let's not be disingenuous here; surely we can argue and also be truthful... The 5700 XT never went for the 2080 Ti's crown... The 5700 XT was in the 2060S bracket on price, but guess what, it is equal in performance to the 2070S, a $500 card that is $100 more expensive... It is only with RDNA 2 that AMD is going for the whole ladder... The card that goes against the $1,500 3090 comes out in December (the 6900 XT), and its little brother, the 6800 XT, is already beating NV's top-tier card in a few games with early drivers; that's the reality... When AMD is in the fray, they do more than compete; they give you a bit more than their class at a lower price... $1,000 vs $1,500 come December... I guess upscaling tech and better RT in a few old games are why you would pay the extra $500; I'm braced for the excuses... With Nvidia, it's forever trying to justify why you should pay more for trivialities, whilst with AMD it's why you should pay even less than what's already fair for performance way above its class... It's the same song and dance...

Looks like in Cyberpunk 2077, a 6800 XT would be able to play at 1080p with RT Ultra, since a 3070 apparently needs DLSS to get playable framerates at 1440p.

That slide doesn't mention anything about RT with AMD. I'm pretty sure AMD RT support is gonna be added later, probably around/after its "next-gen" update for consoles.

Cyberpunk 2077 System Requirements Revealed: GeForce RTX 3070 Recommended

Learn which GPUs you will need to enjoy the ultimate Cyberpunk 2077 experience across resolutions, and check out our new behind-the-scenes RTX ON video featuring the game's developers at CD PROJEKT RED. (www.nvidia.com)

Well well, whatever you deem moves the industry. Surely AMD is at pole position to have that cake and eat it....

Performance per watt: a 10 TF console is in pole position (efficiency and better design matter, I'll give you that; that's why the PS5 is so performant, and it's the same reason RDNA 2 is such a rasterization beast)... Give its RT time to show itself; it just launched... Did you see NV's RT performance on day 1 of Turing? Yet, looking in from the outside, there are signs of very potent hybrid RT technology from AMD, if Dirt and Watch Dogs have anything to say about it... So yes, performance per watt drives frames; it drives GPU performance at runtime at lower power draw, higher clocks, et al...

As for what's pretty on screen driving this industry, you are damn right about that. As I said to you, it will be AMD-based hardware that drives the prettiest games in the next 7 years... PD, Santa Monica, Naughty Dog, Sucker Punch... These are the names you will see on graphics awards, setting the standard for awe on your screen... 2/2... AMD wins...

...

It is 100% true. Did you take a look at this thread...? I said it a few pages back, and I'll say it again...

Reading through this thread, you would think all reviews would read like:

- "AMD fails to compete again"

- "RDNA2 a disappointment"

- "6800XT Review: nVidia still uncontested"

Instead, reviewers have titles like this:

- TechPowerUp: "AMD Radeon RX 6800 XT Review - NVIDIA is in Trouble"

- The Verge: "AMD RADEON RX 6800 XT REVIEW: AMD IS BACK IN THE GAME"

- TweakTown: "AMD Radeon RX 6800 XT Review: RDNA 2 Totally Makes AMD Great Again"

- PCWorld: "AMD Radeon RX 6800 and RX 6800 XT review: A glorious return to high end gaming"

- Forbes: "AMD Radeon RX 6800 Series Review: A Ridiculously Compelling Upgrade"

- PCPer: "AMD RADEON RX 6800 XT AND RX 6800 REVIEW: BIG NAVI DELIVERS"

- HotHardware: "Radeon RX 6800 & RX 6800 XT Review: AMD's Back With Big Navi"

How long have you been around? Because there is always an excuse to dismiss the AMD cards, but when it's the other way around, those same excuses are dismissed. Take the power consumption one. The 5700XT was actually slammed for that by nVidia fans. Everyone was vouching for RT, despite everyone also agreeing the RTX cards were hugely overpriced.

Normally, you can't have it both ways... But as an nVidia supporter, apparently you can... Even in this thread... A 0.3% advantage for the RTX 3080 is a win for nVidia, but a 0.3% advantage for the 6800XT is a tie...

If the 3080 was the 6800XT, and the 6800XT was the 3080, RT would be seen as a gimmick, and AMD's card would be slammed for having higher power consumption compared to nVidia. And oh, they would be slammed also for having only 10GB while the competition has 16GB

Nobody cares indeed, as long as it's nVidia. If it's AMD, suddenly the needle in the haystack becomes the elephant in the room.

Seriously, you can't make this up. Might as well give these guys participation trophies to make them feel better at this point. If some of these fanboys aren't being paid to be white knights for AMD, then I have no words.

Some of you AMD guys sound like battered spouses. How/why are you guys even coming up with this shit? This simply isn't true, and you are trying to create a false narrative. Not even sure why.

Where exactly does it disagree? Because that is exactly how things went.

Your post literally disagrees with your other post:

It's kind of hard to ignore them when they are in a 6800 review thread making the cards look a lot worse than they really are.

And even then, you say Nvidia fans... Well... they're fanboys, what do you expect? Why listen to them anyway? Are you upset about the fanboys in the AC: Valhalla threads calling "wins" for the PS5 over literally milliseconds of torn frames too? It's funny how hypocritical and abused you come across, creating this false narrative of the Nvidia boogeyman.

You just pointed out some pretty important wins for AMD that gamers do care about. Nice.

DonJuanSchlong said: If you only care about rasterization, power consumption, and clock speeds, you go with AMD.

You can't say "if it was the other way around it'd get shit on" and then point to 7 reviews praising the release. Do you not see how that doesn't line up with what you've said?

Where exactly does it disagree? Because that is exactly how things went.

I think the pricing is what popped the bubble on this; if AMD's cards were a little cheaper, it would have been a slam dunk.

"I have seen stories that say that Cyberpunk 2077 ray tracing will only work on NVIDIA GPUs. Why is that?"

That slide doesn't mention anything about RT with AMD. I'm pretty sure AMD RT support is gonna be added later, probably around/after its "next-gen" update for consoles.

I was talking about the sentiment in online forums and among graphics card users, not reviewers. But since you brought it up, even reviewers are sometimes not exempt from this.

You can't say "if it was the other way around it'd get shit on" and then point to 7 reviews praising the release. Do you not see how that doesn't line up with what you've said?

That is a more than reasonable stance, and if you go back a couple of pages, you'll see that many here do not have that stance but basically try to portray these cards as absolute garbage.

I'm super happy AMD is bringing the thunder. I have more of a vested interest in them than probably most people on here, and even I don't plan on jumping on board with the 6800 XT. It's a stellar card, and I like seeing how competitive it's being, but at the end of the day, what matters to me is the performance (and yes, RT is a big reason for me too) in the games I want to play. AMD's RT solution is behind, no need to pretend it's better than it is, but even that aside, RT isn't supported in a lot of games to begin with, and if that's not something that's important to people, they have a stellar alternative with AMD's cards. The market is so huge that there's plenty of room for both cards and for everyone to be happy with their purchase. I'm super interested in seeing how crazy some OCs will be with AMD's cards, as I think it'll be fun to see some of the cooling solutions people come up with. I don't need to be on either "team" to enjoy seeing both be successful.

People have always cared about native rasterisation and power consumption.

You can't say "if it was the other way around it'd get shit on" and then point to 7 reviews praising the release. Do you not see how that doesn't line up with what you've said?

----------------------------------------------------------------

Which gamers? I'm not seeing people universally praising rasterization/performance per watt as some sort of champion in the GPU wars. Most simply don't give a shit about it. They really don't. Clock speed? Yeah, sure. I'm excited to see where that'll end up, but other than that... nah.

To some degree I think you are right, but we've also not really seen AMD bring the fire like this in a long time, so they were always kind of forced to be the cheaper alternative. Now they are competing pretty much neck and neck and even offer better performance/price ratios than NV in some cases. They are still cheaper, even if it's not the price difference we are used to. It's gonna be interesting to see where everything falls when the 3060 Ti launches. It's an exciting time to be a PC gamer.