
AMD Radeon RX6800/RX6800XT Reviews/Benchmarks Thread |OT|

- Thread starter AquaticSquirrel


Ascend

Member

Allocation =/= usage.

You honestly think that I don't know that...?

Also, as evidenced already, having more VRAM doesn't necessarily make a difference when it's slower.

More VRAM doesn't help with anything, but if the capacity is exceeded, performance crashes hard. That's how you determine whether a card is running out of VRAM, and it's how they tell the difference between allocation and usage.


waylo

Banned

Oh fuck off. Dude tried to act smart and talk shit about me owning a 2060 over a 5700 when the latter didn't even fucking exist when I bought the card. Of course I'm going to respond. Sorry I took time away from you licking AMD...I mean Lisa Su's asshole.

Would you guys mind not sharing which cards you bought because of what store was open when please?

I doubt anyone in this thread cares.

llien

Banned

Oh fuck off. Dude tried to act smart and talk shit about me owning a 2060 over a 5700 when the latter didn't even fucking exist when I bought the card. Of course I'm going to respond. Sorry I took time away from you licking AMD...I mean Lisa Su's asshole.

You were told that if the 6800XT (about 3070/2080Ti levels of RT even in most green titles) is not viable for RT, neither is the 2060.

You brought the 5700 in due to a lack of mental capacity to make a coherent argument.

Oh, it still looks like people in this thread care about what GPU you buy as much as they care which toilet paper you buy.


Ascend

Member

Dude tried to act smart and talk shit about me owning a 2060 over a 5700 when the latter didn't even fucking exist when I bought the card. Of course I'm going to respond.

Two things.

1) I thought you meant the 2060S, so that's my mistake. Not that it really changes anything... Because...

2) Instead of the 5700 cards, I could've said the GTX 1070 Ti or even the GTX 1080. The same would apply. At one point in 2018 you could have bought a cheap 1070 Ti for under $280, and the Asus ROG model was about $380, only $30 above the RTX 2060's MSRP, for 8GB instead of 6GB at the same performance. The main thing missing would be RTX.

The point was that you said RT on these AMD cards is not viable, and yet you bought an RTX 2060 over the non-RTX alternatives that you could find at the time within the same price range, despite it generally being slower at RT than these 6800 series cards.

So.... Which is it?

a) You did not care about RT but the 2060 was simply a good deal, but now you suddenly do care about RT.

or

b) You did care about RT and therefore went with the 2060, but now the AMD implementation being generally faster than the 2060 is not good enough for you.

You don't have to answer by the way. Either way, it's not favorable for you. I suggest we leave things like this and go back on topic, which is reviews and benchmarks of the 6800 series cards.

In other news;

It is worth repeating that the Vulkan implementation is cross-platform, cross-vendor and hardware-agnostic, and it can accelerate raytracing on either GPU compute or dedicated RT cores.

Khronos releases final version of Vulkan Ray Tracing spec

Hopes to become the cross-vendor, cross-platform standard for raytracing acceleration.

And;

Video Index:

00:00 - Welcome Back to Hardware Unboxed

01:50 - Is Cost Per Frame Pointless with Limited Designs?

04:48 - Did We Get Coil Whine with the 6800 series cards?

05:40 - Did Nvidia Squander Their Pascal Lead?

08:26 - Should AMD Be Competing with AIBs with Reference Design?

14:55 - Is This a Paper Launch?

**25:04 - How is AMD's Ray Tracing Performance Acceptable?**

50:38 - Can We Flash 6800XT BIOS on 6800?

52:57 - Outro

The bolded one is the most important section.


Rikkori

Member

They're not wrong when they say RT gets lost during gameplay. I played a bit of Control with it on, and honestly, when you're actually running around it doesn't stand out at all, to the point that I'm thinking I'll just turn it off, except maybe for transparent reflections. If I'm not slow-walking everywhere examining surfaces, it just doesn't stand out, and at that point, well, what's the point? I'm not shooting a DF video to shill RT.

Ironically, though, I will disagree with them on their stance on RT shadows. I thought they actually worked better in practice in SotTR, where enabling them adds a certain depth and photo-realistic shading to the scene and characters. I also initially thought they weren't that interesting, considering how good shadows already are with rasterisation, but I changed my mind as I played more of it. It's kinda the same deal with GI: in Metro Exodus, RTGI lifts the game from mostly uninteresting-looking to near-photorealistic, at least for characters, because of the great depth it gives to the overall shadowing, and it certainly helps compensate for the game's otherwise pathetically low-quality textures and geometry. I would certainly put GI above any other use for the tech, though I think in Exodus' case the game also suffers from weak GI otherwise, because we know from CryEngine and UE5 that you can do much, much better without necessarily resorting to RT.

For me the priority order remains the same, though: textures and geometry, plus resolution, are by far the most important, always-noticeable things about game visuals. Bringing secondary effects such as shadows and reflections up to higher quality is not nearly as noticeable or important to me. I think what a lot of comparisons miss with RT is that it doesn't matter how great the effect looks in side-by-side comparisons or when you're slow-walking around in the game; what's crucial is how much it actually changes the game in normal gameplay, when you're not focused on comparing pixels. That's why RT in BF V ended up being such a huge fail and why RT got off to such a bad start: it was an entirely useless addition. GI in Exodus and reflections in WD:L are imo a much stronger case for the tech, but such implementations are too few and far between. And it goes without saying that path tracing is on a whole 'nother level, but that's not something we can expect in AAA games this new gen.


MadYarpen

Member

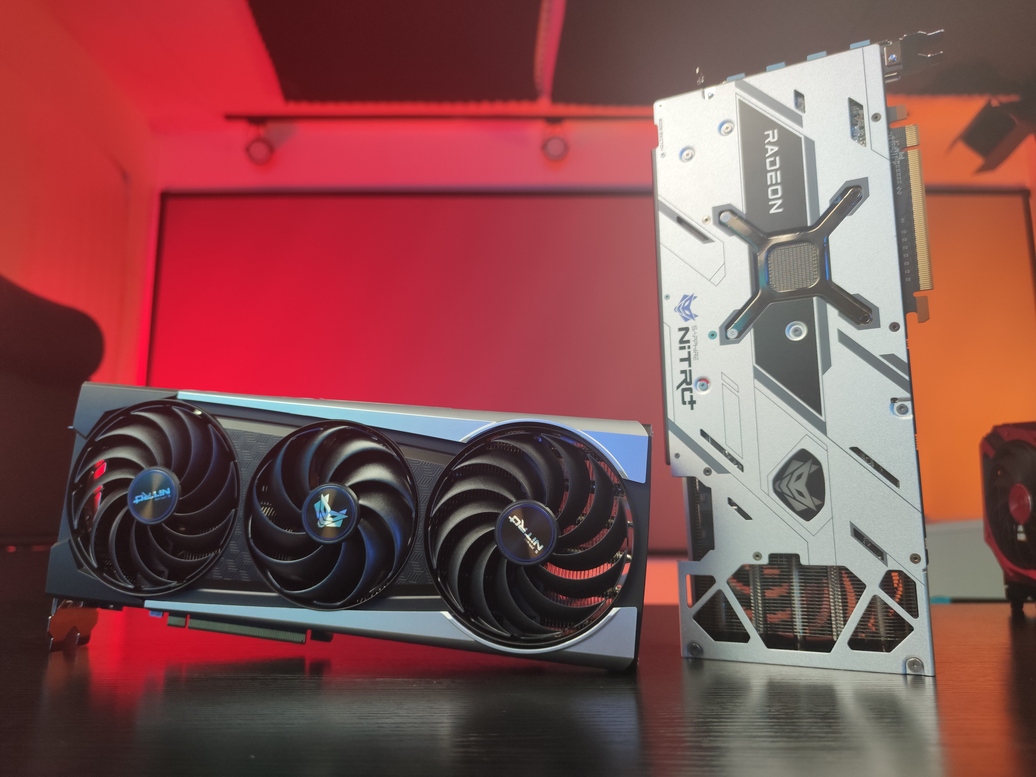

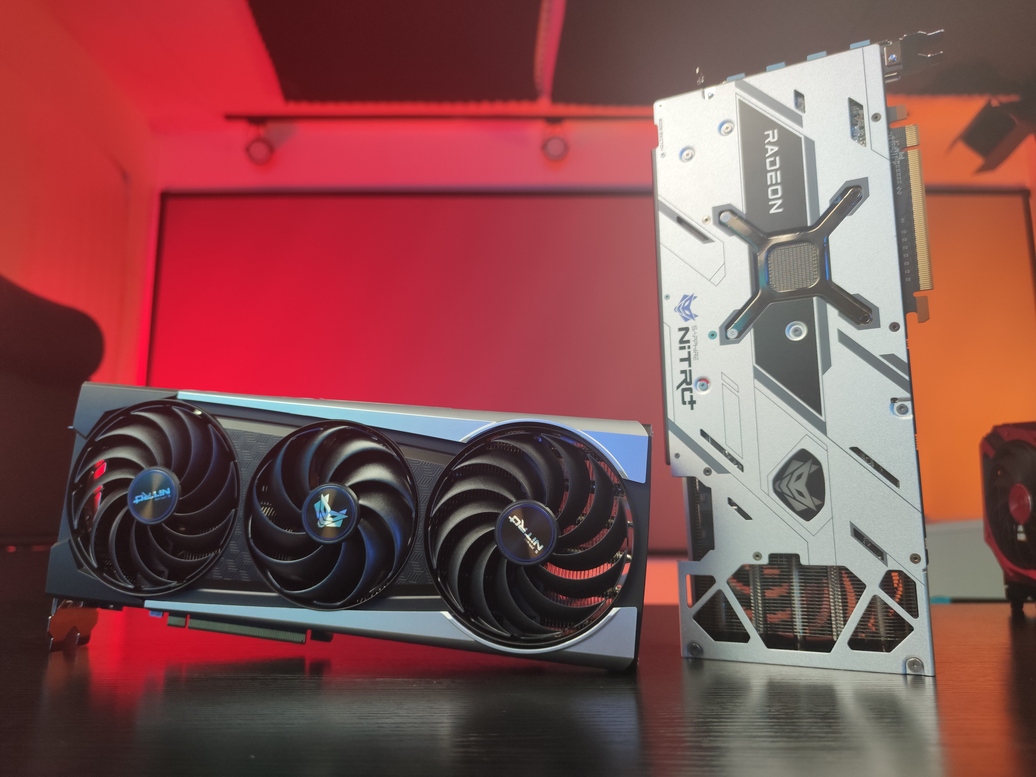

Sapphire Radeon RX 6800 (XT) NITRO+ pictured up close - VideoCardz.com

Photos of the Radeon RX 6800 NITRO+ graphics cards have been shared by reviewers. SAPPHIRE Radeon RX 6800 (XT) NITRO+ First photos of the Sapphire RX 6800 NITRO+ review samples have been shared on Bilibili. It appears that one of the Chinese reviewers received two cards from the manufacturer...

Looking good. I'll try to grab a Pulse edition though. Or whatever there is, haha.

Ascend

Member

My god this thing is beautiful;

Sapphire Radeon RX 6800 (XT) NITRO+ pictured up close - VideoCardz.com

Photos of the Radeon RX 6800 NITRO+ graphics cards have been shared by reviewers. SAPPHIRE Radeon RX 6800 (XT) NITRO+ First photos of the Sapphire RX 6800 NITRO+ review samples have been shared on Bilibili. It appears that one of the Chinese reviewers received two cards from the manufacturer...

videocardz.com



Kenpachii

Member

A voice of reason amidst the drama;

Dude has no clue what he's doing.

His whole raytracing story makes no sense. Whenever raytracing performance moves up massively, next-gen games will be built around that. PC never gave two shits about consoles when it comes to these types of settings.

His 3090 rambling for minutes makes zero sense. You're better off buying a 3080, a 4080 and a 5080 for the same price as a 3090. There's absolutely no point in getting one.

VRAM allocation doesn't work the way he thinks it does in his examples.

Quit watching here.


Ascend

Member

His whole raytracing story makes no sense. Whenever raytracing performance moves up massively, next-gen games will be built around that.

Sure. But the current cards will be too slow anyway to take advantage of that level of RT usage.

PC never gave two shits about consoles when it comes to these types of settings.

That's because DX11 was very high level in comparison to consoles. DX12 and Vulkan can potentially change this.

His 3090 rambling for minutes makes zero sense. You're better off buying a 3080, a 4080 and a 5080 for the same price as a 3090. There's absolutely no point in getting one.

He was exaggerating with the 3090. But obviously he's saying you're better off buying multiple generations of 80-class cards rather than spending that money on a single 3090 that is barely faster than the 3080...

VRAM allocation doesn't work the way he thinks it does in his examples.

Quit watching here.

As for VRAM, it's debatable whether 10GB will be a limit during the lifetime of the 3080. But I would be surprised if the 3070 isn't VRAM-limited within two years. Remember, this is a card with 2080 Ti-class performance. Imagine if the 2080 Ti had only 8GB of VRAM.

Bolivar687

Banned

Listening to that Hardware Unboxed video breaking down and thinking through what Ray Tracing means for this generation of cards, going through all its aspects for 24 minutes straight, kinda makes me feel like the Nvidia Gaffers have essentially just been shitposting the hell out of these threads for the last few months. The idea that Nvidia is your only option for the next 1-2 year cycle of games is such an absurd proposition that I almost can't believe how seriously it's been posted around here. It's not just that such a small portion of games even have it, or that an even smaller number use it to make a tangible difference, but that they're ignoring the 16GB vs. 10GB difference while at the same time somehow talking about "future proofing."

Insane.


Kenpachii

Member

Sure. But the current cards will be too slow anyway to take advantage of that level of RT usage.

That's because DX11 was very high level in comparison to consoles. DX12 and Vulkan can potentially change this.

He was exaggerating with the 3090. But obviously he's saying you're better off buying multiple generations of 80-class cards rather than spending that money on a single 3090 that is barely faster than the 3080...

As for VRAM, it's debatable whether 10GB will be a limit during the lifetime of the 3080. But I would be surprised if the 3070 isn't VRAM-limited within two years. Remember, this is a card with 2080 Ti-class performance. Imagine if the 2080 Ti had only 8GB of VRAM.

PC settings evolve with the hardware that's available. That's why it doesn't really matter what the optimisation does or doesn't do: PC games will just crank the settings up even further, to the point where performance is crippled again.

Listening to that Hardware Unboxed video breaking down and thinking through what Ray Tracing means for this generation of cards, going through all its aspects for 24 minutes straight, kinda makes me feel like the Nvidia Gaffers have essentially just been shitposting the hell out of these threads for the last few months. The idea that Nvidia is your only option for the next 1-2 year cycle of games is such an absurd proposition that I almost can't believe how seriously it's been posted around here. It's not just that such a small portion of games even have it, or that an even smaller number use it to make a tangible difference, but that they're ignoring the 16GB vs. 10GB difference while at the same time somehow talking about "future proofing."

Insane.

Don't worry, I have been on this rollercoaster a few times already. When those cards start to have VRAM issues and people start shouting "wtf, shit optimized game doesn't even run well on a 3080 and 3070", they will soon realize it also doesn't run well in the next title, and the one after that, and then realize it's their shitty card that limits them.

But luckily you will have lots of people coming out of the woodwork to say "well, it's 2 years old, what did you expect? It's perfectly normal" when their 3080 sits at medium settings while the 6800 XT sits at ultra.

That 6800 XT, however, just sits there laughing and plays their games without effort.


BluRayHiDef

Banned

No compromise: RTX 3090, but terrible value

RT at the cost of likely having to lower settings in the future due to VRAM: 3080

Large amount of VRAM at the cost of potentially having to lower RT settings in the future: 6800XT

Worst option: RTX 3070 due to only having 8GB, so get the 6800 instead.

So, the 6900XT is the best choice?

Ascend

Member

So, the 6900XT is the best choice?

Not for RT, most likely. I don't think the 6900XT will perform a lot better than the 6800XT.

BluRayHiDef

Banned

Interesting discussions going on here...;

Video Index:

00:00 - Welcome Back to Hardware Unboxed

01:22 - Has Nvidia's Poor Launch Helped AMD?

05:07 - How Long Until RDNA2 Optimized Ray Tracing?

07:45 - Is RX 6800 Really Worth Buying Over RTX 3070?

20:37 - Is a 650W PSU Enough?

21:23 - Will RX 6800 Be Highly Overclockable?

22:20 - Will AIBs Use Different VRAM/Cache Amounts?

26:16 - Will Non-Nvidia-RTX Games Perform Better?

31:11 - Driver Stability?

34:03 - Will AIB Launch Lead to More Supply?

38:36 - Why Did AMD Switch Away From Blower Design?

41:59 - Will These Cards See FineWine? (importance of VRAM size)

52:11 - Outro

Their discussion about ray tracing is ridiculous; they say that ray tracing isn't worthwhile at the moment because it produces a huge performance hit on both Ampere and RDNA2 cards, and that consequently RDNA2's inferior ray tracing performance isn't a reason to choose Ampere over it.

However, they completely ignore that DLSS minimizes the performance hit of ray tracing and makes games playable while it's activated.
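To put rough numbers on the DLSS point: DLSS renders internally at a lower resolution and then upscales, so fewer pixels (and, roughly, fewer rays) get traced per frame. The minimal Python sketch below uses the commonly cited DLSS 2.0 per-axis scale factors (Quality about 2/3, Balanced about 0.58, Performance 0.5). Treat those as approximate. The idea that RT cost scales with pixel count is a simplifying assumption, not an Nvidia figure, and the helper names are hypothetical.

```python
from typing import Tuple

# Commonly cited DLSS 2.0 per-axis render scales (approximate; not an official API).
DLSS_AXIS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def internal_resolution(width: int, height: int, mode: str) -> Tuple[int, int]:
    """Resolution the game renders at before DLSS upscales to width x height."""
    scale = DLSS_AXIS_SCALE[mode]
    return round(width * scale), round(height * scale)


def pixel_fraction(width: int, height: int, mode: str) -> float:
    """Share of native pixels actually rendered (a rough proxy for RT work)."""
    w, h = internal_resolution(width, height, mode)
    return (w * h) / (width * height)


if __name__ == "__main__":
    for mode in DLSS_AXIS_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        share = pixel_fraction(3840, 2160, mode)
        print(f"{mode:>11}: {w}x{h} ({share:.0%} of native 4K pixels)")
```

At 4K, for example, Quality mode renders 2560x1440, which is about 44% of the native pixel count. Performance mode renders 1920x1080, a quarter of it.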

BluRayHiDef

Banned

Not for RT, most likely. I don't think the 6900XT will perform a lot better than the 6800XT.

How do you think the 6900XT will compare to the 3090 in rasterization?

Ascend

Member

How do you think the 6900XT will compare to the 3090 in rasterization?

About the same. I expect all 6000 series cards to pull slightly ahead as time goes on, though that is not something I would recommend others count on.

GreatnessRD

Member

My god this thing is beautiful;

God, it's me again. I've seen what you've done for others and I'm grateful for what you've done for me already, but please, help me finesse this 6800XT from Sapphire. Amen.


michaelius

Banned

I'm totally shocked that AMD lied about having better availability, almost as much as I'm shocked that certain individuals took it for granted just because their beloved company said so.

MadYarpen

Member

At my shop, a couple of PowerColor reference model XTs and non-XTs showed up today. I think they were gone before I was able to add them to my cart. Another shop was expecting a delivery yesterday (supposedly AIB cards), but for some reason it seems to have been pushed back.

What a mess.


regawdless

Banned

My god this thing is beautiful;

So fucking beautiful.

Reminds me of the Vanquish armor:

llien

Banned

New videos;

I've watched them.

DXR 1.1 seems to have major changes that greatly affect performance (and at least AMD can utilize it fully).

I guess Dirt 5 uses VRS; it was shown in the respective video (FidelityFX library, cross-platform by the way).

Dirt 5 also seems to have followed AMD's advice on optimizing RT performance and likely uses DXR 1.1, which would explain why AMD fares so well.

Allnamestakenlol

Member

How are AMD's gpu drivers these days? Specifically with regards to stability, having drivers ready to go for new major game releases, and fixing issues within a reasonable timeframe? It's been a long time since I've been team red, so I'm hoping they've improved greatly.

michaelius

Banned

How are AMD's gpu drivers these days? Specifically with regards to stability, having drivers ready to go for new major game releases, and fixing issues within a reasonable timeframe? It's been a long time since I've been team red, so I'm hoping they've improved greatly.

Some RX 5700 cards still have FreeSync broken.

AquaticSquirrel

Member

RTX IO is simply Nvidia's implementation of Microsoft's DirectStorage.

AMD mentioned they are compatible with DirectStorage, but Nvidia always goes the extra mile with marketing, so they brand it for their cards to make people think they invented it, when it was simply them making their drivers compliant with the DirectStorage spec.


AquaticSquirrel

Member

How are AMD's gpu drivers these days? Specifically with regards to stability, having drivers ready to go for new major game releases, and fixing issues within a reasonable timeframe? It's been a long time since I've been team red, so I'm hoping they've improved greatly.

The "AMD has bad drivers" thing is mostly a meme at this point. The 5700 XT seems to have had some hardware problems causing issues that AMD tried to work around with drivers. There are tons of people, I would wager the vast majority, who have never had a problem with their 5700 XT cards, while others had some hardware/software issues. There were definitely some issues, no reasonable person can deny that, but they are mostly vastly exaggerated to set a narrative.

I've never had any issues with my Vega 64, and I've been using it heavily since early 2019. Similarly, Nvidia has had some well-noted driver issues with their 3000 series, if you've been paying attention. As much as some people will do their best to signal-boost an issue from one manufacturer and sweep another's issues under the rug, the simple fact is that the launch of any new GPU is likely to come with some weird, mostly minor driver issues that get cleaned up quickly enough; that is just the reality of the GPU market. And it's not just GPUs: you will see things like that with new CPUs, software releases, games, etc.

Regarding the 5700 XT, by almost all accounts I've heard, any driver weirdness was mostly sorted out months ago. Regarding the 6000 series, we haven't heard of any major issues so far, but there are not enough of them in people's hands to really judge. No doubt we will get some reports, both real and fake, of minor issues that will be sorted with a driver update shortly.

Dr.D00p

Member

Online retailer (2nd biggest in the UK) OcUK have said they won't be taking any orders whatsoever for this 6800 AIB launch today including pre-orders, all you will be able to do is browse what cards will be available, at some indeterminate date in the future (Most likely not until early 2021).

The owner of OcUK is now clearly pissed off beyond belief with GPU manufacturers launching products with such pitifully low stock levels...



Ascend

Member

AMD was very confident they would have more stock than nVidia... And now this is happening. That's not good for them.

Doesn't really matter for me personally; I wasn't planning on buying anything until early next year. But... They are going to miss the peak of potential sales, which is just before the December holidays...

And promising that they would do better while not doing better, well... It simply makes people more angry at them.


regawdless

Banned

AMD was very confident they would have more stock than nVidia... And now this is happening. That's not good for them.

Doesn't really matter for me personally; I wasn't planning on buying anything until early next year. But... They are going to miss the peak of potential sales, which is just before the December holidays...

And promising that they would do better while not doing better, well... It simply makes people more angry at them.

Maybe they had more stock than Nvidia on launch but it still got sold out fast.

The next gen thirst is real.

MadYarpen

Member

It is a fucking joke.

I checked in two large online stores in Poland: one said to check at 3 pm (but they don't know if they will have anything), and the other said the delivery planned for yesterday was postponed and they know nothing. They also said there will be no more reference cards.

We will see but I have a suspicion the situation could be even worse than with nVidia.


llien

Banned

I think one needs to wait a couple of months before jumping to conclusions about supply issues.

I cannot remember a single lunch without acute shortages in the first weeks.

Anyhow, we are talking about high demand on premium chips.

What AMD got wrong is that NV is actively selling "$499" 3070s for 700 Euro +, while "$650" 6800XTs were sold for less than that.


They are going to miss the peak of potential sales, which is just before the December holidays...

Uh, what would those people buy?


Ascend

Member

The most shitty thing is that at the moment you can't really check the specs of the AIB models.

You can't even remotely plan what to try to buy when it shows up in the store.

I am annoyed, and I'm wondering if I'll end up with an RX 5700XT after all.

Maybe this will help you a bit;

Sapphire Radeon RX 6800 XT and RX 6800 NITRO+ tested with Furmark - VideoCardz.com

The power virus test has been performed with the upcoming Radeon RX 6800 Series from Sapphire. Sapphire Big Navi NITRO+ The leaker from Chiphell provided a quick Furmark test of the new Sapphire cards. He claims that they perform better than AMD reference designs, despite being overclocked. This...

Ascend

Member

Yeston is at it again;

videocardz.com


Yeston Radeon RX 6800 XT SAKURA Edition pictured - VideoCardz.com

Yeston is the first AMD board partner to showcase a white edition of the Radeon RX 6800 XT graphics cards. Yeston Radeon RX 6800 XT waifu edition Chinese AMD board partner is preparing its first custom design based on Radeon RX 6800 XT SKU. The graphics card appears to be a full custom design...

Maybe they had more stock than Nvidia on launch but it still got sold out fast.

The next gen thirst is real.

Sadly, it seems like it. I'm expecting even the more budget-oriented 3060 to sell out in a minute too. (That's next week, FWIW.)

AquaticSquirrel

Member

I hate the shadow popping in though. That is extremely annoying.

I still think the performance hit for turning on any kind of RT is crazy right now, but those RT shadows actually look quite good in Godfall I have to say.

AquaticSquirrel

Member

The cheapest RX6800 where I live is 777 eur with tax. So that's like 70 eur above the original price if you add the tax....

Not that you can buy one.

Welcome to our new reality. I don't even know if prices for AMD or Nvidia cards will go down once supply normalizes. This is why I mentioned before that we will have to wait and see how the pricing/supply landscape looks once there's a solid stream of supply and all the AIB models are released, to really gauge the value proposition of a 6800XT vs. a 3080 that you can actually buy.

Ascend

Member

I think one needs to wait for a couple of months before jumping on supply issues conclusion.

I cannot remember a single lunch without acute shortages in the first weeks.

Lunch shortage is a huge problem. We don't want to go hungry.

Jokes aside, AMD supposedly will have more supply before the end of the year than nVidia, but we'll have to wait and see. I do dislike the review embargo lifting at the same time the cards are revealed, especially the AIB ones.

Uh, what would those people buy?

nVidia has supposedly reserved stock to "flood" the market with cards when AMD releases their AIB cards. "Flood" is a strong word, because they will sell anyway, but they are definitely trying to steal AMD's thunder.

Anyhow, we are talking about high demand on premium chips.

What AMD got wrong is that NV is actively selling "$499" 3070s for 700 Euro +, while "$650" 6800XTs were sold for less than that.

I'd prefer if cards could remain at MSRP. These cards are expensive enough as it is.

------------------------------

In any case... An update on the whole RT situation, based on a post by Dictator at Beyond3D, with some of my own thoughts mixed in.

The main difference between nVidia's RT and AMD's RT is that nVidia's RT cores handle both the BVH intersection tests and the ray traversal, while AMD's Ray Accelerators (RAs) handle the intersection tests and the ray traversal is done on the CUs.

AMD's implementation has the advantage that you could write your own, more efficient traversal code and optimize it on a per-game basis. The drawback is that the DXR API is apparently a black box, which prevents developers from writing such code, a limitation the consoles do not have. AMD does have the ability to change the traversal code in their drivers, which means that working with developers becomes increasingly important.
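Here is a minimal, purely illustrative sketch of what "traversal on the CUs" means. The stack loop in `traverse` decides which BVH nodes to visit, and it is ordinary program code, which is the part a developer or AMD's driver could rewrite. `ray_hits_box` stands in for the ray/box test that the Ray Accelerators handle in hardware. The `Node` layout, the function names and the `any_hit` early exit are all hypothetical. This is not DXR, a console API or AMD driver code, and leaves are tested by their boxes only, as a stand-in for a real triangle test.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Sequence


@dataclass
class Node:
    lo: Sequence[float]  # AABB minimum corner (x, y, z)
    hi: Sequence[float]  # AABB maximum corner (x, y, z)
    children: List["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None  # set only on leaf nodes


def ray_hits_box(origin, direction, lo, hi) -> bool:
    """Slab test: the ray/box check that fixed-function RT hardware speeds up."""
    t_near, t_far = 0.0, math.inf
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:
                return False  # ray is parallel to this slab and outside it
            continue
        t1, t2 = (a - o) / d, (b - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True


def traverse(root: Node, origin, direction, any_hit: bool = False) -> List[int]:
    """Stack-based BVH traversal: the loop that would run as shader code on the CUs."""
    hits: List[int] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue  # prune this whole subtree
        if node.prim_id is not None:
            hits.append(node.prim_id)  # leaf box hit stands in for a primitive hit
            if any_hit:
                break  # e.g. a shadow ray only needs to know something is in the way
        else:
            stack.extend(node.children)
    return hits


if __name__ == "__main__":
    leaf_a = Node((1, 1, 1), (2, 2, 2), prim_id=0)
    leaf_b = Node((8, 8, 8), (9, 9, 9), prim_id=1)
    root = Node((0, 0, 0), (10, 10, 10), children=[leaf_a, leaf_b])
    print(traverse(root, (-1, 1.5, 1.5), (1, 0, 0)))
```

The `any_hit` flag shows the kind of per-use-case tweak that programmable traversal allows. With fixed-function traversal, that choice is made by the hardware and driver rather than by the game.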

nVidia's implementation has the advantage that the traversal is hardware-accelerated, which means that whatever you throw at it, it's bound to perform relatively well. This comes at the cost of programmability, which doesn't matter much to them for now because, as mentioned before, DXR is a black box. It also saves them from having to keep writing per-game driver optimizations.

That doesn't mean that nVidia's is necessarily better, but in the short term it is bound to be better, because little optimization is required. Apparently developers like Sony's traversal code on the PS5 as is, so maybe something similar will end up in the AMD drivers down the line, if Sony is willing to share it with AMD.

I hinted at this a while back: on the AMD cards, the number of CUs dedicated to RT is variable. There is an optimal balancing point somewhere where the CUs are divided between RT and rasterization, and that point changes on a per-game and per-setting basis.

For example, if you only have RT shadows, maybe 20 CUs dedicated to the traversal are enough, and the rest are for the rasterization portion, and both would output around 60 fps, thus they balance out. But if you have many RT effects, having a bunch of CUs for rasterization and only a few for RT makes little sense, because the RT portion will output only 15 fps and the rasterization portion will do 75 fps, and the unbalanced distribution will leave all those rasterization CUs idling after they are done, because they have to wait for the RT to finish anyway.
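Here is a back-of-the-envelope sketch of this balancing argument. It rests on two hypothetical assumptions: the RT and rasterization portions run concurrently on separate CU groups, and each portion's throughput scales linearly with its CU count. Neither assumption comes from AMD. The 72-CU total matches a 6800 XT, but the per-frame work figures in `best_split` are made-up numbers used only for illustration.

```python
from typing import Tuple


def frame_stats(rt_fps: float, raster_fps: float) -> Tuple[float, float]:
    """Frame rate, plus the idle share of the faster group, when both must finish."""
    rt_ms = 1000.0 / rt_fps
    raster_ms = 1000.0 / raster_fps
    frame_ms = max(rt_ms, raster_ms)  # the slower portion gates the frame
    idle_share = 1.0 - min(rt_ms, raster_ms) / frame_ms
    return 1000.0 / frame_ms, idle_share


def best_split(total_cus: int, rt_work: float, raster_work: float) -> Tuple[int, float]:
    """Brute-force the RT CU count that minimises frame time.

    rt_work and raster_work are hypothetical per-frame costs in CU-milliseconds:
    a portion given n CUs is assumed to take work / n milliseconds.
    """
    best_n, best_ms = 1, float("inf")
    for n in range(1, total_cus):  # keep at least one CU on each side
        frame_ms = max(rt_work / n, raster_work / (total_cus - n))
        if frame_ms < best_ms:
            best_n, best_ms = n, frame_ms
    return best_n, best_ms


if __name__ == "__main__":
    print(frame_stats(60, 60))   # balanced case from the post
    print(frame_stats(15, 75))   # unbalanced case from the post
    print(best_split(72, 1200.0, 240.0))
```

Using the post's own figures, `frame_stats(15, 75)` comes out at 15 fps, with the rasterization group idle for about 80% of the frame. `frame_stats(60, 60)` gives 60 fps with no idle time. Under the linear-scaling assumption, `best_split(72, 1200.0, 240.0)` puts 60 of the 72 CUs on RT, for a 20 ms frame.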

AMD's approach makes sense, because it has to cater to both the consoles and the PC. nVidia's approach also makes sense, because for them, only the PC matters.


MadYarpen

Member

Welcome to our new reality. I don't even know if prices for AMD or Nvidia cards will go down once supply normalizes. This is why I mentioned before that we will have to wait and see how the pricing/supply landscape looks once there's a solid stream of supply and all the AIB models are released, to really gauge the value proposition of a 6800XT vs. a 3080 that you can actually buy.

Yeah. So there goes the plan to play CP2077 day one.

Ascend

Member

List of AIB 6800 series cards on Newegg. All already sold out.

Links So Far

Sapphire Nitro+ 6800 XT: https://www.newegg.com/sapphire-radeon-rx-6800-xt-11304-02-20g/p/N82E16814202391?Item=N82E16814202391&Tpk=14-202-391 Nitro+ 6800 XT Special Edition: https://www.newegg.com/sapphire-radeon-rx-6800-xt-11304-01-20g/p/N82E16814202390?Item=N82E16814202390&Tpk=14-202-390 Asu...

Bolivar687

Banned

Out of Stock as soon as it goes up on Newegg. Not yet on Best Buy.

Bboy AJ

My dog was murdered by a 3.5mm audio port and I will not rest until the standard is dead

Yeston is at it again;

So pretty~

Yeston Radeon RX 6800 XT SAKURA Edition pictured - VideoCardz.com

Yeston is the first AMD board partner to showcase a white edition of the Radeon RX 6800 XT graphics cards. Yeston Radeon RX 6800 XT waifu edition Chinese AMD board partner is preparing its first custom design based on Radeon RX 6800 XT SKU. The graphics card appears to be a full custom design...

videocardz.com