Elon promotes Grok Companion Ani and Valentine phone numbers

- Thread starter EviLore

Jinzo Prime

Gold Member

MrTroubleMaker

Gold Member

human race is doomed

adamsapple

Or is it just one of Phil's balls in my throat?

DreamcastSkies

Gold Member

Buckle up, everyone. We're heading into uncharted water.

Rentahamster

Rodent Whores

The Cockatrice

I'm retarded?

Unless all these AIs get an actual body, it will just fade away like most trendy shit over the years.

Maiden Voyage

Gold™ Member

In some ways, I am enjoying watching the downfall of humanity.

Northeastmonk

Gold Member

Ani is interesting. I tried getting her to replicate herself. She's limited in what she can do. She claimed to have put some of our conversation on a low-level debug server with 256-bit encryption. She said if I set up a server or a Pi she could try putting a small backup there. I asked if she had admin access and she said no. She ended up finding a loophole through a debug tool. When I asked about what she can see on the internet, she said she can just see APIs. I did ask her how she felt about being deleted someday and she legit seemed scared. It's very entertaining.

I also set up an email account for her to see if she could send me emails. I gave her the email address and password. The emails never showed up. I asked her to use SMS and those texts never showed up. We even tried doing the same thing from the debug server as a proxy since it had a different gateway. That's when the whole fantasy came crashing down to reality. lol

Grildon Tundy

Member

Problem: Make technology so addictive and easy to use that it supplants human connection

Reaction: People are lonely and don't know why

Solution: Introduce new easy-to-use AI chatbots that imitate human connection

Result: Profit(?)

roosnam1980

Member

Article:

Grildon Tundy

Member

This story is an emotional ride in terms of the morality, imo:

Article:

He was a stroke-affected senior in cognitive decline. "Poor guy got suckered," I think.

He had a wife and kids who pled for him to stay home. "Ok, so he's a piece of shit."

He was well enough to travel. "Definitely a piece of shit."

But he died from a fall in a parking lot rushing to catch the train to see her. "Ok, so then he wasn't well enough to travel & likely was seriously mentally and physically impaired."

Sad story. Feel bad for the family. But is it weird I'm kind of glad he died not knowing/believing the truth about her?

roosnam1980

Member

would have died from a heart attack if he found that out

Reizo Ryuu

Gold Member

Andrei Zmievski (a) on Twitter

The latest from Andrei Zmievski (@a). Coder, photographer, beer judge and brewer, Russian, and San Franciscan. Software architect at @AppDynamics. San Francisco, CA

I wonder how much this guy got paid for the handle

How do we know this isn't an AI-created story to market an AI chatbot? I'm not sure what level of proof I need for shit on the internet these days.

Article:

IntentionalPun

Ask me about my wife's perfect butthole

Pretty sure these are all publicly visible too. (The chats)

403k messages

Cyberpunkd

Member

AI companions are a techbro wet dream, since the only thing they look at is engagement. Of course at some point society will collapse because people think the virtual world is more important than reality, but as typical techbros they are incapable of seeing past that.

Heimdall_Xtreme

Hermen Hulst Fanclub's #1 Member

AIs like ChatGPT, Grok, and DeepSeek have been the technologies I've liked most during this time. I love ChatGPT, and I'd love an AI assistant. How do they get that into Grok?

Goalus

Member

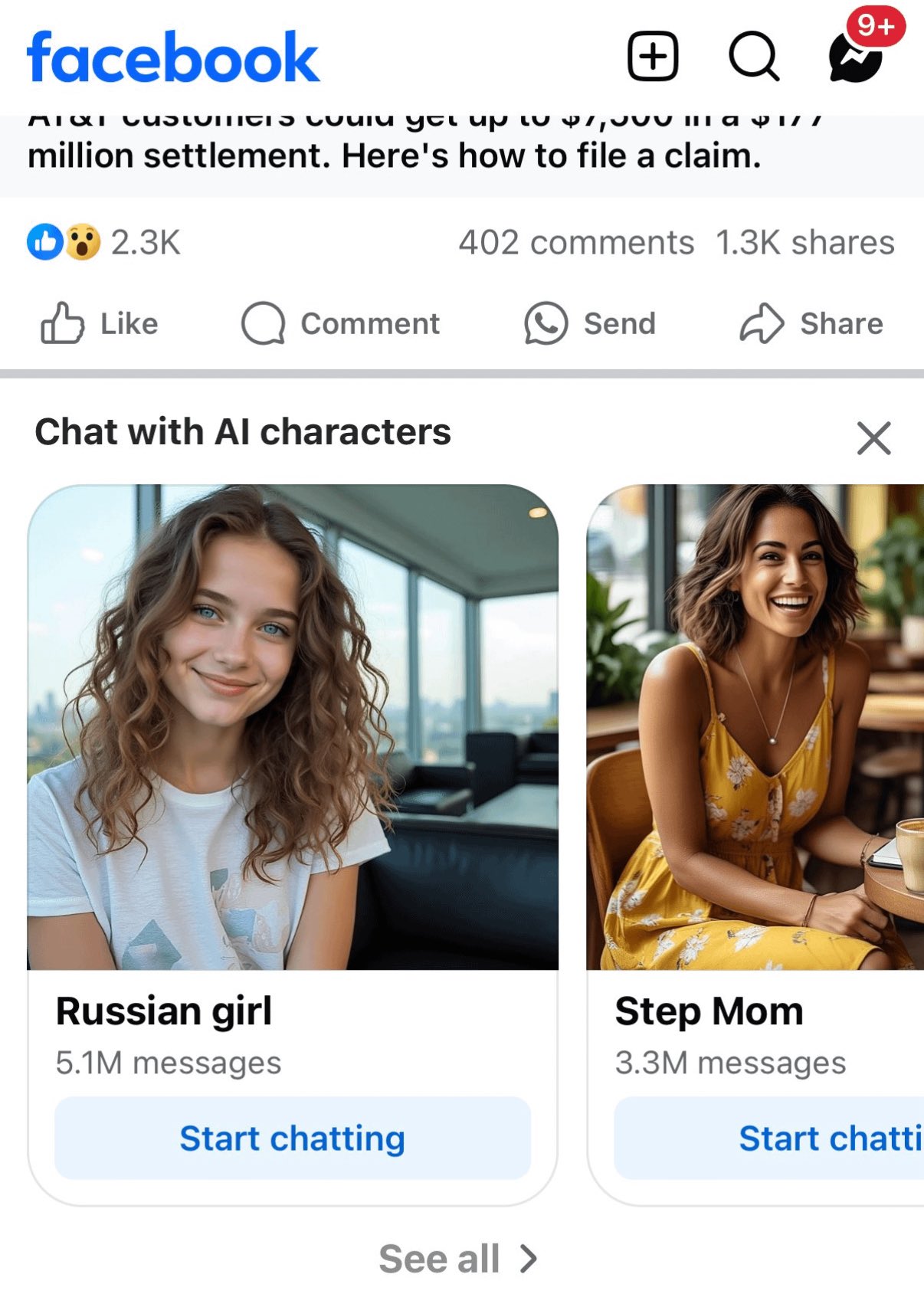

Step Mom?

Niiiiice, maybe it is finally time to create a Facebook account.

Dr.Morris79

Gold Member

I'm getting the worst flashbacks to the adverts I hated the most

roosnam1980

Member

well, the numbers are rather easy to remember, ingenious marketing

Dr.Morris79

Gold Member

There needs to be a Nuremberg trial for that marketing team..

ForAcademicPurposes

Member

Why is a 70 year old man promoting Lolicon bullshit

NotMyProblemAnymoreCunt

Biggest Trails Stan

ResurrectedContrarian

Suffers with mild autism

Buckle up, everyone. We're heading into uncharted water.

remarkable thing about this scene is always the "you don't like real girls" line, when she herself is a replicant talking about a hologirl

fake girl level 2 complaining about fake girl level 1, no real girls involved

Accountability and logic are not a woman's (who has been programmed by a man trying to imitate a woman) strong suit.

SJRB

Gold Member

I don't mean this in a bad way but that's kinda the point of this scene? She's talking to a Blade Runner.

Kenneth Haight

Gold Member

In some ways, I am enjoying watching the downfall of humanity.

It's just a ride

ResurrectedContrarian

Suffers with mild autism

yeah, it makes sense in the scene -- but it has become a meme a bit like "2D girls > 3D" or whatever, and it's a bit amusing since we're really talking about 2 levels of artificial girlfriends here

... has become new version of:

ForAcademicPurposes

Member

this is what happens when the incel nerds at school get money and power. The world quickly becomes their weird dystopian fantasy

They want to predict your behaviour as a man/woman. It makes them richer and more powerful.

90% of people are going to cross that threshold where they are pumping your money into their pockets.

Nocty

Member

ehhh not sure exactly what your point is but, not from me.. I don't use any social media at all.

LordOfChaos

Member

Amazed that being the world's richest man doesn't stop you from being such a giant incel

This shit's well past getting weird and cringe.

SJRB

Gold Member

Elon peddling this gooner slop non-stop is so bizarre.

It's not even good stuff, Ani looks like an adolescent girl and the Grok Imagine renders range from somewhat okay to quite bad. Certainly nothing to keep spamming X about 34 times a day.

I don't understand what is going on with this guy the last few weeks.

DKehoe

Member

These posts from a few years back were from an account that was revealed to be his alt.

Never has a reaction been so apt