


Graphical Fidelity I Expect This Gen

- Thread starter ResetEraVetVIP


- NSFW

ResetEraVetVIP

Member

Current gen games should look like all of the CGI game trailers I see…

Only a few games come close. I look at CGI video game trailers and think, "That's what the game should look like."


AeonGaidenRaiden

Member

"Hellblade 2 worth $25 on Steam? What was the consensus on this one? Probably better to wait until I replace my 3080 in... the unknown future?"

Absolutely not lol. People are overhyping the graphics; they are good, but not something unseen in gaming. The gameplay is stuck in the PS3 era, with repetitive fights, mashing the same 2 buttons, and a walking simulator of less than 5 hours that has no replay value. The collectibles are trash too. Buy it when it's $5 or just pirate it.

March Climber

Member

"People are gonna be so disappointed with Marvel 1943. They are expecting an Arkham Knight, but instead will get a Call of Duty-like walking-around game (without the shooting). I rewatched the video to confirm. Yup."

If it is at least as fun as an 8-12 hour Uncharted experience (considering Amy Hennig's involvement), I'd be fine with that.

March Climber

Member

Blame the sudden addition of the 4K resolution bump and ray tracing. You're all going to have to sit and wait a generation like the rest of us. Devs are doing a decent job with the cards they were dealt, though.


GymWolf

Member

For people asking why devs are so fucking lazy this gen, you don't have to look further than the "RE4R doesn't look too hot" topic on the first page.

We deserve Tsushima 2 to look worse than a 3-year-old cross-gen game.

People fail to understand that great-looking games are not next-gen-looking games. RDR2 still looks great, but is it next-gen looking?


Bojji

Gold Member

Indiana Jones at max settings requires A LOT of VRAM:

1080p here ^ All 12GB cards are dead.

4K ^ It's like that: when I tried native 4K with PT on a 4070 Ti Super, the game crashed to desktop, lol.

You may be right that they didn't optimize memory usage enough (rarely is any game optimized these days), but almost all of the blame should be directed at Nvidia, which skimped on VRAM for the 3080 (and 3070) and then released the fucking 3060 with 12GB.

Games will require more and more; this is natural. I learned the hard way when AC Unity dropped and I had a GTX 770 2GB: despite high FPS, the game looked like shit when it came to textures.


DanielG165

Member

This is objectively false by every metric.

Kenpachii

Member


5080: the ultimate 1080p card.

PeteBull

Member

Yup, good lesson to learn: next time, even if we're buying a midrange card (so in 2025, including the new 50xx series from Nvidia), it needs 16GB of VRAM, especially if we know or expect we'll keep the card for more than one gen (so more than 2-3 years).

SlimySnake

Flashless at the Golden Globes

I don't know, man. I'd love to blame Nvidia for the lack of VRAM, but the PT settings in Black Myth: Wukong, Cyberpunk and Star Wars Outlaws don't even come close to maxing out my VRAM at 4K DLSS.


Whereas this game, with its shitty textures, lighting and crappy models, is maxing out my VRAM even with medium texture streaming settings. It's just poorly optimized, especially for such a linear game. They need some kind of Nanite-style solution or mesh shaders to reduce VRAM usage for geometry and textures.

SlimySnake

Flashless at the Golden Globes

Most people are dumb. We are in the 9th generation of consoles; if they still don't understand the concept of generational leaps, then they are simply morons.


Devs are quickly realizing this too. The vast majority of the gaming userbase doesn't even understand what makes a next-gen game next gen, hence the focus on 60 fps from certain studios. They are treating consoles as $500 PC upgrades: "My last-gen 30 fps game can run at 60? Take my money!" The leaked Insomniac docs already prove that Wolverine's base framerate will be 60 fps. ND purposefully released a 60 fps trailer. GG told DF that friends don't let friends play at 30 fps, so don't expect much from Horizon 3 either. And Sucker Punch's lack of a big next-gen leap screams 60 fps target.

Thank god for Rockstar, CD Projekt, Massive and the devs using UE5. Capcom, FromSoft and most other Japanese devs are settling for 60 fps, and good for them. I just don't want to see those guys in this thread.

rofif

Banned

The modes and the elimination of 30fps killed this gen's next-genness.


People are too stubborn to remember that just a moment ago they were playing and enjoying games at 30fps without thinking about modes, fps or any technical crap at all.

Now all they know is to disable motion blur because their Twitch influencer of choice is an idiot who disables it in every game... and then 30fps is harder to stomach, but the influencer would tell them to use performance mode anyway.

Seriously, Uncharted 4 was 1080p30, and it wouldn't be remembered for its next-gen graphics if it had been 60fps. And it played great at 30.

On the other hand, times have changed: you can make a next-gen game, drop the res to 144p and it's 60fps now... so I guess 60fps shouldn't stop devs from making next-gen games... maybe.

Balducci30

Member

Yep. How the hell would we ever have gotten TLOU2, Red Dead, or GTA5 on PS3 if devs were aiming for a stable 60fps and 4K? It makes no sense to me that this is what people are asking for. I honestly can't think of a game that released on console at 60fps and 4K (or even not 4K) that was considered a graphical leap at the time. Seems most just don't care.


rofif

Banned

Yeah. I remember playing Gears of War on 360 and being fucking mind-blown by how good it looked. I don't remember that it was 30fps, because I played it, I had fun, and I was impressed by the graphics.

Bojji

Gold Member


UE5 is VRAM-light compared to other engines, so Wukong is not too heavy.

Star Wars Outlaws reserves VRAM depending on VRAM size, so it maxes out almost all GPUs. But below 16GB it reduces texture quality at a distance. I completed it on a 12GB 3080 Ti, but without PT (not that big a difference vs. the normal RT in the game).

Now CP: this game was running out of VRAM on my 4070 Ti when I was completing PL. Now that I have a 16GB card, I can confirm it goes past 14GB easily (more with frame gen) and performs MUCH better despite the card being only about 15% faster. The game is unplayable on 12GB cards above 1440p output in the PL location with heavy RT.


This gen, all gamers got increased standards: 30fps was always shit, and now console gamers have the ability to play at 60fps. Treat this gen as gen zero; most of the power increase went into the 30->60fps transition. Next gen will build upon that, and we will have a generational leap.

Xtib81

Member

I think it was SlimySnake who wanted to know what graphical improvements Horizon Forbidden West has on the PS5 Pro, and with this video we now have the answer:

The features include improved depth of field, cleaner volumetrics, full-resolution rendering of effects like fire, holograms and explosions, higher-quality clouds, and the ability to render characters at greater distances.

Horizon 3 will look absolutely bonkers on PS6. I'll say it again: GG is Sony's most talented studio when it comes to visuals. FW still looks better than 99% of the games released this gen, yet it's a 3-year-old cross-gen title.


H . R . 2

Member

Agreed. However, I think the only acceptable reason the industry might have for 60fps, though it has failed to articulate and rationalise it properly, is that at some point, in one of these generations, the entire industry needs to collectively move on and transition to 60fps once and for all, so that we can focus on visual fidelity again. This seems like the only way to end this debate.

Admittedly, 60 fps does add a little bit to the experience of a game, BUT it takes away so much from its graphical potential. Unlike visual fidelity and engine upgrades, 60fps is extremely easy to achieve (just downgrade and turn off some options), and like native 4K, it is a huge waste of resources that only Sony and MS themselves are responsible for.

But if people start demanding 120fps, then I will quit gaming.

That's exactly what I was thinking about treating this gen as gen zero.


SlimySnake

Flashless at the Golden Globes

I never played Phantom Liberty, but the base game used 8.1GB at 4K DLSS Performance and 7.3GB at 1440p DLSS Performance.


Indy is simply unoptimized. There is nothing here that should tax VRAM more than open-world games do.

Xtib81

Member

admittedly, 60 fps does add a little bit

A little bit? Lmao, games are unplayable at 30fps on an OLED, and I'm not even kidding. I have no idea how anyone can enjoy playing games at such low framerates. I played 50 hours of FW at 30fps because of the shimmering issues at 60fps back in 2022, and every second felt awful. 60 FPS should be standard, but frame gen should be a thing too, or put some beefy CPUs in those damn consoles. Either way, throw 30fps in the trash and never look back, developers!


rofif

Banned

So you're saying we sacrificed this gen to move to 60fps, but now we should stay there and next gen should improve on this gen at 60fps?


Well yeah, of course.

But I still wonder what would be possible at a real 30 (with no modes, etc.).

Standards... we hear that word often, and I think it's BS.


I've been playing games since I was 5. I'm 35 years old. I had a 120Hz Eizo monitor in 2000, and that wasn't even my first monitor.

I've had 240Hz monitors, 144Hz monitors, an Xbox 360, a PS4 Slim, then PC gaming again with a 3080 and a 1060... I've played at every res, fps and monitor/TV type.

And I 100% realized that what matters most is just playing the game. Souls games were all locked to 60fps on PC, and I had a 240Hz monitor when DS3 came out... and all I noticed was how much better that game would look at 4K60 than on this shitty 1080p TN 240Hz monitor, and that I was gimping DS3 by playing it on that.

Also, the more I played at 240Hz/fps, the more I noticed that 60fps now sucked dicks and was really hard to go back to.

So I got a 4K60 monitor and got used to 60 again in a few days. Then when I want to play at 30fps on a console, it takes one evening, you just play the game, and it's fine.

Brains get used to it; standards mean shit.

Of course, that's if 30fps is done right: small deadzones, fast input, good motion blur. Compare Demon's Souls' 30fps mode to Bloodborne... Bloodborne is so unimaginably more responsive to control.

Balducci30

Member

Meh, if 30fps is done correctly, it's absolutely fine on OLED. I play most games in fidelity mode. You've just gotten used to 60fps, or the 30fps mode in that particular game isn't done well. "Unplayable" is extremely hyperbolic, considering I've been playing games on OLED since late PS4.

Bojji

Gold Member


The base game can indeed fit with DLSS P and RT lighting + reflections (and indoor shadows):

But add PT into the mix and it goes to 12GB:

In PL with PT:

+ FG:

Even without FG or PT (just the normal RT settings as above), it kills 12GB cards ^


You really expect competent 30fps implementations from the devs we have in 2024/25? Lazy or contractors, it's probably easier for them to make the game run at 60fps.

60fps is much more comfortable, easier on the eyes and better for gameplay (input lag!). 30fps sucks ass, especially on modern TVs with low pixel response times.

40fps is the new minimum for me.
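For reference, the frame-time arithmetic behind the 30/40/60fps argument is simple: a frame's time budget is 1000 ms divided by the frame rate. The short Python sketch below uses two hypothetical helpers (`frame_time_ms` and `refreshes_per_frame`, not any engine or console API) and assumes a fixed-refresh display with no VRR. It shows why 40fps is often pitched as a middle ground on 120Hz screens: its 25 ms frame time sits exactly halfway between 30fps (about 33.3 ms) and 60fps (about 16.7 ms), and each frame maps to a whole number of refreshes at 120Hz (3) but not at 60Hz (1.5).

```python
# Frame-time arithmetic for common console frame-rate targets.
# frame_time_ms and refreshes_per_frame are illustrative helpers (hypothetical),
# assuming a fixed-refresh display without VRR.

def frame_time_ms(fps: float) -> float:
    """Time budget for one frame, in milliseconds."""
    return 1000.0 / fps

def refreshes_per_frame(display_hz: int, fps: int) -> float:
    """How many display refreshes each frame is held for.
    Even frame pacing needs this to be a whole number."""
    return display_hz / fps

for fps in (30, 40, 60):
    print(f"{fps} fps -> {frame_time_ms(fps):.1f} ms per frame, "
          f"{refreshes_per_frame(60, fps):g} refreshes at 60Hz, "
          f"{refreshes_per_frame(120, fps):g} refreshes at 120Hz")
```

On a 60Hz display without VRR, 40fps can't be paced evenly (1.5 refreshes per frame), which is why 40fps modes generally require 120Hz output.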

rofif

Banned

At this point, they can barely do competent 60fps... let alone good 30.


So no. I don't expect it.

40fps is barely replacing the good old 30fps from back when devs knew how to do it.

ResetEraVetVIP

Member

You have got to be kidding…

SlimySnake

Flashless at the Golden Globes

I've played Ratchet, Spider-Man 2, HFW, FF16, Black Myth, Callisto, Star Wars Outlaws, Silent Hill 2 and Hellblade 2 at 30 fps. Only HFW was a fucking mess, but that was because of the insane sharpness and flickering on camera turns. I fixed that by changing some in-game settings before they even patched it.

If anything, 30 fps modes on 120Hz displays are way more responsive than on 60Hz screens. OLED input lag is even better than LED's.

ResetEraVetVIP

Member

Spider-Man Remastered and Spider-Man: Miles Morales on PS5 are better at 30 fps on an OLED, IMO…

ResetEraVetVIP

Member

From my sister thread on ResetEra,

"Alanah Pearce has heard from devs who have seen Witcher 4's current build being played that the game does look like the trailer.

Different thing if it stays there, or has to scale back on graphics to meet performance goals."

"Watching Alanah Pearce on stream right now and she says she knows people who have played a build of The Witcher 4 and it apparently looks as good as the trailer. Just an anecdote, but it gives me hope."

powder

Banned

On what platform, though? Sure, it could look pretty close to the trailer if she saw it running on a maxed-out PC.

Schmendrick

Banned

If it were any developer other than CDPR, who have already proven that they're willing to feed a vastly inferior version of their game to consoleros, I'd have absolutely no hope at all that the final game would end up anywhere near that cinematic.

But with another 3+ years in the oven, we're talking about the RTX 6xxx or maybe even the 7xxx generation of hardware, so... probably possible from a technological standpoint. Dunno how budget-intensive that visual splendor still is, though.


ResetEraVetVIP

Member

From my sister thread on ResetEra…

About Intergalactic.


S0ULZB0URNE

Member

Demon's Souls: Tower of Latria from start to finish on the PS5 Pro vs. the world.


Neo_game

Member


Yes, by the time the game releases, the RTX 7000 series will likely have launched and people will have upgraded their PCs. This game could be the next CP2077: running at 900p 30fps on PS5 and Series X and ideal for next-gen consoles.

Msamy

Member

It won't be that hard to achieve, because that game won't release before the next-gen consoles, or at least it'll be a cross-gen title like Cyberpunk 2077 was this gen. The hardest part of all this is the open-world setting, which may cause a downgrade even on the most powerful GPU.

Msamy

Member

Other Digital Foundry guys say ND used RT reflections alongside planar reflections in that trailer.


SlimySnake

Flashless at the Golden Globes

They targeted 1080p 30 fps on the 1080 Ti for Cyberpunk and ended up delivering 1440p 30 with RT shadows on the PS5, which is basically a 1080 Ti with RT support.

The good thing is that they are using UE5, which scales very well on consoles and PCs. Most UE5 games are 1440p 30 fps. It's their 60 fps modes that are rough, because of UE5.1's CPU bottlenecks, which have since been resolved. They have also improved the performance of both software and hardware Lumen on consoles. I think it will be 1296p-1440p 30 fps on the base PS5 and will use hardware Lumen on the PS5 Pro.

The fancy path-traced graphics we saw are likely for 5080s and 5090s, not the 6090, let alone the 7090. I don't think this game is 4+ years away. It's likely a holiday 2026 title that might get delayed to March, like most games do. And next-gen consoles aren't coming until 2028. I think Sony will delay it a year, because it costs too much and AMD is far behind on the AI stuff Nvidia will be showcasing on the 5090. Cerny said they are only just now partnering up with AMD, so the PS6 is likely 4 years away.


FIREKNIGHT2029

Member

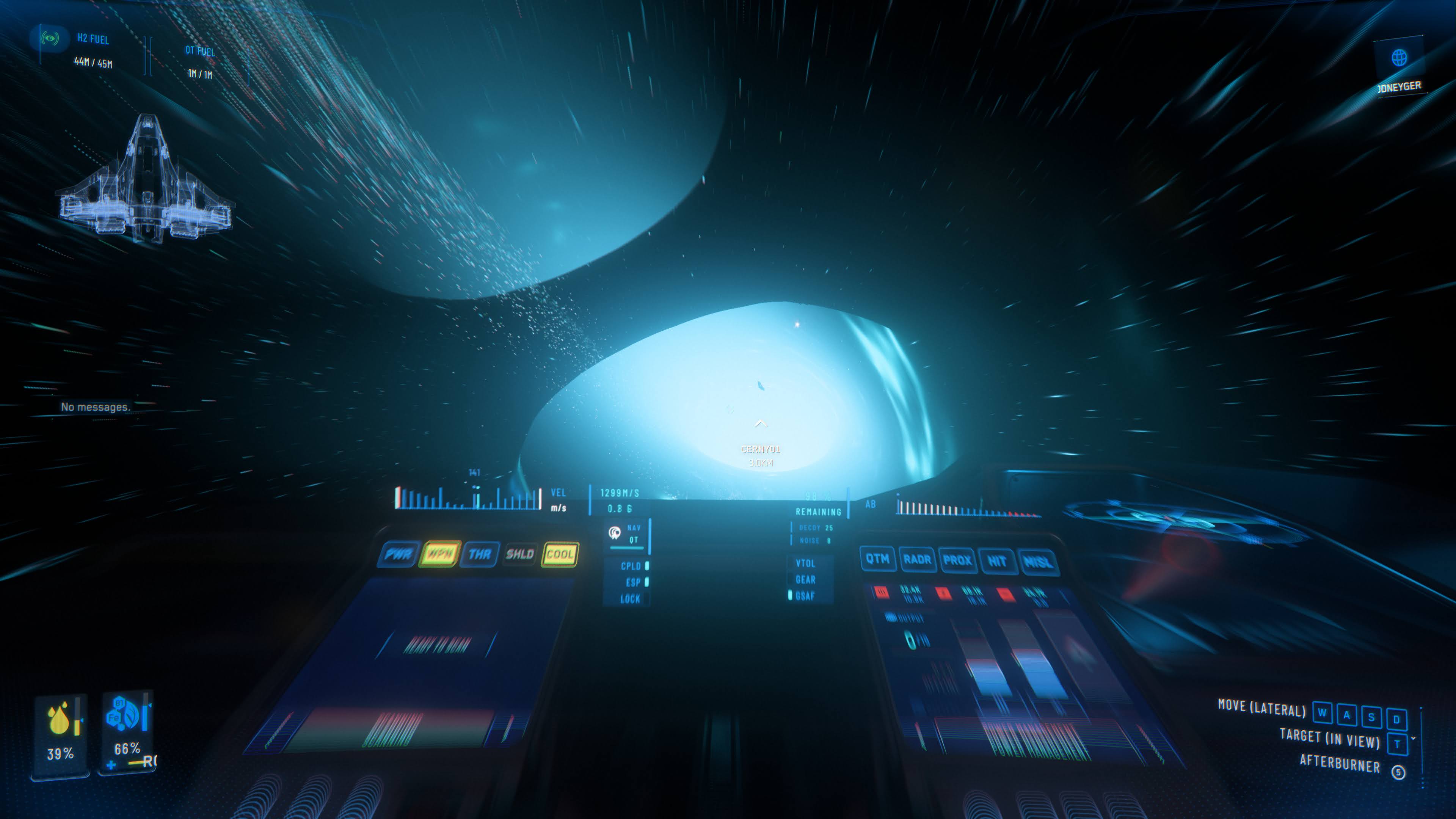

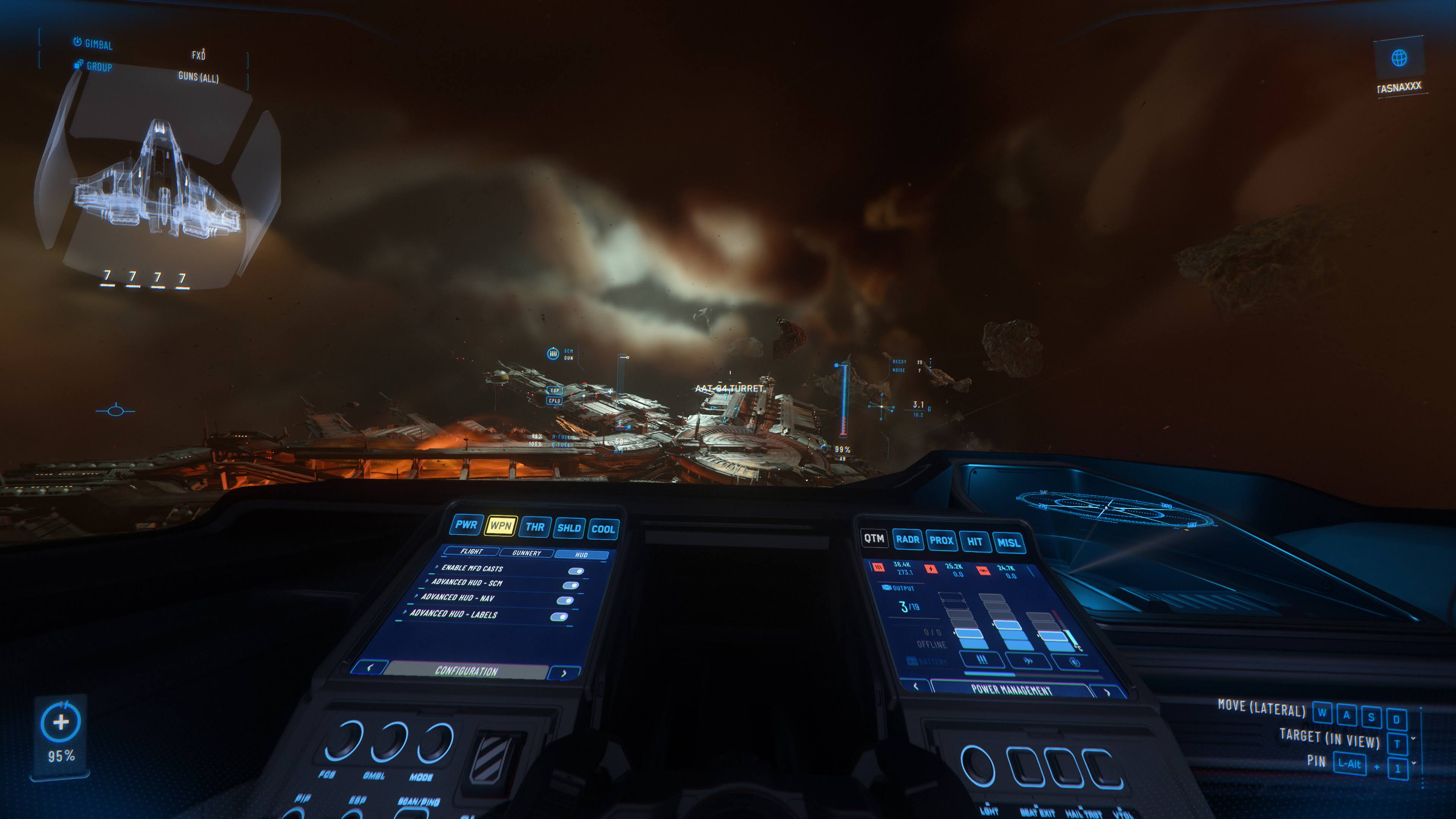

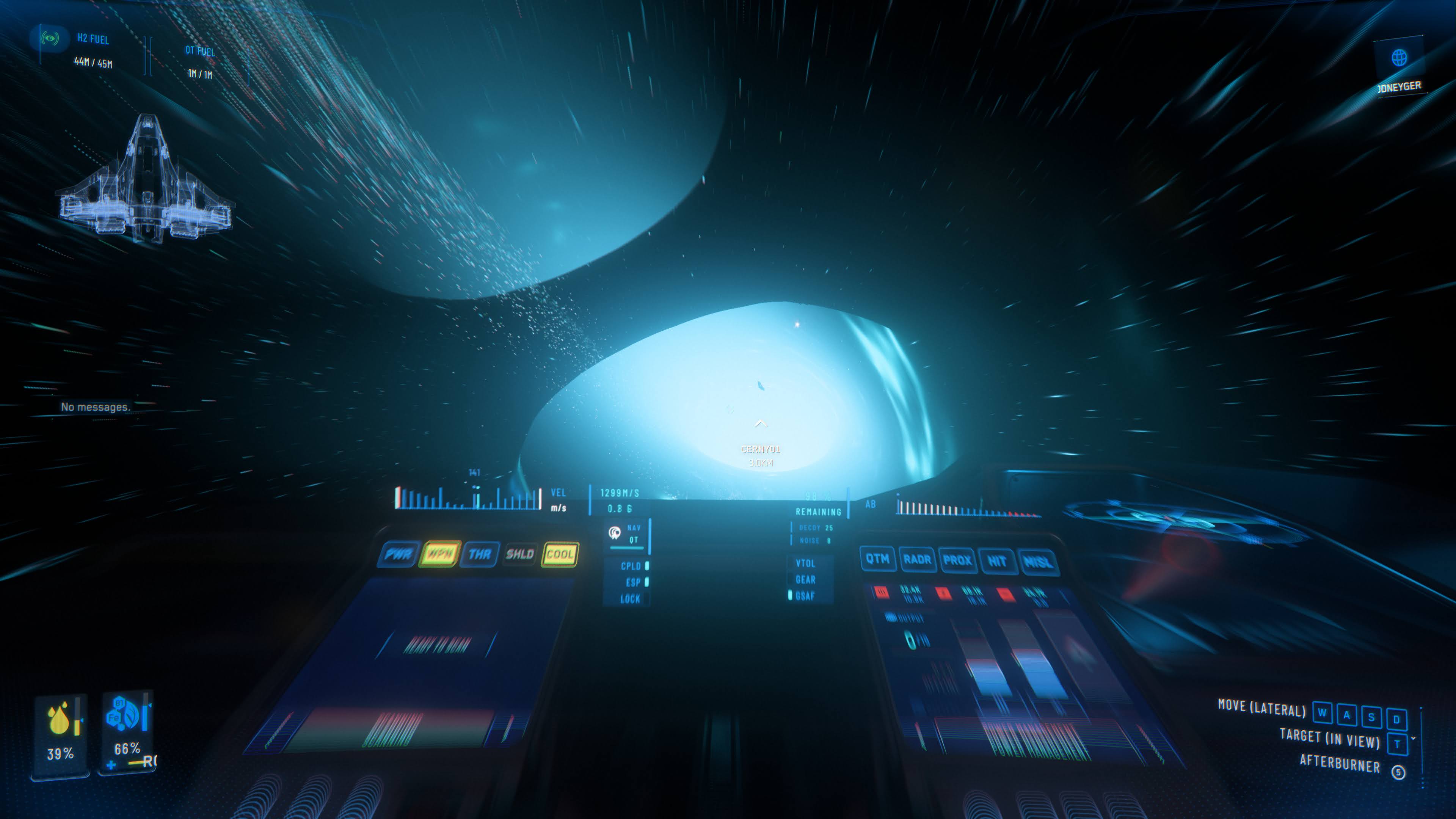

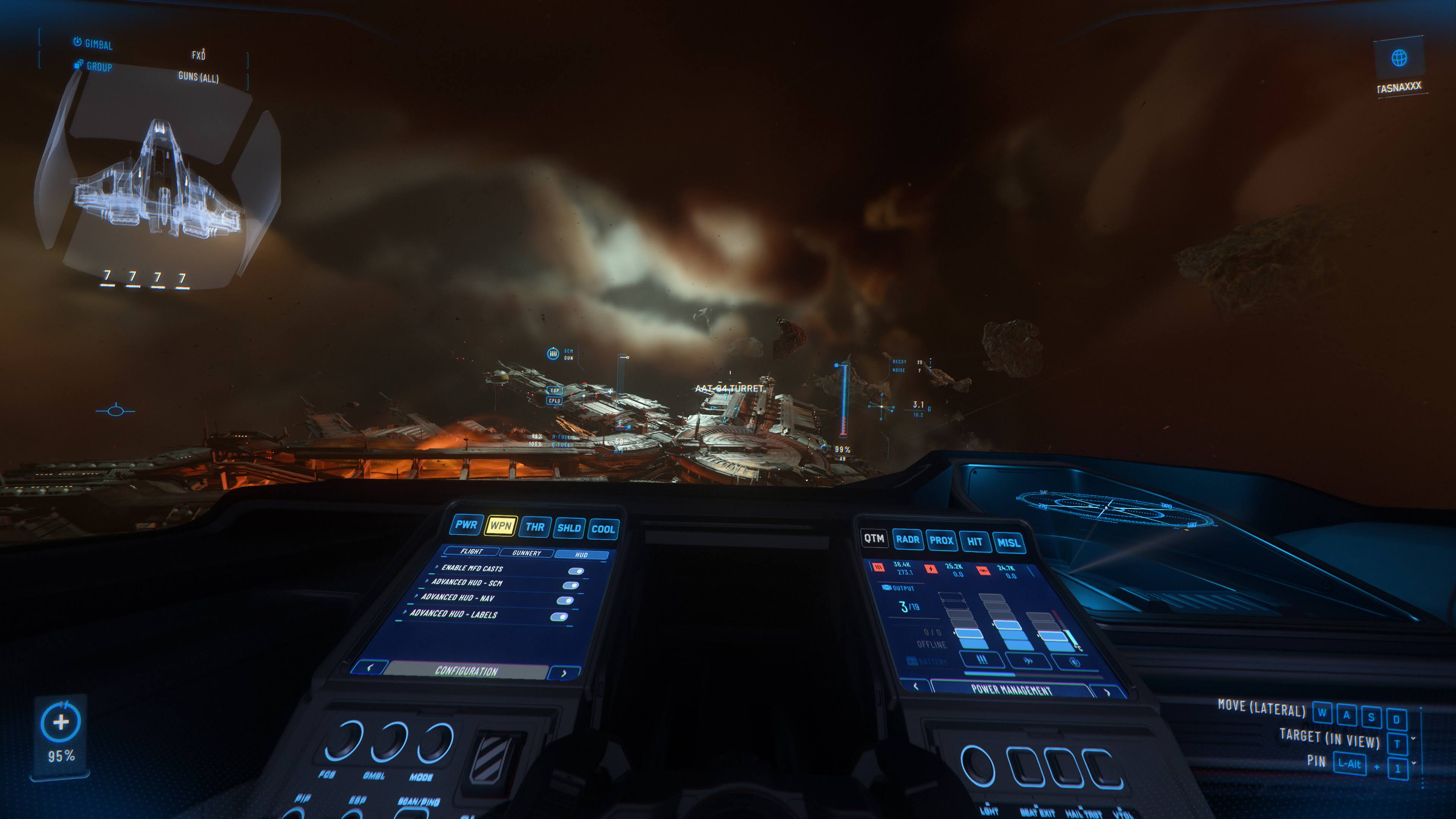

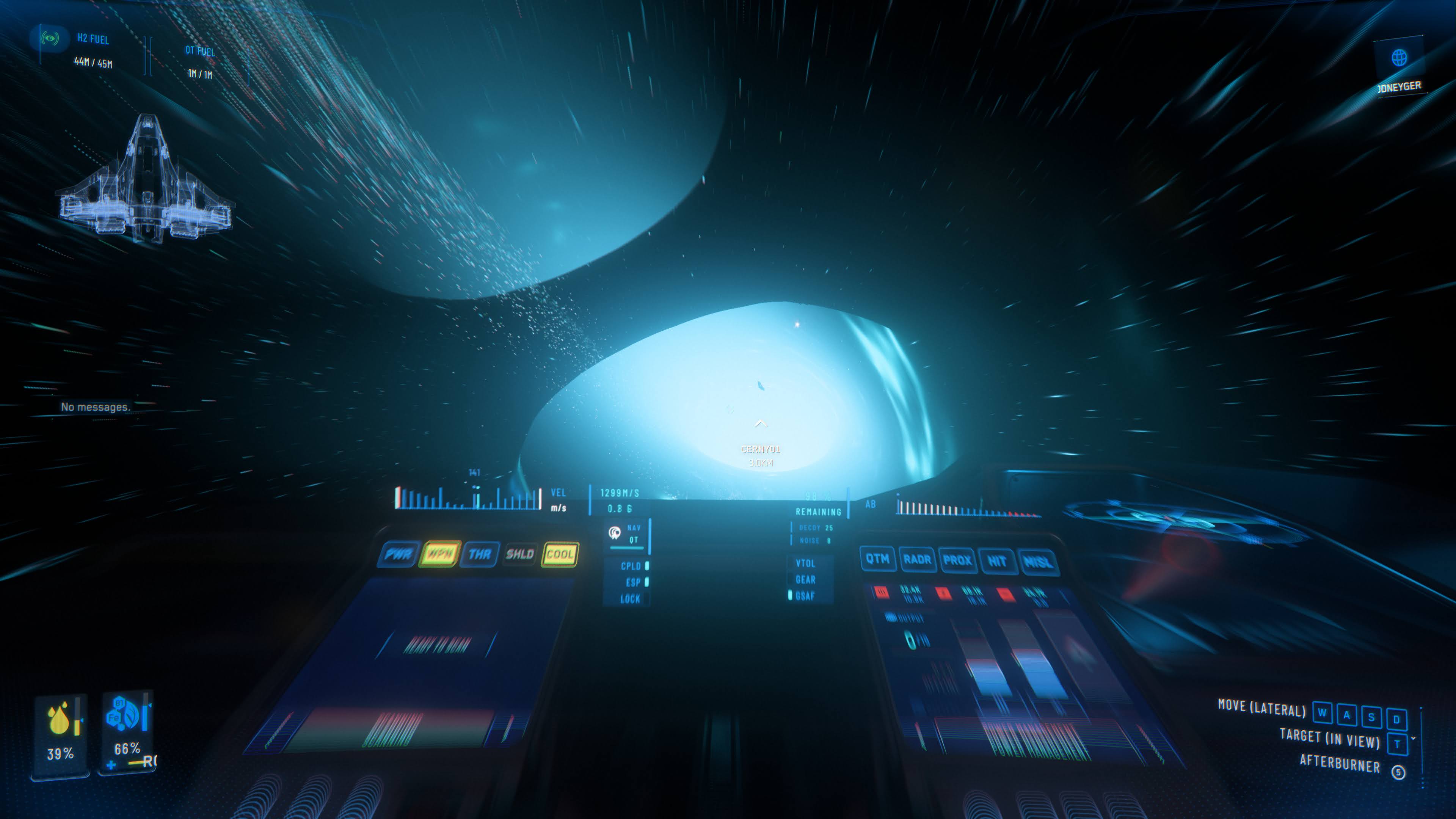

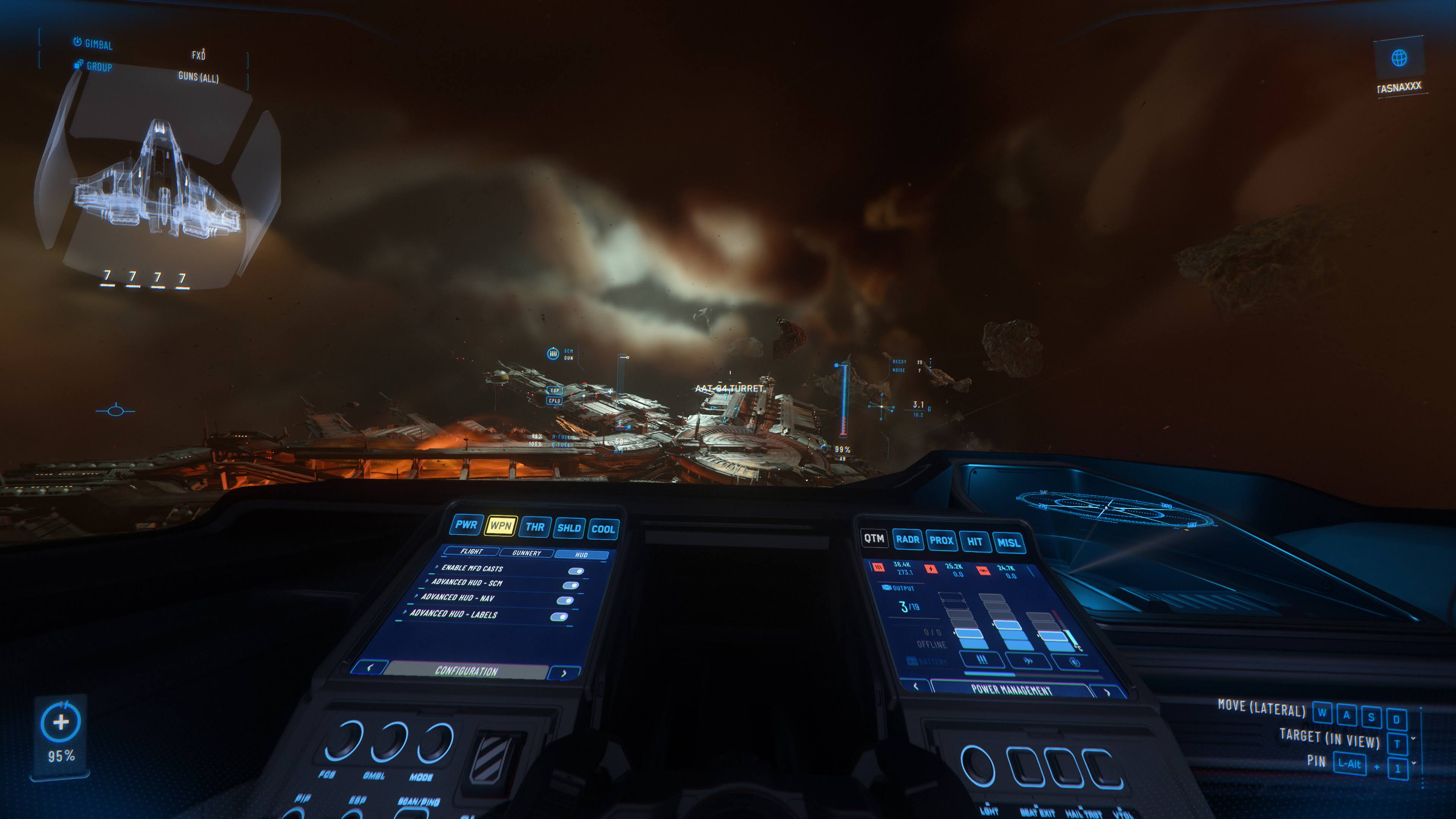

Next-gen graphical fidelity has arrived with Star Citizen's Alpha 4.0 update. I expect graphics like this from the next-gen PS6, or I will be disappointed. These are all personal screenshots I took while playing the game. Truly insane graphics to witness in person; the shots don't do the game justice...


ResetEraVetVIP

Member

I have always thought Star Citizen looked insane visually in most parts.

AeonGaidenRaiden

Member

You have got to be kidding…

Why would I be kidding? Please don't tell me you are treating prerendered cutscenes as "gameplay graphics". That is not at all what the actual game looks like. It's the same as people here using Uncharted 4 and TLOU 2 facial animations but only picking cutscene shots; take a screenshot of the same character in gameplay and it has half the detail and facial features. So no, I don't see what the big deal is, because nothing in this screenshot tells me "omg, game of the generation, it will be talked about for decades like we do about Crysis".

This is an actual screenshot representative of the overall look of the game:

This is definitely not a real gameplay screenshot. Just because you can pause it in photo mode doesn't make it "gameplay". It's a rendered scene, precalculated; you can't change a thing about it, it has to play out the way it was programmed, and you can't interact with it. Stop saying cutscenes represent actual game graphics.


Xtib81

Member

From my sister thread on ResetEra,

"Alanah Pearce has heard from devs who have seen Witcher 4's current build being played that the game does look like the trailer.

It's a different thing whether it stays there or has to scale back on graphics to meet performance goals."

"Watching Alanah Pearce on stream right now and she says she knows people who have played a build of The Witcher 4 and it apparently looks as good as the trailer. Just an anecdote, but it gives me hope."

W4 will melt eyes for sure, but you'll need a powerful rig: either a beefy GPU or a next-gen console.

Johnny Concrete

Member

Sure, but when the game actually releases (2029?), it will be nothing special anymore.

The safe bet would be an unannounced Nvidia graphics card, just like the caption on the trailer said.

On what platform, though? Sure, it could look pretty close to the trailer if she saw it running on a maxed-out PC.


Here are some gameplay screenshots, not cutscenes.

Feel Like I'm On 42

Member

Cutscenes, though... real time, yes, but games never look as good in actual gameplay.

It's not precalculated; that's how Hellblade 2 looks on my PC, in real time:

The second picture is not a cutscene, just gameplay.



In fact, as DF said in their review of Hellblade 2, the game impresses more during gameplay than during cutscenes.