Noctis0Caelum

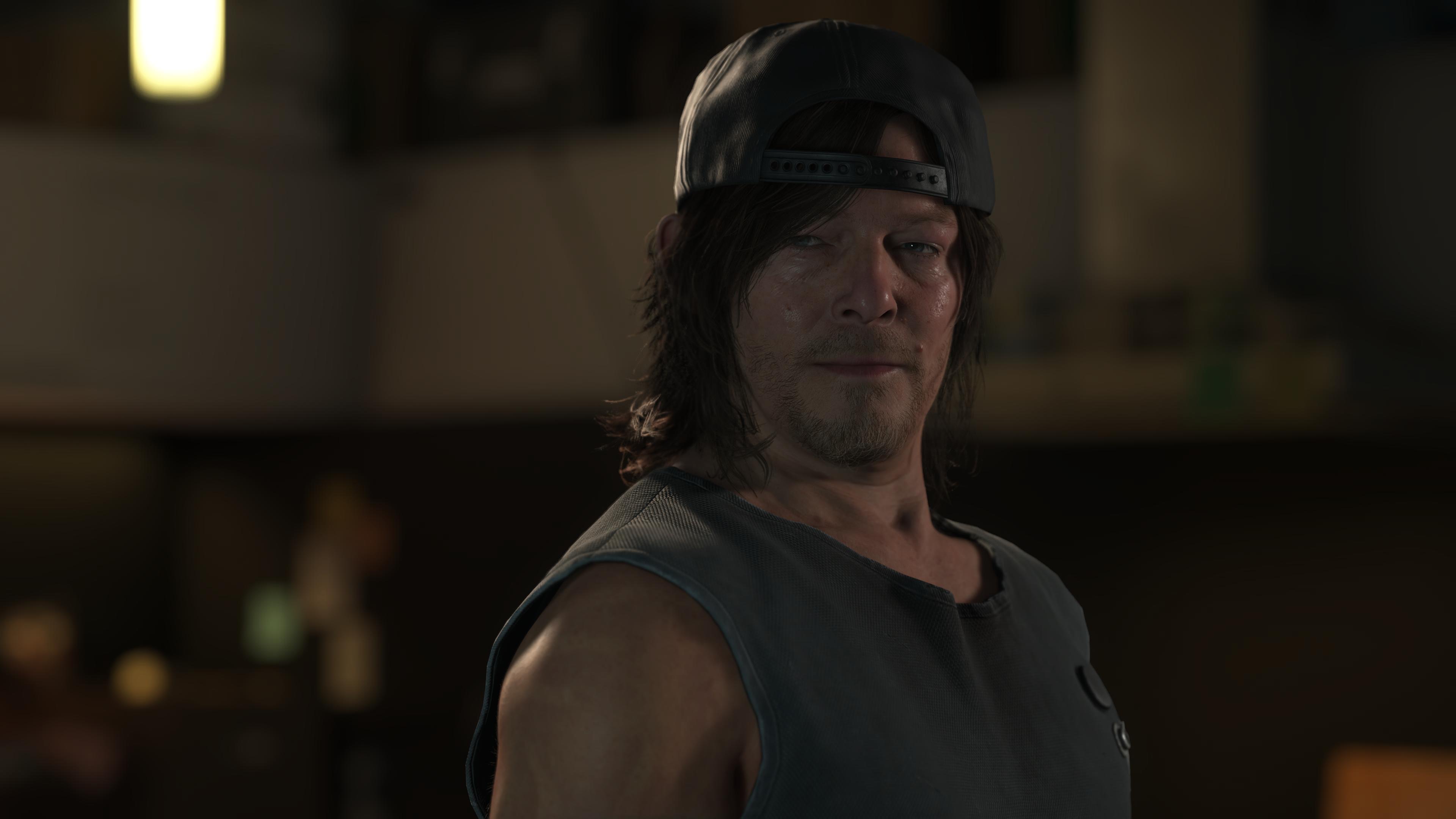

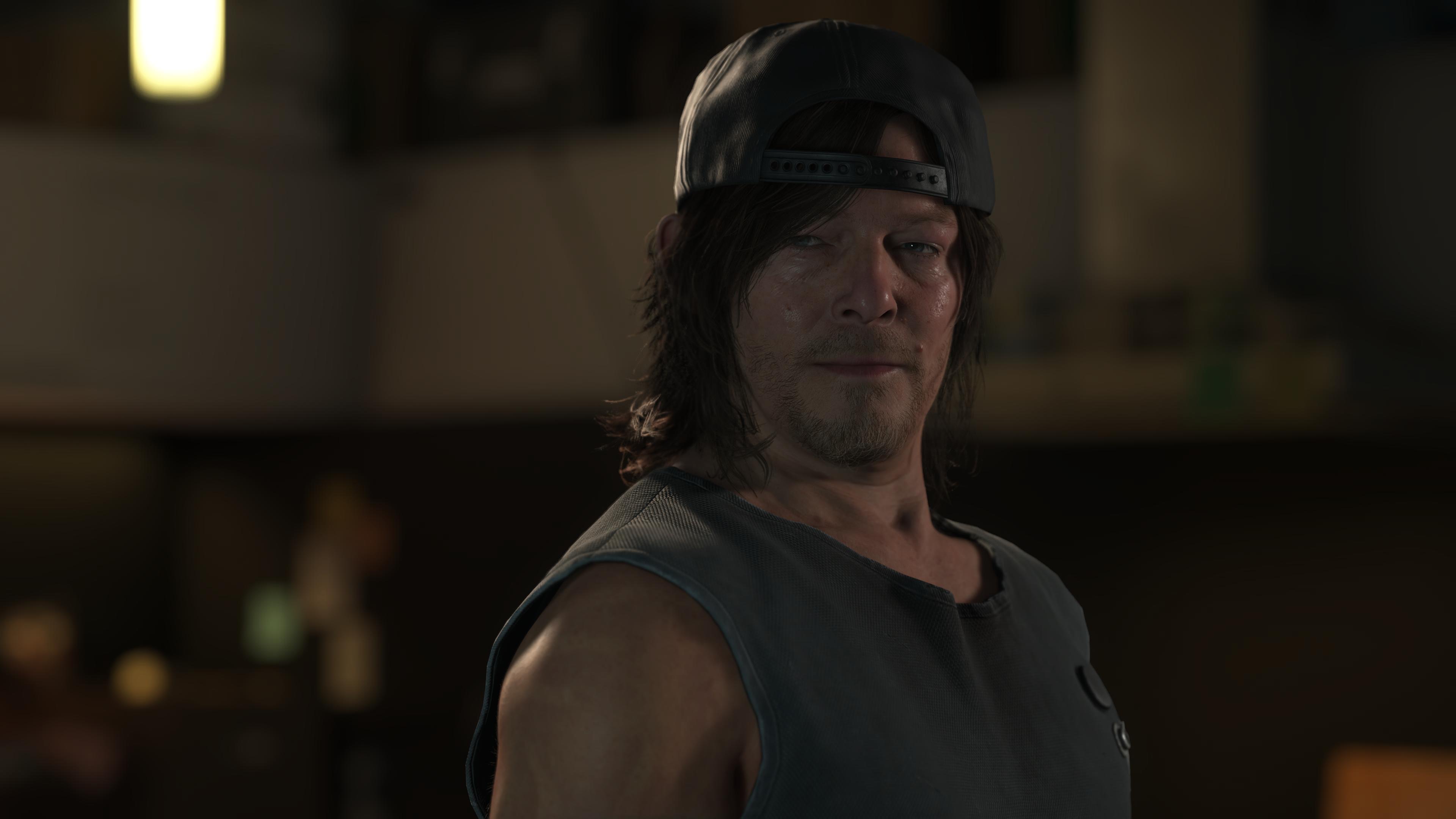

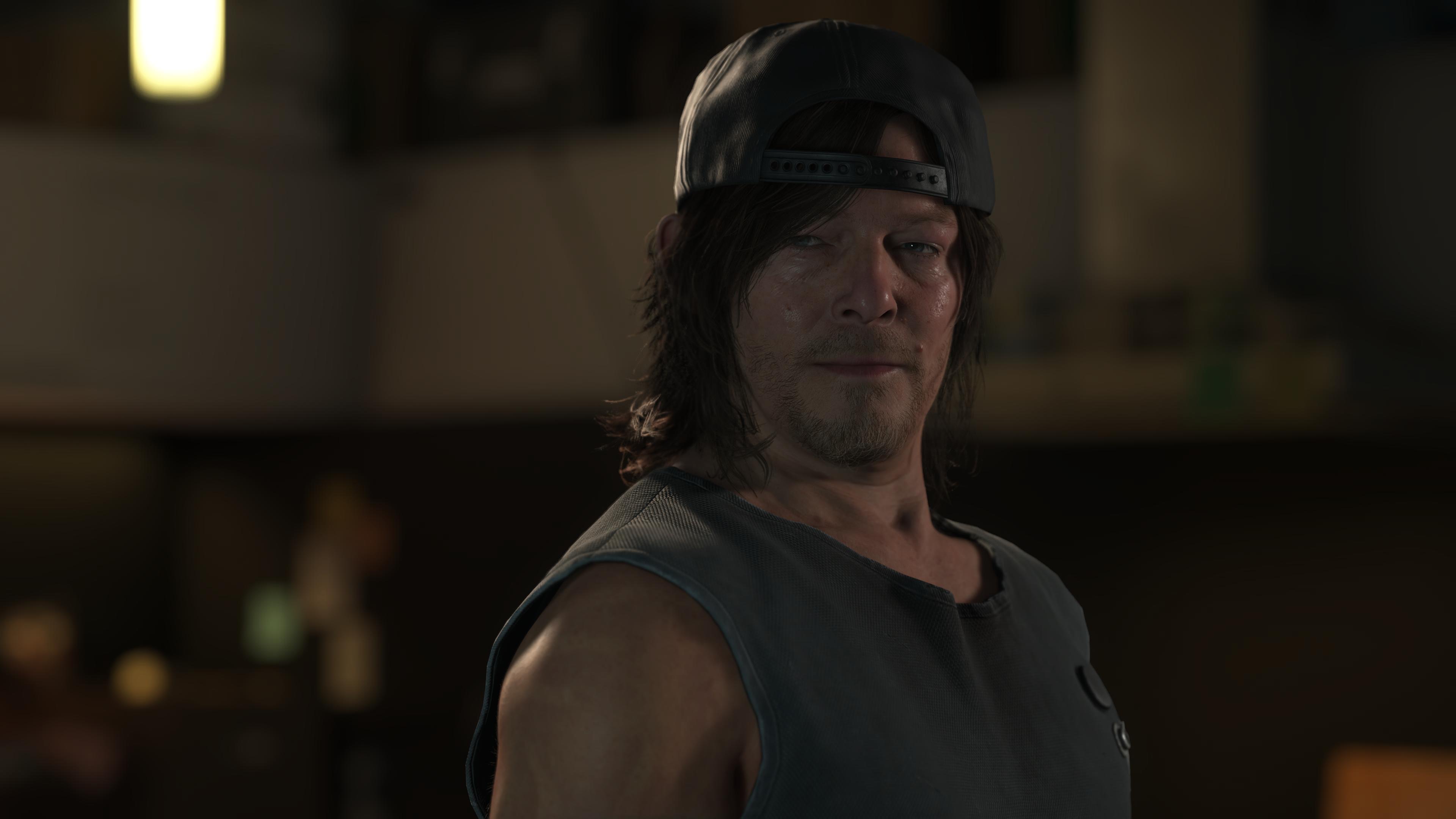

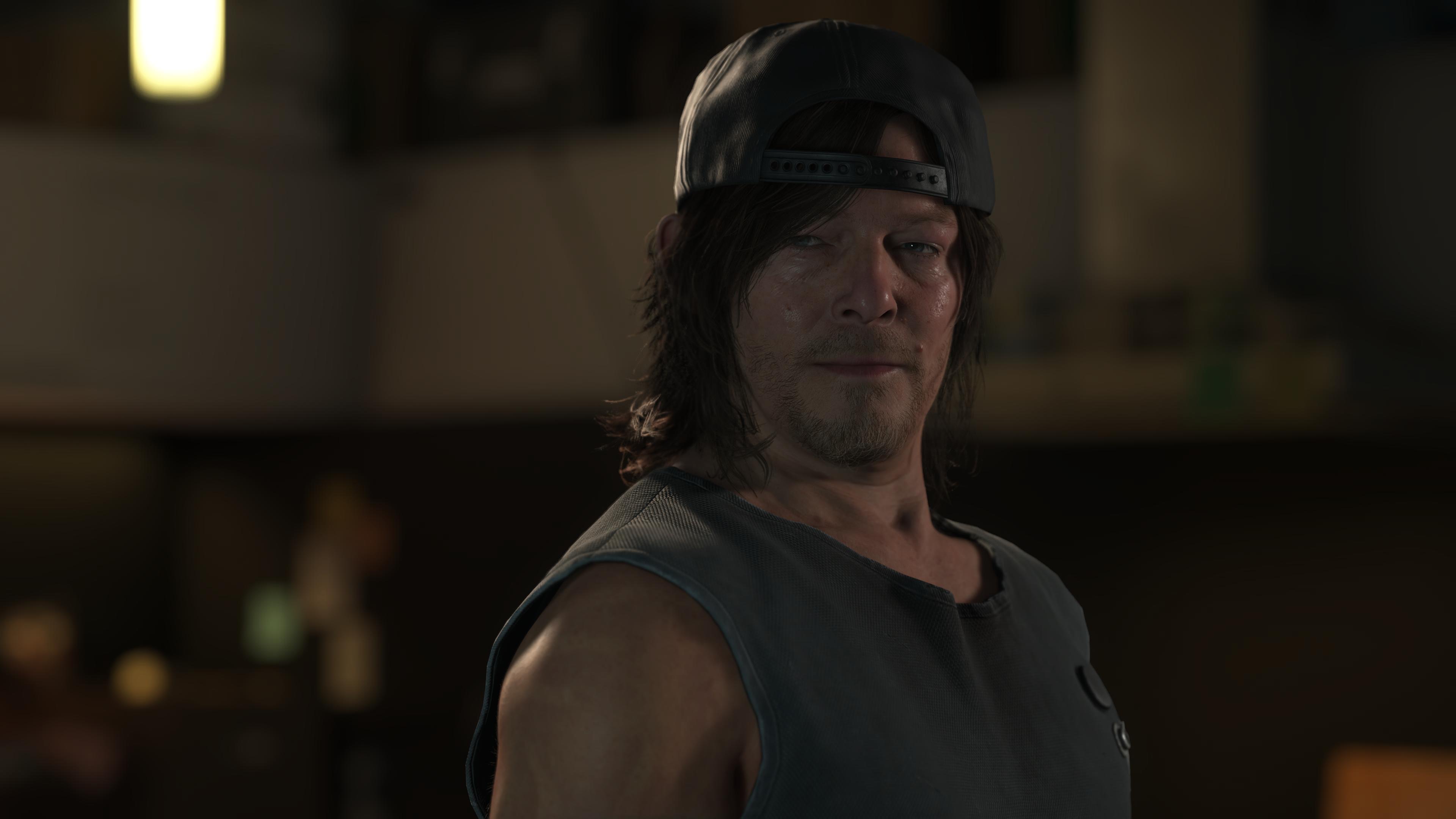

naaaah, DS2's hair and beards don't look as good as H2's, and these are cutscenes, while H2's in-game graphics always look like that.

"Kojima san" and his team have write access to the Decima engine. Kojima san literally chose the engine's name. Kojima san had 6 years to add ray tracing, either alone or by partnering up with GG, and failed miserably.

Around 30 games released in the PS5's first year had ray tracing support, mostly RT reflections or shadows. Nowadays, in 2025, real-time GI, via either software or hardware RT, is pretty much standard, and baked lighting is on its way out. The Callisto Protocol added three RT effects in 2022, and Star Wars Jedi: Survivor added three RT effects, including RTGI, four months later. Both are UE4 games that still hold up next to UE5 games.

No one should get a pass 5 years into this gen.

They don't need to create a new engine; they just need to update their current one. RAGE 9, UE5, Snowdrop, and Anvil, for example, are just updated versions of engines from previous generations with new features like RTGI, virtual geometry, etc.

The biggest visual difference between Decima's DS2 and, say, Hellblade 2 comes down to geometric detail.

UE5 can throw up tons of polygons, and that gives the visuals a substantial, weighty feel.

Until GG creates a new engine that can use mega-geometry and such, I don't think Kojima-san can do much besides designing the gameplay and maxing out their artwork and game performance.

DS2, like Horizon, uses MetaHuman models for the characters... Don't blame Kojima san. His team did their best with the character models and superb artwork to hide the clearly dated Decima engine.

Spider-Man 2, HFW, Wolverine, Intergalactic: they all look weak.

As a developer, you just can't make a UE4 game look better than a UE5 one. FF7RB looks dated no matter how much artwork is poured into it.

Now that's what all mid-to-late current-gen games should look like.

Lol, "should look like" is such a boring, overused statement.

Thank god games don't look like that

Did any games use it on Vega? It seems to have been undercooked when AMD dropped the driver path. I guess people expected AMD to hijack a game and squeeze performance out of it with this? That would have been a monumental task to develop on a game-by-game basis and then keep supporting, but apparently AMD engineers claimed they would?

Barely visit this forum these days.

I vaguely recall some performance upgrades to software Lumen in 5.2, but I can't find anything on it right now. I believe sncvsrtoip saw something on B3D back in the day.

No. It won't be a baseline.

Next-gen, buddy, next-gen.

That is gonna be the baseline for all decent devs.

Maybe even some Sony studios, if they don't completely turn into Nintendo 2: The Revenge.

That's why I said any decent dev. I should have added: any decent dev that wants to push graphics.

No. It won't be a baseline.

Just as Uncharted 4 is not a baseline for this gen.

It all depends on the devs, and I hope the "game look" still remains. As I've said, the universally good, CGI-looking UE5 realistic look is not what everyone should be going after.

We are speeding towards a boring future where every game looks the same. Let them be games for a little bit longer.

I already miss clever graphics and tricks... idk... like RE4 or the aforementioned UC4.

Fair enough.

Dude, my main point is not about hyperrealism. I'm talking about graphics tech like RTGI and virtual geometry, which can also be used in Pixar-looking titles to give them in-game graphics comparable to Pixar movies, which aren't hyperrealistic. We're talking about graphics tech, dude, and I praise it wherever I see it, like when I praised DS2's cutscene character models in previous comments.

Noted. It didn't cross my mind, but they are indeed on the path of geometry pipeline changes, right after geometry shaders, I guess.

I can't say I know much about their implementation, but this post seems to cover some of the hurdles that differentiate the primitive shader tech from the later mesh shader implementation.

idk. I'm having this reappreciation for the graphics of older games on custom engines.

Better graphics ≠ everything looking the same

I don't know why you keep bringing this up as if it's a thing. Game visuals are more diverse than they've ever been.

Yeah, but with the older engines they still strove to create realistic graphics; it's just that everyone had their own take and cheats, and thus got different results.

There is a difference between ambient and specular GI. There's no directionality to ambient probes. They paint the environment with a flat bounce with zero direction to it, no matter how dense they appear.

what?

Takes the color from the environment to paint the textures. Seems like a pretty usual method to cheat RT.

Yeah, that talk with the AMD VP was very enlightening; we now know that Mesh Shaders on AMD cards compile down to Primitive Shaders.

As others have pointed out, Vega and even RDNA 1 supported this at the hardware level, but the driver stack was extremely poor. Mesh Shaders are the future, but there are also competing solutions, most notably Epic's Nanite.

If you want to find out more, you can dig into LeviathanGamer2's old tweets, as he has covered Mesh/Primitive Shaders extensively over the past 5 years.

We are, but not in the way you meant it.

Here, we are being echo-chambered in graphics-whore communities into thinking that this is the direction the industry is going, with some of us bemoaning that "OOOH-ALL-GAMES-WILL-LOOK-AMAZING-AND-REALISTIC-SO-BORING!!!" But let me share an anecdote. I watched Sony's latest State of Play with my brother, who last played video games sometime in the early-to-mid 2010s and remembers how all the devs back then were trying to beat each other with better graphics and physics. While watching the State of Play, he was dumbfounded by EVERY game having some cheapo stylized/artsy look instead of good realistic graphics. He hadn't followed the gaming industry for the last 15 years and expected that all games would look similarly amazing and realistic by now.

The majority of the games do indeed look pretty similar now. Like stylized cartoon crap.

It looked OK, even by PS4 standards. It's just plastic-looking, and the detail is severely lacking (especially because of the lack of SSS).

Using real famous actors only helps trick the brain into thinking that they look more real than they actually do.

Look at how good Takeshi Kitano looks in a freakin' AA Yakuza game on PS4.

You're oversimplifying things here. If we were to only take into consideration very different art/graphical styles, we would have very little to choose from.

Hi-Fi Rush looks as different from Mario as Mario does from Hellblade 2.

What I said was simple.

You're oversimplifying things here. If we were to only take into consideration very different art/graphical styles, we would have very little to choose from.

Things aren't so black and white.

But this is pretty much a given: realistic styles are more similar to each other than hand-drawn animation is to CGI.

What I said was simple.

Games with totally different art styles look more different than realistic games with different settings/architecture/tone/etc.

That doesn't mean they look exactly the same, but they are obviously more similar than a modern Pixar movie and a 1960s Disney movie are.

These things are obviously on different levels when it comes to looking unique

Played a couple of hours of Death Stranding 2, and man, this is as last-gen as it gets. This isn't even PS4.5 like Demon's Souls or Ratchet; this is like Spider-Man 2 at best. The lighting is barely better than last gen. There is some decent-looking ground-level detail, but everything else looks last-gen at best: the foliage, the rocks and mountains, the draw distance, the volumetric lighting effects. Everything.

Cutscenes look a generation ahead. Phenomenal work here in terms of lighting and level of detail.

They fuc.ed up everything except the cutscenes.

A generational jump compared to DS1, maybe.

Unsurprisingly, the nitpicking and hyperbole have started. The bias is real.

We do this shit with every game, and DS2 doesn't get a free pass because it's a Kojima game, sorry.

Saying that DS2 is not even PS4.5 is just ridiculous when it's one of the best-looking PS5 games. You guys have to come to your senses.

We do this shit with every game, and DS2 doesn't get a free pass because it's a Kojima game, sorry.

And Slimy is one of the biggest DS1 fanboys in here; half the things he says about that game make me laugh hysterically. You really picked the wrong guy.

Dude doesn't even need to cherry-pick; this is a pic that a dude on reee posted to gush about the game...

We always exaggerate a bit in here, but if you had told me that the pic I posted was from DS1, I would have believed you in a heartbeat.

And I hope it stays that way. Mandatory 30fps and below needs to stay dead, regardless of the graphical consequences.

I don't think this game looks last-gen at all. It looks next-gen with some cuts, because the PS5 is not a very powerful console and because everyone nowadays is obsessed with 60 fps.

It looks pretty much exactly how a last-gen game with higher geometry LODs/detail would look.

Watching DF as if their content is just comedy skits seems to be the only correct way of watching their videos.

It looks pretty much exactly how a last-gen game with higher geometry LODs/detail would look.

I've watched John's coverage on DF and was seriously puzzled by some takes, as they sounded like sarcasm to me most of the time.

But is it better than Mercedes or even Audi?

DS2 is no better than BMW.

PS5 or PC pics?

It doesn't look better than Ratchet and Demon's Souls, which I consider PS4.5 games. This is firmly in cross-gen territory, like HFW, Astro Bot, GOW, GT7, and Spider-Man 2.

I literally shook Kojima's hand at the MGS4 launch in Times Square. I have repeatedly said I would have his babies. My username is Snake and my current avatar is a DS2 one. If anything, I have a pro-Kojima bias. But this is not next-gen. I would say it's like MGSV back in 2015: it was last-gen, but with a decent upgrade over MGS4. But its forests couldn't compare to The Witcher 3, which came out the same year, and the rocky terrain of Afghanistan simply didn't look PS4 quality.

How many games look better on PS5?

It doesn't look better than Ratchet and Demon's Souls, which I consider PS4.5 games. This is firmly in cross-gen territory, like HFW, Astro Bot, GOW, GT7, and Spider-Man 2.

You can't just say it looks like one of the best. Like how? The lighting is last-gen. The level of detail is last-gen. The foliage is last-gen. The volumetric effects in sandstorms are last-gen. The rain is last-gen. What exactly is making you think this is a next-gen game or on par with Demon's Souls and Ratchet?

Does it matter?

You're still not answering my question or addressing my claims. The lighting, foliage, rain, sand, and detail are all last-gen. Do you disagree?

The fact that FW, Demon's Souls, etc. are cross-gen games doesn't mean they would've looked much better had they been next-gen only. Those games are the most beautiful games we have on PS5, even after all these years.