Leonidas

AMD's Dogma: ARyzen (No Intel inside)

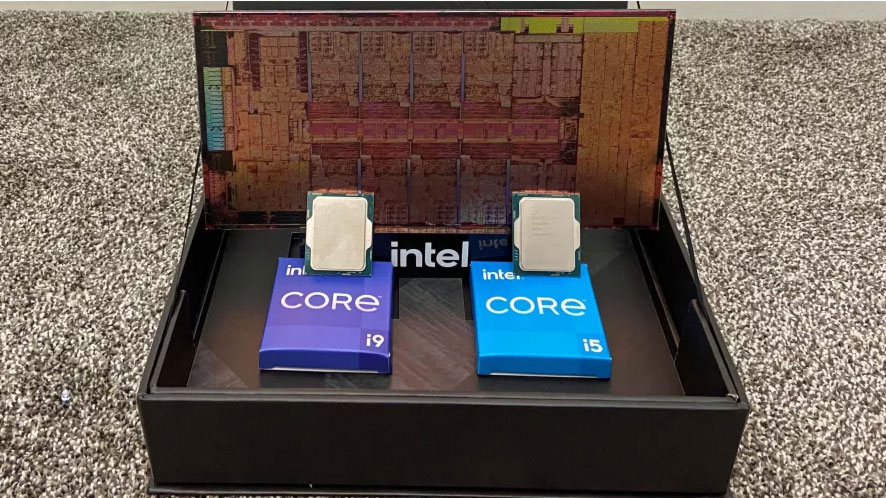

Intel Core i9-12900KS Review: The Fastest Gaming Chip Ever

Intel's Special Edition hits 5.5 GHz with ease.

Make no mistake: the Special Edition Core i9-12900KS is the fastest desktop [gaming] PC chip ever built.

Intel Core i9-12900KS Review - The Best Just Got Better

The Intel Core i9-12900KS is the company's new flagship Alder Lake processor. After our review, we can confirm that it is the "world's fastest gaming CPU," but that comes at a price not only in terms of dollars, but increased power draw and heat output, too.

The 12900KS is without any doubt the fastest gaming processor available.

Alder Lake is a beast!