Kataploom

Gold Member

> Warcraft 3?

Uh, it's an old post so I don't see how it relates to WC3 lol

IDK if it breaks its logic at higher frame rates, but as it's a game that should be played with M&K, I wouldn't recommend any FG.


> The most annoying thing is people casually equating generated frames to real frames. Generated frames mean nothing. They have no impact on the game's input, physics, simulation, etc. It's just annoying and I wish this trend were dead and buried. It's like AI-assisted interpolation. Just trash.

Because most people (me included) don't give a shit about "ultra low latency". For me 60fps is perfect in responsiveness; even 30fps might be responsive enough if well implemented (I beat Sekiro on PS4, a timing-based game, at 30fps). But I care a LOT about smoothness, and in this case these

> The most annoying thing is people casually equating generated frames to real frames. Generated frames mean nothing. They have no impact on the game's input, physics, simulation, etc. It's just annoying and I wish this trend were dead and buried. It's like AI-assisted interpolation. Just trash.

You're acting like AI interpolation such as DLSS and the average TV interpolation that was the trend a decade ago are equal. They aren't.

No steam deck version? It's crying out for this!

> You're acting like AI interpolation such as DLSS and the average TV interpolation that was the trend a decade ago are equal. They aren't.

Motion interpolation in TVs and framegen are near identical, bar where the processing is done. Framegen is like Motionflow 120Hz and multi-frame gen is like Motionflow 240Hz. Sure, the algorithms have improved from a decade ago, but they're the same thing and move on near-identical timelines.
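To make the TV-interpolation comparison concrete, here is the crudest possible interpolator: a per-pixel cross-fade between two real frames. This is only an illustration under simplifying assumptions (frames as flat lists of grayscale values; `blend_frames` and `in_between_frames` are hypothetical names). Neither TV motion interpolation nor GPU frame generation actually works this way: both estimate motion between frames instead of blending, because a plain blend produces ghosting on anything that moves.

```python
def blend_frames(a: list[float], b: list[float], t: float) -> list[float]:
    """Cross-fade two equally sized frames; t=0 returns a, t=1 returns b."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def in_between_frames(a: list[float], b: list[float], count: int) -> list[list[float]]:
    """Return `count` evenly spaced blended frames strictly between a and b.

    count=1 corresponds to 60 -> 120 output, count=3 to 60 -> 240 output.
    """
    return [blend_frames(a, b, (i + 1) / (count + 1)) for i in range(count)]


if __name__ == "__main__":
    prev_frame = [0.0, 100.0]  # toy two-pixel "frames"
    next_frame = [100.0, 0.0]
    print(in_between_frames(prev_frame, next_frame, 1))
    print(in_between_frames(prev_frame, next_frame, 3))
```

With one in-between frame, both toy pixels land at 50.0: the object is half-visible in two places at once, which is the ghosting that motion-estimating interpolators try to avoid.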

Obviously generated frames shouldn't be considered the same as real frames, and you need enough real ones as a prerequisite to generate the fake ones. But I actually prefer fake frames over real ones, since it's impossible to tell FG is on: in real time you're looking at both real and generated frames in conjunction. It's not like you're capturing a video and then stopping on one specific AI-generated frame.

> Motion interpolation in TVs and framegen are near identical, bar where the processing is done. Framegen is like Motionflow 120Hz and multi-frame gen is like Motionflow 240Hz. Sure, the algorithms have improved from a decade ago, but they're the same thing and move on near-identical timelines.

I have no idea how either works on a technical level. I just know that one looks good, with many upsides and some small downsides, whereas the other one looks just plain bad ("bad" might be putting it too lightly).

Playing videogames on a tiny screen never looks good.

I want to go back to when we had actually good picture clarity, because if the screen or projector were a CRT this would never be a problem. All this adds is the soap opera effect and smoothing. A good CRT at 20 fps looks better in motion than this at 60 magic.

> Playing videogames on a tiny screen never looks good.

Tell that to all the Switch and Steam Deck users. Screen size rarely affects immersion.


> Easy to spot interpolation on a TV immediately because it looks and feels terrible, not to mention the input is just 24fps. Can't tell at all with DLSS or even FSR 3.1 for that matter, but those start and end at much higher framerates.

The input isn't just 24fps; I'm not sure where you're getting that from. It's up to 60fps with Motionflow 120Hz and 240Hz, meaning it adds one frame in between each pair for 120fps and three frames in between for 240fps, from a 60fps source. The quality is comparable, with the same artifacts as framegen. It's very, very similar tech; only where the processing is done has changed. It's all "fake frames".
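The Motionflow arithmetic in the post above can be written out directly: an N-times output needs N - 1 generated frames per real frame. A minimal sketch, assuming the output rate is an exact integer multiple of the source rate (the function name `interpolation_plan` is made up for illustration, and the sketch simply rejects non-integer ratios):

```python
def interpolation_plan(source_fps: float, output_fps: float) -> tuple[int, float]:
    """Return (generated frames per real frame, ms between displayed frames).

    Assumes output_fps is an exact integer multiple of source_fps,
    e.g. 60 -> 120 or 60 -> 240; other ratios are rejected here.
    """
    multiplier = output_fps / source_fps
    if multiplier < 1 or not multiplier.is_integer():
        raise ValueError("output rate must be an integer multiple of the source rate")
    generated_per_real = int(multiplier) - 1
    display_interval_ms = 1000.0 / output_fps
    return generated_per_real, display_interval_ms


if __name__ == "__main__":
    for target in (120, 240):
        gen, interval = interpolation_plan(60, target)
        print(f"60 -> {target}: {gen} generated per real frame, {interval:.2f} ms apart")
```

The same function gives 4 generated frames per real frame for 24fps film shown at 120fps.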

> What does that do to emulators?

Tried it with 16-bit games and arcades. It looks cool, but it's not very useful. One frame of lag and you start missing jumps in Mario games.

8/16-bit games run mostly at 60fps, bumping them to a 120fps interpolated mode, that's interesting!

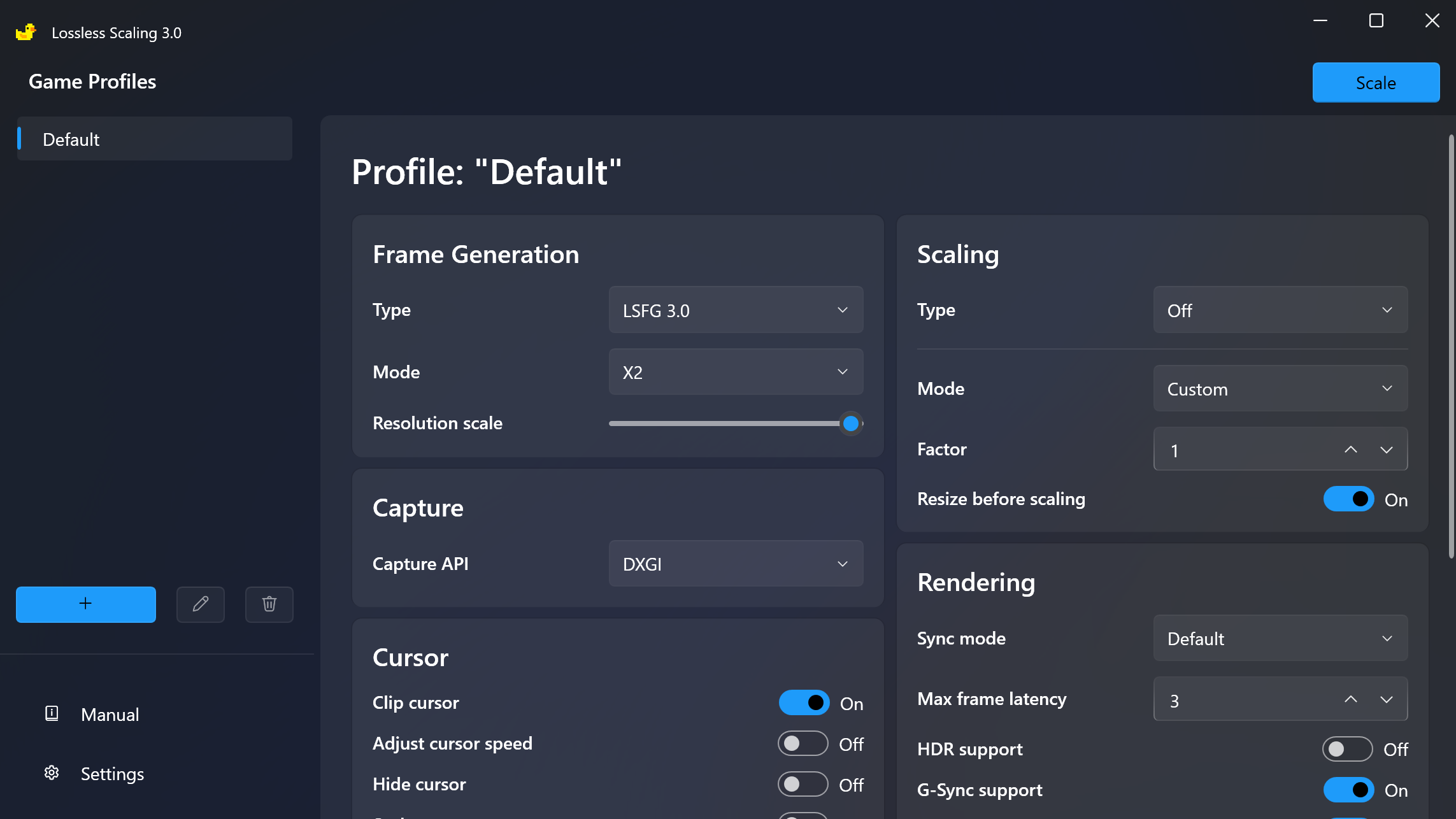

Looking forward to testing out the new update tomorrow. Gotta be careful using x3 or x4, it can cause motion sickness in some games.

Sometimes I use it on anime. Wouldn't say it's "better", but it's cool to fuck around with.

> The input isn't just 24fps; I'm not sure where you're getting that from

The input has a variable range, but when all the content is 24fps it doesn't matter. Movies and TV shows are pretty much all 24fps.

> Motion interpolation in TVs and framegen are near identical, bar where the processing is done. Framegen is like Motionflow 120Hz and multi-frame gen is like Motionflow 240Hz. Sure, the algorithms have improved from a decade ago, but they're the same thing and move on near-identical timelines.

I can tell right away that you haven't experienced framegen using this app, if you are really comparing it to TV interpolation techniques.

> I can tell right away that you haven't experienced framegen using this app, if you are really comparing it to TV interpolation techniques.

This Lossless Scaling app, no, but framegen in general, of course I have.

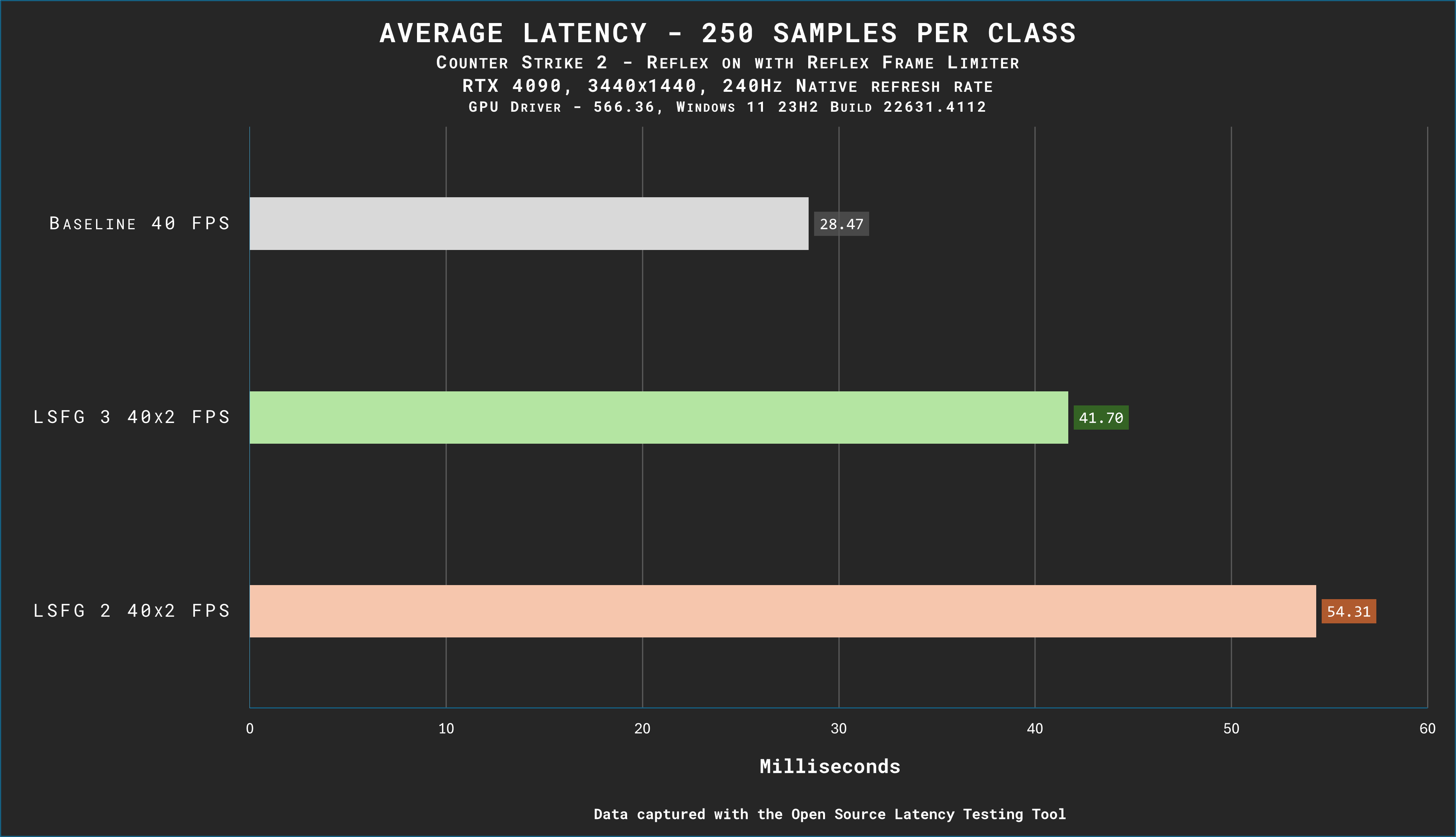

What matters most in framegen is latency. And no TV will offer latency as good as this app using your GPU.

> This Lossless Scaling app, no, but framegen in general, of course I have.

It's pretty damn good, especially if used in games where the movement is relatively smooth. I would never recommend it for competitive FPS games, though. But in flight simulators or more story-heavy games, it's mostly upsides and very few downsides. I'm fucking around with it in Cyberpunk, comparing it to DLSS frame gen on a laptop. I'm keeping it at no more than 3x, as 4x already causes too much lag and too many artifacts, but it's pretty good. It's even better on Windows handhelds, where you need to compensate for a low-powered GPU anyway.

> Just don't ask me about input latency.

So, how's the input latency?


> So does this thing affect GPU performance? VRAM requirements? Surely you can't 2x the fps without some catch

GPU cost and VRAM requirements are broadly in line with DLSS FG. The catch is that there is more artifacting here than with DLSS FG. Can't speak for FSR, as I have an Nvidia card. On the other hand, it's nice to have an FG solution that can be universally applied (even for movies!).

> So does this thing affect GPU performance? VRAM requirements? Surely you can't 2x the fps without some catch

It has a GPU/CPU cost, so you don't go from 60fps to 120fps. For example, you go from 60fps to 105fps with added latency, because your true fps is now 52.5fps.
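The numbers in that example follow from a simple relation: at x2, only every second displayed frame is rendered, so the real rate is the displayed rate divided by the multiplier. A small sketch of that arithmetic (function names are illustrative; the 60 and 105 fps figures are the poster's example, not a benchmark):

```python
def real_fps(displayed_fps: float, multiplier: int) -> float:
    """Rendered frames per second behind an xN frame-generated output."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return displayed_fps / multiplier


def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames at a given rate, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    native = 60.0
    base = real_fps(105.0, 2)  # the poster's example: 105 displayed fps at x2
    print(f"native: {native} fps, {frame_time_ms(native):.1f} ms per real frame")
    print(f"with x2: {base} real fps, {frame_time_ms(base):.1f} ms per real frame")
```

In this example the counter shows 105, but the GPU now renders 52.5 real frames per second instead of 60, each taking roughly 2.4 ms longer (about 19.0 ms versus 16.7 ms).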

x20 frame gen lol. Eat your heart out, Jensen, and your puny 4x!

I kid ofc and expect an artifact mess at anything over 4-5x. Excited to try it out after work nonetheless!
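One way to see why artifacts pile up at high multipliers: at xN, N - 1 of every N displayed frames are generated, so the share of frames that were never rendered grows quickly. A quick sketch (the function name `generated_share` is hypothetical; it says nothing about image quality itself, only about the ratio):

```python
def generated_share(multiplier: int) -> float:
    """Fraction of displayed frames that are generated at xN frame generation."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return (multiplier - 1) / multiplier


if __name__ == "__main__":
    for m in (2, 3, 4, 20):
        print(f"x{m}: {generated_share(m):.0%} of displayed frames are generated")
```

At x4 three out of four frames are generated; at the joke x20 setting it is 19 out of 20.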


> This was always my chief complaint with this software. Who on earth wants to play with 105 laggy fps over 60 real and responsive ones? I think it's best suited to very high baseline performance, but again, it's just superior to stick to real frames then.
>
> I can't figure out why this app exists.

Have you used it? In my experience the input lag is very minimal. The difference between 60 and 105 fps is massive.


> Can't see any artifacts now, reduced lag is noticeable
>
> Brilliant update

Cool, that's great to hear.

> Can't see any artifacts now, reduced lag is noticeable

I'm sorry, but reduced lag is... impossible.

> I'm sorry, but reduced lag is... impossible.

I'm using x2 mode to bump fps from a locked 30 fps to 60 in games like SnowRunner.
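For a locked 30 fps game at x2, each generated frame is slotted halfway between two real frames, which is where the extra smoothness comes from. A minimal sketch of the ideal presentation timeline, assuming perfectly even pacing and ignoring the extra delay the interpolator adds (`presentation_timeline` is an illustrative name, not part of any real tool):

```python
def presentation_timeline(base_fps: float, multiplier: int, real_frames: int) -> list[tuple[float, str]]:
    """Ideal display times (ms, relative to the first real frame) for xN generation.

    Each real frame is followed by multiplier - 1 generated frames spaced evenly
    in the gap before the next real frame. Building those in-between frames
    requires the next real frame to exist already; this sketch only lists times.
    """
    base_ms = 1000.0 / base_fps
    step_ms = base_ms / multiplier
    timeline = []
    for k in range(real_frames):
        for j in range(multiplier):
            kind = "real" if j == 0 else "generated"
            timeline.append((round(k * base_ms + j * step_ms, 3), kind))
    return timeline


if __name__ == "__main__":
    for t, kind in presentation_timeline(30, 2, 3):
        print(f"{t:7.3f} ms  {kind}")
```

The real frames still arrive every 33.3 ms; the display just gets a new image every 16.7 ms.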

> I'm sorry, but reduced lag is... impossible.

He probably compared it to earlier versions. There is of course a little bit of lag, but it's not that bad. Link's head glitching while rotating the camera is less noticeable now (I'm using it on Twilight Princess for a 60fps experience).

Only "real frames" matter to lag, and real FPS suffers a bit with this enabled.
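The point that only real frames matter to lag can be put in numbers. Interpolation needs real frame N+1 before it can show anything between N and N+1, so each real frame reaches the screen roughly one real-frame interval later than without frame generation, plus the interpolator's own processing time. The sketch below uses that simplified model; it is an assumption for illustration that ignores render queues and display latency, and `processing_ms` is a placeholder parameter, not a measured Lossless Scaling figure.

```python
def added_delay_ms(real_fps: float, processing_ms: float = 0.0) -> float:
    """Rough extra display delay from interpolation-based frame generation.

    Simplified model (assumption): the newest real frame is held back for one
    real-frame interval so an in-between frame can be built, plus whatever
    processing time the interpolator needs.
    """
    if real_fps <= 0:
        raise ValueError("real_fps must be positive")
    return 1000.0 / real_fps + processing_ms


if __name__ == "__main__":
    # A higher displayed frame rate does not shrink this number; only the real rate does.
    for fps in (30.0, 52.5, 60.0, 120.0):
        print(f"{fps:>6} real fps -> at least {added_delay_ms(fps):.1f} ms added")
```

Under this model an update can still cut lag compared with an earlier version, by lowering the processing time or by making generation cheaper so the real frame rate rises, but the output can never respond faster than the real frames allow.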

> Tell that to all the Switch and Steam Deck users. Screen size rarely affects immersion.

Try playing on a small-ass monitor before saying this.

> Cool, that's great to hear.
>
> Especially the reduced lag.

Artifacts are definitely lower now, but still visible in my very limited testing with AC: Odyssey. The main artifacting is the fizzle around the player character model when turning the camera.

I can confirm lag to be reduced even when not using reduced frame resolution. Truly revolutionary.