I have to agree with this strongly. I hadn't gotten Days Gone at first because though it looked really cool to me, what I was reading about it gave me pause. Sounded like it was super buggy and maybe broken. I didn't pay attention to the reviews talking about how male-centric it was or how it was based on a "macho worldview" because frankly, I don't give a shit about that. Me caveman! Me shoot things and go BOOOM!

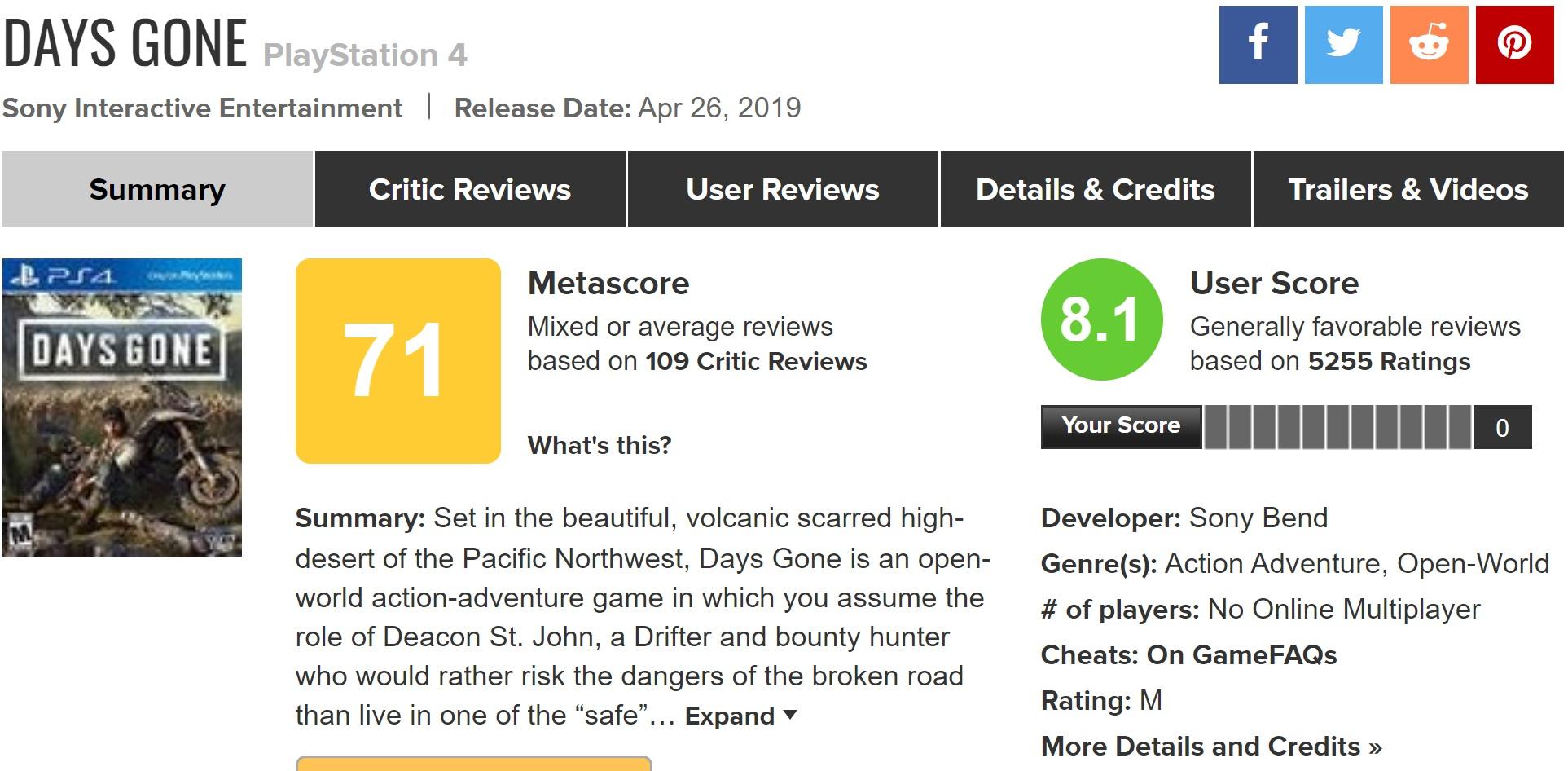

But it looked really cool, so I ended up getting it probably 3 weeks to a month after release. Apparently they'd fixed the bugs by then because I had no issues. The game was, IMHO, much better than the reviews made it out to be. Sure, it's a certain type of story. Show me a game based on a (basically) zombie apocalypse with the modern world gone that's centered on "woke" principles and storyline and I'll show you one LOUSY game that shouldn't have been made! lol. So I really liked it.

Look, I think there's room in games to tell all kinds of stories. When a game isn't centered on a specific character and type of character, I want and expect to be able to customize my character, make it male or female or look like whatever I want. If the game IS centered on a specific character and type, like say The Last of Us was... that's fine too. I'm not going to ding TLOU for having a white dude in it, and I wouldn't rip that game or a similar one for having a character of any other race at the center. That's all good. Same thing with other aspects that people deem "woke." When things are central to the story, all good, and as I said, there's a LOT of room for storytelling in games.

What I DO object to is when such things are shoveled into a game just "because it's time" or "because it's 2020 now." If there's not a reason central to the story, game or character, I don't care how many women the main character bangs. Likewise if the main character was gay or anything else. We're seeing this shoveling in a lot of movies right now. Instead of making a good, strong, balanced female character and making it about that, the movie creators make an unrealistic caricature of a woman, and not only that, they have to make all the male characters useless just to show even more how awesome the female character is. (See: The Last Jedi as one good example of this.) Also, isn't it insulting that to make a strong woman character they so often make the woman act MALE? She's twice as ass-kicking and beer-chugging as her male colleagues, just to show how strong she is. Can't a woman be strong without being "butch"? Same for men... can't men be sensitive, caring or idealistic without acting feminine? Now, of course there's a place for masculine women and feminine men as well, but it just seems that things are out of balance.

The other problem is making things "woke" by breaking the world lore and story. Doctor Who is a great example of this. Throughout the history of the show and books there were male and female Time Lords. It was even joked about that a gender transition in a regeneration would be a disaster... rumored to have happened but doubtless untrue. Then, "because it's time," they broke all that lore and made the new Doctor regenerate as a female. Wouldn't have bothered me if that had been a part of the show and story's lore, etc., but it wasn't. They shoehorned it in and then told everyone that if they didn't like it, it was because they were misogynists.

Anyway, enough of that. Bottom line, I agree when it comes to games. There's lots of room to tell lots of stories and to keep characters diverse as well. Everyone should get to play at least some games as a character that resembles themselves or whatever image they choose. I just think it's the wrong thing to do to try to force or shoehorn things into those stories in the name of being "woke." It doesn't feel legitimate, and I think it is frankly insulting.