LectureMaster

Has Man Musk

I guess this will be essential for multi frame gen.

In competitive games, a few milliseconds of input lag can mean the difference between victory and defeat.

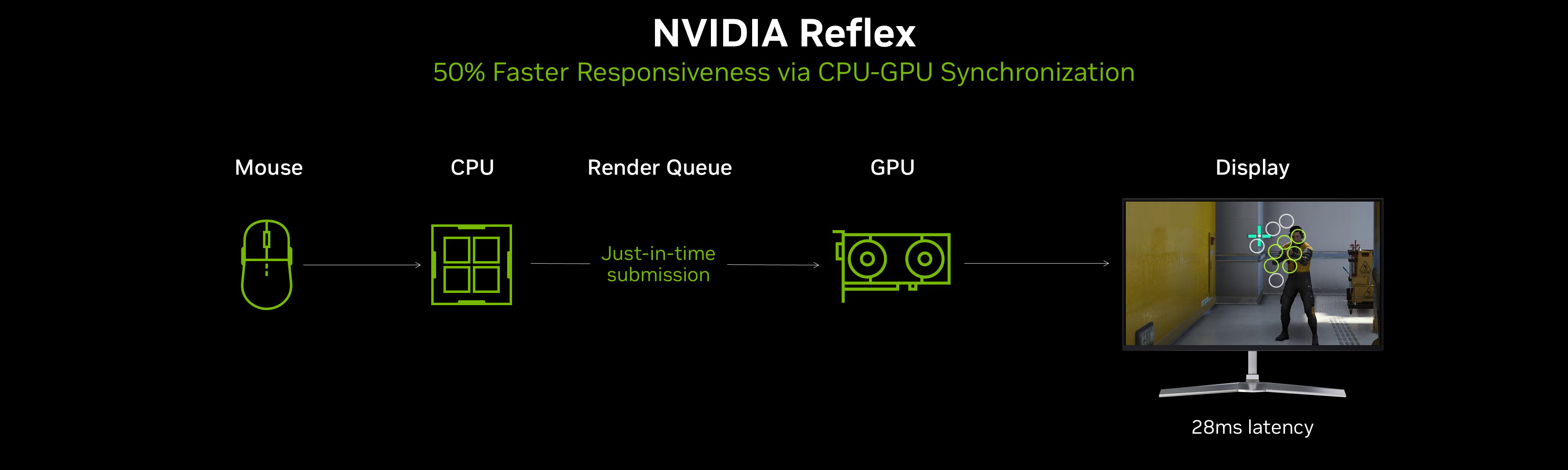

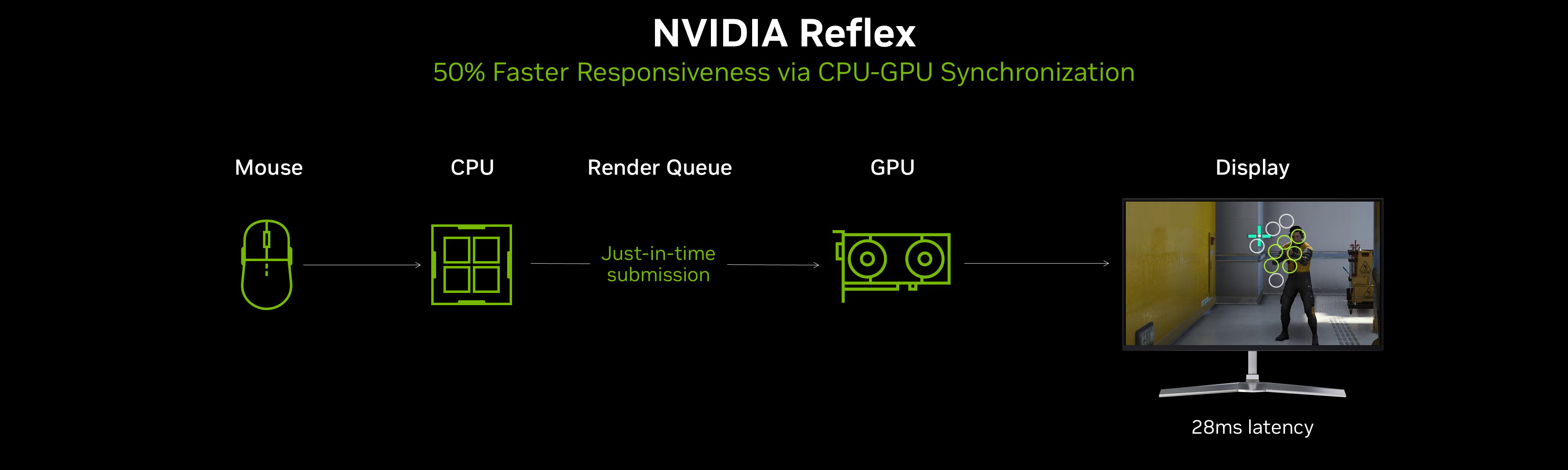

In 2020, we released NVIDIA Reflex, an innovative technology that reduces PC latency in top competitive games by an average of 50%. NVIDIA Reflex accomplishes this by synchronizing CPU and GPU work, so player actions are reflected in-game more quickly, giving gamers a competitive edge in multiplayer games and making single-player titles more responsive.

In the last four years, NVIDIA Reflex has been integrated into over 100 games and has reduced latency for tens of millions of GeForce gamers. Over 90% of gamers turn Reflex on, allowing them to experience better responsiveness, aim more accurately, and win more games.

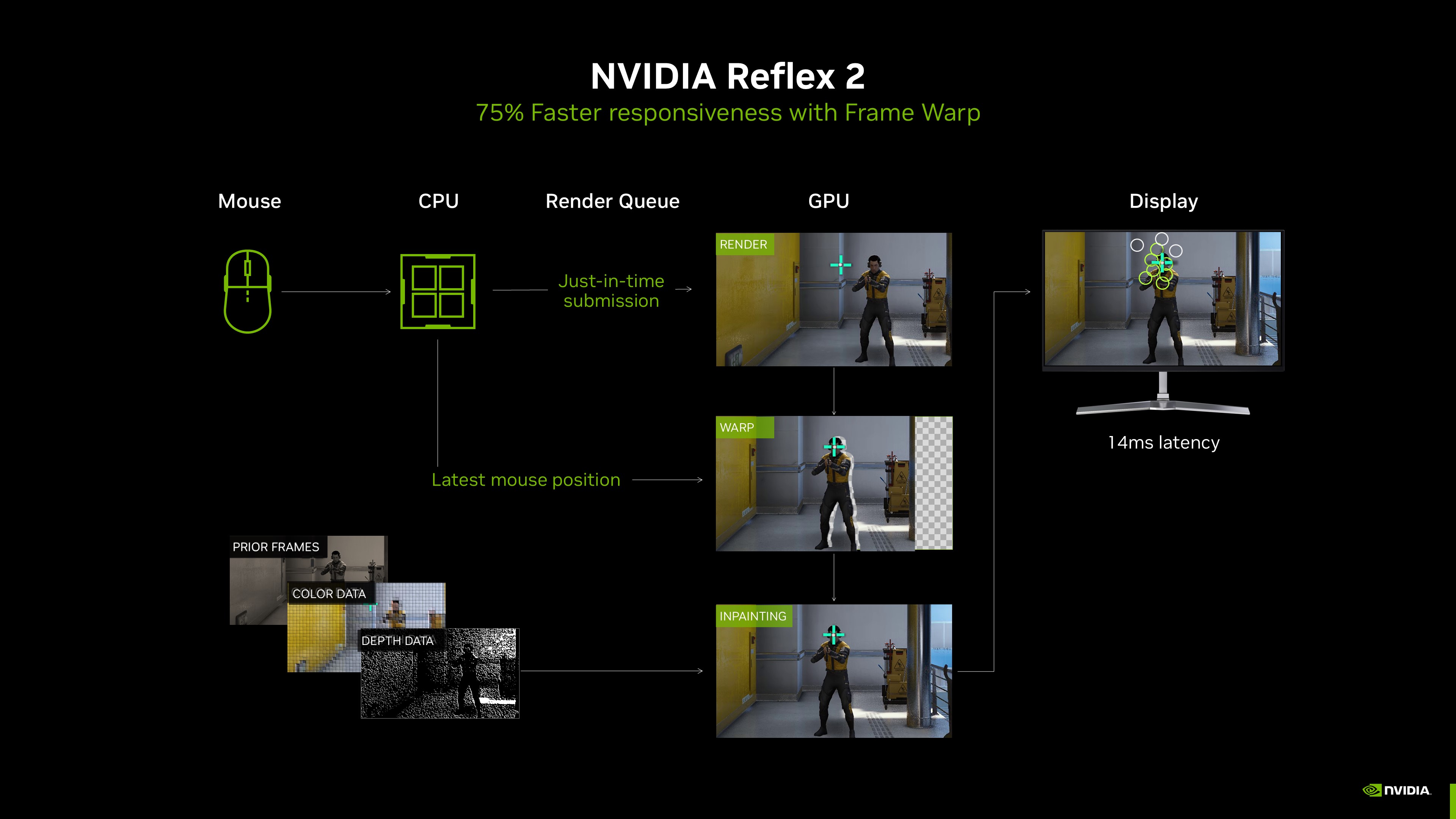

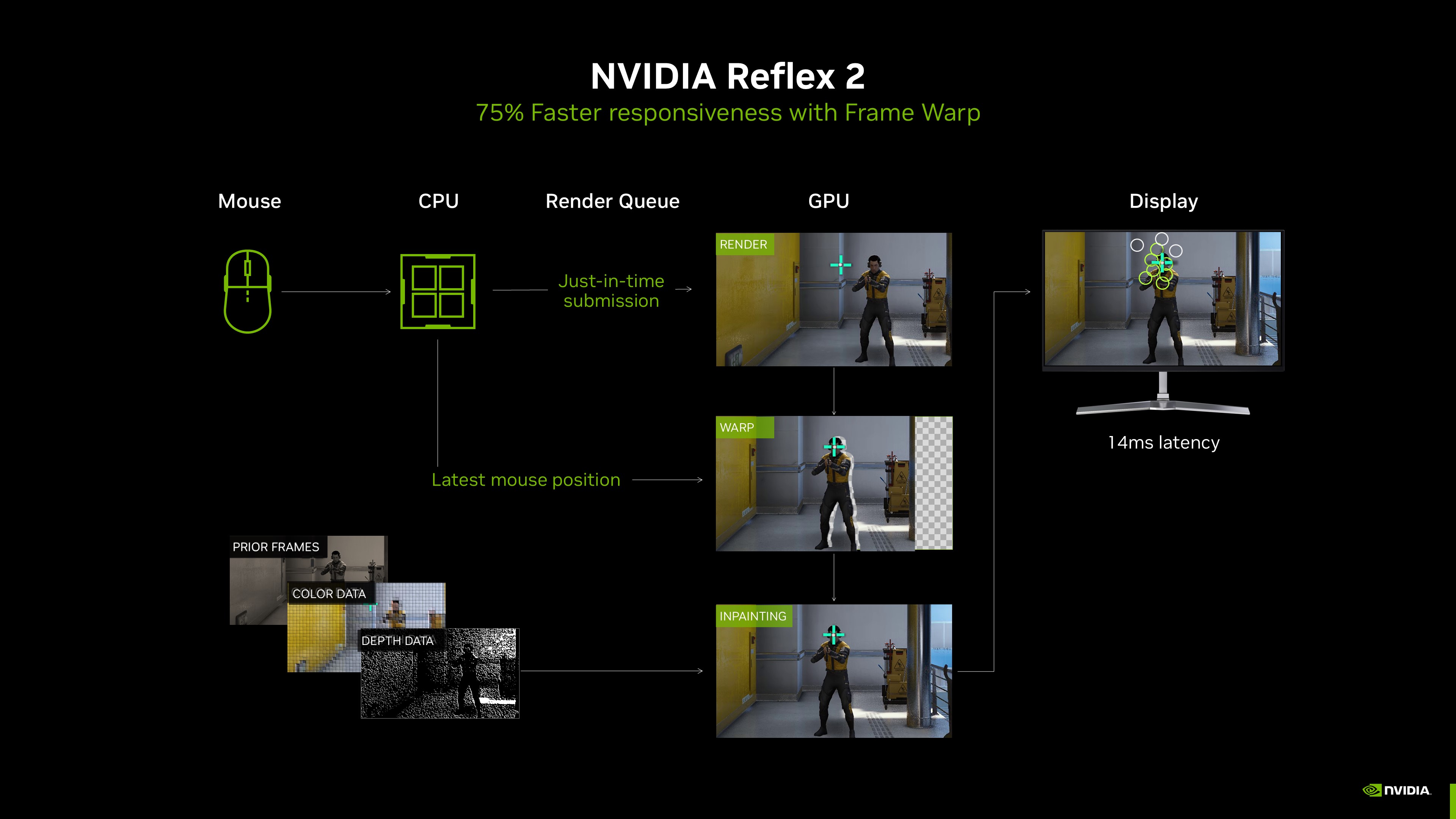

At CES 2025, we're unveiling NVIDIA Reflex 2, which can reduce PC latency by up to 75%. Reflex 2 combines Reflex Low Latency mode with a new Frame Warp technology, further reducing latency by updating the rendered game frame based on the latest mouse input right before it is sent to the display.

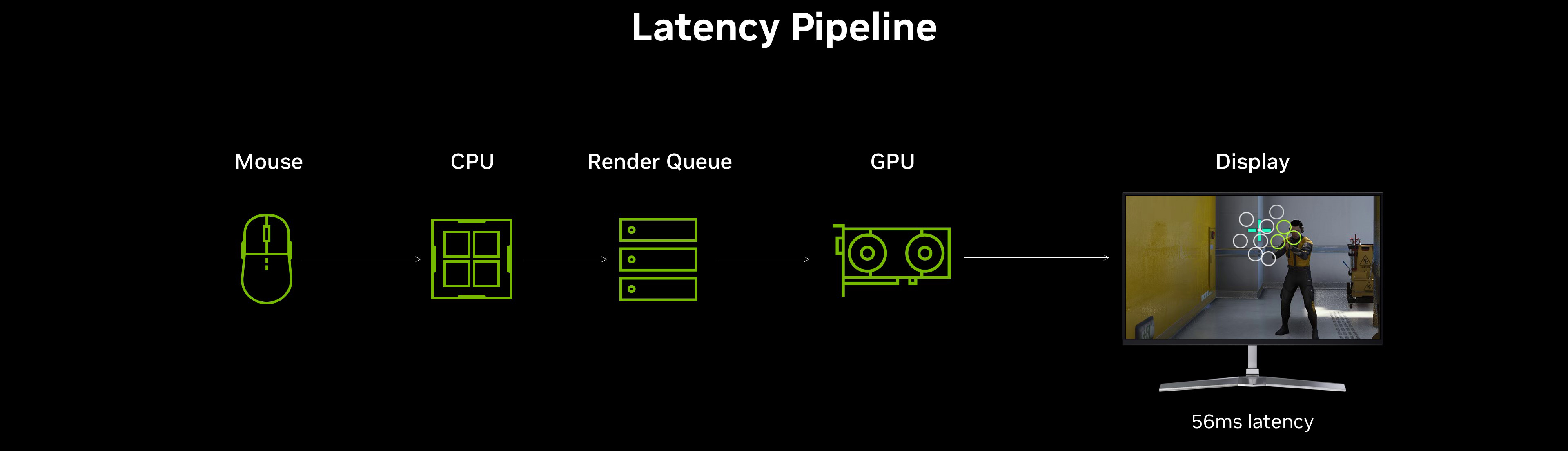

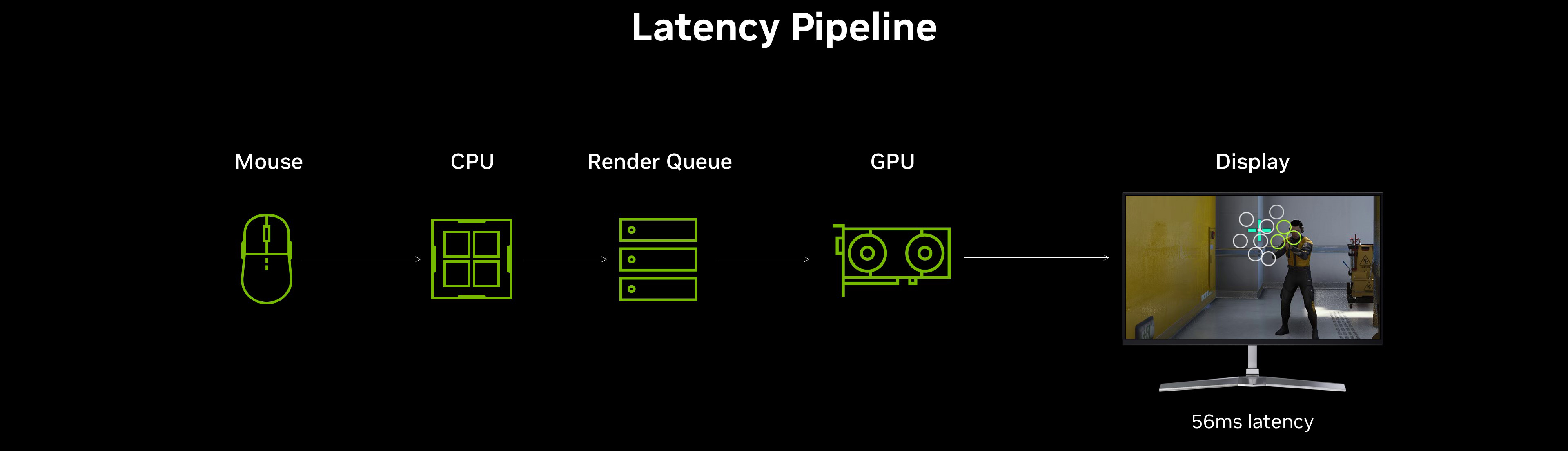

The full path from mouse or keyboard input, through CPU and GPU work, to the display typically takes tens of milliseconds for each frame, though stalls and other delays can add latency, making the game feel unresponsive.

With the launch of Reflex, we set out to optimize the latency pipeline from mouse to display via an SDK integrated directly into the game engine. Reflex better paces the CPU, preventing it from running ahead, and allowing it to submit tasks to the GPU just in time for the GPU to start work, effectively eliminating the render queue. And by starting the CPU work later, mouse inputs can be sampled closer to when a frame is being submitted to the GPU, further improving responsiveness.
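
To make the render-queue point concrete, here is a toy latency model in Python. It is a simplified sketch, not how the Reflex SDK measures or controls latency: the function name, the made-up CPU/GPU times, and the assumption that each queued frame adds one full GPU frame time of delay are ours, and display scanout is ignored.

```python
def input_to_gpu_done_ms(cpu_ms: float, gpu_ms: float, queued_frames: int) -> float:
    """Toy estimate of latency from input sampling to the GPU finishing a frame.

    Hypothetical model: input is sampled when the CPU starts a frame, the frame
    then waits behind `queued_frames` earlier frames (each costing one GPU frame
    time), and finally takes `gpu_ms` to render. Display scanout is ignored.
    """
    if cpu_ms < 0 or gpu_ms < 0 or queued_frames < 0:
        raise ValueError("times and queue depth must be non-negative")
    return cpu_ms + queued_frames * gpu_ms + gpu_ms


# GPU-bound example with made-up numbers: 5 ms of CPU work, 10 ms of GPU work.
# Without pacing, the CPU runs ahead and keeps two frames queued.
unpaced = input_to_gpu_done_ms(cpu_ms=5.0, gpu_ms=10.0, queued_frames=2)
# With just-in-time submission, the queue is empty when the frame arrives.
paced = input_to_gpu_done_ms(cpu_ms=5.0, gpu_ms=10.0, queued_frames=0)
print(f"unpaced: {unpaced} ms, paced: {paced} ms")  # unpaced: 35.0 ms, paced: 15.0 ms
```

In this model the only thing pacing changes is `queued_frames`, which is why removing the queue (and sampling input later) is where the savings come from.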

With Reflex 2, we've introduced a different approach to reducing latency. Four years ago, NVIDIA's esports research team published a study illustrating how players could complete aiming tasks faster when frames are updated after being rendered, based on even more recent mouse input. In the experiment, game frames were updated to compensate for 80 milliseconds (ms) of added latency, which resulted in players completing an aiming target test 30% faster.

When a player aims to the right with the mouse, for example, it would normally take some time for that action to be received, and for the new camera perspective to be rendered and eventually displayed. What if instead, an existing frame could be shifted or warped to the right to show the result much sooner?

Reflex 2 Frame Warp takes this concept from research to reality. As a frame is being rendered by the GPU, the CPU calculates the camera position of the next frame in the pipeline, based on the latest mouse or controller input. Frame Warp samples the new camera position from the CPU, and warps the frame just rendered by the GPU to this newer camera position. The warp is conducted as late as possible, just before the rendered frame is sent to the display, ensuring the most recent mouse input is reflected on screen.
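
As a rough illustration of the warp step, the Python sketch below treats a small camera yaw as a uniform horizontal pixel shift. This is a deliberate simplification with hypothetical function names: a true rotational reprojection is not a pure shift away from the image center, and the article does not describe how Frame Warp is actually implemented. Positions with no source pixel are marked `None`.

```python
import math
from typing import List, Optional

Frame = List[List[Optional[int]]]  # rows of pixel values; None marks a hole


def yaw_to_pixel_shift(yaw_delta_deg: float, width: int, hfov_deg: float) -> int:
    """Pixels the image center moves for a camera yaw, assuming a pinhole camera.

    Focal length in pixels is f = (width / 2) / tan(hfov / 2); a yaw of d
    moves the center by f * tan(d). Positive yaw means turning right.
    """
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return round(f * math.tan(math.radians(yaw_delta_deg)))


def warp_horizontal(frame: Frame, shift: int) -> Frame:
    """Move every row's content left by `shift` pixels (a right turn).

    A negative shift moves content right (a left turn). Columns with no
    source pixel become holes (None) that must be filled afterwards.
    """
    warped: Frame = []
    for row in frame:
        width = len(row)
        new_row: List[Optional[int]] = [None] * width
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                new_row[x] = row[src]
        warped.append(new_row)
    return warped


# A 1-degree right turn on a 1920-pixel-wide view with a 90-degree horizontal FOV:
print(yaw_to_pixel_shift(1.0, 1920, 90.0))  # 17
print(warp_horizontal([[1, 2, 3, 4]], 1))   # [[2, 3, 4, None]]
```

The `None` at the right edge is exactly the kind of hole the next step has to fill: the camera turned toward a part of the scene that was never rendered.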

When Frame Warp shifts the game pixels, small holes in the image are created where the change in camera position reveals new parts of the game scene. Through our research, NVIDIA has developed a latency-optimized predictive rendering algorithm that uses camera, color and depth data from prior frames to in-paint these holes accurately. Players see the rendered frame with an updated camera perspective and without holes, reducing latency for any actions that shift the in-game camera. This helps players aim better, track enemies more precisely, and hit more shots.
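
NVIDIA's in-painting is a predictive rendering algorithm whose details are not given here. As a much simpler stand-in, the sketch below fills each hole from its nearest valid neighbors in the same row. It uses depth to prefer the farther neighbor, following the common reprojection heuristic that newly revealed regions usually belong to the background. The pixel representation and function name are hypothetical.

```python
from typing import List, Optional, Tuple

# A pixel is (color, depth) or None for a hole; larger depth means farther away.
Pixel = Optional[Tuple[int, float]]


def fill_row_holes(row: List[Pixel]) -> List[Pixel]:
    """Fill holes with the farther of the nearest valid left/right neighbors.

    Only original (non-hole) pixels are used as sources, so the result does
    not depend on fill order. A row with no valid pixels is returned as-is.
    """
    n = len(row)
    out = list(row)
    for x in range(n):
        if row[x] is not None:
            continue
        left = next((row[i] for i in range(x - 1, -1, -1) if row[i] is not None), None)
        right = next((row[i] for i in range(x + 1, n) if row[i] is not None), None)
        candidates = [p for p in (left, right) if p is not None]
        if candidates:
            # Disoccluded pixels are assumed to be background, so pick max depth.
            out[x] = max(candidates, key=lambda p: p[1])
    return out


# The hole sits between a near object (depth 1.0) and the far background (5.0).
print(fill_row_holes([(10, 1.0), None, (20, 5.0)]))  # [(10, 1.0), (20, 5.0), (20, 5.0)]
```

A real implementation would also draw on color and depth from prior frames, as the article describes, rather than only on neighbors in the current warped frame.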

Here's an example from Embark Studios' THE FINALS with Frame Warp, with and without in-painting:

The result is a frame that shows the freshest camera position, seamlessly inserted into the rendering pipeline.

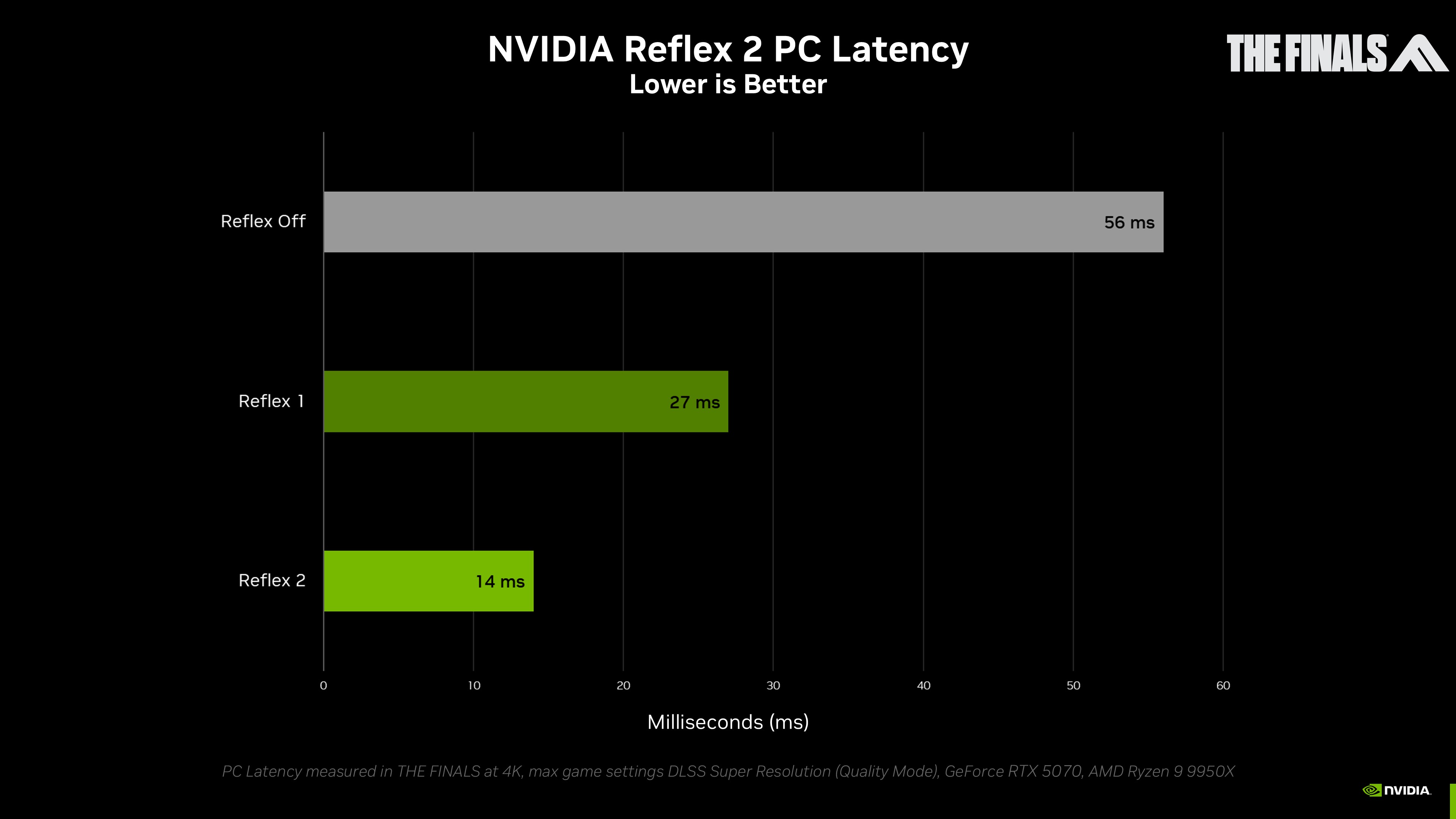

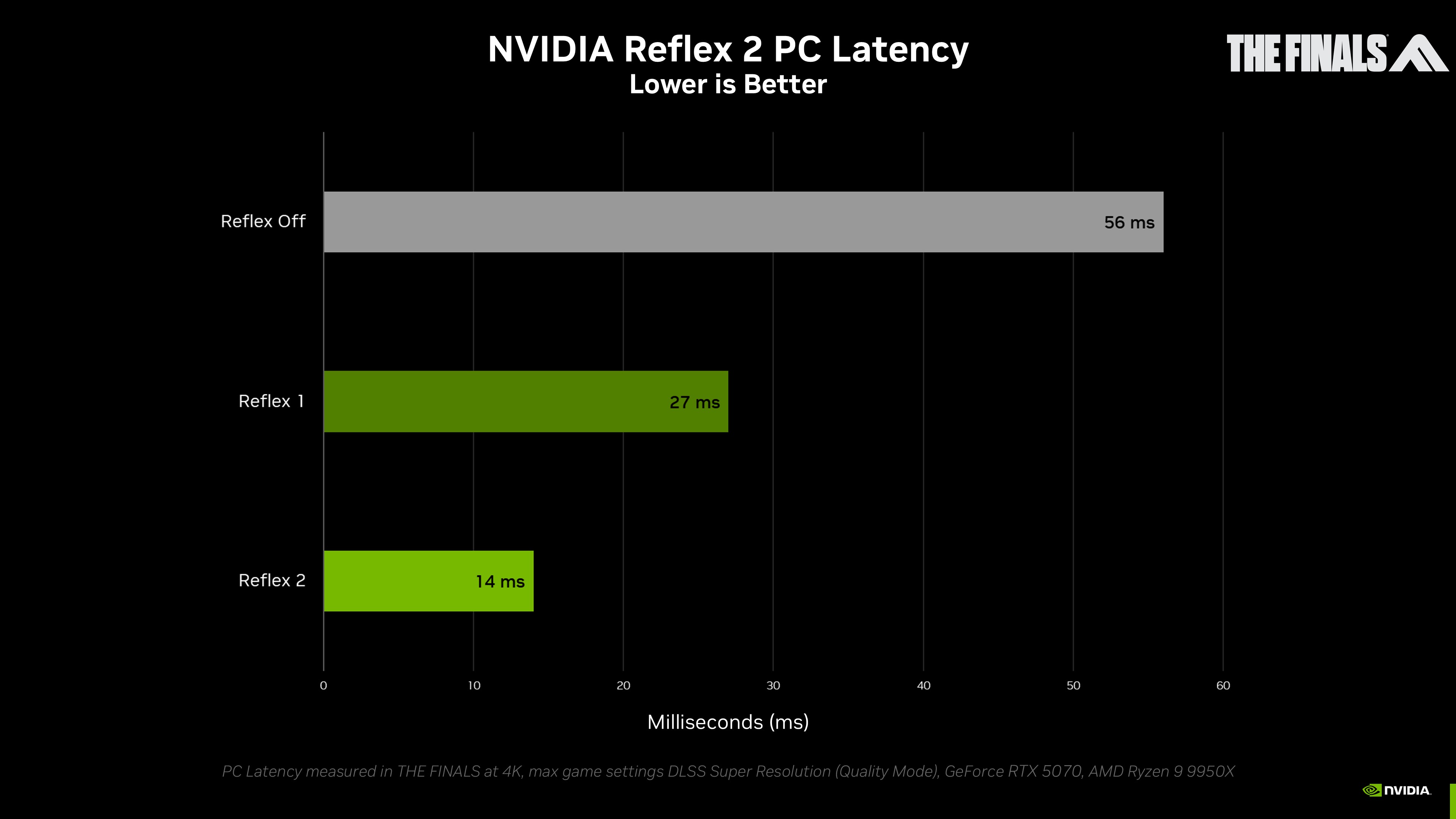

Without Reflex, PC latency in THE FINALS on a GeForce RTX 5070 is 56 ms at 4K with the highest settings. With Reflex Low Latency, latency is more than halved, to 27 ms. Enabling Reflex 2 Frame Warp then cuts input lag by nearly an entire frame time, reducing latency by roughly another 50%, to 14 ms. The result is an overall latency reduction of 75% by enabling NVIDIA Reflex 2 with Frame Warp.
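
The percentages follow directly from the three latency figures quoted for THE FINALS. The short Python check below (the function name is ours) shows that Low Latency mode cuts 56 ms to 27 ms (about 52%), Frame Warp then cuts 27 ms to 14 ms (about 48%, rounded to 50% in the text), and the overall reduction is exactly 75%.

```python
def reduction(before_ms: float, after_ms: float) -> float:
    """Fraction of latency removed when going from before_ms to after_ms."""
    if before_ms <= 0:
        raise ValueError("before_ms must be positive")
    return 1.0 - after_ms / before_ms


# PC latency in THE FINALS on a GeForce RTX 5070, 4K, highest settings (figures from the article).
no_reflex, low_latency, reflex_2 = 56.0, 27.0, 14.0

print(f"{reduction(no_reflex, low_latency):.0%}")  # 52%  Reflex Low Latency vs. off
print(f"{reduction(low_latency, reflex_2):.0%}")   # 48%  Frame Warp on top of Low Latency
print(f"{reduction(no_reflex, reflex_2):.0%}")     # 75%  overall
```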

Reflex Low Latency mode is most effective when a PC is GPU-bottlenecked, but Reflex 2 with Frame Warp provides significant savings in both CPU- and GPU-bottlenecked scenarios. In Riot Games' VALORANT, a CPU-bottlenecked game that runs blazingly fast at 800+ FPS on the new GeForce RTX 5090, PC latency averages under 3 ms with Reflex 2 Frame Warp, one of the lowest latency figures we've measured in a first-person shooter.
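
For a sense of scale, frame time is simply 1000 divided by the frame rate. At the 800 FPS quoted for VALORANT, each frame takes 1.25 ms, so 3 ms corresponds to 2.4 frame times. A minimal Python check (function name ours):

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


ft = frame_time_ms(800)  # frame rate quoted for VALORANT on a GeForce RTX 5090
print(ft)        # 1.25
print(3.0 / ft)  # 2.4  (how many frame times fit in 3 ms)
```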

NVIDIA Reflex 2 is coming soon to THE FINALS and VALORANT, and will debut first on GeForce RTX 50 Series GPUs, with support added for other GeForce RTX GPUs in a future update.

NVIDIA Reflex 2 With New Frame Warp Technology Reduces Latency In Games By Up To 75%

Innovative new technology improves responsiveness by updating rendered frames based on latest mouse input.

www.nvidia.com

By Andrew Burnes and Nyle Usmani on January 06, 2025

NVIDIA Reflex 2 Explained

Every player action taken in a video game goes through a complex pipeline before being rendered on-screen, with each step introducing latency. Inputs from your keyboard and mouse are passed to the game, where their effects are calculated by the CPU. The results are placed in a render queue, which is passed to the GPU for rendering, before finally being output to the display. This process typically executes in tens of milliseconds for each frame, though stalls and other delays can add latency, making the game feel unresponsive.
