HeisenbergFX4

Member

Get that 8GB card and try running higher settings and see what happens with today's games.

Hardware threads on GAF. Why do I even bother? Might as well tape 16GB to a worm and GAF will still love it.


> Sadly they downgraded the chip of the xx60; it's now using 107 instead of 106.

They did that to all the cards except the 4090, while also raising the prices. The joke writes itself.


> Currently the best option seems to be the discounted RX 6800. The 4060 Ti 16GB vs. RX 6800 will be an interesting one at the $500 mark, I feel. Not to mention the possible RX 7700 XT, which should be revealed in the next few months.

If the RX 7700 XT uses Navi 33, then the RX 6800 will definitely still be a lot faster.

An 8GB 4060 is the 3GB 1060 all over again. Just stay away if you don't want to regret it a little later. An 8GB Ti is just puzzling: who is this card really for? It shouldn't exist, as it makes no sense. I imagine it's just there to fill the price gap.

> If the RX 7700 XT uses Navi 33, then the RX 6800 will definitely still be a lot faster.
>
> Though if it uses a cut-down Navi 32 with 6 channels (12GB VRAM), then it might be a bit better.

Both the 7700 XT and the 7800 XT use Navi 32, but AMD is gonna wait for the RDNA 2 cards to sell before launching them on the market.

> I bought a 4070 recently, did I get scammed? LOL

The 4070 is massively better than that 8GB 4060 Ti, not to mention it has 4 more gigs of VRAM.

> This is barely better than a 3060 and you all think this is a great deal. It would be a good deal if it had the usual performance uplift from last gen, but it's, what, a 15% uplift? Are you freakin' kidding me? You can talk about frame generation all you want, but that doesn't count when you still have the same lag (even more so, actually).
>
> Nvidia suckered you... and here I go back to gaming on my 4090!

Not everyone has 3 grand to spend on a GPU! Yeah, that's how much it costs here in Canada.


> An RTX 3060 is $3k in Canada? I sure do feel sorry for you.

Nope, the 4090. The lowest price is $2,100 plus taxes.

In the Dominican Republic it costs around 2,300 to 2,400.

Jesus Christ. I'm not defending 8GB. VRAM isn't the only thing GPUs need for performance. How the fuck is everyone so oblivious? If you slap 24GB of VRAM on a 970, it'll still be shit.

Well, exactly. Plus tax, here it's around $2,500.

An 8GB 4060 will be shit down the road, in a year or two.

> - RTX 4060 = $299
> - RTX 4060 Ti (8GB) = $399
> - RTX 4060 Ti (16GB) = $499
>
> The RTX 4060 Ti (16GB) is the only card that's somewhat future-proof, of course.

Considering I paid 500 euros for a 3060 during the crypto/COVID boom (yes, I'm an idiot), that's actually pretty damn good.

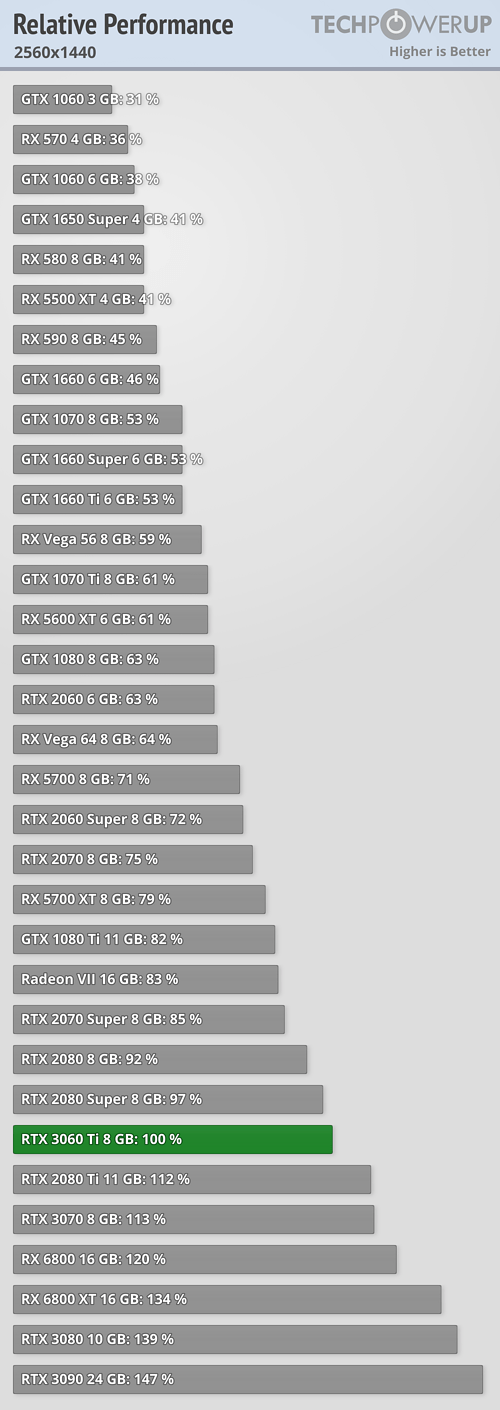

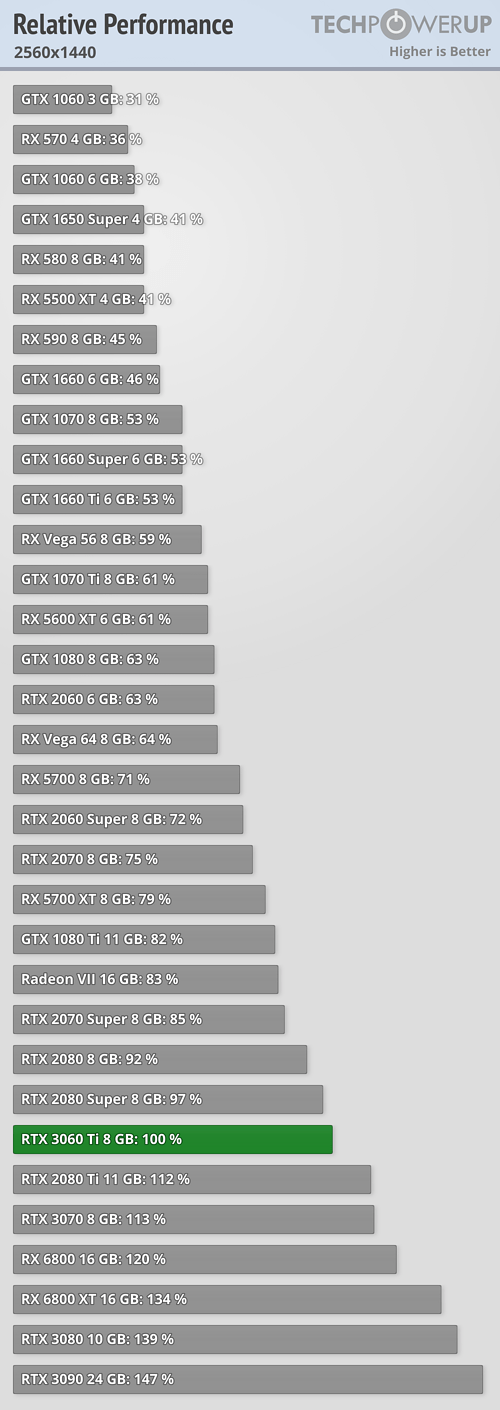

> The xx60 on Pascal and Turing was as powerful as the xx80 card from the previous gen. When we got to Ampere, the 3060 was only as powerful as the 2070. The leaked Geekbench 5 CUDA score for the 4060 Ti is 146,170. The 3060 Ti scores 130,000-140,000, and the 3070 is ~150,000. Obviously we'll have to wait for reviews, but in this test the 4060 Ti isn't even hitting 3070 level, let alone the 4060.
>
> If that ends up being reflected in benchmarks, then we went from the xx60 being as powerful as the previous gen's xx80, to the xx60 being less powerful than the previous gen's xx70, and possibly even its xx60 Ti. Don't be fooled by the naming convention and price point: this is a 4050 renamed to a 4060.
>
> This isn't even getting into the whole VRAM deal. The 8GB 4060 Ti is a definite no-go. If you're cool with medium-high settings and want a new card with a warranty, then the 4060 might be worth considering. My take is that this is more trash from Nvidia.

Nvidia's official slides say it's 15% faster than the 3060 Ti, which would put it almost exactly on par with the 3070. I assume it will have some performance regressions and also a few titles where it does really well, like the 4070 vs. the 3080.
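The Geekbench comparison above is just ratio arithmetic. Here's a quick Python sketch that turns the scores quoted in the thread into percentage differences. The inputs are the leaked/approximate figures from the post, not verified numbers, and the `relative` helper is a hypothetical name used only for illustration.

```python
# Geekbench 5 CUDA scores as quoted in the thread. The 4060 Ti number is a leak
# and the others are rough ranges, so treat the output as ballpark only.
LEAKED_4060_TI = 146_170
REFERENCE_SCORES = {
    "RTX 3060 Ti (low end of range)": 130_000,
    "RTX 3060 Ti (high end of range)": 140_000,
    "RTX 3070 (approx.)": 150_000,
}


def relative(score: float, baseline: float) -> float:
    """Percentage difference of `score` versus `baseline` (positive = faster)."""
    return (score / baseline - 1.0) * 100.0


if __name__ == "__main__":
    # Compare the leaked 4060 Ti score against each reference card.
    for name, baseline in REFERENCE_SCORES.items():
        print(f"4060 Ti leak vs {name}: {relative(LEAKED_4060_TI, baseline):+.1f}%")
```

Run as-is, it prints +12.4%, +4.4% and -2.6%. In other words, the leak sits above the 3060 Ti range but a few percent short of the ~150,000 3070 figure, which is the gap the quoted post is describing.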

> I feel like I chose a real shit time to be thinking about buying a new GPU.

A bad time to buy a GPU was the pandemic era, when GPUs were selling for 2-3x their MSRP.

If you ignore the 8GB 4060 Ti variant, there's no fucking way $200 justifies the difference between the RTX 4060 and RTX 4060 Ti 16GB variant.

But this is Nvidia this generation, running wild with pricing. I mean, ffs, there's a $200 difference between the RTX 4070 and RTX 4070 Ti, and then there's a $400 gap between the RTX 4070 Ti and the RTX 4080. So I guess I'm not sure why I'm so surprised that pricing on the lower end is sucky as well.

Edit:

For reference:

- There was a $300 difference between the GTX 560 and GTX 580.

- There was a $270 difference between the GTX 660 and GTX 680.

- There was a $400 difference between the GTX 760 and GTX 780.

- There was a $350 difference between the GTX 960 and GTX 980.

- There was a $400 difference between the GTX 1060 and GTX 1080.

- There was a $350 difference between the RTX 2060 and RTX 2080.

- There was a $370 difference between the RTX 3060 and RTX 3080.

And somehow now there is a $900 difference between the RTX 4060 and the RTX 4080. Makes perfect sense.
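To put the list above in numbers, here's a rough Python sketch that averages the xx60-to-xx80 gaps exactly as listed in the post (forum figures, not re-checked against official MSRPs) and compares the $900 Ada gap against that average. The `gap_ratio` helper is a hypothetical name for illustration only.

```python
from statistics import mean

# xx60 -> xx80 launch price gaps in USD, as listed in the post.
HISTORICAL_GAPS = {
    "GTX 560 -> GTX 580": 300,
    "GTX 660 -> GTX 680": 270,
    "GTX 760 -> GTX 780": 400,
    "GTX 960 -> GTX 980": 350,
    "GTX 1060 -> GTX 1080": 400,
    "RTX 2060 -> RTX 2080": 350,
    "RTX 3060 -> RTX 3080": 370,
}
ADA_GAP = 900  # RTX 4060 -> RTX 4080, per the same post


def gap_ratio(current: float, history: dict) -> float:
    """How many times larger `current` is than the average historical gap."""
    return current / mean(history.values())


if __name__ == "__main__":
    avg = mean(HISTORICAL_GAPS.values())
    print(f"Average xx60 -> xx80 gap, GTX 500 to RTX 30: ${avg:.0f}")
    print(f"Largest earlier gap: ${max(HISTORICAL_GAPS.values())}")
    print(f"RTX 4060 -> RTX 4080 gap: ${ADA_GAP} "
          f"({gap_ratio(ADA_GAP, HISTORICAL_GAPS):.2f}x the average)")
```

With those inputs the average earlier gap comes out to about $349, so the $900 gap is roughly 2.58x that, and more than double even the largest earlier gap of $400.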

> Do any of you all think Nvidia will ever release a 16GB VRAM 4070?

Probably not.

Whoever was hoping for RTX 3080 performance for <$500 is in for some disappointment. These cards don't even reach RTX 3070 performance numbers.


I don't see how there were any of those people left. The RTX 3080-equivalent part in the new series is the 4070 at $600. The 4060 line had to be a step down from that.

There's no people left... who saw 3 generations of new 60 series cards getting the same performance as the previous 80 series but at a much lower starting price?

Your argument is that this expectation somehow didn't exist?

> Jesus Christ. I'm not defending 8GB. VRAM isn't the only thing GPUs need for performance. How the fuck is everyone so oblivious? If you slap 24GB of VRAM on a 970, it'll still be shit.

You're trying to spread the bullshit that slower GPUs can't make use of more RAM, which is completely at odds with modern rendering.

> Friendly reminder that Lisa Su (CEO of AMD)'s grandfather is Jensen Huang (CEO of NVIDIA)'s uncle.
>
> Fact-Check: Is NVIDIA CEO an 'Uncle' of AMD's CEO Dr. Lisa Su? (www.techtimes.com)
>
> As the Radeon RX 6800 XT goes head-to-head with GeForce RTX 3080, it's hard to believe that the leaders of these 2 companies are actually related!

What's the point behind reminding people about this?

I posted it on another thread, and it was news to someone there.

You know, I've thought about that for a while now... It's like AMD always seems to be steps behind Nvidia, even when Nvidia messes up badly: if Nvidia takes some steps back, AMD takes the same number of steps back, or never takes full advantage of its position...

Personally, I think collusion would explain some of the seeming inability/unwillingness of AMD to undercut NVIDIA and gain market share with a banger card for cheapish. Nothing's proven, of course.


> Might finally upgrade my 1070.
>
> 6700k worries me though.

I'm still on a 6700K but have a 3060 Ti. The CPU is definitely showing its age. I have all new parts except the mobo + CPU, and at this point I'm waiting 'til Meteor Lake or even Arrow Lake. I will probably just grab a 5060 Ti or 5070 at that point as well. Nvidia shit the bed with the 40xx series, minus the 80s and 90s.

> It is now: even on a 3050 you cannot use console textures in newer games. For example, The Last of Us looks a bit like a cartoon, and in Hogwarts Legacy you cannot use RT shadows because it runs out of VRAM. 8GB is not enough for console textures in the Resident Evil remasters or the likes of Dead Space. 8GB is legacy now, even at the low end.

You should maybe go look at what the last patch for TLOU did for VRAM use. 8GB didn't seem to be the problem, otherwise how did a software patch fix it? Using shitty unpatched ports is like saying consoles are gonna be 720p in next-gen games because Jedi Survivor drops that low.


So is this good or bad lol

I see some people at each other's throats over 8GB RAM.

The key point is that current-gen consoles have about 11GB to work with. So any current-gen-only PC ports will likely require similar (if not more) VRAM to get comparable texture quality and performance. If a port is well optimized (an endangered species at this point) you might see 8GB VRAM still being adequate, but in most cases you would see either (1) loads of hitching/stutter or (2) lower texture quality on 8GB VRAM cards vs. the console editions.

People buying 8GB cards in 2023 are basically hoping that PC ports get much better, or they are content to play older titles (including any cross-gen PC ports), or they are not aware of the issues.