They fucking better.

But knowing Nintendo, they'll do something wrong.

Touch-only controls is my guess.

They would do it wrong. They would make it 720p or some shit.

Cool, so it would be higher res than a lot of PS360 games.

I can't really go by the Zelda tech demo since everything is staged and the only thing you can control is the camera.

Yeah, and you could change the lighting on the main screen in realtime, as well as send a full render with camera control to the tablet. All on crappy early devkit hardware.

These rumors would support the "butt load" of edram comments some have made. I assume they meant megabits and not megabytes, because the latter would be impossible. So between 96 and 128 MB of teh fast ramz.

The floor demo was an improved build and yes, I think it overall looked better than any current console game.

The lighting in the Zelda demo was superior to anything in current consoles as well. That at least is somewhat backable since it does use effects to a degree current consoles don't.

First off, you are comparing a technical demo to an actual game. In a closed environment both PS3 and 360 could render technical demos just like that.

Second off, there ARE actual games that look more impressive than those demos. Compared to Uncharted 3 or God of War 3, actual games, these demos feel pretty mediocre, visual design aside.

I love the fact you use "butt load" as a quote, as if IBM/Nintendo said it themselves

Even if the screen had a higher pixel density? I guess I am just selfish. I don't care if the other people playing have a smaller screen, I just don't want to have to pay more than 50 bucks for a controller that would only get used when I have friends over. You couldn't see it working? Imagine it like the size of an iPhone screen.

It's not like all screens are the same cost by the inch, though. Go with a higher-density smaller one and you're talking more about size reduction than cost reduction.

Why would you want that much eDRAM on the CPU? Wouldn't it make more sense on the GPU for post-processing/AA etc., where you need the bandwidth?

Shared... is shared.

People are really questioning the Wii U Zelda demo's visual quality compared to the 360/PS3? Maybe in geometry and textures, sure, but you'll be hard-pressed to find a single 360/PS3 game with such nice, accurate global illumination and what appears to be some decent radiosity.

Well, I can't really argue against someone saying that the HD Twins can replicate that now in a similar demo.

Embedded... is embedded. You embed on the die for speed. The rumour suggested a separate GPU. If the GPU is a different chip to the CPU then you don't get the bandwidth benefits of eDRAM for the GPU. And usually that's where you want the bandwidth - see Xbox 360 for instance.

Either the rumour means 768MB, but it's mistaken about embedded and means unified instead.

Or it means 768Mb and it does mean embedded, but it might be mistaken about it being embedded on the CPU, and it's on the GPU instead?

768 MB of DRAM embedded with the CPU, and shared between CPU and GPU

IBM introduced the following technology in 2007:

High K

eDRAM

3D Stacking (TSV)

Air Gap

A combination of these could explain this rumor.

You mean they didn't?

No.

768MB is too much. Even IBM's own material is saying Mb, and that's quite a commonly misread thing, so if it's embedded, 768Mb (96MB) is much more likely the correct amount. 3D stacking gets you some efficiency, but not that much.

And I still think it'd be wasted on the CPU. Well, not wasted, but not as useful as if it was on the GPU - 96MB is enough for a nice frame buffer.
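To make the Mb vs MB point concrete, here is a minimal Python sketch of the conversion. The function name is made up for illustration; the only thing it relies on is 8 bits per byte.

```python
def megabits_to_megabytes(megabits):
    """Convert megabits (Mb) to megabytes (MB), using 8 bits per byte."""
    return megabits / 8


# 768 Mb is the "misread" reading of the rumour; 1024 Mb is 1 Gb.
for megabits in (768, 1024):
    print(f"{megabits} Mb = {megabits_to_megabytes(megabits):.0f} MB")
```

That works out to 96 MB for 768 Mb and 128 MB for 1 Gb, so a rumour that says "768" or "1G" changes by a factor of eight depending on whether the b is upper or lower case.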

Maybe they can, but I haven't seen it. Geometry and texture work isn't anything overly amazing, but the lighting engine in that tech demo is fantastic at that framerate.

Whether or not they can get lighting and shadows that clean and accurate in a full game is impossible to say, but if they can it should look stunning.

I was simply referring to the sharing between cpu and gpu.

That it could be done as a 3D stack.

Not necessarily the amount of the edram.

And where does IBM say Mb vs MB?

Technically, this is all IBM have officially said on the subject:

http://www-03.ibm.com/press/us/en/pressrelease/34683.wss

http://www-03.ibm.com/press/us/en/photo/34681.wss

Curiously enough, in the regurgitated newsbit making the rounds on the internet, the quantifier "a lot" has been used in conjunction with edram. But we have no credible source for that, AFAIK. IBM's own claim is that, compared to sram, they can fit 3x the amount of memory in the form of edram. So whereas you'd have 4-8MB of on-die L2/L3 in other designs, you could in theory have 12-24MB of similar-purpose memory in IBM's. That tells us next to nothing about how much edram Nintendo could afford to put on a separate die (a la Xenos) if they decided to.

When you compare the iPhone 3GS to the iPhone 3G you can see that Apple made a vast amount of improvements, the iPhone 3G featured a 620MHz processor (underclocked to 412MHz), 128MB eDRAM, a 1150 mAh battery, a 2 megapixel camera and either 8GB or 16GB of flash memory. A year later Apple released the 3GS, this boasted improved specs including an 800MHz processor (underclocked to 600MHz), 256MB eDRAM, a 1219 mAh battery, a 3 megapixel camera and either 8GB,16GB or 32GB of flash memory.

The iPhone 4 features Apples A4 processor which is said to run at around 1000MHz (not underclocked), 512MB eDRAM, a 1420 mAh battery, a 5 megapixel camera and

http://www-03.ibm.com/press/us/en/pressrelease/32970.wss

they say up to 1Gb, which tallies with the rumour of Nintendo testing out both 768MB and 1GB systems.

Ok, but the WiiU is a custom chip.

If we look at the iPhone:

If products like the iPhone can have up to 512MB of eDRAM, then why can't the WiiU possibly be using more than that amount?

Is eDRAM that expensive?

Calling the iPhone's mDDR/LPDDR2 'edram' is a bit disingenuous (not your fault, I know). The special thing about edram is that it sits on some very custom and very direct/short buses, normally on the same piece of silicon as the logic that makes use of said edram. By saving on the 'wiring latency' inherent to the standard memory interfaces and protocols, and by using massive-width data buses (a 1024-bit data bus is nothing special for edram), that otherwise 'ordinary' dram memory achieves latency and BW characteristics of sram (which is a conceptually different kind of memory, inherently faster and more expensive). I can assure you that nothing in the performance characteristics of the iPhone's memory would qualify it as edram as per this discussion. Basically, comparing the iPhone's PoP memory to IBM's edram macros is akin to claiming that the accessibility of a Corolla should somehow dictate the accessibility of an F1, since they're both 'cars' /yet another bad car analogy
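To put a rough number on the bus-width point, here is a small Python sketch of peak bandwidth as bus width times transfer rate. The transfer rate below is a made-up figure purely for illustration, not a spec of any real chip, and the function name is hypothetical too.

```python
def peak_bandwidth_gb_per_s(bus_width_bits, transfers_per_second):
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


# Hypothetical rate, identical for both buses so that only the width differs.
ASSUMED_TRANSFERS_PER_SECOND = 500e6

wide = peak_bandwidth_gb_per_s(1024, ASSUMED_TRANSFERS_PER_SECOND)
narrow = peak_bandwidth_gb_per_s(64, ASSUMED_TRANSFERS_PER_SECOND)

print(f"1024-bit bus: {wide:.0f} GB/s")
print(f"  64-bit bus: {narrow:.0f} GB/s")
print(f"ratio: {wide / narrow:.0f}x")
```

At the same assumed rate the 1024-bit bus moves 16 times as much data as a 64-bit one, and that is before counting the latency saved by staying on the same piece of silicon.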

Great, now people have gone back to believing that it'll be weaker than the current gen. :/

The iPhone costs WAY more than the Wii U will?

IBM/ Qimonda eDRAM is not exactly the same thing - at all. Their eDRAM is meant as a replacement for SRAM, as an extremely high bandwidth, low latency local memory pool, typically cache. We're talking hundreds of gigabytes per second, not 5GB/s or whatever the A5 does. It makes very little sense to use that technology as a replacement for regular RAM.

When you look at all of the lighting and particle effects done in real time and in 1080p, yes I would say that it's more powerful than PS3/360.

Was it really 1080p though?

http://www.youtube.com/watch?v=27Lf4uVuE50

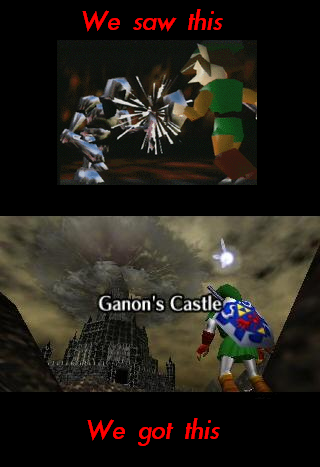

We saw this

We will see something incredible following this.

http://i.imgur.com/UPJOpl.jpg

Really, you've never seen a PS360 game that looks better than this?

And as for the bird demo AFAIK there's no direct feed footage/shots so it's probably unfair to judge it too harshly but even then it had some slowdown, had no AI, and looked like this:

http://i.imgur.com/bLNgu.jpg

Ok, you guys are right, iPhone was a bad example to use.

But let's take a look at the Gamecube, which used 24MB of 1t-sram as its main memory, right? Which normally is used as embedded ram.

While 1t-sram is indeed very fast, the 24MB in the cube/wii were not embedded - they were sitting on a 64-bit 2.7GB/s / 4GB/s external bus. The actual embedded 1t-sram was all-in-all 3MB on flipper's die (10GB/s + 7GB/s / 15GB/s + 11GB/s).

And I know the Wii used a slower traditional ram for main ram, but I recall hearing some developers barely touching that when making their games.

That traditional ram (GDDR3) was essentially Hollywood's pool.

They were content with the 24MB 1t-sram that was also available embedded on the GPU.

The 24MB die was packaged on the GPU, not embedded. It was still sitting on a similar 64-bit external bus, albeit much closer to its controller (note: the GPU is the north bridge/mem controller in the cube architecture).

So let's say that Nintendo wanted to take the same principles and designs of the GC, and wanted to make a machine capable of 1080p graphics, yet was compact, and ran green.

In other words, how would a modern version of the Gamecube look?

Architecture-wise, I consider the xb360 an indirect successor to the cube. Apparently, the true successor will be WiiU, with high probability, unless Nintendo radically changed their mind.

Both the GC and the Wii have 3MBs of eDRAM.

The one key area of bandwidth, that has caused a fair quantity of controversy in its inclusion of specifications, is that of bandwidth available from the ROPS to the eDRAM, which stands at 256GB/s. The eDRAM is always going to be the primary location for any of the bandwidth intensive frame buffer operations and so it is specifically designed to remove the frame buffer memory bandwidth bottleneck - additionally, Z and colour access patterns tend not to be particularly optimal for traditional DRAM controllers where there are frequent read/write penalties, so by placing all of these operations in the eDRAM daughter die, aside from the system calls, this leaves the system memory bus free for texture and vertex data fetches which are both read only and are therefore highly efficient. Of course, with 10MB of frame buffer space available this isn't sufficient to fit the entire frame buffer in with 4x FSAA enabled at High Definition resolutions and we'll cover how this is handled later in the article.
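A rough Python sketch of why 10MB falls short. It assumes 4 bytes of colour plus 4 bytes of Z/stencil per sample and 1280x720 as the HD resolution; the excerpt doesn't give the buffer formats, so those are my assumptions, and the function names are made up.

```python
EDRAM_BYTES = 10 * 1024 * 1024  # the 10MB frame buffer space from the excerpt


def framebuffer_bytes(width, height, samples, color_bytes=4, depth_bytes=4):
    """Colour plus Z storage for every sample of every pixel (assumed formats)."""
    return width * height * samples * (color_bytes + depth_bytes)


def chunks_needed(size_bytes, capacity_bytes=EDRAM_BYTES):
    """How many capacity-sized pieces the buffer must be split into (ceiling division)."""
    return -(-size_bytes // capacity_bytes)


for samples in (1, 2, 4):
    size = framebuffer_bytes(1280, 720, samples)
    print(f"720p, {samples}x AA: {size / (1024 * 1024):.1f} MB, "
          f"{chunks_needed(size)} chunk(s) of 10MB")
```

Under those assumptions 720p without AA fits in one go at about 7MB, 2x AA needs about 14MB, and 4x AA needs about 28MB, so the frame would have to be handled in at least three pieces. Swap in 1920 and 1080 to see how a larger pool like the rumoured 96MB would compare.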

Hm, bear with me now. Assuming the eDRAM rumour is true, what if that's the only RAM on the Wii U?

Just an obscene amount of eDRAM but no normal RAM, how would it deal with games and how easy would it be to develop for?

I'm gonna quote this because no one seems to be answering the question. The people that probably could answer it just seem to be telling us why it won't happen instead of what it would be like if it did happen.

We understand that it is absurd that that much eDRAM would be possible (especially financially), but what if it were true?

TheMagician said: Do people reckon the Wii U could handle the PC versions of Arkham City and Battlefield 3?

LiquidMetal14 said: Nope. Not with the same fidelity. Especially when the specs speak for themselves. It could handle it as good as the PS3 or 360 going by those specs.

If the game doesn't have ram eaten up by having to cater to that controller, it should look better though but we don't know how the system works right now.

doomed1 said: [..]with the specs in this OP, you'd better believe it would run. Even under 1GB, 768MB of eDRAM would eliminate so many bottlenecks that you would be able to run data through the quad core in ridiculous chunks and thus get massively better speeds despite lower numbers.

As simply as I can put this:

eDRAM is very, very fast because it sits on the processor itself, and that is a huge deal. The Xbox 360 has 10 MB. This rumor says the WiiU can have up to 96 MB (assuming the rumor was misheard and they're not saying it's 768 MB, which is insane and practically impossible at present costs). So you see how big of a jump this is. This type of RAM can be used in many ways and developers will be able to push the system far beyond the PS3 and 360 if this is true.

The eDRAM is separate from the normal system memory, which is likely to be higher than it is in the HD Twins (they both have 512 MB) but probably no higher than 1024 MB. This sort of memory allows levels to be larger, geometry to be more complex and nicer textures to be used. A system memory upgrade is a good jump for the WiiU and a pretty obvious inclusion but nowhere near as big of a deal as the eDRAM stuff.

I would just add to his post that the eDRAM would handle the more memory intensive functions. Game coding would be helped on the CPU side, and on the GPU side (based on Xenos) things like AA and alpha blending so they won't depend on the slower memory. We talked a little while back about the current Wii U specs possibly running BF3 at max at 720p/60. That hypothetical console should make 1080p/60 at max easily obtainable. I'd assume 1GB of system memory would be virtually available only for textures and other less bandwidth intensive functions. But I'm still working the kinks out on things I'm relearning, so that's about the best I can do for now. To quote Einstein, “If you can't explain it simply, you don't understand it well enough.”

Can one of you amazing tech people that have made this thread a fun read these past few months make a thread explaining how some of this basic tech stuff works and how console hardware works differently than PCs? Cost vs performance, RAM vs eDRAM vs speed, etc?

It can be a general help thread for all new next gen console discussion.

With the loop/720 discussion and all the previous ignorance earlier in this thread, I have had enough. It would be amazing to spread some knowledge up in this bitch so the level of discussion climbs a bit instead of the one and done ignorant posts that are so common.

It might even get sticky'd

Yes I do. The real time lighting that's going on in the Zelda and Bird demos is beyond what's been done even in the best of PS360 games. The quality of the global illumination being used, and the smoothness and subtlety of the color bleed in the higher quality bird demo, is beyond the current generation. I also love how you seem to have found the most highly compressed jpeg of the bird demo out there, so that all its colors and detail were crushed.

While the polygon count and the textures of the Zelda Demo aren't above what the current gen can do (which makes sense since I could have sworn they said they based the demo on TP assets) the lighting engine is well ahead of just about anything on the PS360.

For people who don't know what myself or EatChildren are talking about in regards to color bleed/radiosity, maybe an explanation is needed.

Say you have a green object sitting next to a white object. The light that hits the green object is going to have all the frequencies absorbed except for green, and that's what it's going to bounce back. So when that green light hits part of the white object, it's going to reflect back as green, mixed with the white light.

I found this image online to give you a quick example.

Notice how the color of the different objects "bleeds" off into close by objects. It's easily noticeable with the blue of the wall affecting the cylinder, or the cone affecting the donut shape.
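If it helps, the single bounce described above can be sketched in a few lines of Python. Every number here (the albedos and the bounce strength) is made up for illustration, and this is nowhere near a real radiosity solver, just the per-channel arithmetic of one bounce.

```python
def reflect(light, albedo):
    """Light leaving a surface: each RGB channel scaled by how much the surface reflects."""
    return tuple(l * a for l, a in zip(light, albedo))


white_light = (1.0, 1.0, 1.0)
green_albedo = (0.1, 0.8, 0.1)   # hypothetical: absorbs most red and blue
white_albedo = (0.9, 0.9, 0.9)   # hypothetical: reflects all channels about equally
bounce_strength = 0.3            # hypothetical share of bounced light reaching the white object

# First bounce: white light hits the green object and comes back mostly green.
bounced = reflect(white_light, green_albedo)

# The white object receives direct white light plus some of that green bounce.
incoming = tuple(d + bounce_strength * b for d, b in zip(white_light, bounced))

# What the viewer sees on the white object near the green one.
seen = reflect(incoming, white_albedo)
print("red, green, blue on the white object:", seen)
```

The green channel on the white object ends up higher than red and blue, which is the tint you see in the image. A lighting engine doing this in real time has to work that out for every surface pair in view, which is why it is expensive.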

Now if you watch a quality feed of the bird demo you'll see this kind of stuff all over the place, which you don't see in current gen lighting engines.

will sucks
