Elog


I would be more careful about dancing victory laps. There has been speculation about two things for the PS5 die: that the new GE (Geometry Engine) is part of the AMD roadmap, and the cache sizes (the only way the PS5 could have a large cache is with an off-die cache, since there are not enough mm² on the die for it). It is the GE that has had the 'RDNA3' rumour attached to it. You amaze me:

"RGT is probably the best-sourced YouTuber, but he got the unified Zen 3 cache wrong.

Also the upgrade to 38 CUs and the upgrade to 16 Gbps GDDR6, iirc." (wrong)

"He gets info by piecing together the bits he hears over the internet and fishing for it via DMs. Believe me, he doesn't know much."

"So no unified L3 cache? I guess we can all blacklist RedGamingTech now."

"Yes I am happy he is caught to have a bad source."

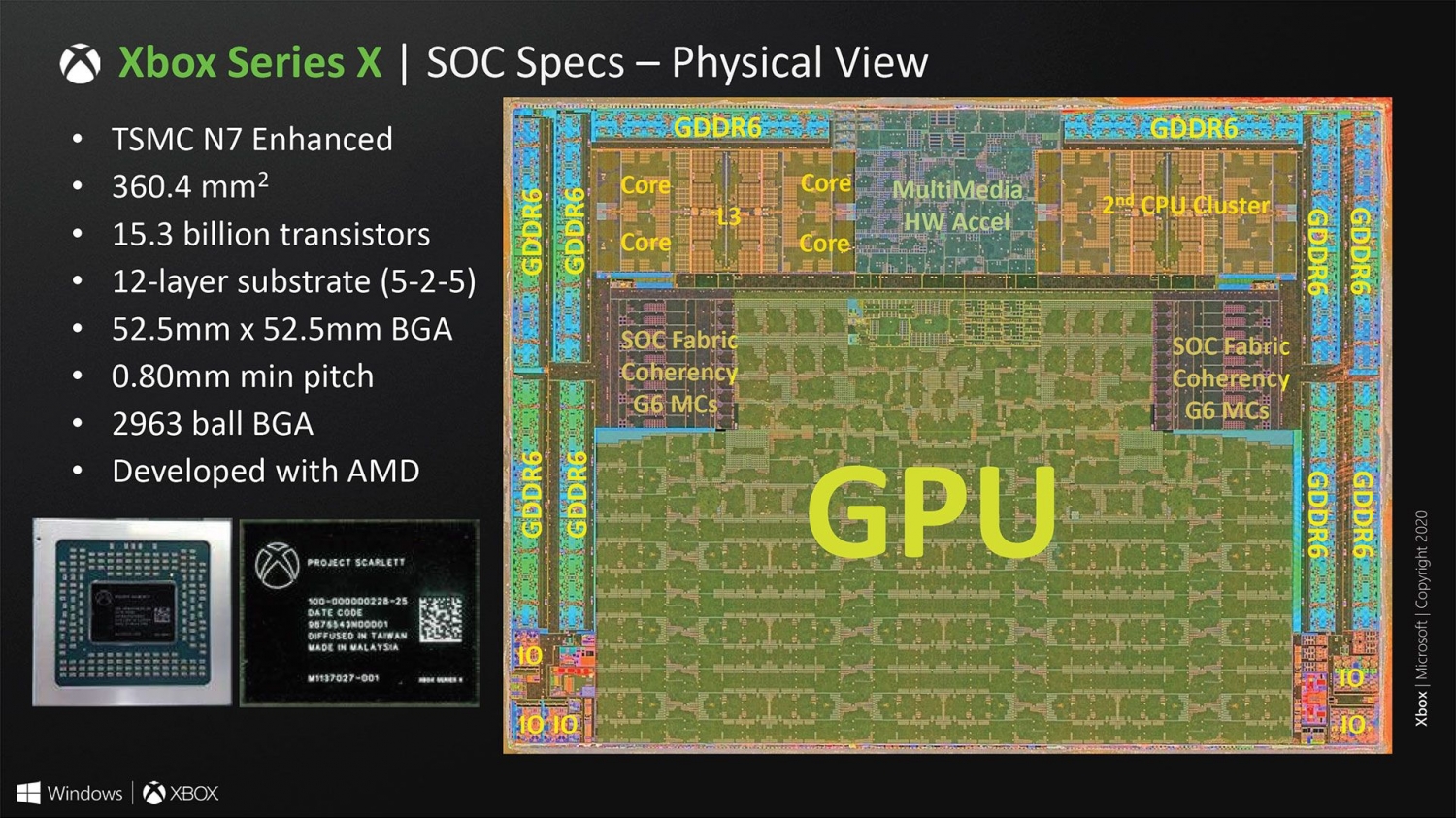

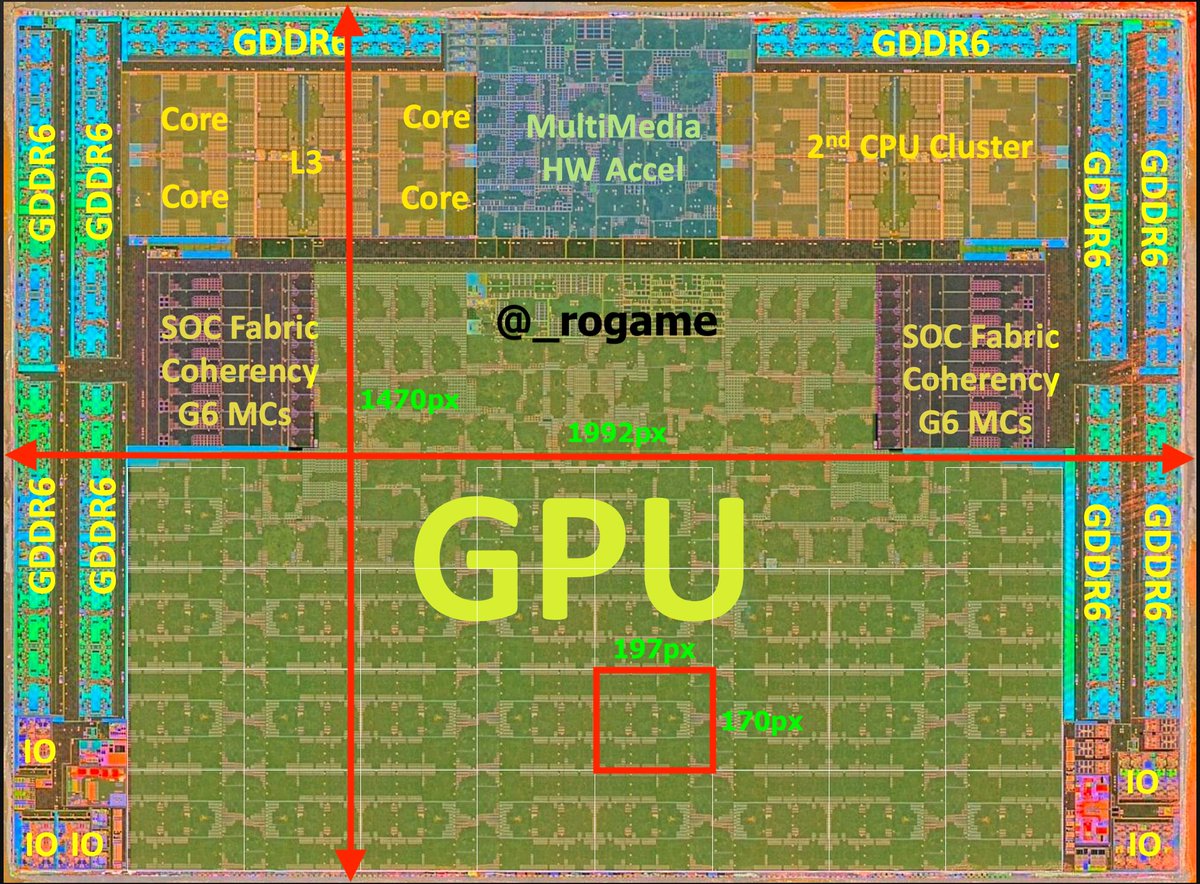

"Eyeballing it, it looks like 2 x 4 MB L3 for the CPU, so the same size as XSX / Renoir.

Surprise to no-one, I'm sure.

Also lmao Red Gaming Tech."

etc., etc., etc.

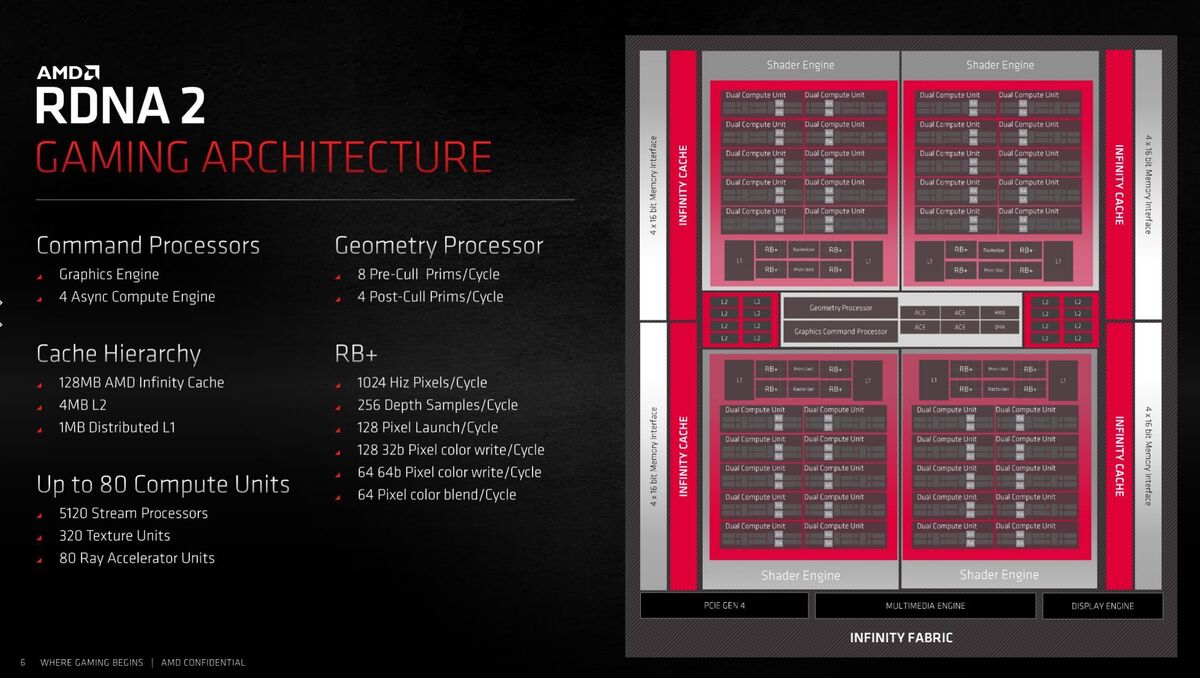

The central GE and command processor section in this die shot is massive compared to the equivalent section on AMD's graphics cards and on the XSX. So the first speculation, the key one, could very well be legit.