Alan Wake 2 on PlayStation 5 Pro – Behind The Scenes

- Thread starter Thick Thighs Save Lives

- Start date

- Game Dev

For whatever identical screen size and distance, 720p upscaled to 1440p looks worse than 864p upscaled to 4K. The quality isn't determined by the factor you've scaled by. Only when that factor is improving your native base resolution does the factor mean better image quality.

Yes, but it depends on how many people use performance mode at 1440p resolution. Other than that, 720p should look better on a 1440p screen than on a 4K screen even using normal scaling: you have 1/4 of the information on a 1440p screen and 1/9 on a 4K screen.

It's well known that DLSS performs best at 4K output, where even 1080p Performance mode usually looks good. On 1440p I don't think it's recommended to go below Balanced, while on 1080p no one sane should go below Quality.

Of course anyone can do anything but results aren't pretty below some point of native res.

720p upscaled to 1440p will look worse than 720p upscaled to 4K. Even if you were to use basic nearest-neighbor upscaling, at worst it will look the same, let alone looking 'worse' with AI upscaling.

On DLSS Performance it's 720p; on DLSS Balanced you're looking at 835p on a 1440p display. At the highest setting, Quality, you're looking at 960p, barely above 900.

So going back to what was said:

Crazy that we have sub 900p native resolution in 2024 and people are rejoicing.

What do you think is the internal resolution when people with high/semi-high-end RTX cards engage DLSS at 1440p?

Md ray is right. Even in 2024, about 94% of Steam users are on 1440p or below.

When they enable DLSS (Performance or Balanced) they're looking at image quality worse than 864p>4K. The comment disparaging the 864p native res was pretty silly. 55%, the majority, are on 1080p displays, so an even worse native res than on 1440p displays: down to 626p or 540p if they decide to use it.
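For reference, the internal resolutions quoted above can be reproduced with a short sketch. The per-axis scale factors below are the commonly cited DLSS defaults and are an assumption here (the thread does not state them, and individual games can override them); `internal_height` and `pixel_fraction` are hypothetical helper names.

```python
# Commonly cited per-axis DLSS render scales (assumed defaults, not taken
# from the thread; individual games can override them).
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_height(output_height: int, scale: float) -> int:
    """Vertical render resolution for a given output height and per-axis scale."""
    return round(output_height * scale)


def pixel_fraction(internal: int, output: int) -> float:
    """Share of output pixels actually rendered (same aspect ratio assumed)."""
    return (internal / output) ** 2


if __name__ == "__main__":
    for output in (1080, 1440, 2160):
        for mode, scale in DLSS_SCALE.items():
            h = internal_height(output, scale)
            share = pixel_fraction(h, output)
            print(f"{output}p {mode:<17} -> {h}p ({share:.0%} of output pixels)")

    # The Pro performance mode discussed in the thread: 864p upscaled to 2160p.
    print(f"864p -> 2160p: per-axis scale {864 / 2160:.2f}, "
          f"{pixel_fraction(864, 2160):.0%} of output pixels")
```

Under these assumed factors, 864p to 4K works out to a per-axis scale of 0.40, which sits between the Performance (0.5) and Ultra Performance (0.33) ratios. The numbers only describe input resolution; they say nothing about how a given upscaler looks in motion.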


Physiognomonics

Member

This is just a patch for an existing game optimized on PC. We are talking about a whole new API for a new console. What were you expecting? That it would compete with a 4080 at launch? This is to get developers used to Sony's new APIs and will need years of maturity before getting it right.

So not even hardware RT for GI...not going to lie, that's disappointing for a supposedly next-gen AMD RT pipeline which is supposed to be on par with Ampere/close to Lovelace in RT efficiency.

Senua

Member

What crazy person would use Performance DLSS at 1440p lol, it'll look shite. Even Performance at 4K isn't brilliant. Anything below Balanced at 4K is a large step down. I use DLAA on my 1440p monitor. For my 4K TV it's Balanced or Quality; I'll drop other settings before going to Performance (1080p).

For whatever identical screen size and distance, 720p upscaled to 1440p looks worse than 864p upscaled to 4K. The quality isn't determined by the factor you've scaled by. Only when that factor is improving your native base resolution does the factor mean better image quality.

720p upscaled to 1440p will look worse than 720p upscaled to 4k. Even if you were to use basic nearest-neighbor upscaling, at worse it will look the same let alone it looking 'worse' with AI upscaling.

on DLSS perf its 720p, DLSS Balanced you're looking at 835p on a 1440p display. At the highest Quality you're looking at 960p. barely just above 900.

So going back to what was said:

Md ray is right. Even in 2024 about 94% of steam users are on 1440p or below

When they enable DLSS (performance or balanced) they're looking at image quality worse than 864p>4k. The comment was pretty silly disparaging the 864p native res. 55%, the majority are on 1080p displays so even worse native res than 1440p displays. down to 626p or 540p if they decide to use it.

Maybe you missed the part where even Balanced DLSS will look worse at 835p.

What crazy person would use Performance DLSS at 1440p lol, it'll look shite. Even Performance at 4K isn't brilliant. Anything below Balanced at 4K is a large step down. I use DLAA on my 1440p monitor. For my 4K TV it's Balanced or Quality; I'll drop other settings before going to Performance (1080p).

DeepEnigma

Gold Member

It's not at all impressive.


Senua

Member

I don't use resolutions that low, and you shouldn't either.

Maybe you missed the part where even Balanced DLSS will look worse at 835p.

Bojji

Gold Member

720p upscaled to 1440p will look worse than 720p upscaled to 4k. Even if you were to use basic nearest-neighbor upscaling, at worse it will look the same let alone it looking 'worse' with AI upscaling.

This is some crazy talk. First time I've heard that upscaling the same base res to an even higher resolution produces better results, lol. 720p on a 4K screen isn't pretty, I played PS3 on my LG.

Point is, Alan Wake 2 performance mode is below "performance" mode of upscaling, it probably won't look really good on 4k tv. Tolerable and better than FSR2? For sure.

Cool, we're not talking about you. You seem to have missed the point. Those with a 1440p display will be looking at 960p, 835p, 720p or 480p with whatever DLSS setting they decide to use.

I don't use resolutions that low, and you shouldn't either.

With AI it absolutely does. Even with basic nearest neighbour you're looking at identical results; with bilinear, you might prefer blocky nearest neighbour over the softer look, but none of them are worse. Did 720p look better on your 1440p Samsung?

This is some crazy talk. First time I've heard that upscaling the same base res to an even higher resolution produces better results, lol. 720p on a 4K screen isn't pretty, I played PS3 on my LG.

The point is that MD Ray was making a point that those enabling DLSS on 1440p hit even lower native res.

Point is, Alan Wake 2 performance mode is below "performance" mode of upscaling, it probably won't look really good on a 4K TV. Tolerable and better than FSR2? For sure.


Bojji

Gold Member

With AI it absolutely is or even basic nearest neighbour you're looking at identical results with Bilinear you might like blocky nearest neighbour above the softer look but none of them are worse.

The point is that MD Ray was making a point that those enabling DLSS on 1440p hit even lower native res.

I was talking about basic upscaling, and from what I have seen in my life, the less you need to upscale, the better the results:

720p looks better on a 1080p screen than on a 1440p screen.

1080p looks better on a 1440p screen than on a 4K screen etc.

Let's just agree to disagree here.

Are you able to test 3.7 with preset E? Not that I'm expecting many changes; preset E is meant to reduce ghosting and enhance temporal stability, not something you'd see in a still.

Sorry for missing your post earlier. I don't even know how to change the DLSS presets, but I can upgrade to the latest version and see how it looks.


720p looks better on 1080p screen than on 1440p screen.

No, it definitely doesn't. Your first one, possibly/subjectively, because you're talking about a resolution mismatch which would require some kind of non-integer stretching. We're not talking about that though, since you seem to think non-integer stretching is fine/better in your second one.

1080p looks better on a 1440p screen than on a 4K screen etc.
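To make the integer versus non-integer distinction concrete, here is a minimal sketch that lists the scale factor for each source/display pair mentioned in this exchange. `scale_factor` and `is_integer_scale` are hypothetical helpers, and the check only says whether each source pixel maps to a whole number of display pixels per axis; it makes no claim about which result looks better.

```python
from fractions import Fraction


def scale_factor(source_height: int, display_height: int) -> Fraction:
    """Exact per-axis scale needed to fill the display from the source."""
    return Fraction(display_height, source_height)


def is_integer_scale(source_height: int, display_height: int) -> bool:
    """True when every source pixel maps to a whole NxN block of display pixels."""
    return scale_factor(source_height, display_height).denominator == 1


if __name__ == "__main__":
    pairs = [(720, 1080), (720, 1440), (720, 2160), (1080, 1440), (1080, 2160)]
    for src, dst in pairs:
        factor = scale_factor(src, dst)
        kind = "integer" if is_integer_scale(src, dst) else "non-integer"
        print(f"{src}p -> {dst}p: x{float(factor):.3f} ({kind})")
```

With nearest-neighbour scaling, the integer cases (720p to 1440p, 720p to 4K, 1080p to 4K) duplicate each pixel evenly, while the non-integer cases (720p to 1080p, 1080p to 1440p) need some form of uneven stretching or filtering.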

Let's just agree to disagree here.


yogaflame

Member

I think we are overreacting to the full RT mode being 30 fps for AW2. Other games will have 4K with RT at 60 fps in PS5 Pro mode. That is the preference of the AW2 developer, putting more of their resources into image quality and RT, but it is still possible for the frame rate to be updated in the future as PS5 Pro PSSR ML continues to evolve. It is still too early. This is just PSSR 1.0. But it is still an impressive achievement for AW2 on PS5 Pro.


Still images won't do justice to ray traced reflections, especially on the ground. While it's higher res and with more fidelity, it really shines in motion; screen space reflections break apart once the reflected object disappears from your view/screen, so you can have a constantly shifting reflection that makes the image highly noisy. With RT, however, objects off-screen are also reflected on the respective surfaces, and the reflections don't change or cause artefacts when you move your camera.

Gaiff

SBI’s Resident Gaslighter

We're getting too caught up in numbers here. I agree that 864p is an extremely low resolution to upscale from, but Remedy did try increasing the input res from what I know and they decided to keep it the same but increase the graphical quality. PSSR is much better than FSR, so we'll have to see what it looks like in motion.

Obviously, the IQ is unlikely to be great, but if it's acceptable, it's an option. I personally never upscale from below 1080p, but for a game like Alan Wake 2 with a lot of post-processing, maybe it won't look so bad on a 4K display.


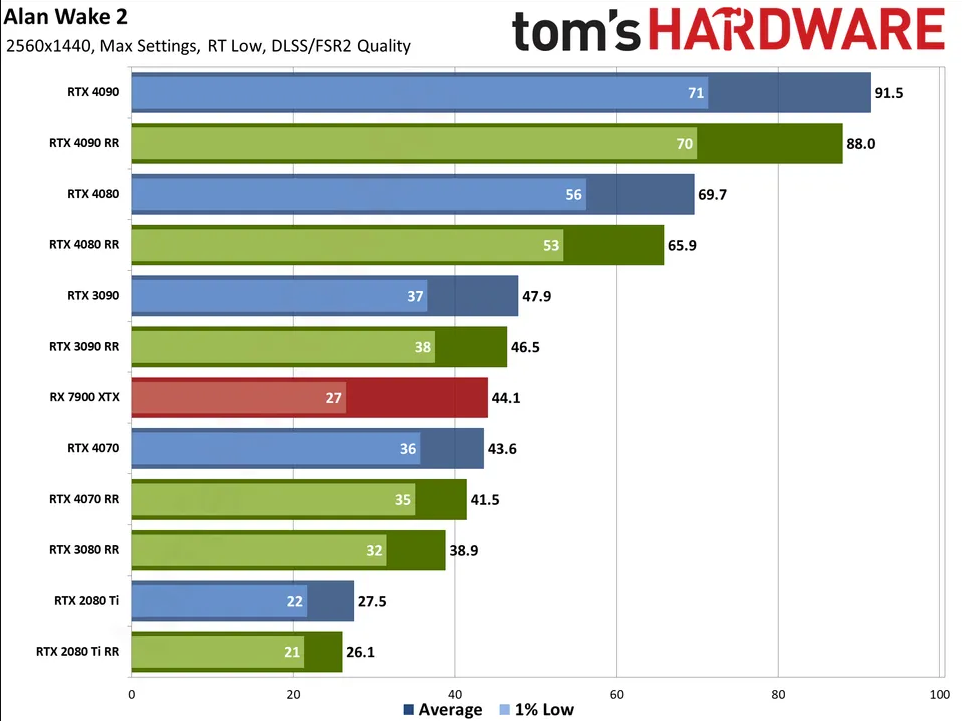

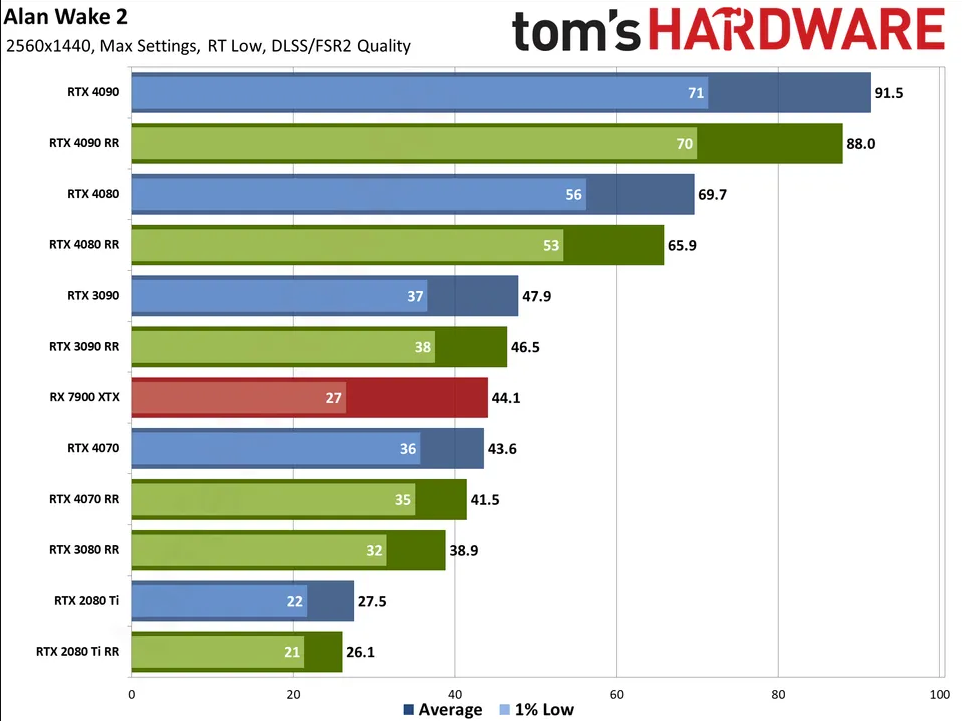

No, I was expecting it to come close to a damn 3080 in RT in a game where the developers seemed to put in more effort than most basic Pro patches. The graphics APIs on PlayStation consoles are much closer to the metal than DX12... A 3080 is hitting 40+ fps at 1080p with freaking path tracing, meanwhile the Pro is only able to turn on RT reflections with a reduced BVH, not even GI. No way you spin it, it's seriously disappointing, and it makes me lose confidence in AMD's RDNA4 competing with Lovelace, and hell, even Ampere, in RT performance, when that's the biggest upgrade of the graphics architecture.

This is just a patch for an existing game optimized on PC. We are talking about a whole new API for a new console. What were you expecting? That it would compete with a 4080 at launch? This is to get developers used to Sony's new APIs and will need years of maturity before getting it right.


Zathalus

Member

Sorry for missing your post earlier. I don't even know how to change the DLSS presets, but I can upgrade to the latest version and see how it looks.

It's pretty simple, download from here:

DLSSTweaks

Allows using DLAA anti-aliasing, customizing DLSS scaling ratios, overriding DLSS DLL version, and choosing different DLSS3.1 presets.

Drop in the folder with the game .exe and run to edit the preset for each mode.

Preset E should be the default for 3.7 but you can force override it with this tool.

No need to check Alan Wake 2 out if you don't want to, the expansion dropped yesterday so I finished that up and tested 3.7 at the start. Ghosting seemed better but on stills it was about the same, but I didn't spend that much time on it.

Gaiff

SBI’s Resident Gaslighter

The load in this game can shift widely, so it's pointless to say that the RTX 3080 has this kind of performance without comparing the scenes like-for-like. The interior sections are MUCH more manageable than the exterior ones that can clobber your fps. I'm not sure you're getting 40fps+ at 1080p with PT on a 3080 in the forest at the beginning of the game for instance.

No, I was expecting it to come close to a damn 3080 in RT in a game where the developers seemed to put in more effort than most basic Pro patches. The graphics APIs on PlayStation consoles are much closer to the metal than DX12... A 3080 is hitting 40+ fps at 1080p with freaking path tracing, meanwhile the Pro is only able to turn on RT reflections with a reduced BVH, not even GI. No way you spin it, it's seriously disappointing, and it makes me lose confidence in AMD's RDNA4 competing with Lovelace, and hell, even Ampere, in RT performance, when that's the biggest upgrade of the graphics architecture.

Worth noting that there have been nice performance increases in the latest patch. Not sure if the Pro is using that code.

James Sawyer Ford

Banned

This seems like an extremely poor PS5 Pro patch. I don't think the RT adds much here, and the image quality still looks extremely blurry/poor.

The load in this game can shift widely, so it's pointless to say that the RTX 3080 has this kind of performance without comparing the scenes like-for-like. The interior sections are MUCH more manageable than the exterior ones that can clobber your fps. I'm not sure you're getting 40fps+ at 1080p with PT on a 3080 in the forest at the beginning of the game for instance.

Worth noting that there have been nice performance increases in the latest patch. Not sure if the Pro is using that code.

I get that load can shift, but the Pro isn't even able to handle RT GI with a reduced BVH for reflections at 30fps. That's disappointing RT performance for the new architecture. I got the 40fps from the TechPowerUp benchmarks.


Gaiff

SBI’s Resident Gaslighter

I wouldn't necessarily say it cannot handle more. It's just what the devs deemed the optimal option. Again, without unlocking the fps and having the same scenes and settings, it's impossible to compare accurately.

I get that load can shift, but the Pro isn't even able to handle RT GI with a reduced BVH for reflections at 30fps. That's disappointing RT performance for the new architecture. I got the 40fps from the TechPowerUp benchmarks.

I don't think the Pro will match a 3080 in this game, but it's so far impossible to know how well it does without being able to match the benchmarks. We may be selling it short.


The quality mode seems like the best option in this game to me since you get raytracing too. The native internal res in performance mode is just a strange thing to get hung up about for those who want a higher framerate with improved settings over base; as people have said, it's no worse than enabling DLSS for better performance/settings on 1440p. Alan Wake is a graphically heavy and good-looking game. Do those who are maxing out Cyberpunk on a 4090 give a shit that their internal res is below 900p either, as long as they're getting good framerate and settings?

We're getting too caught up in numbers here. I agree that 864p is an extremely low resolution to upscale from, but Remedy did try increasing the input res from what I know and they decided to keep it the same but increase the graphical quality. PSSR is much better than FSR, so we'll have to see what it looks like in motion.

Obviously, the IQ is unlikely to be great, but if it's acceptable, it's an option. I personally never upscale from below 1080p, but for a game like Alan Wake 2 with a lot of post-processing, maybe it won't look so bad on a 4K display.

pasterpl

Member

That's on max settings. What settings are comparable on the PC vs PS5 Pro version? E.g. when DF was running the Ratchet and Clank comparison, they mentioned they had to adjust down some of the visual settings to match the PS5 Pro visual settings.

Too much you want. Here is lower resolution than on PS5 Pro. Even the XTX and 4070 can't give 60 fps.

Edit. Those reflections in the mirrors are truly next gen. /s


R&C on PS5 Pro doesn't have RTAO and RT shadows, most notably. Makes me think PS5 Pro isn't really RDNA4 after all or once again AMD has failed with RT gains.

That's on max settings. What settings are comparable on the PC vs PS5 Pro version? E.g. when DF was running the Ratchet and Clank comparison, they mentioned they had to adjust down some of the visual settings to match the PS5 Pro visual settings.

Edit. Those reflections in the mirrors are truly next gen. /s

The performance in AW2 has increased a lot; even Series S is getting a resolution upgrade. People should stop throwing out day one/pre-release benchmarks.

Too much you want. Here is lower resolution than on PS5 Pro. Even the XTX and 4070 can't give 60 fps.

This could have been much better if the engine could do DRS. The forest section is pretty heavy compared to the city or indoor sections. I see a 15 to 20 fps advantage in most places compared to the forest section. With DRS, most of these sections could run at an internal 1080p or a bit higher.

This seems like an extremely poor PS5 Pro patch. I don't think the RT adds much here, and the image quality still looks extremely blurry/poor.

This game favors Nvidia GPUs. I can see the Pro in the same ballpark as an RTX 3070 here. It is around 50 percent faster than the PS5 in performance mode, which is kind of in line with what the Pro has over the base PS5. See the DF video that shows this below.

I also found an RTX 3070 video at 4K DLSS Performance with almost the same graphics settings as quality mode, albeit with a few lower settings. It looks substantially better and crisper compared to the PS5 performance mode. I think this is perfect in terms of image clarity and quality for the Pro.

I can see why they lowered the resolution to 860p on the Pro, because the 3070 couldn't hold a flat 60fps in the forest and dropped to the low 50s. So again, with DRS, this could have looked substantially crisper in most sections of the game.

Found one more RTX 3070 video here, which can be used to simulate how the Pro would run this game at 4K PSSR performance and 4K PSSR quality. There is a lot of headroom in the indoor parts. See the video below around 24:20.

So, ideally, the Pro should have a performance mode with base PS5 quality settings and a DRS range from 860p to 1160p, an image quality mode at 40fps with a DRS range from 1440p to 1600p and higher graphics settings, and an RT mode with a range from 1270p to 1440p. Of course these would be upscaled with PSSR to 4K.
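As a rough illustration of what a DRS range like "860p to 1160p" would mean in practice, here is a minimal sketch of a dynamic resolution controller. Everything in it is an assumption for illustration: `next_render_height` is a hypothetical function, the 16.7 ms budget corresponds to a 60fps target, GPU cost is assumed to scale with pixel count, and nothing here reflects how Remedy's engine or any console SDK actually handles resolution.

```python
def next_render_height(
    current_height: float,
    gpu_frame_ms: float,
    target_ms: float = 16.7,   # assumed 60fps frame budget
    min_height: int = 860,     # lower bound of the range proposed in the post
    max_height: int = 1160,    # upper bound of the range proposed in the post
    step_limit: float = 0.1,   # assumed cap on per-frame change, to avoid visible pumping
) -> int:
    """Pick the next frame's render height from the last frame's GPU time.

    Assumes GPU cost scales with pixel count, i.e. with height squared,
    so the per-axis correction is the square root of the time ratio.
    """
    ratio = (target_ms / gpu_frame_ms) ** 0.5
    # Limit how far the resolution may move in a single frame.
    ratio = max(1.0 - step_limit, min(1.0 + step_limit, ratio))
    proposed = current_height * ratio
    # Keep the result inside the allowed DRS range.
    return round(max(min_height, min(max_height, proposed)))


if __name__ == "__main__":
    height = 1000
    # Hypothetical GPU times: a light indoor stretch, then a heavy forest stretch.
    for gpu_ms in (12.0, 12.0, 13.0, 19.0, 21.0, 20.0, 17.0):
        height = next_render_height(height, gpu_ms)
        print(f"GPU {gpu_ms:4.1f} ms -> render at {height}p")
```

In lighter scenes the controller drifts up toward the top of the range, and in heavy ones it falls back to the floor, which is the headroom argument made in the post.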

Edit: Now that I think about it, why did DF not ask Remedy why there is no DRS implementation for consoles? It is such a crucial tool for extracting more performance/image quality out of a game like this, where the performance range between areas is huge.

I can see the Series X would benefit more than the PS5, seeing as how it holds a flat 60 when the PS5 drops. That also holds true for the Pro, seeing how this game favors more compute units. So potentially, the Pro and the Series X leave a lot of performance unused in most indoor areas because the resolution has to be capped at 860p to keep the forest section running at a flat 60fps. This is kind of dumb.


pasterpl

Member

I think it is RDNA 3.5 and that PSSR is a lower tier of AI-based FSR4.

Makes me think PS5 Pro isn't really RDNA4 after all or once again AMD has failed with RT gains.

Little Chicken

Banned

How many times must I be hoodwinked by Sony

Venom Snake

Member

How many times must I be hoodwinked by Sony

What did they do to you this time?


Feel Like I'm On 42

Member

.


Feel Like I'm On 42

Member

No, i was expecting it to come close to a damn 3080 in rt in a game where the developers seemed to put more effort in than most basic pro patches. The graphics API on the PlayStation consoles are much closer to the code than dx12... a 3080 is hitting 40+fps at 1080 with freaking path tracing meanwhile the pro is only able to turn on just rt reflections with reduced bvh not even gi. No way you spin it it's seriously disappointing, and makes me lose confidence in amds rdna4 in terms of competing with love lace and hell even ampere in terms of rt performance when that's the biggest upgrade of the graphics architecture.

THIS^^^ Why do these people think I've been so god damned disappointed and shouting from the rooftops? Because damn near every Pro update has sucked so far, coming in way below everyone's expectations for the Pro prior to all this info coming out!

Alan Wake 2 is one of those games where people were hoping to see the shackles come off on consoles, like Cyberpunk 2077 and Black Myth... two of those games don't have patches yet, but AW2 is a massive, massive disappointment! The only games that seem to be transformed are FF7 Rebirth and that F1 racing game... every other Pro update is depressing so far.

Feel Like I'm On 42

Member

This could have been much better if the engine can do DRS. The forest section is pretty heavy compare to the city or indoor section. I see 15 to 20 fps advantages in most places compare to the forest section. With DRS, most of these section could run at a internal 1080p or a bit higher.

In this game, it favors Nvdia GPU. I can see the pro in the same ball park as GTX 3070 here. It is around 50 percent faster than the ps5 in performance mode which is kinda inline with what the pro has over the base ps5. See DF video that show this below.

I also found a GTX3070 video 4k DLSS performance with almost the same graphics setting as quality mode abate a few lower settings. It looks substantial better and more crisp compare to the ps5 performance mode. I think this is perfect in term of image clarity and quality for the pro.

I can see why they lower the resolution to 860p on the pro because the 3070 couldn't hold a flat 60fps in the forest and it drop to a low 50. So again, with DRS, this could have look substantially more crisp in most sections Of the game.

Found 1 more GTX 3070 video here which can use to simulate how a pro run this game at 4k PSSR performance and 4k PSSR quality. There are a lot of head room in the indoor part. See video below around 24:20.

So in an ideal way. The pro should have a performance mode with a base ps5 quality setting and a DRS range from 860p to 1160p. An image quality mode at 40fps with DRS range from 1440p to 1600p with higher graphic setting, and a RT mode range from 1270p to 1440p. Of course these will upscale with PSSR to 4k.

Edit: Now that I think about it why DF did not question Remedy there is no DRS implementation for consoles? It is such a crucial tool to extract more performance/image quality out of a game like this where the performance range is huge between most area.

I can see the series x would benefit more than the ps5 seeing as how it hold a flat 60 when the ps5 drop. It also hold true for the pro too seeing how this game favor more compute unitS. So potentially, the pro and the series x left lot of unused performance in most indoor area because the resolution have to cap at 860p to keep forest section run a flat 60fps. This kinda dumb.

Excellent post and these are the types of questions we should ALL be asking

MisterXDTV

Member

I think it is RDNA 3.5 and that PssR is lower tier of AI based FSR4.

This sounds familiar. We are back to 2020

LOL

"PS5 is RDNA 1.5"

Gaiff

SBI’s Resident Gaslighter

Does it really favor CUs or compute power? The Pro seems to disprove that theory. It has over 60% more throughput than the PS5 in compute, yet Alan Wake 2 doesn't seem to scale all that well.

I can see the Series X would benefit more than the PS5, seeing as how it holds a flat 60 when the PS5 drops. That also holds true for the Pro, seeing how this game favors more compute units. So potentially, the Pro and the Series X leave a lot of performance unused in most indoor areas because the resolution has to be capped at 860p to keep the forest section running at a flat 60fps. This is kind of dumb.

For whatever reason, this game simply doesn't play nice with the PS5 or there is something that makes it lag behind because Ampere cards are much faster than RDNA2 or even Turing GPUs in this game. The 3070, which usually matches the 2080 Ti, is more than 10% faster in Alan Wake 2. It's also 50% faster than the PS5 which is really the upper bounds of the differential that can exist between them.


TheRedRiders

Member

Memory bandwidth limitations?

Does it really favor CUs or compute power? The Pro seems to disprove that theory. It has over 60% more throughput than the PS5 in compute, yet Alan Wake 2 doesn't seem to scale all that well.

For whatever reason, this game simply doesn't play nice with the PS5 or there is something that makes it lag behind because Ampere cards are much faster than RDNA2 or even Turing GPUs in this game. The 3070, which usually matches the 2080 Ti, is more than 10% faster in Alan Wake 2. It's also 50% faster than the PS5 which is really the upper bounds of the differential that can exist between them.

Radical_3d

Member

Most likely. The Pro's memory is a mere 20-something percent faster than the old model's. In terms of raw performance, that's the limit it is going to reach. That's why it's primarily an AI machine.

Memory bandwidth limitations?

MisterXDTV

Member

Most likely. The Pro is a mere 20 something faster than the old model. In terms of raw performance that's the limit where it is going to reach. That's why it's primarily an AI machine.

Actually it's 30%.... But let's continue spreading bullshit

Gaiff

SBI’s Resident Gaslighter

Possibly that among other things. More than likely a number of factors compounding one another to give us this result. Gonna wait until DF does a benchmark. The regular PS5 spends a lot of time below 60fps in the woods and I think it even drops to the 40s. If the Pro is locked to 60, that means there is extra headroom and it could simply be Remedy being conservative with the settings, just like Housemarque was with Returnal. They set the internal res to 1080p and the output to 1440p if I remember correctly. A 3060 can run it at native 1440p/High without a problem if I'm not mistaken.

Memory bandwidth limitations?

It's 28%

Actually it's 30%.... But let's continue spreading bullshit


MisterXDTV

Member

Possibly that among other things. More than likely a number of factors compounding one another to give us this result. Gonna wait until DF does a benchmark. The regular PS5 spends a lot of time below 60fps in the woods and I think it even drops to the 40s. If the Pro is locked to 60, that means there is extra headroom and it could simply be Remedy being conservative with the settings, just like Housmarque was with Returnal. They set the internal res to 1080p and the output to 1440p if I remember correctly. A 3060 can run it at native 1440p/High without a problem if I'm not mistaken.

It's 28%

It's 29%, I guess you can round it up to 30% not 20%

LOL

Bojji

Gold Member

This sounds familiar. We are back to 2020

LOL

"PS5 is RDNA 1.5"

Whatever RDNA version number it was it wasn't 2.0 - it lacked features.

Pro has RDNA 3.5 raster parts and 4.0 RT parts based on rumors.

It's the newest AMD tech vs. outdated/not complete on PS5 launch so it's a step up.

Does it really favor CUs or compute power? The Pro seems to disprove that theory. It has over 60% more throughput than the PS5 in compute, yet Alan Wake 2 doesn't seem to scale all that well.

For whatever reason, this game simply doesn't play nice with the PS5 or there is something that makes it lag behind because Ampere cards are much faster than RDNA2 or even Turing GPUs in this game. The 3070, which usually matches the 2080 Ti, is more than 10% faster in Alan Wake 2. It's also 50% faster than the PS5 which is really the upper bounds of the differential that can exist between them.

This game also prefers Ada in RT over Ampere (the 4070 is faster than the 3080, while the reverse is true without RT). Optimization probably played some role here; they also released a massive performance patch for GPUs without mesh shaders at some point (while the 5700 XT was left in the dust).

Gaiff

SBI’s Resident Gaslighter

The poster said 20-something...you know, meaning in the 20s. 28 and 29 are also in the 20s.

It's 29%, I guess you can round it up to 30% not 20%

LOL


MisterXDTV

Member

The poster said 20 something...you know, meaning in the 20s, which 28 and 29 are also in the 20s.

20 something in my book means 21 or 22

NOT 29

LOL

Gaiff

SBI’s Resident Gaslighter

Your book is completely wrong. Anything from 20 to 29 is 20-something.

20 something in my book means 21 or 22

NOT 29

LOL

MisterXDTV

Member

Your book is completely wrong. Anything from 20 to 29 is 20-something.

Gaiff

SBI’s Resident Gaslighter

I don't see why you're reacting like a child. You tried to nitpick but put your foot in your mouth. Imagine getting bent out of shape because a poster said the Pro's memory is 20-something faster.

Also, 28%. If you're going to be a jerk and split hairs, at least get it right.
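For what it's worth, the 28% versus 29% disagreement comes down to truncating versus rounding the same number. A quick sketch, assuming the commonly quoted memory bandwidth figures of 448 GB/s for the PS5 and 576 GB/s for the PS5 Pro (these figures are not stated anywhere in the thread, and `percent_increase` is a hypothetical helper):

```python
# Assumed publicly quoted memory bandwidth figures (not from the thread).
PS5_BANDWIDTH_GBS = 448.0
PS5_PRO_BANDWIDTH_GBS = 576.0


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new / old - 1.0) * 100.0


if __name__ == "__main__":
    gain = percent_increase(PS5_BANDWIDTH_GBS, PS5_PRO_BANDWIDTH_GBS)
    print(f"Bandwidth gain: {gain:.1f}%")   # prints "Bandwidth gain: 28.6%"
    print(f"Truncated: {int(gain)}%")       # prints "Truncated: 28%"
    print(f"Rounded:   {round(gain)}%")     # prints "Rounded:   29%"
```

Under those assumed figures the increase is about 28.6%, so "28%", "29%" and "20-something" all describe the same value.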

pasterpl

Member

Probably PSSR is using elements of FSR4 (which is supposed to be AI-based).

Pro has RDNA 3.5 raster parts and 4.0 RT parts based on rumors.

It's the newest AMD tech vs. outdated/not complete on PS5 launch so it's a step up.

Physiognomonics

Member

Do we have comparisons between both 60fps modes? This will apply to most people's tastes. Who will play this at 30fps on their $1000 OLED TV?


Senua

Member

20 something in my book means 21 or 22

NOT 29

LOL

Yes, I think it does, and maybe it's the combination with mesh shaders, and the Pro does have mesh shaders this time. The Series X being 10fps or more ahead in the forest points to this, but we're all guessing here.

Does it really favor CUs or compute power? The Pro seems to disprove that theory. It has over 60% more throughput than the PS5 in compute, yet Alan Wake 2 doesn't seem to scale all that well.

For whatever reason, this game simply doesn't play nice with the PS5 or there is something that makes it lag behind because Ampere cards are much faster than RDNA2 or even Turing GPUs in this game. The 3070, which usually matches the 2080 Ti, is more than 10% faster in Alan Wake 2. It's also 50% faster than the PS5 which is really the upper bounds of the differential that can exist between them.

In this video, you can see the RTX 3070 struggling to hold a flat 60fps in the forest section with similar settings to the Pro at 4K DLSS Performance. I think it is reasonable to assume the Pro will perform similarly if it goes from 860p to 1080p, assuming that the Pro will hold a flat 60 in the forest like the Series X.

It's all a guessing game here, but what I said holds true. DRS would have been much better for consoles, especially the Series X and maybe the Pro too. The extra performance in most indoor areas could help with image clarity and stability.


mckmas8808

Mckmaster uses MasterCard to buy Slave drives

28 is closer to 30. Saying 20 something would be dumber than just saying 30 in this instance. I know you get that.