Dude, look at these benchmarks. The 7900 XTX is quite competitive with the 4080 and $200 cheaper. Please refrain from embarrassing yourself.

Oh yeah, counting those odd games that favour AMD, like Call of Duty, twice. Big brain move there. You totally fit with the avatar you have.

Here's a review with 50 games from a site that is actually doing good work (best for VR)

The Hellhound RX 7900 XTX takes on the RTX 4080 in more than 50 VR & PC Games, GPGPU & SPEC Workstation Benchmarks (babeltechreviews.com)

I did the average for 4K only, I'm lazy. 7900 XTX as the baseline (100%)

So, games with RT only ALL APIs - 20 games

4090 168.7%

4080 123.7%

Dirt 5, Far Cry 6 and Forza Horizon 5 are in the 91-93% range for the 4080, while it goes crazy in Crysis Remastered at 236.3%, which I don't get. I thought they had a software RT solution there?

Rasterization only ALL APIs - 21 games

4090 127.1%

4080 93.7%

Rasterization only Vulkan - 5 games

4090 138.5%

4080 98.2%

Rasterization only DX12 2021-22 - 3 games

4090 126.7%

4080 92.4%

Rasterization only DX12 2018-20 - 7 games

4090 127.2%

4080 95.5%

Rasterization only DX11 - 6 games

4090 135.2%

4080 101.7%
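
If anyone wants to redo these averages, here's a rough sketch of how a baseline-relative average can be computed. It assumes the average is the plain mean of per-game ratios against the 7900 XTX, which is my guess at the method and not something stated above. The function name `relative_average` and the fps values are made-up placeholders, not numbers from the review.

```python
from statistics import mean

def relative_average(fps_by_game, card, baseline="7900 XTX"):
    """Mean of per-game (card fps / baseline fps), as a percentage.

    Assumption: each game counts equally, whatever its absolute fps.
    """
    ratios = [fps[card] / fps[baseline] for fps in fps_by_game.values()]
    return round(100 * mean(ratios), 1)

# Placeholder 4K numbers for illustration only, NOT taken from the review.
sample_4k_fps = {
    "game_a": {"7900 XTX": 100.0, "4080": 90.0},
    "game_b": {"7900 XTX": 80.0, "4080": 88.0},
}

print(relative_average(sample_4k_fps, "4080"))
```

Averaging ratios per game keeps a single 300 fps title from drowning out the rest, which is why I'd pick it over dividing the summed fps.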

But ray tracing... yes, ray tracing is better on Nvidia's system. Heck, even Intel has done a better job with ray tracing than AMD. No question, and if that is a huge priority to you, then go with Nvidia, but I'd argue that you should spend the extra $$ and just get the 4090.

The 4080 has the specs of what the 4070 Ti should have been.

And what is that supposed to make AMD's flagship, then?

Do you even remember how 6900XT vs 3090 was?

The 4080 should have had about 11K CUDA cores and a 320-bit memory bus. That gives room for a 4080 Ti to have around 13K, which would go nowhere near encroaching on the 4090's MASSIVE 16K CUDA cores. The 4070 Ti would make a great 4070 if it had a 256-bit bus. The current 4070 would make a good 4060 Ti, and so on down the line.

Imagine what Nvidia did with that cut down chip?

We all thought that Nvidia priced the 4080 as a push to sell 4090s, but it turns out they actually fucking nailed the AMD flagship with a card that should normally cost ~$700!

How does a card with

379 mm² vs 529 mm²

45.9B transistors vs 58B transistors

4864 vs 6144 Cores

~30% of transistors reserved for ML & RT vs one that concentrates on rasterization

End up only 6.3% behind in rasterization? With the 4080 consuming 100W less than the 7900 XTX?
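
To put rough numbers on that comparison, here's a quick sketch using only figures quoted in this thread (die sizes, transistor counts, and the 21-game raster average with the XTX at 100%). The variable and function names are mine, and dividing performance by die area is just one crude way to frame it.

```python
# All inputs are the figures quoted in this thread: 4080 vs 7900 XTX.
die_mm2 = {"4080": 379, "7900 XTX": 529}
transistors = {"4080": 45.9, "7900 XTX": 58.0}  # same unit on both sides, so it cancels
raster_pct = {"4080": 93.7, "7900 XTX": 100.0}  # 21-game raster average, XTX = 100%

def perf_per_area(card):
    """Raster score per mm² of die, a crude efficiency metric."""
    return raster_pct[card] / die_mm2[card]

area_ratio = die_mm2["4080"] / die_mm2["7900 XTX"]
transistor_ratio = transistors["4080"] / transistors["7900 XTX"]
density_advantage = perf_per_area("4080") / perf_per_area("7900 XTX")

print(f"4080 die area is {area_ratio:.1%} of the XTX's")
print(f"4080 transistor count is {transistor_ratio:.1%} of the XTX's")
print(f"4080 raster perf per mm² is {density_advantage:.2f}x the XTX's")
```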

Look, if you don't care about RT,

Even if the data shows otherwise for what is now the vast majority of RTX users, fine, but let's entertain for a minute that we don't care about RT at the dawn of the coming tsunami of HW Lumen Unreal Engine 5 games, just for fun.

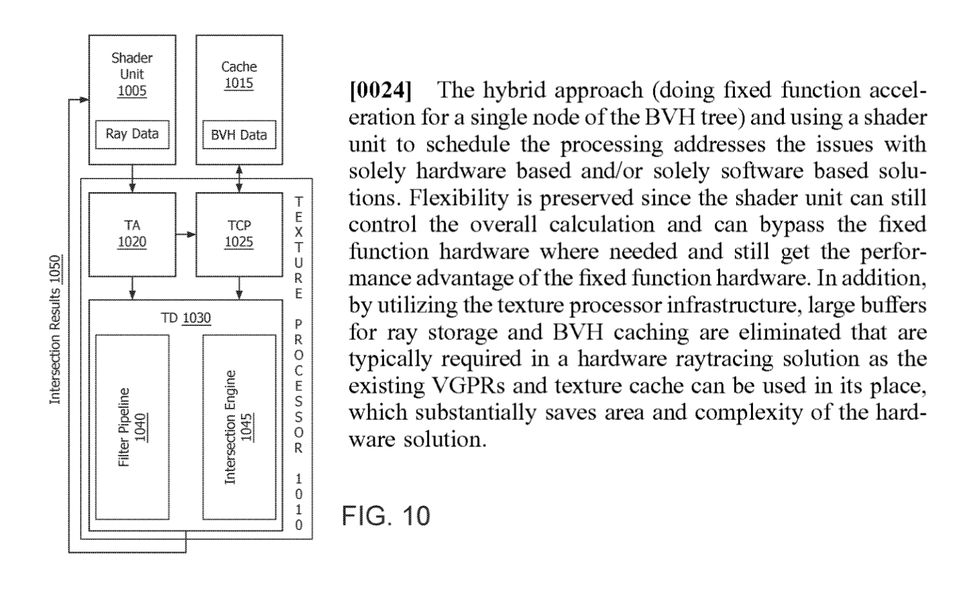

So RT's not important. Cool. That's why we've had the hybrid RT pipeline since RDNA 2.

Keyword here from AMD's own patent: saves area and complexity. Saves area.

Cool beans. I can respect that engineering choice.

So then..

Why is AMD failing miserably at just trading blows in rasterization with a 379 mm² chip that has a big portion of its silicon dedicated to RT cores and ML?

Massive tech failure at AMD. If you're butchering ML and RT and still fail to dominate rasterization, it's a failed architecture. Nvidia's engineers schooled AMD's big big time.

AMD is fumbling around. Buying team red is basically just teenage angst, a rebellious mindset against Nvidia with "mah open source!" at this point.

Saves $200 for a cooler that barely keeps up with the power limits, is one of the noisiest cards since Vega and has terrible coil whine. Pays $100-$200 more for a respectable AIB cooler solution... tolerates 45% lower RT performance in heavy RT games for roughly the same price. There's a market for all kinds of special people, I guess.

But don't get me wrong, both are shit buys, both are fleecing customers.