How is that 3090 that you paid 700 dollars over market price for working out? I definitely expected you to be super positive on AMD in this thread. Can't think of a single reason why you wouldn't be! No correlation at all!

Keep in mind VFXVeteran is a graphics artist. For that kind of work, more VRAM and stronger RT features are probably preferred in visual effects suites. IIRC the 3090 is mainly targeted at graphics production suites, not gaming per se.

Overall I'm really happy with these AMD announcements. Gotta give RedGamingTech some props: he was pretty accurate WRT the 6000 series spec leaks without saying so much that he'd get obliterated by AMD ninjas. From what I saw, one of the GPUs is basically 2x PS5s (6900?), and the other is basically a Series X (6800 XT). So it definitely seems they leveraged the hell out of those partnerships.

Seems they're adopting DirectStorage for data I/O, similar to Nvidia, though I think they're waiting on MS's DirectStorage rather than spinning their own solution. Something else that surprised me was how heavily they emphasized DX12U support for these GPUs. Any mention of Vulkan stuff? A good chunk of the DX12U feature set also seems deeply ingrained in RDNA2's actual silicon design, which matches some of the earlier speculation about how involved MS was in the process (like bringing mesh shaders into the spec).

I didn't see any mention of cache scrubbers here, but that may be the kind of feature Sony said could be brought forward into a "future" RDNA standard, most likely RDNA3. So in that sense, yeah, PS5 would "have" an RDNA3 feature, but as something from Sony's end that gets adopted going forward, not something pulled back from AMD's RDNA3 roadmap... which fits the language Mark Cerny used back in March.

There's a chance the consoles have some Infinity Cache after all; definitely not 128 MB's worth, but maybe 32 MB at minimum. That's at least about 1 MB (minus a few hundred KB) of cache per dual CU, though it could go up a bit depending on what Sony and MS wanted. They may also have implemented it slightly differently.

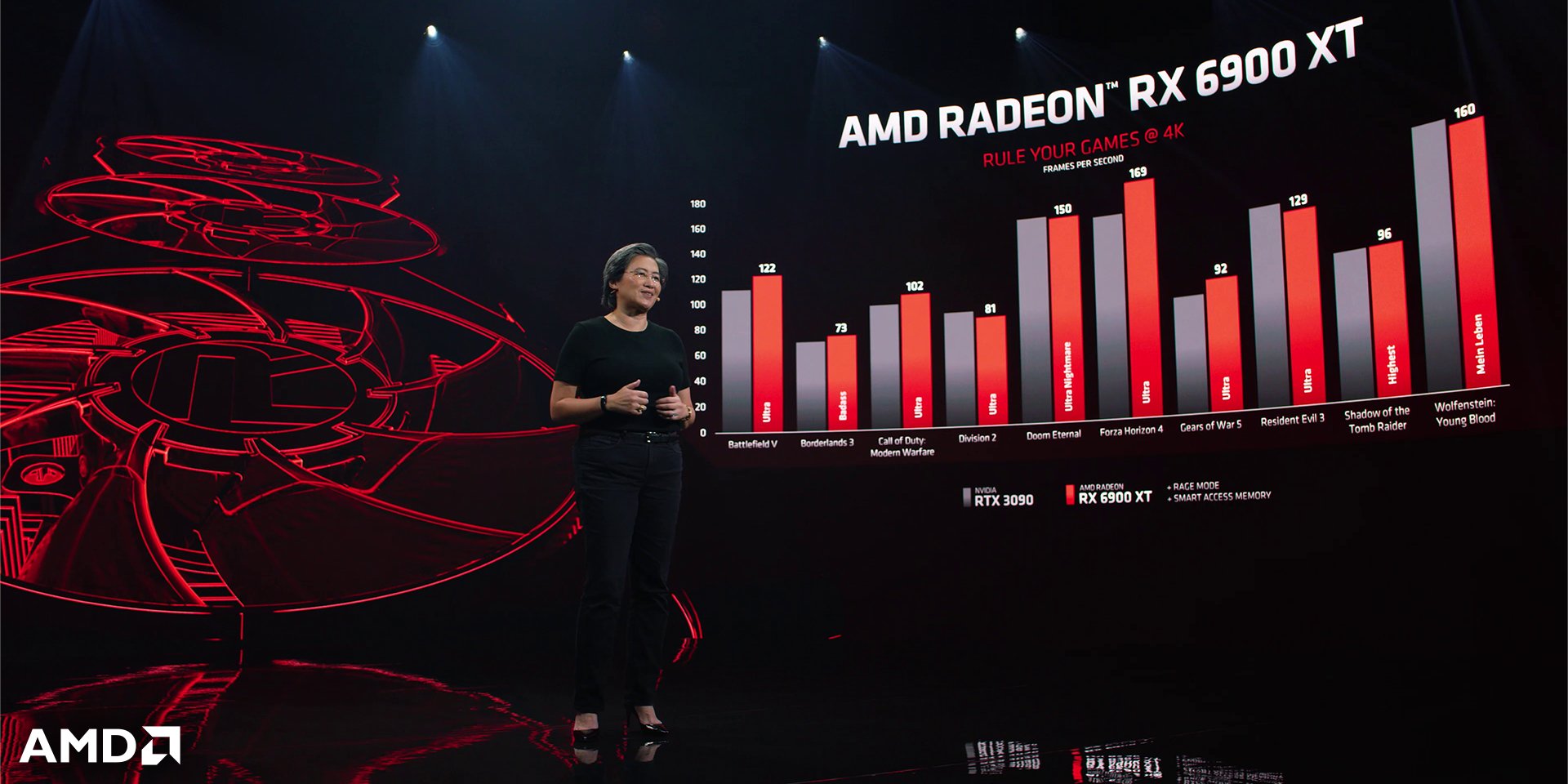

Power consumption falls in line with what I was expecting; very good figures there. For visual-suite productivity, Nvidia might still have the advantage at the highest end, but for gaming AMD is at least legitimately competitive, and beating them in a lot of cases for less money, too. That could shift somewhat once you factor in DLSS and RT for Nvidia, but the former is supported on a game-by-game basis and the latter is only really usable in measured doses without tanking performance (i.e., you still need to take a lot of artistic liberties).

"Same perf, half price" kind of messaging.

- 325W reference, ~350W board power for fancy AIB OC models (ASUS STRIX et al.)