Yeah, for gaming, faster RAM is better than quad channel. With quad channel, the pipe is wider, but it isn't faster. Dual channel is wide enough; speed is what you need. And indeed, Skylake and Kaby Lake scale nicely in most games with faster RAM.

This is overblown. The average size of data being accessed at any given time will hit cache or be quickly placed into cache.

There are probably more reasons for this, but all answers point to quad-channel bandwidth meaning nothing for gaming.

AMD Ryzen Thread: Affordable Core Act

- Thread starter ·feist·

That said, Intel's 6- and 8-core CPUs show much better scaling.

Is it likely for the gap between the Intel 6+ core and Ryzen to narrow with the potential updates to OS/BIOS/games(?), or can that gap only be filled by targeted optimizations that need to be added while in development?

Ryzen is behind in both IPC and clocks to a 7700k, but I am willing to accept the poor performance in current games if Ryzen can, going forward, offer gaming performance closer to the 6900k in computerbase's review.

AstroNut325

Member

I'm not sure I understand. First, Ryzen isn't performing poorly in games. Second, that chart you quoted, based on my understanding, shows a lower and flatter line for the 1800X compared to the 7700K. And to my understanding, that's more ideal. Also, if AMD was able to beat the 6900K in everything, I'm not sure they would be charging $500 for it.

For reference, an i7-2600K at 4.2GHz with DDR3-2133 RAM scores 31.0 FPS in this test, and an i7-7700K with DDR4-3733 RAM scores 56.5 FPS.

http://techreport.com/review/31410/a-bridge-too-far-migrating-from-sandy-to-kaby-lake/2

But ARMA 3 is basically a single-threaded game so that's a worst-case scenario for Ryzen.

AMD contacted reviewers about this, so they would have known about it.

Disabling power-saving features would also benefit Intel processors though.

I'm still not sure what would be best for testing either:

Do you test the CPU with power-saving features disabled, or do you test it in the normal state which the majority of users are going to have it set to?

With my 2500K, I disabled the power-saving features because it does have a noticeable impact on game performance since it's so old now. However part of the reason I'd want to upgrade would be the huge strides that have been made in power savings. Even having the power savings options enabled doesn't save much on this CPU.

But Intel has been working really hard to improve that, starting with Skylake.

With Kaby Lake, they can reach maximum performance from idle in only 15ms, compared to almost 100ms in older CPUs.

Frametimes in percentile are a better way to characterize game performance.

Measurements are in milliseconds so lower is better, and so is a flatter line.

What that graph shows is that the 1800X performs better than the 7700K (lower line) and that the 6850K and 6900K perform almost identically except in the worst-cases, where the 6900K performs marginally better. (minimum framerate would be higher)

It's a much better look at performance than just the three max/avg/min framerate numbers many places post - if you even get that. A lot of sites only post averages.
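To make the percentile idea concrete, here is a minimal sketch of how a "frametimes in percentile" figure can be derived from a list of per-frame times. The two captures are made-up numbers, not taken from any review, and the nearest-rank method is just one reasonable choice; outlets may compute their percentiles differently.

```python
import math


def frametime_percentile(frametimes_ms, pct):
    """Nearest-rank percentile: the frame time that pct% of frames are at or below."""
    ordered = sorted(frametimes_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical captures, 100 frames each (illustrative numbers only).
steady = [16.0] * 95 + [17.0] * 5   # consistent pacing
spiky = [14.0] * 95 + [50.0] * 5    # faster on average, but with stutter

for name, run in (("steady", steady), ("spiky", spiky)):
    avg_fps = 1000 * len(run) / sum(run)
    p99 = frametime_percentile(run, 99)
    print(f"{name}: avg {avg_fps:.1f} FPS, 99th percentile {p99:.1f} ms")
```

In this example the spiky run wins on average FPS, yet its 99th percentile frame time is roughly three times worse, which is exactly what an average-only chart would hide.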

EDIT: Looking over the "Frametimes in Percentile" graphs from Computer Base really shows that games are starting to benefit from having 6 cores now.

If the 7700K is faster, the 6850K is still performing very close to it. However there are some games where the 6850K is performing noticeably better than the 7700K now.

8 cores don't seem to bring much improvement over 6 cores though, and can sometimes perform considerably worse than either the 7700K or 6850K.

Really interesting results for Rise of the Tomb Raider there too. It clearly demonstrates how DX12 significantly smooths out the gameplay experience even if overall performance is slightly lower.

In addition to what Paragon said, frametime percentiles are, in my opinion, the way that game benchmark results should be presented. More than any other objectively and accurately measurable metric, they give you an idea of how smooth a game actually feels to play.

Thanks Paragon and Durante for answering my question

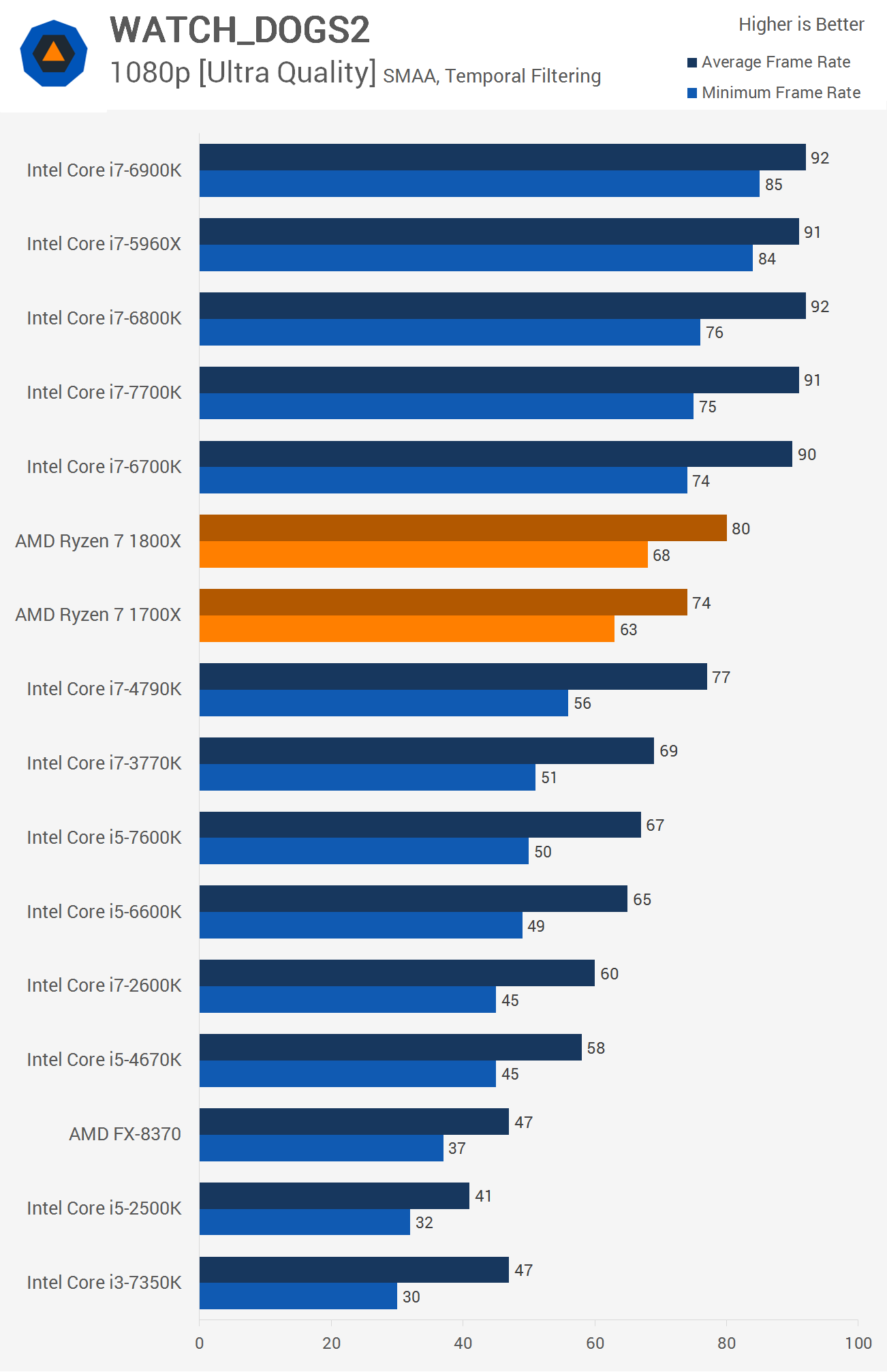

Not many outlets are using Watch Dogs 2 in their tests, but the few I've seen other than ComputerBase have the 7700K showing better results than the 1800X.

For example:

http://techreport.com/review/31366/amd-ryzen-7-1800x-ryzen-7-1700x-and-ryzen-7-1700-cpus-reviewed/8

Though in this test, the 7700K system has faster memory (3866 vs 2933).

On another note, the 5960X has slower memory and it's also doing better (2400). ¯\_(ツ)_/¯

Ah well, I'm really curious on what Digital Foundry's results will be, if they do videos on Ryzen.

FX8350 vs R7 1800X by Wccftech

More benches @ http://wccftech.com/ryzen-fx-performance-gains-vishera/

GPU: GTX 1080 Founders Edition

FX Test System

- CPU: FX 8350 w/ Wraith
- Memory: 16GB Corsair Vengeance LP DDR3 1866
- Motherboard: Asus 970 Aura/Pro Gaming
- Storage: 480GB OCZ Trion 150 SSD
- Power Supply: Corsair AX860i

Ryzen Test System

- CPU: Ryzen 7 1800x
- Memory: 16GB Corsair Vengeance LPX DDR4 2400
- Motherboard: MSI X370 Xpower Titanium
- Storage: 480GB OCZ Trion 150 SSD
- Power Supply: Corsair AX860i

Conclusion

A long way and time have things come since the introduction of the FX 8350 and it shows. The Ryzen 7 1800x is far from an inexpensive chip bringing itself to the market at $499, but if the eight cores and sixteen threads is what you're after, they can be had for as low at $329 in the form of the Ryzen 7 1700, which is my personal pick of the bunch. But it is clear that a lot has come with Ryzen, a solid performing workhorse that has brought some arguable benefits to the gaming scene. Was the wait worth it? Sure, if you've got the money and the need. But me personally, I'm suggesting that those looking to build a sweet Ryzen gaming rig hang tight while the early bugs and issues are getting ironed out for now. I have a feeling that the Ryzen 5 1600x (6-core/12-thread) and Ryzen 5 1500x (4-core/8-thread) are going to be the real winners in this lineup as we're likely to see similar gaming performance for a whole lot less money. But so far Ryzen is performing on all cylinders right where I had personally anticipated. Now, if they could just get the memory speeds up.

I'm not sure I understand. First, Ryzen isn't performing poorly in games. Second, that chart you quoted, based on my understanding, shows a lower and flatter line for the 1800X compared to the 7700K. And to my understanding, that's more ideal. Also, if AMD was able to beat the 6900K in everything, I'm not sure they would be charging $500 for it.

I guess what I am really trying to figure out is: if Ryzen can compare with the 6900k in some heavily multi-threaded production workloads, will it ever be able to compare to the 6900k in a heavily multi-threaded game too?

Do games need to have Ryzen in mind from the start to fully utilize its power or, assuming they already perform best on an Intel 6c/12t+ CPU, can updates compensate?

'poor performance' was a bad choice of words. I meant I was fine with less performance than a 7700k in games that don't go beyond 4 core and rely more on single threaded performance.

Who knows. This is something that we'll really just have to wait and see.

How bottlenecked is even something like a 2600k if you're doing 60fps or less in modern games? How relevant are these CPU gaming tests, really, if you plan on upgrading within the next 4 years?

I feel like for the vast, vast majority of people, none of this stuff is going to matter, and they should look at frame times and non gaming usage.

Thanks Paragon and Durante for answering my question. So I guess that with time AMD's 8-core CPUs will have a "smoother" frame rate than Intel's 4-core CPUs?

With time? Computerbase has the 1800X being smoother than the 7700K right now already...

I agree as to the significance of the WD2 results, but your remark about the 7700k doesn't hold true in frametimes at Computerbase at least:

...at which point we can close the circle of commonly used gaming benchmarks being completely useless since they are misleading. And not only because many of them are actually disguised GPU benchmarks instead of tests of the CPU.

Sqrt_minus_one

Member

That's a single benchmark.

Not many outlets are using Watch Dogs 2 in their tests, but the few I've seen other than ComputerBase have the 7700K showing better results than the 1800X.

For example:

http://techreport.com/review/31366/amd-ryzen-7-1800x-ryzen-7-1700x-and-ryzen-7-1700-cpus-reviewed/8

Though in this test, the 7700K system has faster memory (3866 vs 2933).

On another note, the 5960X has slower memory and it's also doing better (2400). ¯\_(ツ)_/¯

Ah well, I'm really curious on what Digital Foundry's results will be, if they do videos on Ryzen.

Results are all over the place for this chip. Computerbase's Watch Dogs 2 performance appears at odds with both Gamers Nexus and techreport, which both appear to put the 7700k ahead. I assume they are simply testing in different sections, or there is some kind of BIOS issue.

Techspot is another one (though all they have is average and minimum framerate results).

http://www.techspot.com/review/1345-amd-ryzen-7-1800x-1700x/page4.html

Hard to find more results for comparison since there aren't many reviewers that used Watch Dogs 2 in their testing.

Unknown Soldier

Member

That's not really true though?

I paid around 350 for the 5820k in 2014. Now the Ryzen 7 1700 (never mind X or 1800) is 360.

I paid USD$350 for my 5820K a year and a half back. Where I got the great deal was on the motherboard, only $130 after rebate. Also at the time DDR4-3200 was pretty cheap, got 4x4 GB G.skill sticks for $90 or something like that. Overall I feel like I got a good deal but 5820K is 6 cores and Ryzen 7 is 8 cores. You get more cores per dollar from Ryzen.

I agree as to the significance of the WD2 results, but your remark about the 7700k doesn't hold true in frametimes at Computerbase at least:

That said, Intel's 6- and 8-core CPUs show much better scaling.

HEDT is badass as fuck because it's built off the server chipsets. People don't believe me when I say quad-channel makes a difference. If it didn't matter, why would Intel support it? They aren't dummies and supporting quad-channel drives up the cost of both CPU package and motherboard so they wouldn't be doing it for no reason.

That's a single benchmark.

Computerbase did "frametimes in percentile" for all games they tested. In BF1 DX11 the 1800X even equals the 6850K.

If you mean no other sites did 99th percentile benchmarks, then we are walking in a circle again: the whole thread deteriorated once gaming benchmarks arrived, since neither the readers nor many of the testers apparently know what is being tested. They essentially tested GPUs, the state of BIOS versions, drivers, and OS support (or the lack thereof) for an all-new uArch, platform and chipset, and often drew imaginary, definitive conclusions about the suitability of the CPU for gaming.

I don't think anyone is suggesting that there is no reason to use quad-channel memory ever.

But I've yet to see any gaming tests where it's made a difference - especially when pitted against a higher-clocked dual-channel memory setup - since X99 doesn't support anything like the speed that Z270 does.

Gaming seems to rely more on data rate and latency than bandwidth.

With Intel moving from 4-channel memory to 6-channel memory on LGA-3647, and AMD using 8-channel memory with Naples (server Zen CPUs), there are clearly applications for it.

Though I'd like some of the additional features supported by the upcoming LGA-2066 (X299) or LGA-3647 platforms, I suspect that the top gaming CPU released this year is going to be the 6-core Coffee Lake chip, as it should have high IPC and high clockspeeds - but with a limited number of PCIe lanes, only dual-channel non-ECC memory, and all the other compromises you get for buying into the consumer platform vs HEDT/server.

While you get 6 channel memory with the server parts, the fastest ECC memory I've been able to find is DDR4-2666 CL19, and you can't overclock Xeons either, so I expect that those parts won't be great performers in games despite being very powerful for certain types of application.
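For anyone wondering what "latency" means in numbers here, below is a rough sketch of the usual first-word CAS latency arithmetic: CAS cycles divided by the memory clock, which is half the DDR data rate. The DDR4-2666 CL19 figure is the ECC kit mentioned above; the DDR4-3200 CL16 kit is a hypothetical comparison point, not something from this thread. It ignores everything else that contributes to real-world memory latency (memory controller, fabric, other timings).

```python
def cas_latency_ns(data_rate_mts, cas_cycles):
    """CAS latency in nanoseconds.

    DDR transfers twice per clock, so the clock in MHz is data_rate / 2
    and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000 / data_rate_mts


# ECC kit from the post above.
print(f"DDR4-2666 CL19: {cas_latency_ns(2666, 19):.2f} ns")

# Hypothetical enthusiast kit, included only for comparison (an assumption).
print(f"DDR4-3200 CL16: {cas_latency_ns(3200, 16):.2f} ns")
```

That works out to about 14.25 ns for the ECC kit against 10 ns for the hypothetical faster kit, which is the kind of gap the post is pointing at.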

Thanks Paragon and Durante for answering my question. So I guess that with time AMD's 8-core CPUs will have a "smoother" frame rate than Intel's 4-core CPUs?

Again, it depends on the game. If the 4-core is hitting 90%+ utilization, it will have bigger frame drops than a CPU with more cores and similar IPC, if the game scales with them. Those frame drops will be felt a lot more than a slightly higher average frame time on a higher-core-count CPU that sticks to that average more consistently.

Are there any other tests on older games like Starcraft 2, Xcom2 and Kerbal Space Program?

PCGH tested Starcraft 2.

The frametime graph would show this. If it had big drops/stuttering problems, you would see a sharp spike at the end of the graph. That's why it's arguably the best way to present benchmarks.

Thanks, that doesn't look good :/

Computerbase did "frametimes in percentile" for all games they tested. In BF1 DX11 1800X even equals 6850K.

Yeah, but for some reason their 7700k performs worse than on any other site... absolutely no one else shows the 7700K with worse frame times than Ryzen. Also, for some strange reason it looks like they are testing with a 980 Ti.

The hell is wrong with that Starcraft 2 benchmark? None of those CPUs should be performing that badly.

Don't underestimate Starcraft 2. I remember it being very heavy on the CPU when there's lots of units in play. We also don't know what they did. They could've just spawned 2000 units.

Evolution of Metal

Member

I know for sure that they haven't benchmarked the actual game, testing the game in some sandbox mode is unproductive and it doesn't represent the performance of the game. Even with garbage SC2 engine you shouldn't get sub-30 FPS on a modern day CPU on low settings.

michaelius

Banned

Starcraft 2 is one of the worst offenders in the "doesn't scale to multiple threads" category.

I feel like for the vast, vast majority of people, none of this stuff is going to matter, and they should look at frame times and non gaming usage.

For typical everyday usage, a low-voltage dual core with HT is enough.

JohnnyFootball

GerAlt-Right. Ciriously.

Went to Microcenter and almost bought a 6700K for $280 and a $50 open box Z170 motherboard and some $100 DDR4. $430 minus tax.

Decided to hold off. Skylake is still excellent but I am now determined to wait and see on the 1600X.

Don't underestimate Starcraft 2. I remember it being very heavy on the CPU when there's lots of units in play. We also don't know what they did. They could've just spawned 2000 units.

Nah, Blizzard games before Overwatch are notoriously poorly threaded.

TC McQueen

Member

Probably should've picked up the RAM.

Coulomb_Barrier

Member

It is, however, worth pointing out that Ryzen 7 1800X had around 70% spare CPU cycles on its SMT threads and around 40-60% spare on its 8 cores (GTA prefers actual cores to threads). So, if you want to stream GTA V over Twitch, you have plenty of spare processing horsepower to do so whereas the 6C12T 6800K is slightly more heavily loaded and a Skylake i5 will be pushing well above 90% CPU load.

http://www.kitguru.net/components/cpu/luke-hill/amd-ryzen-7-1800x-cpu-review/9/

Reading Kitguru's review. Very promising as for GTA5 a Skylake CPU is nearly 100% loaded, but Ryzen is about 50% loaded with only 30% of its SMT utilised.

It's clear there is huge room for the gaming performance to improve, so that side of the CPU is hardly a dud at the hardware level. With 100% utilisation of the CPU during gaming (which will likely never happen), it would blow right past a 7700K.

The question is how much of this spare performance can be unlocked to bring the performance up to where it should be. There's huge potential just sitting there.

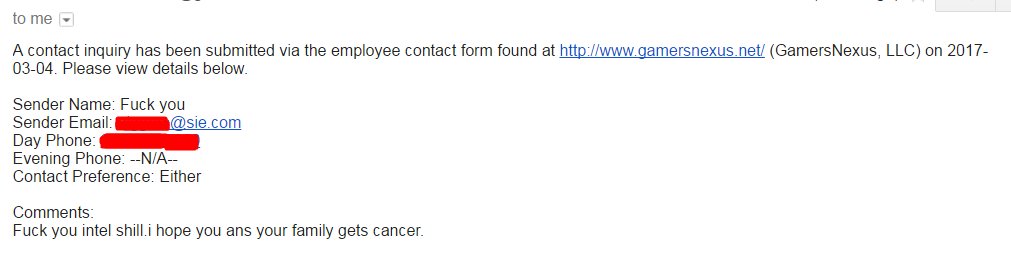

Yikes! Steve from Gamers Nexus is getting death threats for his Ryzen review - https://mobile.twitter.com/GamersNexus/status/838221363991166981

Coulomb_Barrier

Member

That's not a death threat, is it? That's just a d#ck.

Can we keep this nonsense out of here.

michaelius

Banned

Business as usual with AMD fans. Every portal that dares to criticize their company is an Nvidia/Intel shill, no matter how legitimate the issue raised.

brain_stew

Member

Not many outlets are using Watch Dogs 2 in their tests, but the few I've seen other than ComputerBase have the 7700K showing better results than the 1800X.

For example:

http://techreport.com/review/31366/amd-ryzen-7-1800x-ryzen-7-1700x-and-ryzen-7-1700-cpus-reviewed/8

Though in this test, the 7700K system has faster memory (3866 vs 2933).

Ah well, I'm really curious on what Digital Foundry's results will be, if they do videos on Ryzen.

Given that a 7700K will run 3866mhz memory more often than Ryzen will accept 2933mhz memory, it seems a fair comparison to me. Both platforms are being shown in the best light.

There's definitely some potential here for a good gaming CPU. Another 3 months to work out the UEFI, Windows, SMT and memory issues and a $200-$250 6C12T unlocked Ryzen 5 that can overclock to 4ghz on high end air could still be great competition for the i5 line in gaming rigs.

Is it likely for the gap between the Intel 6+ core and Ryzen to narrow with the potential updates to OS/BIOS/games(?), or can that gap only be filled by targeted optimizations that need to be added while in development?

Ryzen is behind in both IPC and clocks to a 7700k, but I am willing to accept the poor performance in current games if Ryzen can, going forward, offer gaming performance closer to the 6900k in computerbase's review.

We don't really know at this point if it's primarily a software or a hardware issue. In any case, I would never expect, or make purchasing decisions based on, the idea of most PC games actively optimizing for any given CPU architecture.

Though in this test, the 7700K system has faster memory (3866 vs 2933).

On another note, the 5960X has slower memory and it's also doing better (2400). ¯\_(ツ)_/¯

The 5960X has quad-channel memory. It has a significantly higher memory bandwidth even at lower clocks.
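The bandwidth point is easy to check with back-of-the-envelope arithmetic: theoretical peak bandwidth is data rate times 8 bytes per 64-bit channel times the number of channels. A quick sketch, assuming the memory speeds quoted above and that every channel is populated (the helper name and the config labels are mine, and real-world throughput will be lower than these peak figures):

```python
def peak_bandwidth_gbs(data_rate_mts, channels, bus_bytes=8):
    """Theoretical peak bandwidth in GB/s for DDR memory.

    data_rate_mts: transfers per second in millions (e.g. 2400 for DDR4-2400)
    bus_bytes: bytes per transfer per channel (64-bit channel = 8 bytes)
    """
    return data_rate_mts * bus_bytes * channels / 1000


# Speeds as quoted in the thread; channel counts per platform.
configs = {
    "5960X, quad-channel DDR4-2400": (2400, 4),
    "7700K, dual-channel DDR4-3866": (3866, 2),
    "1800X, dual-channel DDR4-2933": (2933, 2),
}

for name, (rate, channels) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(rate, channels):.1f} GB/s")
```

On paper that is 76.8 GB/s for the quad-channel setup against roughly 61.9 GB/s and 46.9 GB/s for the two dual-channel ones, so the 5960X does have the widest pipe despite the lowest clocks. Whether games actually use that bandwidth is a separate question.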

I can't believe Gamers Nexus is blowing up over this AMD stuff lol. I've been watching his videos since pretty much the first time he uploaded to YouTube. He is not a biased shill. He does amazing work, much better than "bigger" YouTube channels.

Thanks to the Internet, kids can talk all the shit they want; best to just ignore it.

I know for sure that they haven't benchmarked the actual game, testing the game in some sandbox mode is unproductive and it doesn't represent the performance of the game. Even with garbage SC2 engine you shouldn't get sub-30 FPS on a modern day CPU on low settings.

It's completely ok if you only want to know the differences between cpus in that scenario.

brain_stew

Member

Only started watching his videos over the last couple of months but I've found it to be among the best PC YouTube channels. His methodology for gaming tests is spot on and I'm pleased he's sticking by his testing.

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572/page-8#post-38775732

Windows 10 is currently not working well with SMT compared to Windows 7. We'll see if the next OS patch addresses this.

LelouchZero

Member

I'm not too fond of how long he tests for, but I do like the methodology of taking into account the 1% and 0.1% lows.

CPU Test Methodology

Each game was tested for 30 seconds in an identical scenario, then repeated three times for parity.

When I do benchmarking, I test for no less than 1 minute and 30 seconds. 30 seconds is not enough time to test games with many variables at play that can impact performance, in titles such as GTA V, Watch Dogs 2 and Battlefield 1.
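As a rough illustration of what "1% and 0.1% lows" can mean, here is a minimal sketch using one common convention: the average FPS over the slowest 1% (or 0.1%) of frames in a capture. That convention is an assumption on my part, since outlets define these figures in slightly different ways, and the capture below is made-up data.

```python
def low_fps(frametimes_ms, worst_fraction):
    """Average FPS over the slowest `worst_fraction` of frames (one common convention)."""
    ordered = sorted(frametimes_ms, reverse=True)      # slowest frames first
    count = max(1, round(len(ordered) * worst_fraction))
    worst = ordered[:count]
    return 1000 * len(worst) / sum(worst)


# Hypothetical 1000-frame capture: mostly 10 ms frames, a few hitches.
capture = [10.0] * 990 + [40.0] * 9 + [100.0]

average = 1000 * len(capture) / sum(capture)
print(f"average:  {average:.1f} FPS")
print(f"1% low:   {low_fps(capture, 0.01):.1f} FPS")
print(f"0.1% low: {low_fps(capture, 0.001):.1f} FPS")
```

It also shows why run length matters for these metrics: a 30-second run at 60 FPS is only about 1800 frames, so under this convention the 0.1% low rests on roughly two frames, and a single hitch can swing it a lot.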

lordfuzzybutt

Member

Business as usual with AMD fans. Every portal that dares to criticize their company is Nvidia/Intel shill no matter how legit issue is raised.

How about not lumping all "AMD fans" together? That shit is not okay, nor is this kind of generalisation.

I'm sure if you hit him up he'll address why he tests for 30 seconds (if that is not a typo). I'd also like to see the tests last for 2 mins or so, to be honest.

Sqrt_minus_one

Member

I agree with you, but a lot of sites do this (looking at Computerbase's frametime and frame rate graphs indicates it's 30 seconds there as well; techreport is about 60 secs). IMO it should ideally be a couple of minutes, combining mostly intense and not so intense areas.

General Lee

Member

It really depends on what you want to accomplish with the tests. You can do testing in non-demanding areas and get high numbers and that might be 90% of how one might spend gaming, but once you get to the 10% that result won't be useful. That's why it's better to just take the most demanding area of a game and test that, and this might be different for GPU and CPU.

Take for example ROTTR Geothermal Valley, which is highly CPU bound in the village, and highly GPU bound right below the village in the forest. You could do a 2 min run through both and get an average, but that result would not really give you a very good description of the performance since it could be considerably off in both cases depending on the hardware bottleneck. It's important to disclose what sort of benchmark scene is used because of this reason. A 30s bench through the village gives a better indication of what sort of performance benefits one might get from a 7700K over a 1700 in that situation.

A frametime graph with video like DF does is really the best way.
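The point about averaging over a CPU-bound and a GPU-bound section can be shown with a toy calculation. All numbers below are hypothetical, picked only to illustrate the effect: two CPUs differ in the CPU-bound village but are both capped by the GPU in the forest.

```python
def average_fps(segments):
    """Overall FPS for a run: total frames divided by total seconds.

    segments: list of (fps, seconds) pairs, one per section of the run.
    """
    frames = sum(fps * seconds for fps, seconds in segments)
    seconds = sum(seconds for _, seconds in segments)
    return frames / seconds


# Hypothetical results: 60 s in the village (CPU-bound), 60 s in the forest (GPU-bound).
slower_cpu = [(60, 60), (90, 60)]
faster_cpu = [(80, 60), (90, 60)]

village_gap = faster_cpu[0][0] / slower_cpu[0][0] - 1
combined_gap = average_fps(faster_cpu) / average_fps(slower_cpu) - 1

print(f"village only: {village_gap:.0%} faster")
print(f"2 min combined run: {combined_gap:.0%} faster")
```

With these made-up figures a 33% CPU advantage in the village shrinks to about 13% once the GPU-bound forest is folded into the same average, which is why disclosing the benchmark scene matters.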

JohnnyFootball

GerAlt-Right. Ciriously.

Probably should've picked up the RAM.

I've thought about building a system by buying a little bit at a time, but I always decide against it because you could end up buying a defective part and not know it was defective until well past the return window.

The 5960X has quad-channel memory. It has a significantly higher memory bandwidth even at lower clocks.

Completely forgot about that. <-<;

Based on techreport, the 1800X is a bit better than the 4790K in gaming overall (which has slower memory but higher clock I guess).

It's just that the 1800X either failing to surpass or barely surpassing (depending on the reviewer) the 7700K in a well-multithreaded title like Watch Dogs 2 makes the 7700K look like a more future-proof option for gaming, despite the higher core count on Ryzen.

Really hoping the 1600x knocks it out of the park. It's either that or fork out extra money for a 7700k.

Taking into consideration that the 1600X will have the same clocks as the 1800X, the only way it'll perform better is if the updates/patches AMD talks about actually make a difference.

Coulomb_Barrier

Member

Yes but look at the details. The Skylake and Kaby Lake CPUs are almost at max load when running these types of games (see my above post) whereas the Ryzen is only at 50% load on all its cores in GTA5. So whereas Kaby Lake and SL won't get much faster, there is a large amount of headroom left with the Ryzens.

Wasn't aware of that, but even so, there's no guarantee that AMD will deliver on their promises that it'll get better with patches. For all we know, those could end up being just a minor improvement.

If they end up improving a lot, that'll be good for everyone, especially since Intel does need competition, but until then, Ryzen can't really be recommended for those focused on gaming based on promises, in my opinion.

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572/page-8#post-38775732

Windows 10 is currently not working well with SMT compared to Windows 7. We'll see if the next OS patch addresses this.

Ouch, so it's an OS-level regression. As if people need any more reasons not to move to W10...

General Lee

Member

It's better to let the platform mature and work out the kinks. If you've got the luxury of time, waiting for Skylake-X/Kaby-X or even Coffee Lake or Zen 2 is always an option. Hedging your bets on supposed unused potential is always risky, especially when we're talking of devs utilizing a GPU/CPU that's not from the market leader. Fiji had lots of potential too.

Unknown Soldier

Member

People who already made the move to Haswell-E and Broadwell-E are going to be set for quite a long time it seems. Especially since the kinds of people who are on HEDT aren't exactly gaming at 1080p. It will probably be years before GPUs will catch up to the point where my 5820K will be bottlenecking anything at 4K resolution. Being on quad-channel memory means that I won't fall behind either if games continue to become more multithreaded and chew up more memory bandwidth.