Negotiator

Banned

PS2's exotic philosophy is the total antithesis of nVidia's philosophy.

Dude, I'm not surprised by your disingenuous behaviour. I remember your comments, and you did the same thing in our argument about PS2 vs Xbox, when you tried to present facts in such a way as to make the inferior console look superior. You wanted to tell people that the PS2 hardware could render the same effects in software and outperform the more powerful console in polycounts, even though it was RAM-limited, and you couldn't even grasp that you need memory to be able to use higher polycounts in the first place. Even developers who made games on all platforms said that the Xbox had the upper hand when it came to hardware and polycounts, whereas the PS2 had the upper hand in other areas (fillrate), but you still thought Sony's console was superior. I had to link internal reports by Sony to show people the real polycounts of PS2 games, and the numbers were nowhere near what you claimed. That finally shut you up.

Brute force (eDRAM with an insane 2560-bit bus @ 48 GB/s) vs. efficiency (hardwired circuitry, compression, etc.).
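The 48 GB/s figure is just bus width times clock. A quick check, assuming a nominal ~150 MHz clock for the PS2's Graphics Synthesizer eDRAM with one transfer per clock (the post only gives the bus width and the total), and, for contrast, the Xbox's commonly quoted 128-bit DDR bus at 200 MHz. These are theoretical peaks only; the compression and other "efficiency" tricks on the nVidia side are not modeled.

```python
# Peak bandwidth = bus width in bytes x transfers per second.
# Assumptions (not from the post): PS2 GS eDRAM at a nominal 150 MHz,
# 1 transfer/clock; Xbox unified memory on a 128-bit DDR bus at 200 MHz
# (DDR = 2 transfers/clock).

def peak_bandwidth_gbs(bus_bits: int, clock_mhz: float, transfers_per_clock: int = 1) -> float:
    """Return theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * clock_mhz * 1e6 * transfers_per_clock / 1e9

ps2_edram = peak_bandwidth_gbs(2560, 150)   # 320 B * 150e6/s
xbox_uma = peak_bandwidth_gbs(128, 200, 2)  # 16 B * 200e6/s * 2

print(f"PS2 GS eDRAM: {ps2_edram:.1f} GB/s")
print(f"Xbox UMA:     {xbox_uma:.1f} GB/s")
```

Under these assumptions the eDRAM works out to 48.0 GB/s against 6.4 GB/s, which is the "brute force" gap in raw numbers.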

The PS2 was capable of emulating fur shaders (SotC), but the framerate was abysmal:

Shadow of the Colossus Making of (PS2 / PS3): the making of in English, with pictures (selmiak.bplaced.net)
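A common way to fake fur without programmable fur shaders is shell texturing: the same mesh is redrawn N times, each copy pushed slightly outward along its normals and drawn with a semi-transparent hair texture. Assuming SotC's fur works roughly along these lines (the post does not say so), the sketch shows why such fur is fill-bound: every shell re-covers about the same screen area, so pixel work grows linearly with the shell count. The mesh, shell count, fur length and pixel coverage are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, o: "Vec3") -> "Vec3":
        return Vec3(self.x + o.x, self.y + o.y, self.z + o.z)

    def scale(self, s: float) -> "Vec3":
        return Vec3(self.x * s, self.y * s, self.z * s)

def build_shells(positions, normals, shell_count, fur_length):
    """Return (offset vertices, alpha) for each shell; shell 0 is the skin."""
    shells = []
    for i in range(shell_count):
        # t runs from 0 at the skin to 1 at the fur tips
        t = i / (shell_count - 1) if shell_count > 1 else 0.0
        layer = [p + n.scale(t * fur_length) for p, n in zip(positions, normals)]
        alpha = 1.0 - t  # outer shells fade so strands appear to taper
        shells.append((layer, alpha))
    return shells

def shaded_pixels(covered_pixels, shell_count):
    """Each shell redraws roughly the same screen area: cost scales linearly."""
    return covered_pixels * shell_count

# Hypothetical usage: one triangle, 16 shells, fur 0.05 units long
positions = [Vec3(0, 0, 0), Vec3(1, 0, 0), Vec3(0, 1, 0)]
normals = [Vec3(0, 0, 1)] * 3
shells = build_shells(positions, normals, shell_count=16, fur_length=0.05)
print(len(shells), shaded_pixels(100_000, 16))
```

The design trades geometry and shader complexity for raw fill, which suits hardware with a huge fillrate but still multiplies the pixel workload per furry object.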

I'm not sure if the Xbox's GeForce 3-class GPU (NV2A) was capable of rendering fur shaders, but I vividly remember this GeForce 4 demo:

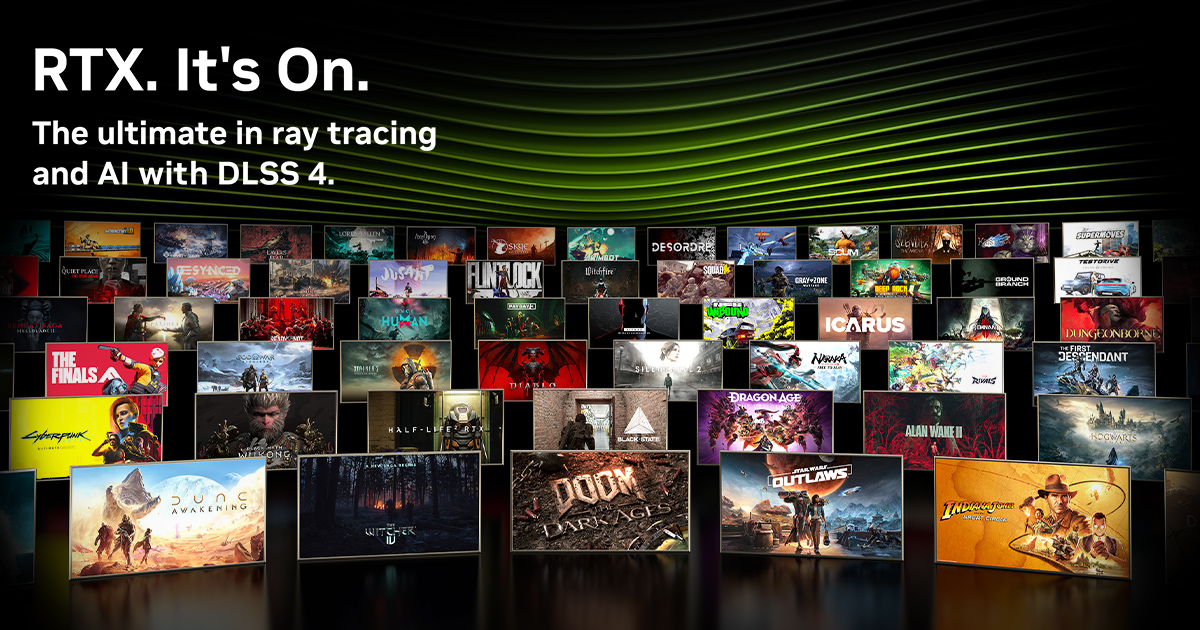

nVidia continues the efficiency philosophy with DLSS... brute-force rasterization is no more (even AMD will stop chasing that train with FSR4, aka rebranded PSSR).
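The efficiency argument behind DLSS-style upscalers is mostly arithmetic: the GPU rasterizes and shades a lower internal resolution, and a reconstruction network fills in the rest. The per-axis scale factors below are the commonly cited defaults for the DLSS presets (Quality about 66.7%, Balanced about 58%, Performance 50%, Ultra Performance about 33.3%); treat them as approximate, since games can override them, and note that the sketch ignores the cost of the upscaler itself.

```python
# Approximate per-axis render scale for DLSS presets (assumed defaults).
PRESETS = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 1 / 2,
    "Ultra Performance": 1 / 3,
}

def internal_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Resolution actually rendered before upscaling to out_w x out_h."""
    return round(out_w * scale), round(out_h * scale)

def shaded_fraction(out_w: int, out_h: int, scale: float) -> float:
    """Share of output pixels that are really shaded each frame."""
    w, h = internal_resolution(out_w, out_h, scale)
    return (w * h) / (out_w * out_h)

for name, s in PRESETS.items():
    w, h = internal_resolution(3840, 2160, s)
    print(f"{name:>17}: {w}x{h} ({shaded_fraction(3840, 2160, s):.0%} of 4K pixels)")
```

At a 4K output, Performance mode shades 1920x1080, a quarter of the pixels, which is where the headroom for other work comes from.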

I'm curious to see if the PS6 will have something like DLSS 4 + MFG (Multi Frame Generation), because I don't expect a huge bump in CPU/GPU specs (it could be a sizeable jump if they adopt chiplets instead of a monolithic APU with low yields; we'll see).
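The chiplet point comes down to yield. A textbook first-order estimate is the Poisson yield model, Y = exp(-D * A), where D is the defect density and A is the die area. The defect density and die sizes below are made-up illustrative numbers, not PS6 figures, and the model ignores packaging, interconnect cost and die-to-die overhead.

```python
import math

def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-defects_per_cm2 * area_cm2)

D = 0.1                        # hypothetical defect density, defects per cm^2
MONO_AREA = 300.0              # hypothetical monolithic APU, mm^2
CHIPLET_AREA = MONO_AREA / 2   # same silicon split into two chiplets

mono = poisson_yield(MONO_AREA, D)
chiplet = poisson_yield(CHIPLET_AREA, D)

# With known-good-die testing, a defect only scraps one small chiplet,
# so the usable fraction of wafer silicon tracks the per-chiplet yield.
print(f"300 mm^2 monolithic die yield: {mono:.1%}")
print(f"150 mm^2 chiplet yield:        {chiplet:.1%}")
```

With these inputs the monolithic die yields about 74.1% versus about 86.1% per chiplet, which is the cost lever that could make a bigger jump affordable.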