roosnam1980

Member

That's true, and they use the same engine.

Tbh it looks way worse than Arkham Knight from just a year later.

Guys, this is it. This is The Matrix Awakens moment for video game character faces. This will change gaming forever (if devs embrace it).

The Aloy comparison blew me away. WTF.

The AI is removing the subtle ND facial animations, but the face still looks great.

Bethesda games get the biggest upgrade.

Your opinion is wrong and you should be ashamed.

FF16 isn't even fully real-time; the large-scale battles use prerendered scenes, and those scenes clearly have better lighting than the normal gameplay and cutscenes.

I love futbol references!

Which is why I picked the scenes that are real-time. Or maybe you didn't even realize it was real-time and you just own-goaled yourself, lol.

Generational leap?

What's to embrace? It's FAR from real-time and still plastered with weird artifacts.

But who knows, DLSS is also a weird thing that is working pretty well now, so maybe in the future we will get techniques like that in real time.

Didn't take you for a chubby chaser. Not judging, but if you can like old fat Aloy, that's great. I just prefer my girls skinny and attractive.

That's it guys, we completely fucking lost him.

Even worse when he was bragging about vanilla Sarah not looking bad... he needed plastic-doll Sarah to realize that vanilla looked like a 60-year-old cunt.

Lol, photo mode and DoF effects add more detail to the faces. Also, that first screenshot looks hilariously bad. What are you even doing? Are you seriously making the argument that ND's cutscene models and gameplay models are the same?

Gameplay.

NPC.

Why do you always take everything as an attack?

Both Ellie and Abby have a constant resting bitch face in the game.

I'm more disturbed by Edgie's constant bitch face tbh...

I don't think he is as serious as you think, dude.

Why do you always take everything as an attack?

No, obviously the models don't look the same during gameplay as they do in scenes, but it doesn't look as bad as in your screenshot either.

Gah take ya like this made me fuckin laugh.

It's why I call the game Angry Birds.

I hope so, I like Serpiente babosa (Spanish for "slimy snake").

I don't think he is as serious as you think, dude.

We all appreciate your random GIFs and pics.

That sounds way cooler than slimysnake.

I hope so, I like Serpiente babosa.

Appreciate you posting these pics. I see the same cherry-picked shots to downplay TLOU2. It's not as good as the cinematics, but it shouldn't be; push the fidelity when you can. But credit where it's due, they look great in gameplay.

Gameplay.

NPC.

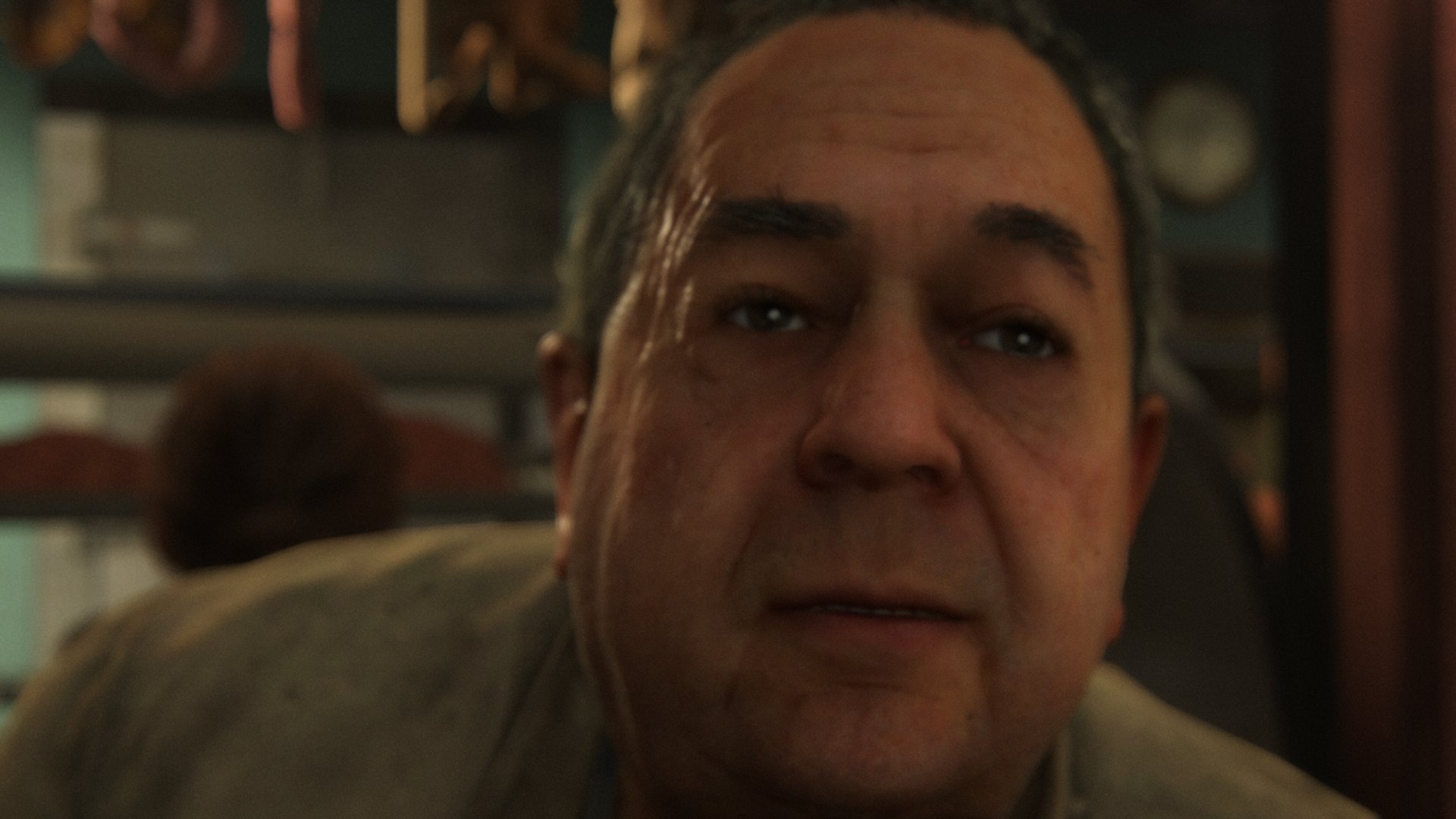

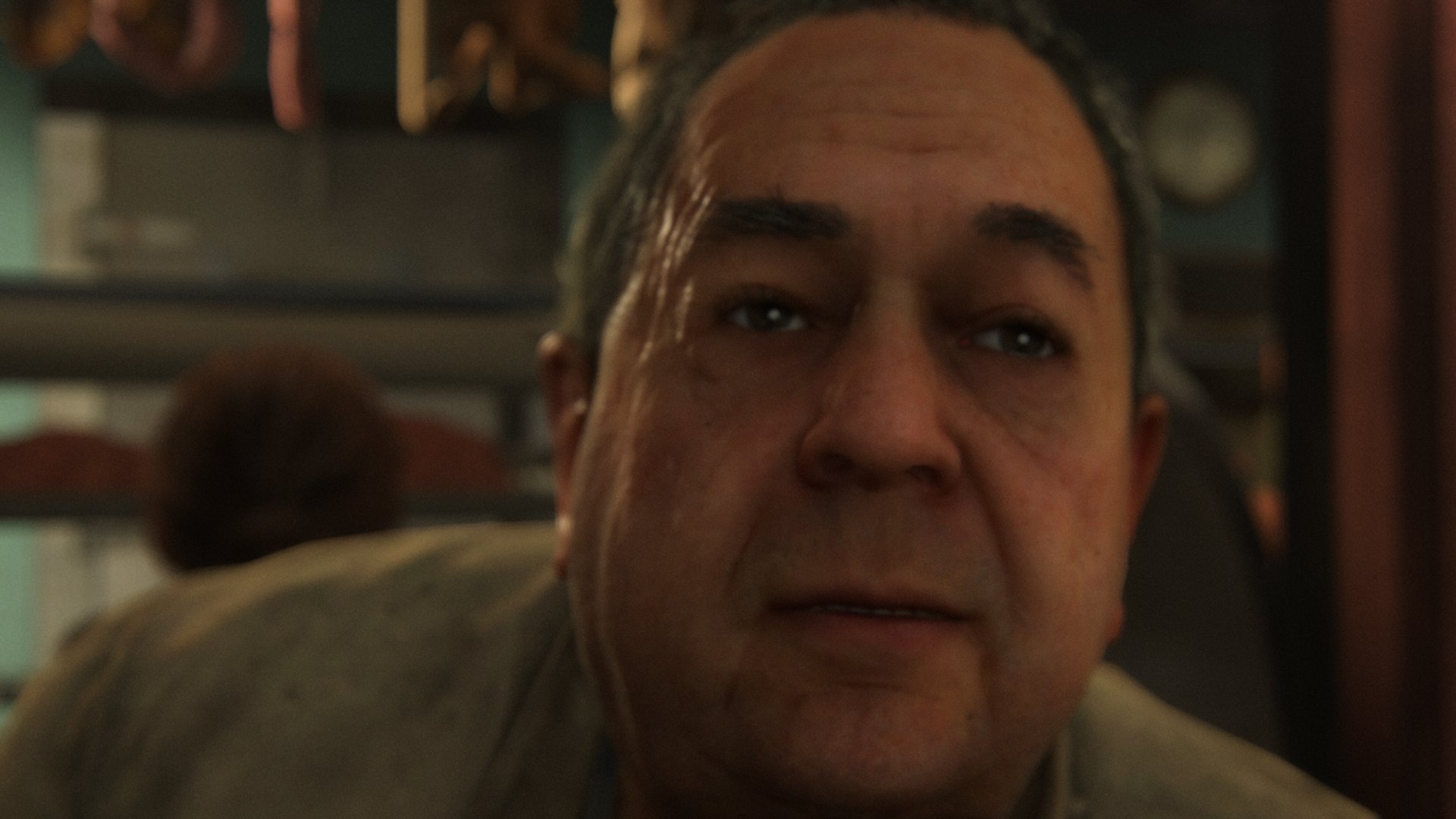

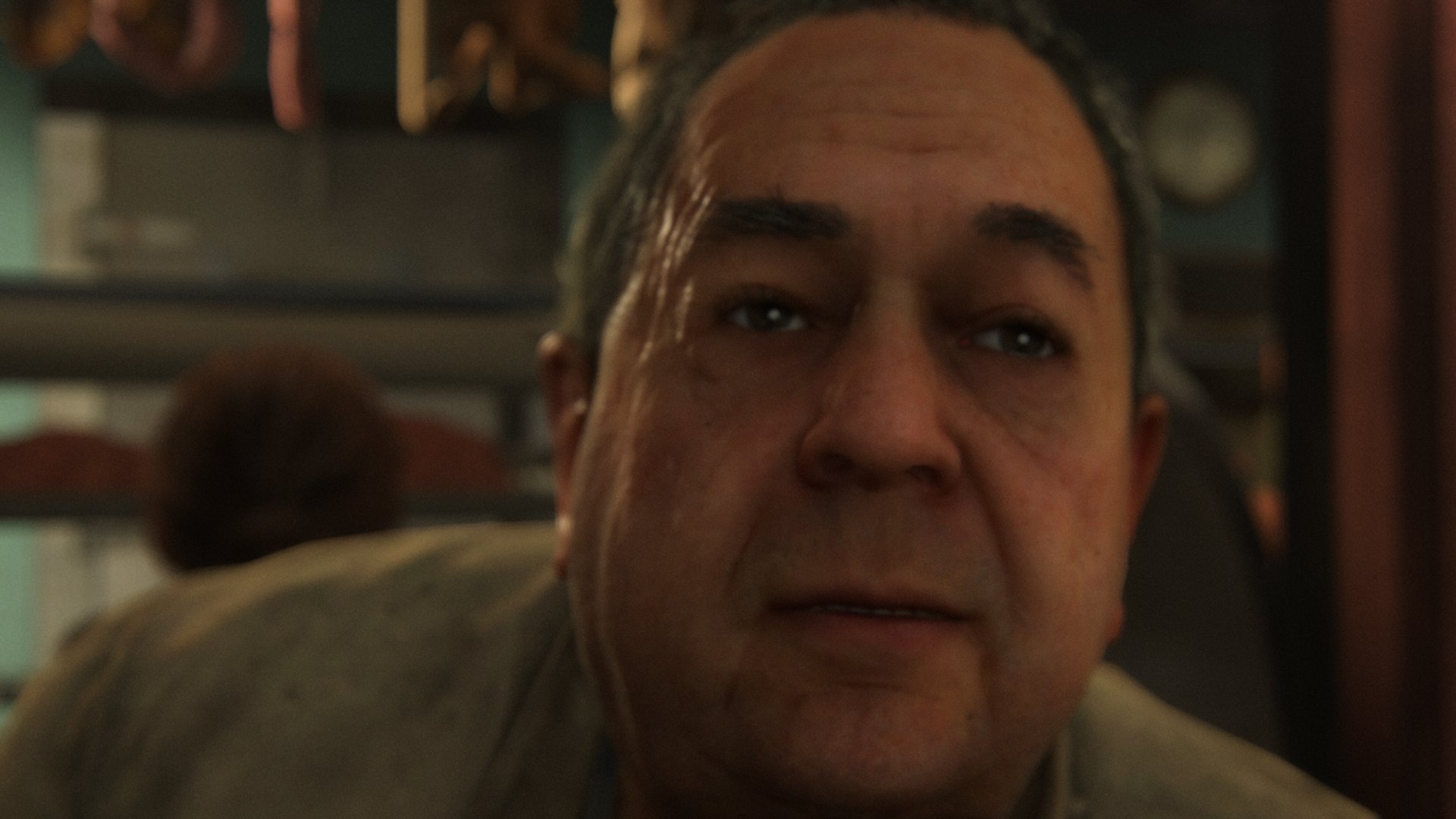

Where's the bottom image from? I don't remember that man's face.

Gameplay.

NPC.

It's a random NPC, a butcher in Jackson.

Which is why I picked the scenes that are real-time. Or maybe you didn't even realize it was real-time and you just own-goaled yourself, lol.

No, no moment in any game that's out gets even close to that lighting quality.

Final Fantasy 16's intro achieved very similar lighting quality to the SM2 CG trailer where Miles, Peter, and Venom were fighting.

They aren't using ray-traced GI or ray-traced reflections in FF16; it's most likely the usual GI probes.

You didn't, though. You picked a mix of real-time and pre-rendered in-engine cutscenes.

They even mix the two in a single shot; I suppose I can't expect you to notice.

The impressive battle sequence at the start of the game does switch to prerendered video, sandwiched between two real-time clips.

But thanks again for admitting that you are sooooo impressed with PS5 real-time graphics that you confused them with a pre-rendered video. A feat you said wouldn't be possible until PS117 or something ridiculous like that!!!

No, no moment in any game that's out gets even close to that lighting quality.

You can look at the blandest, flattest wall in that trailer and it will have far more accurate lighting.

You mean like here, when the shadow of Venom's tentacles pops in 5 frames behind schedule and Spider-Man lacks contact shadows/hardening as he's running on the building?

Who gives a shit, it's a cutscene... We spend less than 1% of our time watching cutscenes, which we all know look better than real-time gameplay.

The forest level set piece in the fire indeed looks stunning. I even posted a 4K HDR capture of it here on GAF.

Your boss does:

Nah, that's prerendered footage within the engine, much like the original The Last of Us did on PS3. They already confirmed that they are using pre-rendered graphics for the battles with hundreds of people.

I said set piece, not cutscene. The fight following the botched Abby execution (bummer) is lit using amazing lighting effects, and then you follow the torches out of the forest. That's a set piece.

The cutscene is the best-looking cutscene in the game, but I was talking about the set piece here.

I don't mind comparing cutscenes to gauge visual fidelity as long as we stick to cutscene vs. cutscene comparisons, but I think you are wrong about those FF16 cutscenes being real-time. Any cutscene with that many people on screen is not real-time, just like the ship chase later on in the story.

Sigh, you are now in denial about an objective truth.

My feelings exactly. But again, I do love my charity.

Imagine continuing to argue with someone who swears the sky is purple.

It's a plugin that devs can add to their engine, much like Havok or other third-party solutions like DLSS. It will help devs like Bethesda and BioWare produce better-looking characters and animations that their current modeling and procedural animation systems simply can't handle. The game has somewhere around 200-300 characters you interact with, each with their own lines of dialogue. Bethesda simply couldn't animate them, so they wrote a one-size-fits-all lip-sync algorithm that results in somewhat wooden performances. Now imagine if they just let AI handle it. Either way, it's being programmed, but this time the AI is doing it. No need to develop an algorithm. No need to model any of these characters. Just provide it one picture and it will do the rest. It will save time and resources.

Then there is something even I didn't think of until someone else mentioned it in the other thread. If the ML hardware is creating these faces on the fly, then you don't need the GPU to render any of these fancy graphical features; that would get offloaded to the machine-learning hardware. No idea how intensive that would be, but assuming next-gen consoles have some kind of ML hardware like the tensor cores DLSS uses, all of that processing would be done there, leaving the GPU a lot more power for devs to spend elsewhere...

I'm at Unreal Fest right now in a talk about what's coming in 5.4, and it's set to have a lot of significant performance features, but there's still no way it's as production-ready as they claimed 5 would be...

I think this talk is currently being livestreamed, FYI.

Edit: 5.4 is getting Frostbite hair!

Nah, it was another user in the main thread. I am aware that there is no plugin, but the guys in the video said that this could become one. That's the entire thesis of the video: this tech can indeed be put into games and, once integrated into the engine, could provide some stunning results.

Wait, are you talking about the Corridor Digital post or something else?

As I understand it, there's no plugin to utilize here, and it's not an animation-saving solution per se. It's a face-morphing tool (really a face-replacement app) built on InsightFace, the open-source "deep face" (not deepfake) analysis project. Picsi replaces an existing face with the photo image and a human anatomy model, and movement in the video is remapped to its particular version of the image/motion model. Everything done in the video was post-processing of existing faces (from YT clips), and CD proposes what this would mean as a plugin, but all the work would need to be done ahead of time anyway. It seems like the process would still require all the animation work, all the lighting work, and most of the modeling work (including all the musculature and phoneme mapping), but instead of finely building a face, you'd use a generic MetaHuman or whatever and then employ this AI tool to place the actor's face on top of the model.

Free AI Face Swap Online for Photos, Videos & GIFs | Picsi.Ai

Swap faces in photos, videos and GIFs instantly with Picsi.Ai. Try our free AI face swapper for realistic photo face swap, video face swap, celebrity face swap, and group face editing in seconds, powered by InsightFace technology.

www.picsi.ai

Open Source Deep Face Analysis Library - 2D&3D | InsightFace

InsightFace is an open source 2D & 3D deep face analysis library with tools for recognition, alignment, and verification, plus SDKs and face swapping solutions.

insightface.ai

Stable Diffusion XL - SDXL 1.0 Model - Stable Diffusion XL

Stable Diffusion XL or SDXL is the latest image generation model that is tailored towards more photorealistic outputs with more detailed imagery and

stablediffusionxl.com

I also don't see that you would save much on processing even if the face-replacement approach were fast, accurate, and human-like, since you would still need a face to replace. You wouldn't need to break your back making highly detailed faces with modeled pores and retinas if the replacement image were high enough in fidelity and depth (and maybe you could save resources by just using a generic avatar face that gets morphed over for all characters?), but you'd still need to put the character in the scene and have them perform. Having a face doesn't give you an actor.

Results are intriguing (there will probably be plenty of RTX Remix projects using such approaches), and we're already face-scanning real people, motion-capturing live faces, and mapping personal scans onto pre-rigged MetaHuman models anyway, so the "artistry" of game design is already on the way out in favor of machine-assisted processes. I get where the excitement is here. But I'm not seeing why Corridor Digital or you are pegging this as the future. It's one model of human facial animation, and it's going to apply that same singular, generic model to every face, laying it over an artist's or actor's portrayal of a character.

And no, you won't need a face, you just need a picture. The AI algorithm fills in the rest, so you could have a very basic mesh of like 10 polygons, like Snake in MGS1, and it will handle everything else. Yes, you will need ML hardware, but that will free up the GPU from rendering the character's face.

Because face scanning, hiring actors, and mocapping are time-consuming and expensive processes that produce results that need dozens of animators to go in and retouch. This is NOT something Bethesda can do. Anyone who has played Starfield knows it's not a space exploration game; it's a dialogue-driven game set in space. Like 60% of the game is dialogue trees, and there is just no way they could've mocapped it the way Naughty Dog mocaps 4-5 hours of cutscenes and then spends 3 years hand-keying the facial animations.

Seems to not be fully livestreamed?

Still wouldn't say the resolution; it's sub-1080p for sure in performance mode. The good news is next gen can maybe get back to aiming for 1080p again, lol.

Good watch/interview. Really highlights the importance of 30fps for any dev that wants to push fidelity.

Straight up says "60fps is a huge, huge compromise"

In regards to games not being 4K60:

"Would you rather us make the game on the weakest platform, or would you rather us take advantage of the most powerful platforms?"

Talks about how the word "optimize" is thrown around by frame-rate warriors as if it's some type of magic.

They were able to get the performance mode to run "mostly" at 60. Lots of sacrifices were made in that mode. Their focus was absolutely on getting a rock-solid 30.

"I'm a big performance mode guy, but I play this at 30 because it's so smooth and the visuals are just incredible"

Playing this at 60fps sounds like a lesser experience.
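For context on why the developer calls 60fps "a huge, huge compromise", here is a minimal Python sketch of the frame-time arithmetic: the time available to simulate and render each frame is 1000 ms divided by the target frame rate, so moving from 30 to 60fps halves the per-frame budget. The helper name `frame_budget_ms` is hypothetical, made up for illustration; it is not from the interview.

```python
def frame_budget_ms(fps: float) -> float:
    """Hypothetical helper: milliseconds available per frame at a target frame rate."""
    return 1000.0 / fps

# Compare the per-frame budget for a 30fps quality mode and a 60fps performance mode.
for fps in (30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 60 fps -> 16.7 ms per frame
```

Everything the game does each frame (rendering, lighting, simulation) has to fit in that window, which is why a 60fps mode usually means cutting resolution or effects.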

We have matched photorealism with The Matrix Awakens, in some parts.

Can we please just appreciate that we are doing better than the PS4 generation, instead of constantly trying to claim we have achieved photorealism and are now matching CGI when we just aren't there and won't be for a while.

You're right, I'm on it.

Represent. You should make a new thread for the Alan Wake/Remedy/IGN interview. It's been a while since GAF warred over the legitimacy of the Series S, lol.