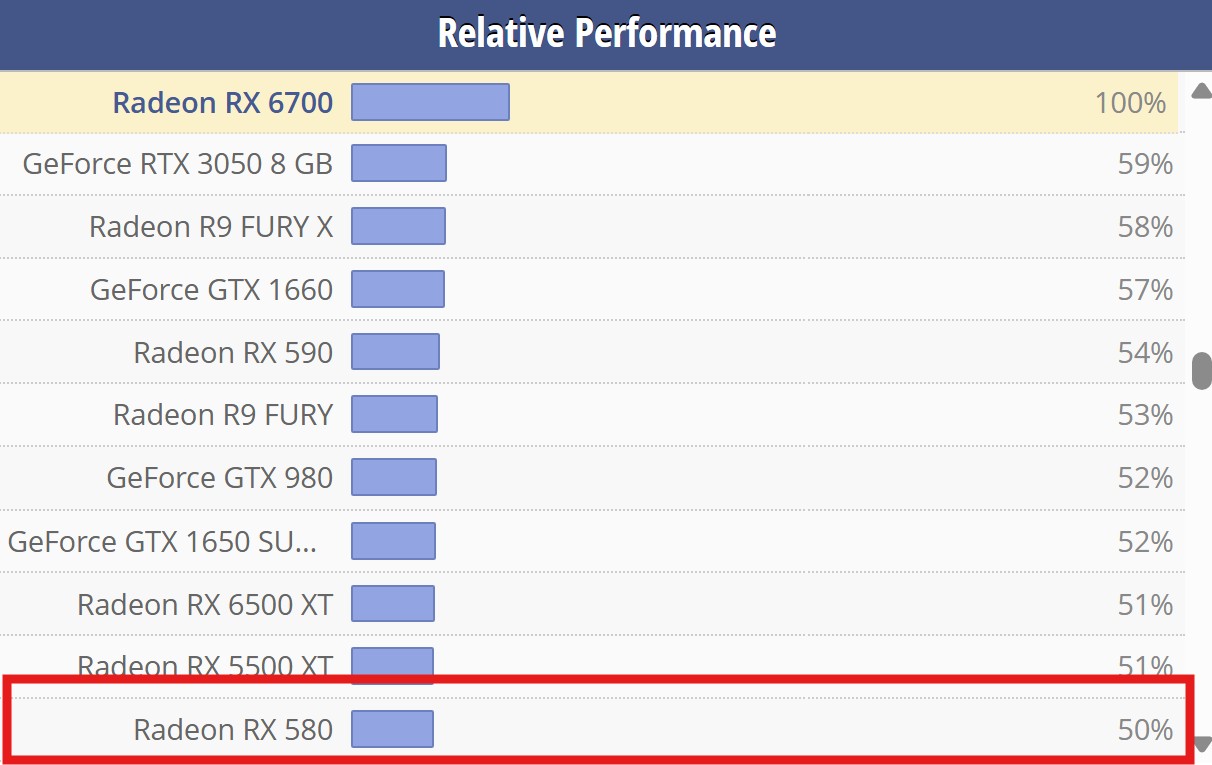

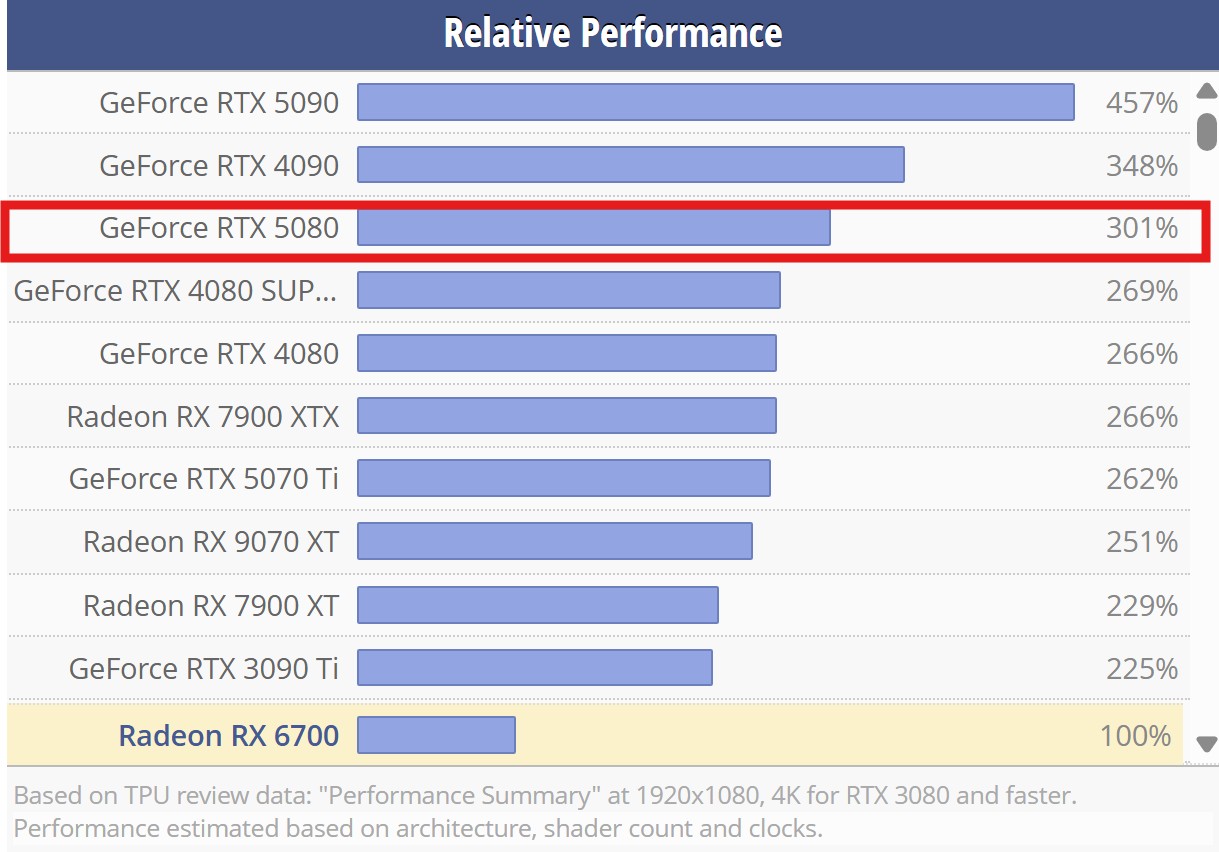

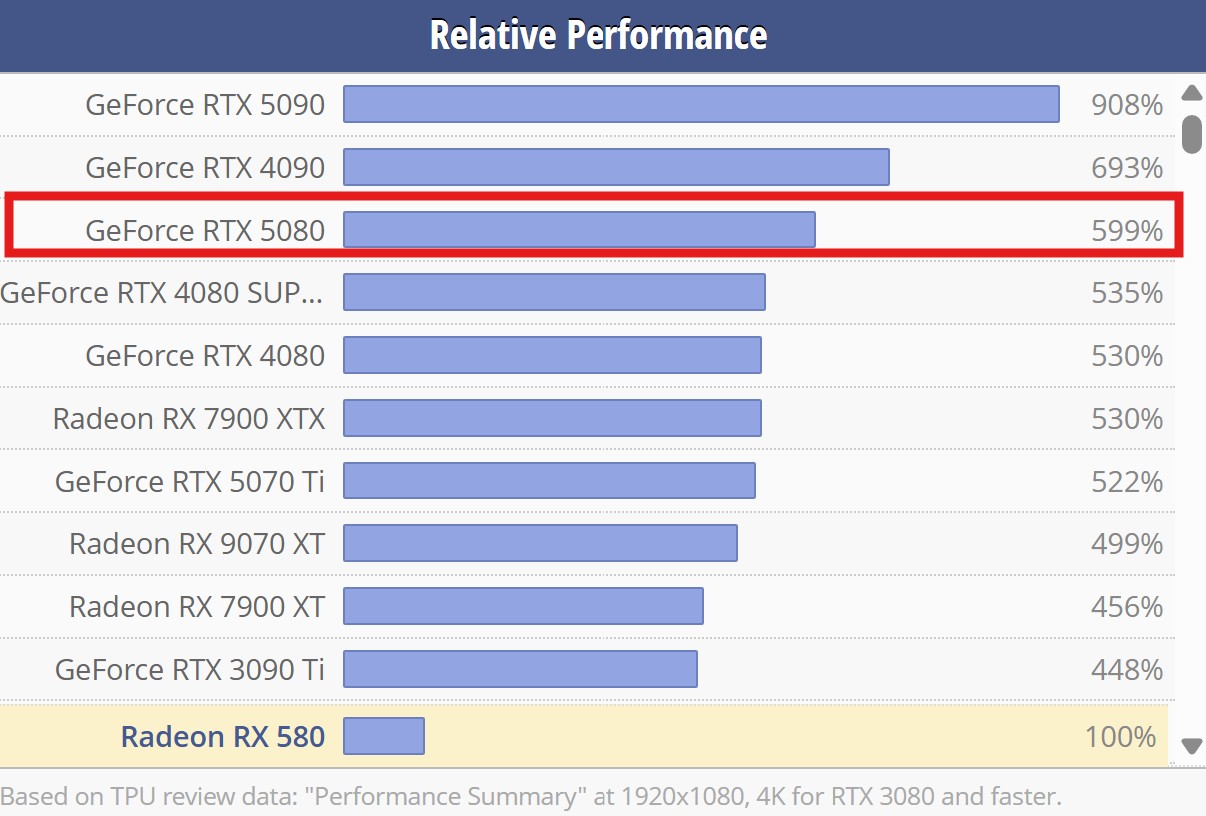

Holiday 2027 launch, yet it will be weaker than a 5080, which means substantially weaker than the RTX 4090, which launched in 2022, aka a 5-year-old GPU by then. The writing is on the wall if the PS6 will only draw 160 watts like the leaks are saying.

Ofc it will still be substantially stronger in raster (and even more in RT/AI upscaling) than the pathetically weak PS5 Pro. Still, by then we will already have 60xx series cards from Nvidia, so very likely even a midrange RTX 6070 (likely 800-1k USD street price) will be stronger than a brand new PS6 in every possible aspect.

It makes total sense, especially if we assume that the smaller/weaker PS6 is made to cost at most $499-599 (with Xbox raising the white flag, Sony doesn't even really need to try that much, unfortunately).