- Status

- Not open for further replies.

T

Three Jackdaws

Unconfirmed Member

Curious to see how the PS5's supposed RDNA 3 features will manifest in gaming. My hunch is that only first-party developers will make really heavy use of them, especially the Geometry Engine. That said, I don't see why third-party developers would leave the stable performance the GE offers on the table, although optimisation is generally done towards the end of development, so "crunch" also plays a heavy role.

So apparently CDPR will use Xbox Series X RDNA 2 features for the Cyberpunk 2077 upgrade next year, so does that mean they'll also use PS5 RDNA 3 features for its upgrade as well?

As for when RDNA 3 actually comes out, we could see some interesting things, because I remember RGT saying that RDNA 3's geometry handling is heavily influenced by the PS5, as is how data from the SSD is brought into RAM. The latter might seem strange for a discrete GPU, but we've already seen AMD do something similar with the Radeon Pro series, which basically had HBM and the SSD bolted onto the GPU. Apparently AMD also seems to be changing the GFX ID number for RDNA 3, and they only ever do that when BIG changes are happening.

Radical_3d

Member

The number of ACEs/compute queues on a GPU has nothing to do with the SPU task manager (STM). According to Cerny it has everything to do with how much asynchronous compute a vendor (or customer in the case of SIE) wants a particular GPU to do for game systems (PS game systems) and middleware (PS middleware):

"For PS4, we've worked with AMD to increase the limit to 64 sources of compute commands -- "... "The reason so many sources of compute work are needed is that it isn't just game systems that will be using compute -- middleware will have a need for compute as well." -- Mark Cerny

What Volcanic Islands can do is schedule tasks and put them in the same number of compute queues that PS4's GPU has. What it (and every other PC GPU) can't do is use them as efficiently as PS4's GPU can. That's where STM-derived customizations to the cache and bus of PS4's GPU (which reduce the overhead associated with context switching) come into play. The 'volatile bit' in the article you linked relies on these STM-derived customizations to effectuate a knock-off SPURS on the GPU. As Cerny put it:

"First, we added another bus to the GPU that allows it to read directly from system memory or write directly to system memory, bypassing its own L1 and L2 caches." -- Mark Cerny

"Next, to support the case where you want to use the GPU L2 cache simultaneously for both graphics processing and asynchronous compute, we have added a bit in the tags of the cache lines, we call it the 'volatile' bit."... "-- in other words, it radically reduces the overhead of running compute and graphics together on the GPU." -- Mark Cerny

"We're trying to replicate the SPU Runtime System (SPURS) of the PS3 by heavily customizing the cache and bus," -- Mark Cerny

One of the people who worked on Volcanic Islands had no idea what the 'volatile bit' was until Cerny mentioned it:

"I worked on modifications to the core graphics blocks. So there were features implemented because of console customers. Some will live on in PC products, some won't. At least one change was very invasive, some moderately so. That's all I can say. Since Cerny mentioned it I'll comment on the volatile flag. I didn't know about it until he mentioned it, but looked it up and it really is new and driven by Sony. The extended ACE's are one of the custom features I'm referring to." -- anonymous AMDer

Without STM-derived customizations and the 'volatile bit', an AMD GPU for PC has higher context switching overhead. Increasing ACE/compute queue counts won't change that. With STM-derived customizations and the 'volatile bit', an AMD GPU becomes something akin to PS3's SPURS driven SPU+GPU hybrid rendering system with "radically" reduced context switching overhead, regardless of how few or many ACEs/compute queues it has.
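To make the 'volatile bit' idea a bit more concrete, here is a toy Python model. It is not Sony's or AMD's actual hardware logic. It assumes the bit lets compute work write back and invalidate only its own L2 lines instead of flushing the whole cache, and `ToyL2Cache`, `flush_all` and `flush_volatile` are hypothetical names made up for the illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CacheLine:
    address: int
    data: int
    dirty: bool = False
    volatile: bool = False  # assumed meaning: line was touched by compute work


@dataclass
class ToyL2Cache:
    lines: dict = field(default_factory=dict)  # address -> CacheLine

    def write(self, address, data, from_compute=False):
        # Every write marks the line dirty; compute writes also set the tag bit.
        self.lines[address] = CacheLine(address, data, dirty=True,
                                        volatile=from_compute)

    def flush_all(self, memory):
        """Baseline: write back and drop every line, graphics included."""
        touched = len(self.lines)
        for line in self.lines.values():
            if line.dirty:
                memory[line.address] = line.data
        self.lines.clear()
        return touched

    def flush_volatile(self, memory):
        """With the tag bit: write back and drop only compute-tagged lines."""
        tagged = [addr for addr, line in self.lines.items() if line.volatile]
        for addr in tagged:
            line = self.lines.pop(addr)
            if line.dirty:
                memory[addr] = line.data
        return len(tagged)
```

With six graphics lines and two compute-tagged lines resident, `flush_volatile` only touches the two compute lines and leaves the graphics data cached, whereas `flush_all` would touch all eight. That gap is the kind of overhead the quoted description says the bit reduces.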

Entries [0012] and [0013] of the patent you linked explain that SPURS is a type of SPU task system; and this patent credits Keisuke Inoue, Tatsuya Iwamoto and Masahiro Yasue with its invention. On CELL, the SPU task manager is a software component of SPURS that reduces context switching overhead within SPURS software.

Not because of CELL, because of Blu-ray. Estimated to initially cost over $400 to produce....

Blu-ray a player in PlayStation pricing

Sony's PlayStation 3 game console may cost more than the Xbox 360, but it also comes with Blu-ray. Will that bring in the buyers? www.cnet.com

HD DVD and Blu-ray drives cost over US$400 to build

A research firm has analyzed the cost of materials and royalties required to … arstechnica.com

CELL cost around $100 per chip (timestamped)...

Depends on who you ask...

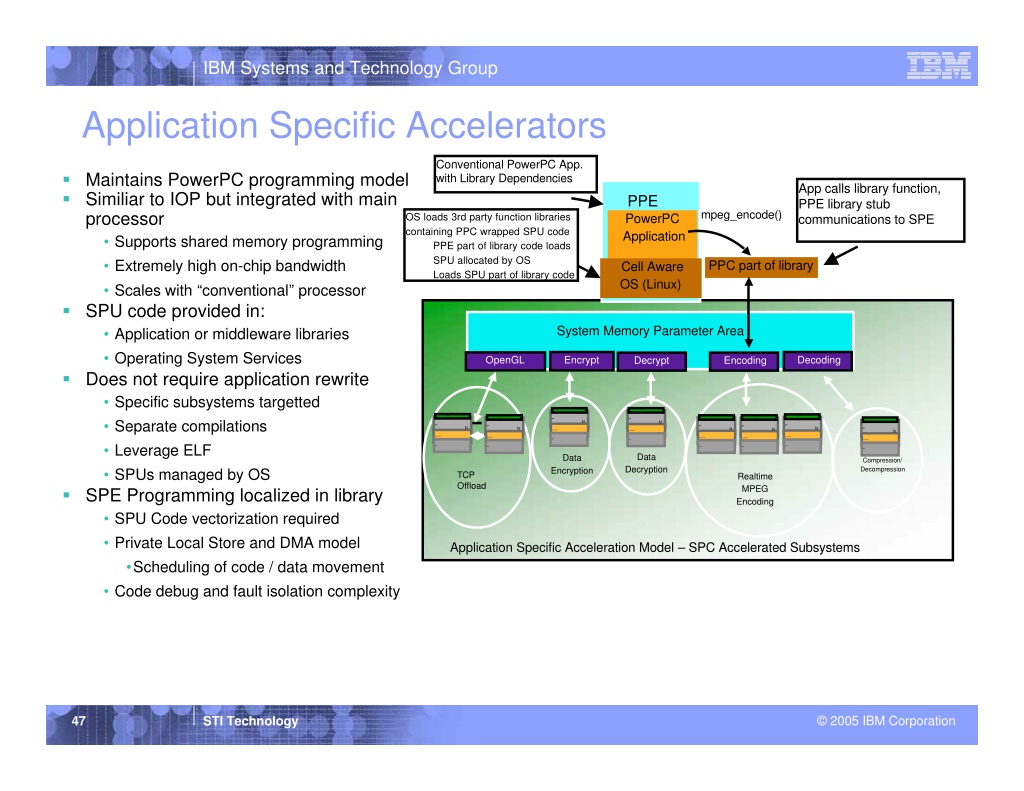

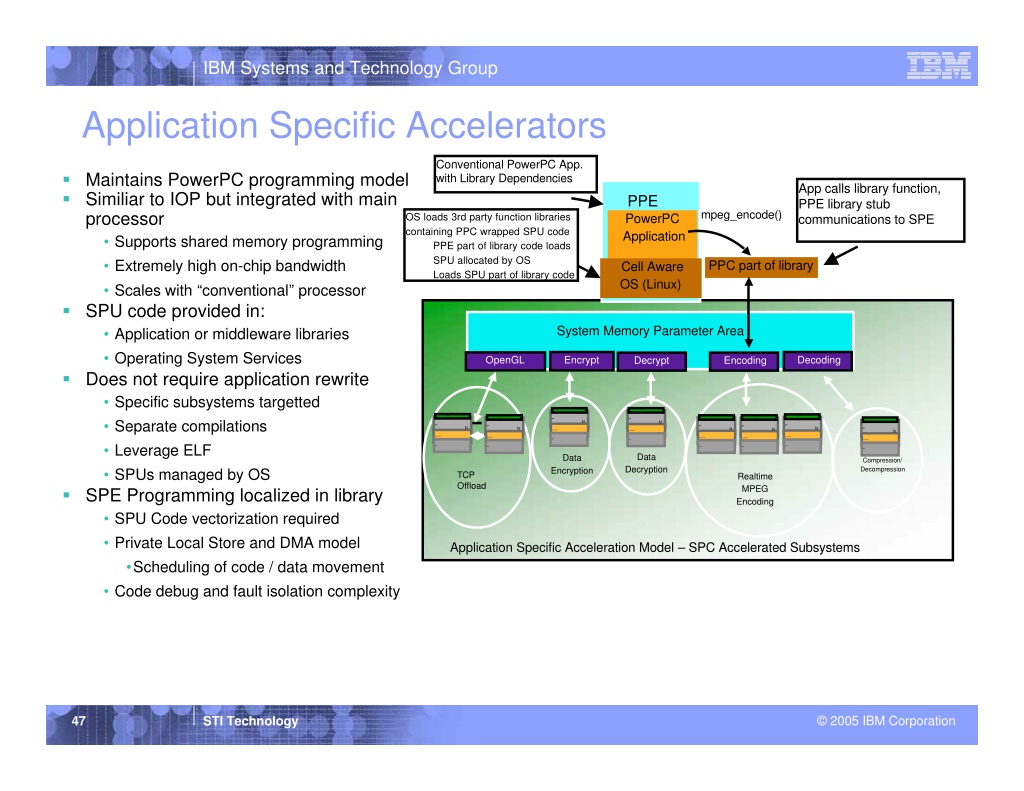

I share much of your sentiment. The I/O co-processors, decompression engine, coherency engines and DMAC of the I/O complex amount to what is basically an imitation CELL designed to do in hardware what SPUs did in software since, according to IBM, CELL is similar to an IO processor (IOP)...

The dissimilarities being that it has an integrated CPU (PowerPC-based PPE) and it isn't limited to just directing inputs/outputs, since one or more of its SPEs (IOP-like in their ability to relieve the PPE of I/O duties) can be partitioned into groups and assigned specific tasks if necessary. A CELL with a sufficient number of PPEs and SPEs could replace all the specialized hardware inside PS5's IO complex...

- SPUs extend to the GPU shader pipeline and maintain coherency with the GPU they assist via SPU <-> GPU synchronization techniques

- as you mentioned, SPUs do decompression (also seen on the above CELL slide)

- each SPE has its own DMAC to transfer data into/out of system memory and storage

- SPEs handle memory mapping and file IO (handled by PS5's two I/O co-processors)

Yeah, the popular opinion is that using CELL for PS3 was a "mistake" that resulted in Sony's worst financial failure and a loss of market share; but in my opinion the success of PS4/pro and PS5 is directly and largely attributable to the future-oriented nature of CELL/PS3. In '07 it was reported that Ken Kutaragi viewed PS3's losses as investments.

It's apparent to me that PS3's "ROI" (as it relates strictly to gaming) has come mostly in the form of knowledge that's been used across two gens to beef up scrawny APUs. Prior expertise in heterogeneous system architecture design for PS3 and the transplanting of CELL-like asynchronous compute onto the GPU put some meat on their bones. Software techniques used to improve the image quality of PS3 games were built up to give the custom APU some muscle definition.

One of the best past examples of this is PS4 pro's hardware-based ID buffer (probably emulated by PS5's Geometry Engine) used for checkerboard rendering. Its origins can be traced back to PS3's software-based SPU MLAA implementation which used object IDs, color info and horizontal splitting to detect and smooth out aliased edges of objects in scenes...

- quick overview of SPU MLAA

- why edge detection is necessary

- horizontal MLAA with splitting illustrated on a checkerboard pattern

- capture of horizontal MLAA with splitting in KZ3

- process of object IDs and color info for edge detection in SOCOM

- cover for Frostbite Labs checkerboard rendering slide deck

- the history of checkerboard rendering rooted in SOCOM object IDs for MLAA edge detection

- history continued to PS4 pro ID buffer hardware and Frostbite Labs support
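As a rough illustration of the edge detection step described above, the sketch below marks a pixel as an edge when its object ID differs from its left or upper neighbour, or when the brightness difference exceeds a threshold. It is a simplified stand-in, not the actual SPU MLAA code; `detect_edges`, the list-of-rows layout and the 0.1 threshold are assumptions for the example.

```python
def detect_edges(ids, luma, threshold=0.1):
    """Flag pixels that differ from their left or upper neighbour.

    ids  : rows of per-pixel object IDs
    luma : rows of per-pixel brightness values in [0, 1]
    """
    height, width = len(ids), len(ids[0])
    edges = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Compare against the left and the upper neighbour only.
            for ny, nx in ((y, x - 1), (y - 1, x)):
                if ny < 0 or nx < 0:
                    continue
                id_edge = ids[y][x] != ids[ny][nx]
                color_edge = abs(luma[y][x] - luma[ny][nx]) > threshold
                if id_edge or color_edge:
                    edges[y][x] = True
    return edges
```

The object ID test catches boundaries between two objects with similar colours, and the colour test catches edges inside a single object, which is presumably why both kinds of information are used together.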

In Cerny's explanation of the PS4 pro's ID buffer, he said:

"As a result of the ID buffer, you can now know where the edges of objects and triangles are and track them from frame to frame, because you can use the same ID from frame to frame,"... "And I'm going to explain two different techniques that use the buffer - one simpler that's geometry rendering and one more complex, the checkerboard."... "Second, we can use the colours and the IDs from the previous frame, which is to say that we can do some pretty darn good temporal anti-aliasing." -- Mark Cerny

In my view your feelings are justified, and the investments (or losses if you prefer) linked to PS3's R&D generally, and CELL's R&D specifically, have been paying dividends in different ways for a long time now.
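For anyone wondering what reusing "the colours and the IDs from the previous frame" could look like in practice, here is a minimal sketch of a checkerboard-style reconstruction. It is not the PS4 pro's actual algorithm. It assumes object IDs are available for every pixel each frame, that only half the pixels are shaded per frame in an alternating pattern, and `checkerboard_mask` and `reconstruct` are hypothetical helper names.

```python
def checkerboard_mask(width, height, frame_index):
    """True where a pixel is shaded this frame; the pattern flips every frame."""
    return [[(x + y + frame_index) % 2 == 0 for x in range(width)]
            for y in range(height)]


def reconstruct(curr_color, curr_ids, prev_color, prev_ids, mask):
    """Fill the pixels that were not shaded this frame.

    If the object ID at a pixel matches the previous frame, reuse the old
    colour. Otherwise average the horizontal neighbours, which the
    checkerboard pattern guarantees were shaded this frame.
    """
    height, width = len(mask), len(mask[0])
    out = [row[:] for row in curr_color]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                continue  # shaded this frame, keep as-is
            if prev_ids[y][x] == curr_ids[y][x]:
                out[y][x] = prev_color[y][x]
            else:
                neighbours = [curr_color[y][nx] for nx in (x - 1, x + 1)
                              if 0 <= nx < width]
                out[y][x] = sum(neighbours) / len(neighbours)
    return out
```

The ID comparison is what makes the reuse safe in this sketch: a previous-frame colour is only trusted when the same object still covers the pixel.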

Based Ken Kutaragi-sama.

This is the shit I come here for.

Lunatic_Gamer

Member

Holy

Lunatic_Gamer

Member

UK politicians call for action against PS5 and Xbox Series scalpers

www.videogameschronicle.com

About time someone does something about this ongoing shit. Now we need to pass something similar here in the US.

Six Scottish National Party MPs tabled an Early Day Motion on Monday, calling for legislative proposals "prohibiting the resale of gaming consoles and computer components at prices greatly above Manufacturer's Recommended Retail Price."

The motion states: "new releases of gaming consoles and computer components should be available to all customers at no more than the Manufacturer's Recommended Retail Price, and not be bought in bulk by the use of automated bots which often circumvent maximum purchase quantities imposed by the retailer".

UK politicians call for action against PS5 and Xbox Series scalpers | VGC

MPs motion a debate over resale of consoles and automated bots, which they say should be banned…

kyliethicc

Member

Knack wins again

T

Three Jackdaws

Unconfirmed Member

ethomaz

Banned

Knack wins again

Wow, people were harsh with Knack... I played it and it is nowhere near a 5/10 title... more like a 7/10.

KingT731

Member

Wow, people were harsh with Knack... I played it and it is nowhere near a 5/10 title... more like a 7/10.

Yeah, it just got shit on ridiculously at launch for absolutely no reason. It wasn't bad, just a solid platformer that had a bit of difficulty to it.

ResilientBanana

Member

Wow, people were harsh with Knack... I played it and it is nowhere near a 5/10 title... more like a 7/10.

I played it also, I'd give it less.

Imtjnotu

Member

I played it also, I'd give it less.

I wonder why

Shmunter

Member

Knack wins again

Had the game since launch on PS4. Only started and finished it on PS5 due to the unflinching 60fps.

Good old school style feel to it all. Felt all warm and fuzzy including wanting to throw the controller out the window due to difficulty at times, lol.

Eyeing off that Knack 2 on my shelf whenever I walk past. Soon my precious, soon.

Dave_at_Home

Member

Knack wins again

saintjules

Gold Member

Knack wins again

lol. But but but but, it's a different score for a different genre!

Loxus

Member

Knack wins again

Looking at Cyberpunk makes me wonder how good it would have been if it was developed by PlayStation Studios.

Studios like these would have been in much better hands with Sony, seeing how Spiderman turned out.

Remedy Entertainment

-Control

Crystal Dynamics

-Tomb Raider

-Marvel's Avengers

Rocksteady Studios

-Batman

NetherRealm Studios (Not really this but I want an Injustice vs Avengers game.)

-Injustice

-Mortal Kombat

These games are good on their own, but PlayStation just has this magical talent that pushes games beyond imagination.

Chris_Rivera

Member

It's a 7nm process part, so x-ray might not cut it, might need to file it down and use an electron microscope on it.

the nvidia 3090 die was recently shot, maybe someone just needs to donate their PS5. Unfortunately, I won't.

NVIDIA's Flagship Ampere Gaming GPU "GA102" Gets Beautiful Die Shot, Exposes The Entire Chip That Powers RTX 3090 & RTX 3080 Graphics Cards

NVIDIA's flagship GA102 Gaming Ampere GPU has received its first high-res die shot, which shows what powers the RTX 3090 & RTX 3080 cards.

T

Three Jackdaws

Unconfirmed Member

Some interesting and old tweets I found from LeviathanGamer2 on the UE5 tech demo which clears up a lot of confusion. Thought it's worth sharing.

Classicrockfan

Banned

Would Days Gone be like Knack: got mediocre scores but was good?

roosnam1980

Member

Looking at Cyberpunk makes me wonder how good it would have been if it was developed by PlayStation Studios.

Studios like these would have been in much better hands with Sony, seeing how Spiderman turned out.

Remedy Entertainment

-Control

Crystal Dynamics

-Tomb Raider

-Marvel's Avengers

Rocksteady Studios

-Batman

NetherRealm Studios (Not really this but I want an Injustice vs Avengers game.)

-Injustice

-Mortal Kombat

These games are good on their own, but PlayStation just has this magical talent that pushes games beyond imagination.

Dude, Sony can't buy so many studios.

PaintTinJr

Member

Great info, real interesting the way you referenced so much to make a really solid argument that would be hard for someone to wave away.

One of the other things I would never have thought to attribute to the know-how gained with the Cell SPUs - but looking at the slide you linked, as shown here (image processing background subtraction), and thinking about the Cell being the "networked processor" - is that Sony's new low-latency programmable (logic-on-sensor) image sensors almost certainly must have stemmed from that R&D, too.

The ability to discard unneeded image data at source (the sensor), because the logic is processing locally while the image input is still in flight, filtering it and vectorising/meta-ing it from megabytes down to kilobytes per frame, is almost certainly the reason they have made a 2D screen (with a low-latency camera sensor) that provides the (single) observer with a dynamic stereoscopic view of their 3D models. It tracks the observer's eyes and relays the intent of those eye movements with so little data/latency (and load on the system CPU) that the observer believes they are looking round the object in real time.
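To illustrate the kind of data reduction being described, here is a small sketch of background subtraction that turns a full frame into a single bounding box. It is only a guess at the general idea, not how Sony's sensors actually work; `changed_region`, the 8-bit grayscale rows and the threshold of 16 are assumptions for the example.

```python
def changed_region(background, frame, threshold=16):
    """Return (x0, y0, x1, y1) around pixels that differ from the background.

    Both inputs are rows of 8-bit grayscale values. Returns None when
    nothing differs by more than the threshold.
    """
    xs, ys = [], []
    for y, (bg_row, row) in enumerate(zip(background, frame)):
        for x, (bg, px) in enumerate(zip(bg_row, row)):
            if abs(px - bg) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))
```

A 1920x1080 8-bit frame is about 2 MB, while the tuple returned here is four integers, so a downstream CPU that only needs to know where something moved receives a tiny fraction of the raw data.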

Thinking about that a little bit more, those sensors are probably the reason Sony have managed to automate building consoles all the way down to the robotics needed for inserting the tiny ribbon cables - which is apparently where Apple et al. failed. Such a low-latency feedback response is almost human levels of coordinated feedback IMHO, and would make sense if a tiny-lithography (programmable) SPU descendant on a sensor is their solution.

yewles1

Member

Wow, people were harsh with Knack... I played it and it is nowhere near a 5/10 title... more like a 7/10.

It got treated like a PS3 launch game.

bitbydeath

Gold Member

More stock coming. Got the call on my second PS5. Will pick it up later today. I wasn't expecting it until next year.

PaintTinJr

Member

Some interesting and old tweets I found from LeviathanGamer2 on the UE5 tech demo which clears up a lot of confusion. Thought it's worth sharing.

I think he's got that completely wrong. They are referring to the amount of conventional dedicated GPU pipeline work that is taxing the PS5 GPU, and further info was given about it being the Fortnite at 1080p60 on PS4 workload, IIRC, from the dev video by the UE5 team.

What that tells us is that the majority of the demo, excluding the character/dynamic objects (water, falling rocks, particles, etc.), is all rendering via async compute. They also said they were still trying to optimise for 1440p60, but ran out of time IIRC.

Sony Atomview 8K cinema assets (which they are almost certainly using for the UE5 demo) will be largely getting culled in the IO complex and/or the CPU, so his suggestion wouldn't change anything - as his understanding of how it all works is wrong IMO.

yewles1

Member

I think he's got that completely wrong. They are referring to the amount of conventional dedicated GPU pipeline work that is taxing the PS5 GPU, and further info was given about it being the Fortnite at 1080p60 on PS4 workload, IIRC, from the dev video by the UE5 team.

What that tells us is that the majority of the demo, excluding the character/dynamic objects (water, falling rocks, particles, etc.), is all rendering via async compute. They also said they were still trying to optimise for 1440p60, but ran out of time IIRC.

Sony Atomview 8K cinema assets (which they are almost certainly using for the UE5 demo) will be largely getting culled in the IO complex and/or the CPU, so his suggestion wouldn't change anything - as his understanding of how it all works is wrong IMO.

Nah, Epic has plenty of their own assets from Quixel. Atom View might be more used by Sony first party in the future.

IntentionalPun

Ask me about my wife's perfect butthole

60gb of assets to paint a single frame.

Lunatic_Gamer

Member

Best PS5 & Xbox Series X HDR Calibration Settings for Samsung Q80T / Q90T

Grinchy

Banned

Some interesting and old tweets I found from LeviathanGamer2 on the UE5 tech demo which clears up a lot of confusion. Thought it's worth sharing.

Our very own Xbox fanatic made a thread with a similar premise to let us know the PS5 was trash. It was shortly before or after making a thread about how the Series S doesn't exist

Ptarmiganx2

Member

Great having 2 PS5s, but have you thought about those kids that couldn't get theirs?

I'm playing with you, congrats she probably had to kill a few other customers to get it.

If you have the opportunity can you give us your feedback about streaming the video of your game to another ps5?

None of my friends could get their hands on one so I couldn't try.

The thought did cross my mind lol. I hate the shortage, lots of disappointed gamers. I'll check out the streaming features and let you know once she picks it up Sunday.

captainclutch777

Member

Razer - BlackShark V2 Pro Wireless Gaming Headset: how is this headset? Was thinking about getting this.

Happy with mine on the PS5. Tracking footsteps with amazing accuracy in COD. Sounds better than my hyperX cloud flight and comfortable. No issues so far. I recommend it.

PaintTinJr

Member

Nah, Epic has plenty of their own assets from Quixel. Atom View might be more used by Sony first party in the future.

It will be the same technology as Atomview whether it is officially called Atomview or not IMO.

4 polys per pixel is how they've gone beyond nanite cinema asset aliasing, and at that size, a polygon is just a subpixel fragment so doesn't need shader vertex pipelines and culling.

The async should be able to do that type of task rapidly, independent of asset geometry complexity - as they sort of implied by the stupid amount of warriors in that room - because it will be the speed of the I/O system and the async compute solution for retrieving just the candidate samples and the combining software to provide a final nanite pixel for each coordinate on the screen IMO.

Classicrockfan

Banned

Happy with mine on the PS5. Tracking footsteps with amazing accuracy in COD. Sounds better than my hyperX cloud flight and comfortable. No issues so far. I recommend it.

Just ordered it, can't wait. Stupid question: can I also use it to listen to music on my iPhone?

Shmunter

Member

Best PS5 & Xbox Series X HDR Calibration Settings for Samsung Q80T / Q90T

What a crap show Samsung displays are. Returning the 75" Q95 was the best move of 2020 for me.

SlimySnake

Flashless at the Golden Globes

I played it also, I'd give it less.

I played it, I wish I didn't.

Bo_Hazem

Banned

It will be the same technology as Atomview whether it is officially called Atomview or not IMO. Bo_Hazem brought the info to light in this thread and others a few months back, and how the data is captured and how it is stored is the reason why the UE5 demo provides a "continuous level of detail" (even that is in the old slide above) for the nanite renderer background.

4 polys per pixel is how they've gone beyond nanite cinema asset aliasing, and at that size, a polygon is just a subpixel fragment so doesn't need shader vertex pipelines and culling.

The async should be able to do that type of task rapidly, independent of asset geometry complexity - as they sort of implied by the stupid amount of warriors in that room - because it will be the speed of the I/O system and the async compute solution for retrieving just the candidate samples and the combining software to provide a final nanite pixel for each coordinate on the screen IMO.

Amazing details, as always. So having more polygons per pixel doesn't just provide a realistic blend of colors and zero aliasing, but is also "gentler" on the GPU? No wonder they said that it's as GPU taxing as Fortnite on PS4! Crazy how efficient it gets when data streaming gets normalized; it'll push Xbox into an odd region as it can't keep up. Nvidia promised 14GB/s using their GPUs to mimic the decompressor on PS5, but that falls short of 17-22GB/s, which is as crazy as single channel DDR4 RAM of 825GB! The 6GB/s cap on Xbox is gonna hold back the industry, along with the vast majority of gaming PCs. The jump in graphics will be massive.

I still believe Atom View is a superior solution to Nanite, which borrowed it from Sony, as from their trailer it seems to be compatible with mobile objects vs static ones on Nanite.

kyliethicc

Member

It was just "buy our merch" lol

DrDamn

Member

If you have the opportunity can you give us your feedback about streaming the video of your game to another ps5?

None of my friends could get their hands on one so I couldn't try.

I've used this a couple of times and it works really well. One session in Ghosts Legends co-op, sharing both ways. Really helpful to have a mini view of your team mates screen. The other was a PS5 party of 4 playing Worms Rumble which only supports a game party of 3. So we had at least two people sharing their screen and cycled people out of the worms party every couple of rounds. Meant anyone not playing could watch someone else's screen. Felt like a games night where you all sit round a console on one TV and take turns to play or watch.

Was really quick and simple to do once you'd done it a couple of times.

Panajev2001a

GAF's Pleasant Genius

Wireless is an unnecessary price increase that Sony would not bother with for PSVR2. At most they would make it an optional upgrade. If the choice is wireless or a $100 price decrease, Sony would pick the $100 price decrease. PSVR2 needs to be cheaper than PSVR1 in order to truly grow.

The PS5 will need to be in the same room as the headset, so PSVR2 was never going to be portable. The advantage of being wireless really couldn't be fully realized as long as it is tethered to a console in some way.

I just know that it was a pain with the original model that made me choose between HDR at 4K or PSVR and I had to buy a switch for it... good luck with HDMI 2.1 and external switches... Wireless or single cable based FTW.

Throttle

Member

Would Days Gone be like Knack: got mediocre scores but was good?

Days Gone is good, don't trust reviews. Got the platinum for it.

On PS5 it runs at 4K60.

DeltaPolarBear

Member

I just know that it was a pain with the original model that made me choose between HDR at 4K or PSVR and I had to buy a switch for it... good luck with HDMI 2.1 and external switches... Wireless or single cable based FTW.

PSVR2 CANNOT be allowed to be priced like a console on its own. The amount of people who would be turned off by a $399 headset compared to $299 is insurmountable. Telling them it is wireless isn't going to get them to buy it.

Look, we have people here who would pay for a 700 dollar next gen console if it was offered. But if Consoles do this we would very soon not have a console eco system. This isn't about you or what you are willing to pay; this is about what is needed for more people to be able to afford VR to begin with.

Dibils2k

Member

Days Gone is good, don't trust reviews. Got the platinum for it.

On PS5 it runs at 4K60.

Don't trust reviews? It's got 71... that's a good rating lol. Just 'cause they didn't give it 90+ doesn't mean the reviews were BS.

Shmunter

Member

PSVR2 CANNOT be allowed to be priced like a console on its own. The amount of people who would be turned off by a $399 headset compared to $299 is insurmountable. Telling them it is wireless isn't going to get them to buy it.

Look, we have people here who would pay for a 700 dollar next gen console if it was offered. But if Consoles do this we would very soon not have a console eco system. This isn't about you or what you are willing to pay; this is about what is needed for more people to be able to afford VR to begin with.

Looking at Quest, PSVR2 should be cheaper than that and will surely be wireless; this is why PS5 has Wi-Fi 6: high bandwidth, low latency.

It will be cheap being a dumb display. No breakout boxes needed, everything handled by the console.

The DualSense haptics are also forward-looking, with PSVR2 controllers incorporating the tech.

The path is laid.

azertydu91

Hard to Kill

I've used this a couple of times and it works really well. One session in Ghosts Legends co-op, sharing both ways. Really helpful to have a mini view of your team mates screen. The other was a PS5 party of 4 playing Worms Rumble which only supports a game party of 3. So we had at least two people sharing their screen and cycled people out of the worms party every couple of rounds. Meant anyone not playing could watch someone else's screen. Felt like a games night where you all sit round a console on one TV and take turns to play or watch.

Was really quick and simple to do once you'd done it a couple of times.

Nice, thank you for your reply.

I will probably use it with a friend while we both play Binding of Isaac (he's an expert at killing himself stupidly, which is always hilarious). All that while party chatting will probably feel a bit like couch co-op.

HawarMiran

Banned

Don't trust reviews? It's got 71... that's a good rating lol. Just 'cause they didn't give it 90+ doesn't mean the reviews were BS.

To put it into perspective, State of Decay 2 has a Metacritic score of 66.

Radical_3d

Member

Oh no, Bo_Hazem dusted again. Come on bro, don't go out like this. Enjoy the game chatter without the petty crap. Be critical constructively, don't kick dirt in people's faces.

No Bo until next year.

saintjules

Gold Member

HoofHearted

Member

(gargles)....

"... it's the most wonderful time....... of the yearrrrrrrr!!!!!!!!!..."

Thank you, good night!, try the veal and don't forget to tip your waitstaff!

ResilientBanana

Member

I wonder why

It was shovelware. Sony has plenty of great games. Knack isn't one of them.
