Nvidia 5000 Series GPU Rumored To Offer Biggest Performance Leap In History

- Thread starter IbizaPocholo

- Rumor Hardware

Insane Metal

Member

Glad I skipped the 4000 series.

I'll buy the 5080 if the price is right.

Moses85

Member

Gaiff

SBI’s Resident Gaslighter

It needs its own:

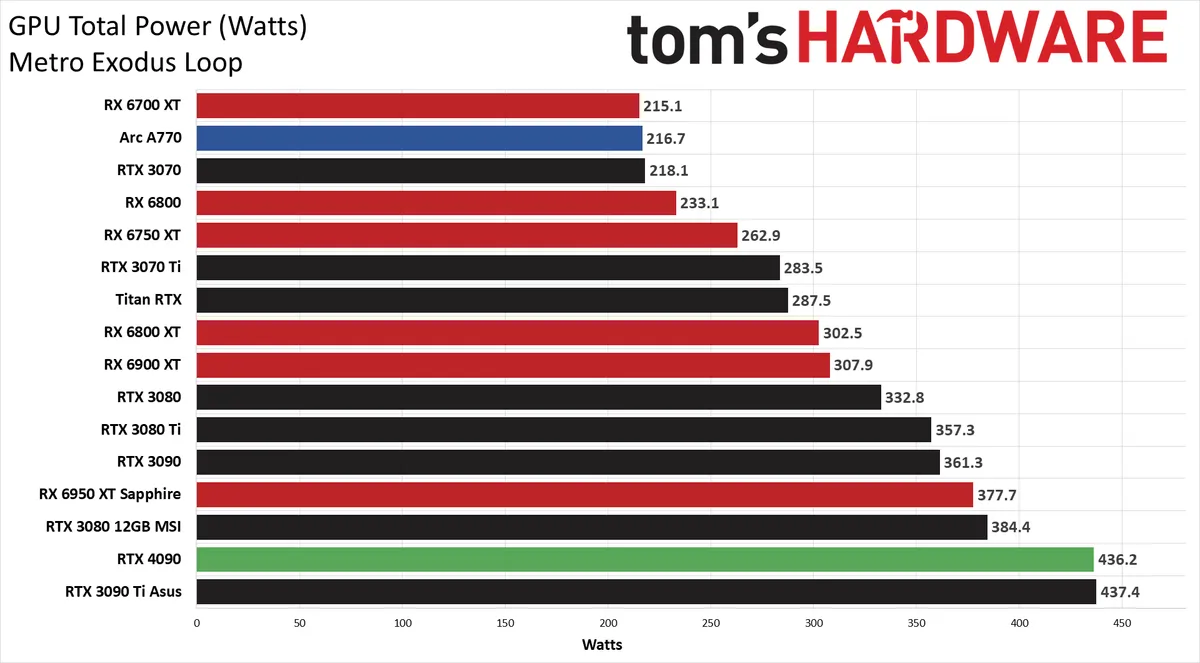

This joke would be funny if Lovelace wasn't the most power-efficient architecture on the market.

YCoCg

Member

But it still requires a high-power PSU to run. Cool that it's efficient at the level it's running at, but it requires an 850W PSU to begin with. Nvidia could turn around and claim the 5000 series is even more efficient BUT requires at least a 1200W PSU to get started.

Gaiff

SBI’s Resident Gaslighter

That's what NVIDIA recommends and they always overshoot their requirements. You can get by just fine with a 750W PSU. This thing consumes less than the 3090 Ti so this whole "hur hur, 10,000 gigawatts!!" is stupid as fuck.

Kenpachii

Member

Now run a game that slams the GPU at 100% and isn't undervolted, because much like overclocking, undervolting is a shit show; you can't base any conclusion on it.

Gaiff

SBI’s Resident Gaslighter

What do you think this game does at a total system load of 300W? The stock 4090 is way past its optimal clocks, hence the high power consumption out of the box. Undervolting is nothing like overclocking and certainly not a "shitshow" so way to go to show that you got no idea what you're talking about.

This is at 70% power limit with no voltage adjustments or optimizations whatsoever. A 10% loss in performance for a 30% decrease in power consumption on my 3DMark score. Tried it in games and the net loss is consistently in the 10% range.
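To put those figures in performance-per-watt terms, here is a minimal sketch. It assumes performance and power scale exactly as quoted (90% of the score at 70% of the power); the function name `efficiency_gain` is illustrative and not taken from any tool mentioned in the thread.

```python
def efficiency_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative change in performance per watt versus stock settings.

    perf_ratio:  performance relative to stock (0.90 means a 10% loss)
    power_ratio: power draw relative to stock (0.70 means a 30% reduction)
    """
    return perf_ratio / power_ratio - 1.0


# Figures quoted in the post: ~10% less performance for ~30% less power.
gain = efficiency_gain(0.90, 0.70)
print(f"Performance per watt changes by {gain:+.1%}")
```

Under those assumptions, the card does roughly 29% more work per watt at the 70% power limit than at stock.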

It's been well-documented by multiple people what you can do with an underclock/power limit, so I have no idea why you're coming at me with that stock benchmark. At least do your research before answering.

Buggy Loop

Gold Member

And he should be at -30 at least on his 5800X3D. They're known for a bare minimum of -30 unless it's a very bad bin. I have mine at -35, and I'm sure I would be stable at -40, all cores.

Kenpachii

Member

Undervolting is the same as overclocking, mate. Either you have a good chip that can handle it or you don't. I've had a GPU that couldn't even go a bit under the voltage it needed to operate. It's a complete shit show because every chip is different.

There is a reason why those GPUs ship at those voltages: plenty of chips can't handle lower ones.

Also, about undervolting being much like overclocking: some people do it, play 2-3 games for 3-5 hours and think it's stable. I can tell you that without hundreds of hours of testing in different types of games, you will never know if it's stable. This is why overclocking/undervolting is only fun if you like messing around with an unstable system, and that's why I call it a shit show. Anybody advertising it as something you can just do is basically misleading as hell.

And if you undervolt with the idea of underclocking your GPU, then you are underclocking, not undervolting.

Rusty Shackelford

Member

So what are we possibly looking at? 32-64GB of GDDR* memory on the cards? 128? A 512-768 bit memory interface?

Gaiff

SBI’s Resident Gaslighter

No it isn't and you have no idea what you're talking about.

This doesn't even make sense. Chips can all handle lower voltages; the issue is with higher voltages, which is why GPUs such as the 2080 Ti had 300A and 300 chips. The 300A chips were better overclockers because they were certified for handling higher voltages. Just don't undervolt so low that your chip cannot be fed.

You don't undervolt "with the idea of underclocking" your GPU. Undervolting your GPU to a certain degree will automatically power-starve it, resulting in lower clocks. You can otherwise underclock yourself to get lower power consumption but also lower performance.

Undervolting and power limiting a GPU is a much safer science than overclocking, which is easy to mess up. What breaks GPUs is going over what the chip is designed to handle, i.e. overclocking. The take that undervolting=overclocking is incredibly ignorant and completely false at that.

dcx4610

Member

This is why it's so hard to commit to buying a top-of-the-line video card. I would usually buy mid range, which was about $500. Now, mid range is over $1000, only for the GPU that comes out next year to blow it away, so what's the point?

Granted, if you buy a 4080 or 4090, it will be sufficient for years to come, but it still sucks to know that you could have an even more powerful card for probably around the same price if you just wait another year.

Kenpachii

Member

The problem with Nvidia is that they keep sponsoring games to run like absolute dog shit on older-gen GPUs to force people to upgrade to newer-gen GPUs. This makes future-proofing, or buying a higher-tier card to keep for a longer period of time, a waste of money. Imagine buying a 3090 and getting barely 30 fps in the Witcher 3 next-gen version, while any 4000 series card will net you 60 fps because of frame generation.

You can also blame game developers for this. Why invest in a 4090 when, in 2 years, it won't run games at top settings anymore and I'll need a 5000 card? You're better off just buying some mid-range card and calling it a day.

It's funny how you say I don't know what I'm talking about, yet your second quote is exactly why it's a shit show.

When the GPU is power-starved, it lowers clocks and framerates fluctuate; bad idea all around. This is why you don't want your GPU to starve on voltage: different types of load on the GPU require more voltage, so you will basically be underclocking or facing a shit show.

GPUs break when you heat them up too much or overvolt them, and neither can be done on modern Nvidia GPUs anymore, as the card will auto-throttle. I demonstrated that a while ago in a thread with a 3080 where I completely disabled GPU cooling.

You can read the test here: https://www.neogaf.com/threads/why-...re-is-more-than-enough.1628748/post-265472370

I have been overvolting / undervolting / overclocking / underclocking GPUs for nearly 2 decades now, so yeah, I kinda do know what I'm talking about.

Anyway, it seems like you're just throwing a tantrum at me for the sake of it. Have fun with it; I won't be reacting to it anymore.

OLED_Gamer

Member

Got my 7900xt. I have to lower the framerate cap because of my OLED versus what the card can push through on most games. I'm good.

Gaiff

SBI’s Resident Gaslighter

No, not being an idiot and putting the voltage at adequate levels doesn't make it a shitshow. Overclocking can seize up your whole system, cause your drivers to crash and, with overvolting, outright kill your components, so nah, not the same.

It's not a bad idea if you know what you're doing. It takes some serious undervolting for your frames to go haywire, and at that point your performance will be so far below the baseline that you'd be better off buying a lower-tier model.

Overclocking has been on a downward spiral since Pascal. Maxwell overclocked great. Now everyone is about undervolting/power limiting. So no, undervolting and OCing aren't the same or even close.

You've been doing this for 20 years and couldn't get it right? Up until recently, overclocking was fairly easy, and unless you wanted to eke out the last 1-2% of your card and win competitions, it was no problem getting it to remain stable.

There are dozens of videos and tutorials on 4090 undervolting/power-limiting and it's not a shitshow by any stretch of the imagination.

dcx4610

Member

The problem is the 4080 pretty much IS the mid range card now and it's $1300. They've made the mid range the equivalent of a Titan card now. It's absurd. If you have enough money to buy a 4080, you probably should just go ahead and get a 4090 because the performance jump is so significant. I don't know what Nvidia is thinking. I think the mining community had them licking their lips and they figured they could charge whatever they want and people would buy them. They lost track of their core audience and what GPUs were intended for.

GHG

Member

The xx80 cards are high end, not mid range.

The mid range tends to be the xx60 cards.

BennyBlanco

aka IMurRIVAL69

The last time I felt like I got a really good deal from Nvidia was the 970. They just get more and more out of touch with average PC gamers. That being said, I have a 4090 coming in the mail Friday.