DeepEnigma

Gold Member

It's tough to show outside of a real-world comparison -- and impossible if one does not have access to a quality plasma/CRT.

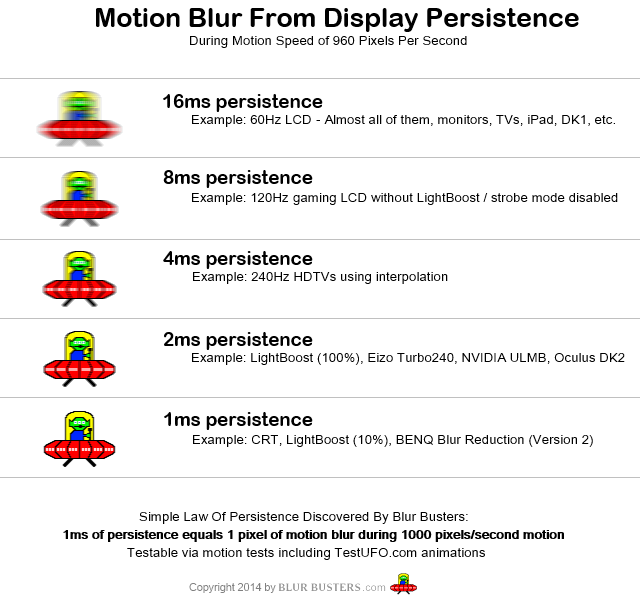

Basically, with LCD/OLED you're looking at something akin to the bottom of this picture with any excess processing (interpolation) turned off:

Forgive the CNET picture, Katz and co suck, but that's what showed up in a Google search.

Turn on the compensating "motion enhancers" and you're literally adding false frames that aren't part of the original content. This creates the dreaded "soap opera effect," where everything looks robotic or, with some manufacturers' implementations, like something straight out of Jacob's Ladder.
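To put a rough number on "false frames," here's a back-of-the-envelope sketch. It assumes an idealized interpolator that shows an original frame whenever an output refresh lines up exactly with a source frame and synthesizes everything in between; real TVs may behave differently, and `synthesized_fraction` is just a name made up for illustration.

```python
from fractions import Fraction
from math import gcd


def synthesized_fraction(source_fps: int, output_hz: int) -> Fraction:
    """Fraction of output frames that do not line up with a source frame.

    Assumption: the interpolator passes an original frame through untouched
    whenever an output refresh coincides with a source frame time.
    """
    # Output frame k is shown at time k / output_hz. It coincides with a
    # source frame only when k is a multiple of output_hz / gcd(...), so each
    # repeating cycle of that length contains exactly one original frame.
    cycle = output_hz // gcd(source_fps, output_hz)
    return Fraction(cycle - 1, cycle)


if __name__ == "__main__":
    for fps, hz in [(24, 120), (30, 60), (60, 120)]:
        print(f"{fps} fps on {hz} Hz: {synthesized_fraction(fps, hz)} of frames synthesized")
```

Under that assumption, a 24 fps film interpolated up to 120 Hz ends up with four out of every five displayed frames being ones the set invented.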

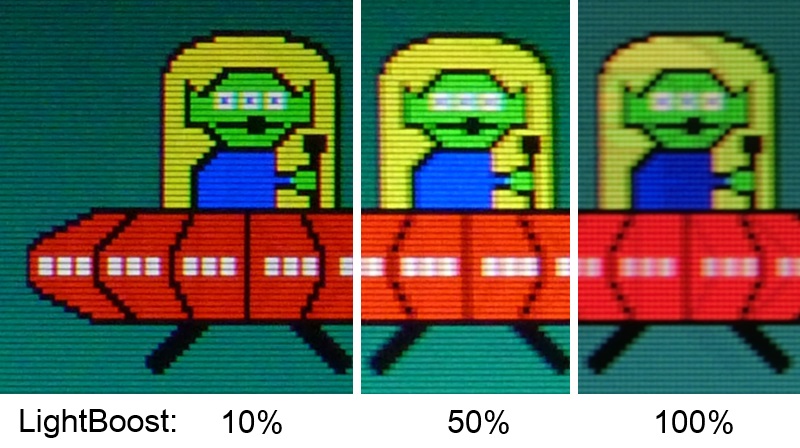

Black frame insertion is currently the best way to eliminate motion blur on sample-and-hold displays. For example, Sony's "impulse mode" is pretty good, but it reduces perceived screen brightness to levels that most find unusable. Instead of focusing on nonsensical gimmickry while ignoring the longstanding drawbacks of the current crop of televisions, I wish manufacturers would focus their efforts on improving and refining techniques like this.
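The blur-versus-brightness trade-off can be sketched with simple arithmetic. This is a rough model, not a measurement: it assumes your eye is tracking the moving object, that perceived blur is roughly tracking speed times how long each frame stays lit, and that brightness drops in proportion to the lit fraction of the frame with no backlight boost to compensate. The function names and the 960 px/s and 300 nit figures are illustrative only.

```python
def perceived_blur_px(speed_px_per_s: float, persistence_ms: float) -> float:
    """Approximate eye-tracking blur width: speed times time the frame is lit."""
    return speed_px_per_s * persistence_ms / 1000.0


def bfi_tradeoff(refresh_hz: float, duty_cycle: float,
                 speed_px_per_s: float, full_nits: float) -> tuple[float, float]:
    """Return (blur in pixels, perceived brightness in nits).

    duty_cycle is the fraction of each refresh the image is actually lit:
    1.0 means plain sample-and-hold, 0.25 means black for 75% of the frame.
    Assumption: brightness scales linearly with duty cycle (no boost).
    """
    frame_ms = 1000.0 / refresh_hz
    persistence_ms = frame_ms * duty_cycle
    blur = perceived_blur_px(speed_px_per_s, persistence_ms)
    brightness = full_nits * duty_cycle
    return blur, brightness


if __name__ == "__main__":
    # Hypothetical panel: 60 Hz, 300 nits, object moving at 960 px/s.
    for duty in (1.0, 0.5, 0.25):
        blur, nits = bfi_tradeoff(60, duty, 960, 300)
        print(f"duty {duty:.2f}: ~{blur:.1f} px blur, ~{nits:.0f} nits")
```

In this model, cutting the lit time to a quarter of the frame cuts the blur to a quarter, but it also cuts the light output to a quarter, which is why these modes look so dim unless the panel has brightness to spare.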

Oh, I see. So both OLED and LED suffer from this?

I mean, I've been gaming on LCD/LED monitors since the mid-2000s, after finally retiring my CRT monitor, so I guess I'm used to it or just don't notice it as much. (I have noticed motion blur on cheaper, lower-quality displays, but I tend to buy higher-end, higher-spec'd ones.) Or do PC monitors perform better than televisions on that front?

And would that LG G91 OLED perform better than let's say a Sony X810 or Samsung JU7500?