I'm still pretty sure it is in the register extensions they added to the CUs on the Pro, even if it turns out, when I check, that he doesn't explicitly state it. Given the experimental nature of the ML/AI field, it would be a bit of a gamble to leave out accelerated formats that are the bedrock of unquantized ML data in PC desktop upscaling inference.

Also I found this reference in an article from yesterday.

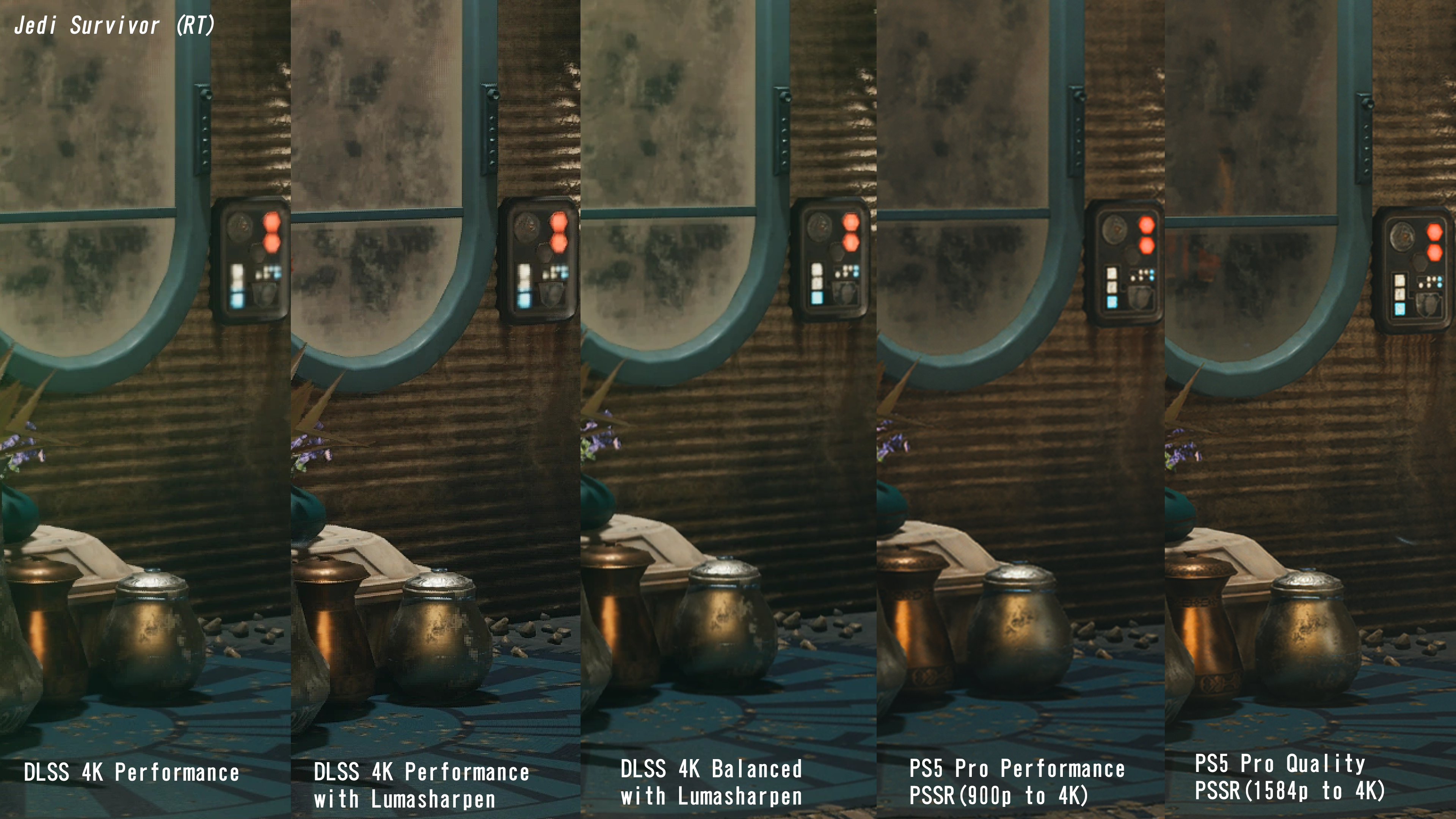

Sony has announced that the PlayStation 5 Pro will receive the full version of AMD's FidelityFX Super Resolution 4 upscaling technology in 2026. This upgrade delivers the exact same engine that PC users have accessed since its March release, replacing the current PlayStation Spectral Super...

www.techpowerup.com

That comment links to the following article:

It is speculation from the article writer:

claims a "fully custom" AI accelerator capable of "300 TOPS 8-bit" (FP8+BF8 I guess).

A later comment has a pretty good rundown:

"PS5 Pro is technically an RDNA3 design with RDNA4 RT engines, so it has matrix FP16/INT8/INT4 instructions. The shader ISA, however, remains on RDNA2 (gfx10) to prevent work doubling between PS5 and PS5 Pro. So, no dual-issue FP32 support.

- The "AI cores" in RDNA3 aren't actually cores. They're instructions that execute in the CUs (sound familiar?). 2 AI ops can be issued per CU, one per SIMD32; RDNA has 2xSIMD32s per CU. So, 60 CUs = 120 "AI cores"

Thus, to access the matrix instructions in PS5 Pro, a separate SDK is used, in this case, PSSR. Devs also have to do a little coding to optimize for the new RDNA4 RT engines in PS5 Pro as well.

So, there are a couple of ways FSR4 can be ported, and in PS5 Pro, emulating FP8 through matrix FP16 is a possibility (or using 8-bit integers for most data, then FP16). PSSR 1.0 is heavy on INT8, and that can continue in PSSR 2.0/FSR4 along with emulated FP8 for the parts of the algorithm that require it.

PS5 Pro does not support FP8 natively."
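
To make the "emulating FP8 through matrix FP16" idea in that quote a bit more concrete, here is a rough Python sketch. It assumes the FP8 in question is the E4M3 layout from the OCP 8-bit floating point spec (1 sign, 4 exponent, 3 mantissa bits, bias 7); neither the quote nor Sony says which FP8 variant is meant, and the function names are made up for illustration. It only shows that every finite E4M3 value widens to FP16 without loss, which is what would let FP16 matrix instructions stand in for native FP8. It says nothing about how PSSR or FSR4 actually do it.

```python
import struct


def fp8_e4m3_to_float(byte: int) -> float:
    """Decode one FP8 E4M3 byte (assumed OCP layout) into a Python float."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0xF
    mantissa = byte & 0x7
    if exponent == 0xF and mantissa == 0x7:
        # E4M3 has no infinities; this single bit pattern per sign is NaN.
        return float("nan")
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 2**-6.
        return sign * (mantissa / 8.0) * 2.0 ** -6
    # Normal: implicit leading 1, exponent bias of 7.
    return sign * (1.0 + mantissa / 8.0) * 2.0 ** (exponent - 7)


def roundtrips_through_fp16(value: float) -> bool:
    """True if the value survives a trip through IEEE binary16 unchanged."""
    # struct format 'e' is the IEEE 754 half-precision (FP16) type.
    return struct.unpack("<e", struct.pack("<e", value))[0] == value


if __name__ == "__main__":
    finite_codes = [b for b in range(256) if b not in (0x7F, 0xFF)]
    lossless = all(
        roundtrips_through_fp16(fp8_e4m3_to_float(b)) for b in finite_codes
    )
    print("largest E4M3 value:", fp8_e4m3_to_float(0x7E))
    print("every finite E4M3 value fits in FP16:", lossless)
```

The cost of doing it this way is that each value takes up 16 bits once it is widened, so you would keep the FP8 storage savings in memory but not the doubled math throughput that native FP8 hardware is supposed to give.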

I can't say with any certainty that FP8 is not supported, but I cannot find any source from Sony that says it is, even though they talk about INT8 and FP16.